Acknowledgment: This project was carried out as part of the "Careers with Impact" program during the 14-week mentorship phase.

Problem Context:

In recent years, artificial intelligence (AI), and particularly reinforcement learning (RL), has gained significant relevance in the field of robotics. Unlike imitation learning (IL), where robots depend on expert demonstrations to learn, RL allows robots to acquire skills through direct interaction with their environment, based on predefined rewards and punishments. Although RL may require more time and data due to the need for exploration, its main advantage is that it is not limited by the availability of an expert, but rather by the reward setup and system architecture, making it especially suitable for complex and dynamic environments.

However, despite significant advancements in the field, its large-scale implementation faces a number of major challenges, including model scalability, adaptation to real-world environments, and integration into autonomous robotics systems. While robotics has traditionally been associated with industrial applications, more and more technologies that we use in everyday life come from this field. A clear example is autonomous vehicles, which integrate key areas of robotics such as environmental sensing and motion planning to operate safely and efficiently. This is why it is crucial to pay attention to the bias issues that can arise in these systems. A recent study, Bias Behind the Wheel, reveals that pedestrian detectors, a fundamental component in autonomous vehicles, show significant biases based on age, gender, and lighting conditions, particularly affecting children and women at night. Such biases not only compromise safety but also perpetuate social inequalities, underscoring the need to design fairer and more equitable systems in AI applied to robotics.

Epoch AI and similar initiatives have compiled valuable databases of AI models. Like those efforts, this project aims to expand knowledge about emerging trends and challenges, here in the field of robotics.

These efforts are particularly relevant in a context where artificial intelligence is having an impact comparable to that of other revolutionary general-purpose technologies. However, the number of experts dedicated to preventing the potential risks of AI is small, estimated at only 400 people worldwide in 2022, which highlights the urgent need for progress in both the safety and governance of these technologies.

Governance is essential to prevent algorithms from perpetuating dangerous biases that affect vulnerable groups. Without proper regulation, AI can amplify pre-existing inequalities. The creation of laws and regulations can mitigate these threats by requiring regular algorithmic audits, promoting transparency in model development, and ensuring accountability in cases of failures or damages caused by AI systems. In terms of safety, the lack of adequate measures could lead to the malfunction of robots and autonomous systems in uncontrolled environments, posing risks to both people's physical safety and the privacy of sensitive data. Safety and governance, therefore, must work together to ensure that future developments are not only efficient but also ethical, safe, and fair, with clear standards that minimize negative impacts, especially protecting communities most affected by technological inequality.

Objective:

1. General Objective:

Identify current applications of reinforcement learning processes in the field of robotics and the challenges that have arisen during their application, in order to provide a comprehensive view of emerging trends and recognize areas of opportunity in the regulation of these technologies.

2. Specific Objectives:

- Conduct an exhaustive analysis of the scientific literature on various current applications of robotics.

- Identify the main challenges faced by the most relevant robotic applications today and the proposed solutions to overcome these challenges.

- Suggest future research directions based on the analysis of current challenges in the use of AI in robotics, with the aim of establishing a framework for future discussions on the ethical development of these technologies.

Methodology:

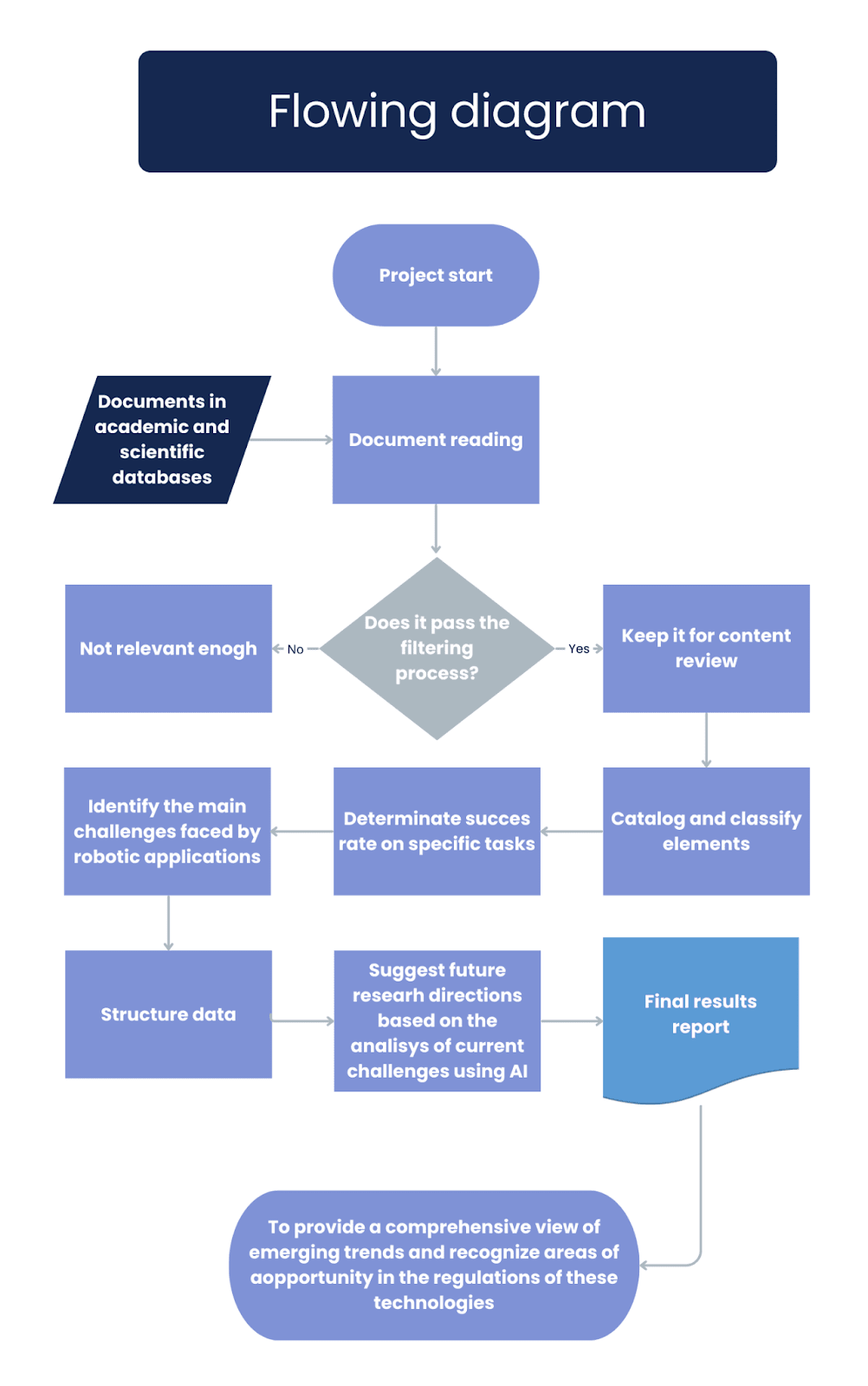

A series of structured steps were followed, ranging from the search and selection of scientific articles to the synthesis of the results obtained and the analysis of future challenges and solutions (Figure 1). Each step is described in detail below:

Figure 1. Flowchart summarizing the methodological process used during the project's development. The different stages of the process are shown, from the literature review and filtering to data analysis and the proposal of future directions within reinforcement learning in robotics.

- Literature Review:

An exhaustive search was conducted in well-known databases, including Epoch AI, IEEE Xplore, Scopus, Google Scholar, and arXiv, among others. The search used keywords related to reinforcement learning in robotics: "reinforcement learning", "robotics", "robot", "autonomous", "robotic applications", "AI", and "RL".

- Article Filtering:

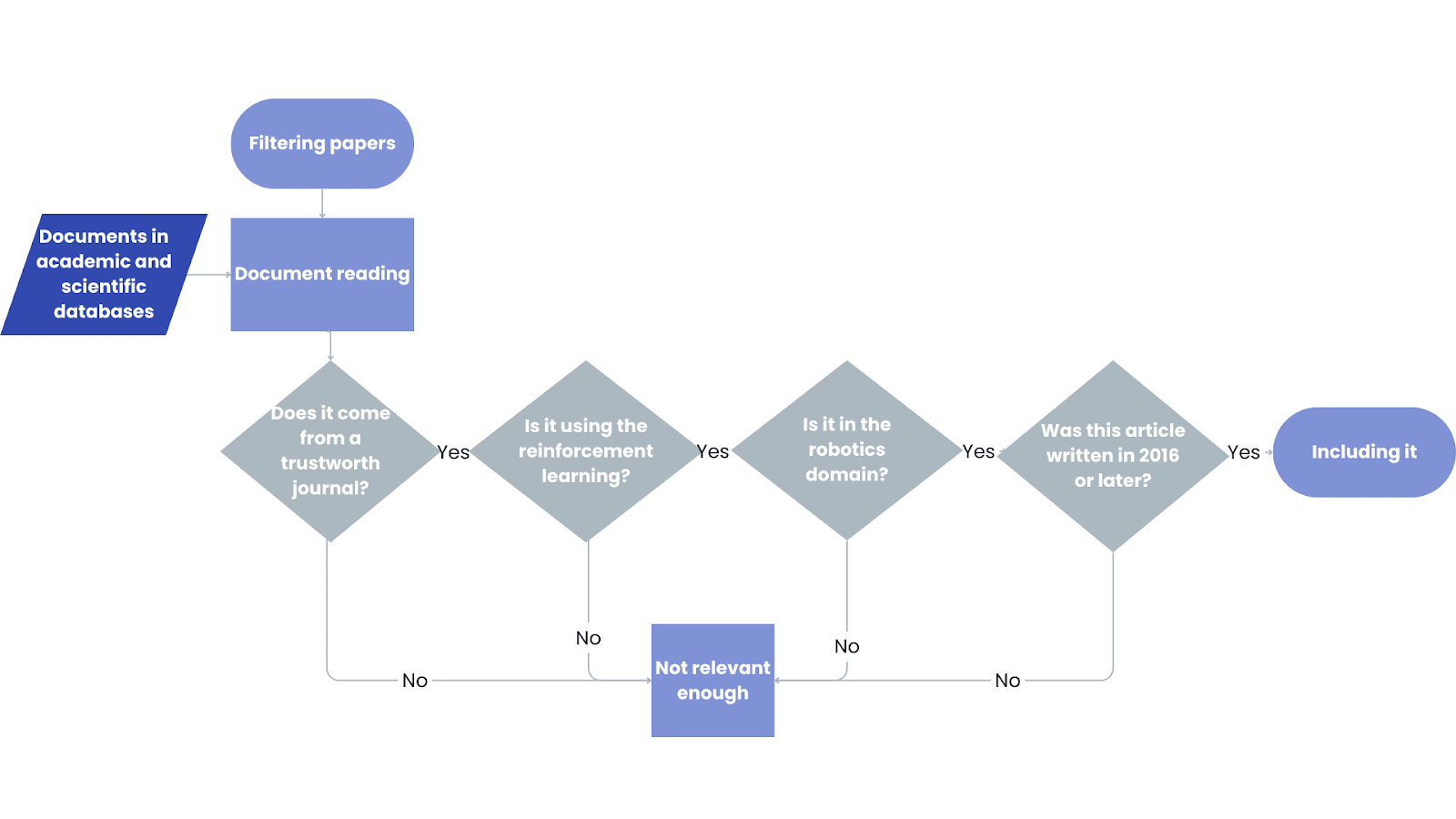

The retrieved articles were first filtered using Python to exclude publications from "predatory" journals, that is, journals that charge authors processing fees without providing genuine editorial and publishing services, which compromises the reliability of the published research because rigorous peer review is missing. This step was based on recognized lists of predatory journals and on strict quality and reputation criteria for evaluating sources. Relevant articles were then selected manually, discarding those without a clear focus on reinforcement learning, those unrelated to the field of robotics, and those published before 2016 (Figure 2).

Figure 2. Flowchart summarizing the article filtering process using four key questions to determine their relevance within the current research.
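The automatic part of this filtering step could look roughly like the sketch below. It is only an illustration under stated assumptions: the `Article` fields, the `PREDATORY_JOURNALS` set, and the example entries are hypothetical placeholders, not the lists or script used in the project. The 2016 cutoff, which the project applied during manual selection, is included here only to show how such a criterion can be encoded.

```python
from dataclasses import dataclass

# Hypothetical stand-in for a published list of predatory journals.
PREDATORY_JOURNALS = {"journal of unreviewed results"}
MIN_YEAR = 2016  # articles published before 2016 were discarded


@dataclass
class Article:
    title: str
    journal: str
    year: int


def normalize(name: str) -> str:
    """Lowercase and collapse whitespace so journal names compare reliably."""
    return " ".join(name.lower().split())


def passes_filter(article: Article) -> bool:
    """Keep articles from non-listed journals published in MIN_YEAR or later."""
    if normalize(article.journal) in PREDATORY_JOURNALS:
        return False
    return article.year >= MIN_YEAR


# Placeholder entries, invented purely for illustration.
candidates = [
    Article("RL for grasping", "Robotics Letters", 2019),
    Article("Fast RL", "Journal of  Unreviewed Results", 2020),
    Article("Early RL navigation", "Robotics Letters", 2014),
]
kept = [a for a in candidates if passes_filter(a)]
print([a.title for a in kept])
```

Normalizing journal names before the lookup avoids missing a match because of capitalization or stray spaces, which is a common problem when metadata comes from several databases.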

- Database Creation:

A thorough review of the selected articles was conducted to extract quantitative data such as the success rate in specific robotics tasks and the year of publication. This information was compiled into a publicly accessible database, categorized according to the specific area of robotics to which the studied applications belong.

- Identification of Current Challenges and Solutions:

From the collected articles, a second literature review was performed to identify the main challenges recognized in the field of robotics, as well as current proposals for overcoming these challenges.

- Analysis of Future Directions:

Based on the analysis of the collected information on development patterns and issues associated with the use of AI in robotics, future directions in the field were identified to foster discussion on its use and development.

Results:

Refining the Set of Articles for the Study

The initial analysis began with 50 relevant articles. After applying the filtering process described earlier, the number was reduced to 36 scientific articles, which formed the final database. The filtering process was carried out as follows:

- 5 articles were discarded for not being openly accessible.

- 1 article was excluded for not belonging to the field of robotics.

- 2 articles were excluded for focusing on imitation learning (IL) instead of reinforcement learning (RL).

- 4 articles did not present an associated AI model.

- 2 articles were discarded for not meeting the time period established for recent studies.

As a result, 72% of the initial articles were selected, and 28% were discarded, ensuring a specific and high-quality focus for the analysis of emerging applications and trends in reinforcement learning in robotics.
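The counts above can be checked with a few lines of arithmetic. The snippet below simply re-adds the discard categories reported in the list; the dictionary keys are shortened labels chosen for this example.

```python
initial_articles = 50

# Discard counts as reported in the filtering list above.
discarded = {
    "not openly accessible": 5,
    "outside robotics": 1,
    "not focused on RL": 2,
    "no associated AI model": 4,
    "outside the time period": 2,
}

total_discarded = sum(discarded.values())
selected = initial_articles - total_discarded
selected_pct = 100 * selected / initial_articles
discarded_pct = 100 * total_discarded / initial_articles

print(f"selected: {selected} ({selected_pct:.0f}%)")
print(f"discarded: {total_discarded} ({discarded_pct:.0f}%)")
```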

The structured database created from the selected articles included the following fields: Link, Title, Abstract, Authors, Journal, Year, Organization, Country (from Organization), Citations, Application, Specific Task to Measure, Real World Success %, and Virtual Environment Success %. This database can be publicly accessed at the following link: Article Database.
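One possible way to represent a row of this database in code is sketched below. The field names are snake_case renderings of the columns listed above, and the types and the CSV export are assumptions made for illustration; the actual database may be stored differently. The example record contains placeholder values only.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List, Optional


@dataclass
class ArticleRecord:
    link: str
    title: str
    abstract: str
    authors: str
    journal: str
    year: int
    organization: str
    country: str  # country of the organization
    citations: int
    application: str
    specific_task: str  # the task whose success rate is measured
    real_world_success_pct: Optional[float] = None  # None if not reported
    virtual_env_success_pct: Optional[float] = None  # None if not reported


def to_csv(records: List[ArticleRecord]) -> str:
    """Serialize records to CSV text; unreported values become empty cells."""
    buffer = io.StringIO()
    names = [f.name for f in fields(ArticleRecord)]
    writer = csv.DictWriter(buffer, fieldnames=names)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Placeholder record, not taken from the real database.
example = ArticleRecord(
    link="https://example.org/paper",
    title="Placeholder title",
    abstract="Placeholder abstract",
    authors="A. Author",
    journal="Placeholder Journal",
    year=2020,
    organization="Placeholder Lab",
    country="Placeholder Country",
    citations=0,
    application="Navigation",
    specific_task="Reach a target point",
)
csv_text = to_csv([example])
print(csv_text)
```

Keeping the two success-rate fields optional reflects that not every article reports both a real-world and a simulated result.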

Classification of Reinforcement Learning Applications in Robotics

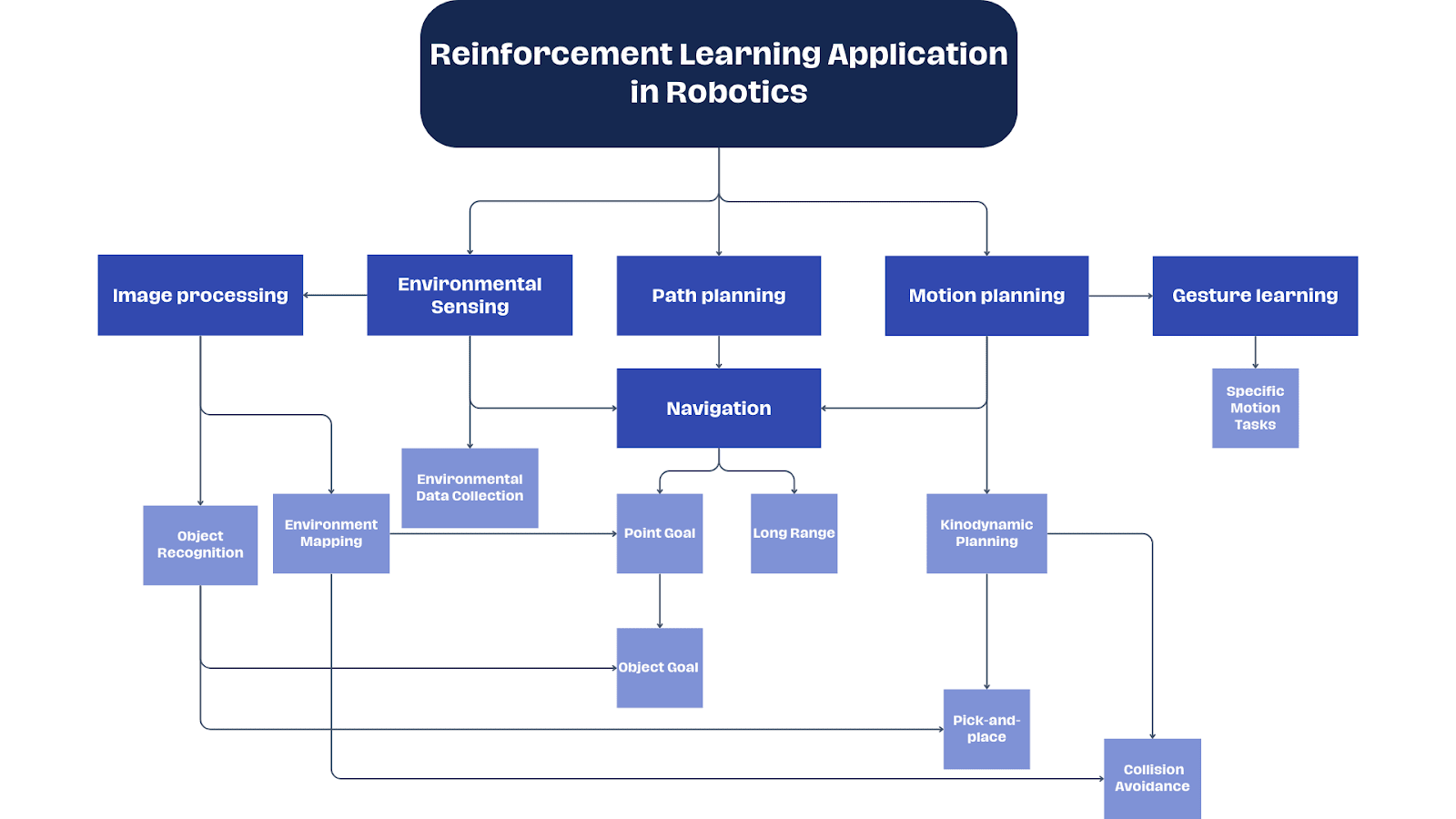

Given the wide variety of applications associated with reinforcement learning in the field of robotics, the models were classified into 17 different fields (Figure 3). While some applications share common techniques and challenges, this classification facilitates the understanding and analysis of emerging trends and the specific difficulties of each field. Of the 17 classified fields, 6 stand out as the most frequently studied, from which the other applications are derived:

- Image Processing

- Environmental Sensing

- Path Planning

- Navigation

- Motion Planning

- Gesture Learning

Image Processing is distinguished by its focus on visual perception, using deep neural networks to recognize objects and navigate unstructured environments. In contrast, Environmental Sensing focuses on the collection and analysis of sensory data to adapt the robot’s actions in real-time. Both cases involve interpreting sensory information but require different approaches due to the nature of the processed data.

Path Planning and Navigation focus on calculating efficient trajectories for mobile robots, using algorithms such as rapidly exploring random trees (RRT) and probabilistic roadmaps (PRM). Unlike the previous areas, these approaches require integrating reinforcement learning models that consider the environment's dynamics and the robot's constraints, highlighting the importance of hybrid systems that combine deep learning and sample-based planning.
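To make the sampling-based side of these hybrid systems concrete, the following is a minimal 2D RRT sketch. It is a simplified illustration, not an implementation from any of the reviewed articles: the function name `rrt`, the circular obstacle model, the square workspace, and all parameter values are assumptions. For brevity it checks only each new node for collisions, not the whole connecting segment.

```python
import math
import random


def rrt(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5,
        max_iters=5000, seed=0):
    """Grow a tree from start; return a list of points ending near goal, or None.

    obstacles: list of (x, y, radius) circles. bounds: (low, high) for both axes.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}

    def collision_free(p):
        return all(math.dist(p, (ox, oy)) > r for ox, oy, r in obstacles)

    for _ in range(max_iters):
        # Sample a random point, with a small bias toward the goal.
        if rng.random() < 0.1:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))

        # Find the tree node nearest to the sample.
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue

        # Steer at most one step from the nearest node toward the sample.
        t = min(step, d) / d
        new = (near[0] + t * (sample[0] - near[0]),
               near[1] + t * (sample[1] - near[1]))
        if not collision_free(new):
            continue

        nodes.append(new)
        parent[len(nodes) - 1] = i_near

        if math.dist(new, goal) <= goal_tol:
            # Walk back through the parents to recover the path.
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


path = rrt(start=(1.0, 1.0), goal=(9.0, 9.0),
           obstacles=[(5.0, 5.0, 1.5)], bounds=(0.0, 10.0))
print(len(path) if path else "no path found")
```

In a hybrid system of the kind described above, the straight-line steering step is the natural place to substitute a learned policy that accounts for the robot's dynamics.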

Gesture Learning and Motion Planning focus on human-robot interaction, where robots must learn complex movement patterns and adapt to collaborative environments. This involves a different level of complexity, as the challenge is not only to execute an action but to learn how to do it in specific contexts with minimal human intervention.

From these areas, or a combination of several of them, the other fields of robotics are derived, allowing the grouping of robotic applications into six fundamental domains (Figure 3), each with specific difficulties that require unique solutions and different technical approaches.

It is important to note the limited number of articles identified that specifically address Object Goal Navigation, suggesting a significant opportunity for improvement and development in future research in this area. Unlike Point Goal Navigation, this task presents a higher degree of difficulty, which could also justify the scarcity of available studies. Due to its complexity, Object Goal Navigation requires a more advanced approach and represents a significant challenge in the field of robotics.

Figure 3. Hierarchical classification diagram of various reinforcement learning (RL) applications in robotics, illustrating how they relate to one another. The six key areas are highlighted in dark blue, with specific subfields detailed in light blue.

Technical Challenges and Future Solutions in RL Applications in Robotics

Based on the content analysis of the collected articles, the main challenges and current trends were identified across the 17 key areas of robotics previously outlined (Table 1). Each field presents a distinct set of technical challenges, as well as emerging trends aimed at providing solutions to these challenges.

Table 1. Challenges and trends in RL for robotics. The table lists the different areas of robotics alongside the challenges and trends observed in each.

Field | Challenges | Trend |

Pick-and-Place | Robots require feedback on whether a piece has been correctly grasped. Additionally, it is crucial for them to understand the orientation and shape of the object. This becomes particularly challenging with deformable objects (such as a can), as applying more force alters their shape | Cameras or tactile sensors are being implemented to provide robots with feedback on the grip, shape, and orientation of the object. |

Collision Avoidance | The primary challenges involve moving objects, as the robot must quickly calculate the direction and speed of the obstacle and adjust its trajectory to avoid it. Another significant challenge is multi-robot navigation; when multiple robots are involved, the complexity increases as they must also avoid each other. Implementing a centralized algorithm to control them becomes increasingly difficult to scale as the number of robots grows | Decentralized robots are emerging as a trend, capable of calculating their own individual routes and delineating a safe zone in which they can move based on both moving and stationary obstacles. |

Path Planning | Autonomous navigation is crucial for mobile robotics, requiring the ability to traverse collision-free paths toward a target destination. Classical approaches typically involve various hand-engineered modules, each solving specific sub-tasks such as mapping, localization, and planning. However, these systems are often heavily parameter-dependent, particularly when deployed in unfamiliar environments or across different robotic platforms. | RRT (Rapidly-exploring Random Trees) is a popular path planning algorithm that efficiently explores the search space by incrementally building a tree. It is particularly useful for solving high-dimensional planning problems and can quickly find feasible paths in complex environments. PRM (Probabilistic Roadmaps) is another widely used algorithm that constructs a roadmap by randomly sampling the configuration space and connecting these samples to form a network of possible paths. This approach is effective for solving path planning problems in static environments and can handle high-dimensional spaces. |

Long-Range Navigation | Long-range navigation tasks require robots to traverse substantial distances while adhering to task constraints and avoiding obstacles. Challenges include managing noisy sensor data, handling changes in the environment, compensating for measurement errors, and ensuring that the robot's control system can maintain task-specific constraints. Additionally, long-range navigation often involves sparse rewards and complex maps, making it difficult to train reinforcement learning (RL) agents effectively, as they may become trapped in local minima or fail to generalize across different environments. | PRM-RL (Probabilistic Roadmaps with Reinforcement Learning) combines the efficiency of PRMs for long-range path planning with the robustness of RL agents for local control. This hybrid approach creates roadmaps that not only avoid collisions but also respect the robot's dynamics and task constraints, offering resilience against environmental changes and mitigating the challenges of local minima. |

Point-Goal Navigation | Challenges include the separation of SLAM, localization, path planning, and control processes, which complicates the integration and efficiency of autonomous navigation systems; the need for human intervention, particularly in verifying map accuracy; the limited adaptability of current reinforcement learning (RL) methods, which struggle to retain solutions to previous problems while adapting to new ones; and the interrelated issues of "robot freezing" and "navigation lost" in dynamic environments, where the robot may either stop due to complex surroundings or fail to accurately localize itself, leading to navigation failures. | Autonomous self-verification mechanisms are being explored to minimize human intervention. Reinforcement learning techniques are being enhanced for better adaptability, allowing robots to retain previous solutions while adapting to new tasks. Hybrid navigation modes are being developed to handle dynamic environments by switching between normal and recovery strategies, tackling issues like "robot freezing" and "navigation lost". |

Kinodynamic Planning | Ensuring that the planned trajectory is both feasible and efficient. Feasibility means that the path must avoid collisions and respect kinodynamic constraints, such as velocity and acceleration limits, even in the presence of sensor noise. Efficiency requires the path to be near-optimal in terms of objectives like time to reach the goal. Current methods struggle with steering functions and distance functions like Time to Reach (TTR), which are expensive to compute and not sensor-informed, potentially leading to errors in planning, especially in environments with complex obstacles. | Emerging trends to address these challenges include the use of deep learning algorithms, particularly Reinforcement Learning (RL), which can learn policies that integrate obstacle avoidance directly from sensor observations, thereby enhancing local planning capabilities. Combinations of RL with sampling-based methods, such as RL-RRT, are being developed, using RL policies for local planning and obstacle-aware reachability estimators to improve the accuracy and efficiency of planning. |

Object-Goal Navigation | One of the primary challenges in OBJECTNAV is the tendency of end-to-end learning models to overfit and exhibit sample inefficiency due to the complexity of the environment representations required. Additionally, the task is complicated by the need for efficient exploration, as the agent must search for objects that can be located anywhere within the environment, without the aid of compact representations like those in POINTNAV. Another significant challenge is the misalignment of exploration rewards with the OBJECTNAV goal, where agents may continue to explore even after locating the target object. Moreover, the chaotic dynamics introduced in RNNs by complex visual inputs can further degrade the agent's performance, highlighting the need for more robust learning strategies. | Current trends in OBJECTNAV focus on improving agent efficiency and success through the integration of auxiliary learning tasks and exploration rewards. The development of "tethered-policy" multitask learning techniques has shown promise in aligning exploration efforts with the OBJECTNAV goals, reducing the reliance on explicit spatial maps. Additionally, there is an increasing use of advanced semantic segmentation techniques and features like "Semantic Goal Exists," which enhance the agent's ability to recognize when it is close to the target object and adjust its navigation strategy accordingly. |

Environmental Sensing | Perceiving trails autonomously presents significant challenges due to their highly variable appearance and lack of well-defined boundaries, which make them harder to detect than paved roads. The frequent changes in trail surface appearance further complicate the task. Additionally, training deep neural networks for such perception tasks demands extensive labeled data, which is often impractical to gather manually. In model-based reinforcement learning, accurately developing models from raw sensory inputs for complex environments remains a challenge, and ensuring that these models perform well in real-world scenarios, given discrepancies between simulation and reality, adds another layer of difficulty. | Recent advancements in machine learning have driven the adoption of deep neural networks for trail perception, capitalizing on their ability to learn features from data without manual design. Reinforcement learning is increasingly used for navigation, allowing robots to learn behaviors through environmental interactions. Additionally, the integration of differentiable physics-based simulation and rendering techniques is emerging, enhancing model accuracy and efficiency. The use of variable size point clouds as observations in reinforcement learning-based local planning is also gaining traction, providing more robust and adaptable representations. |

Image Processing | Image processing for robotics faces significant challenges, including the high computational cost of training models in real-world environments and the difficulty of collecting large-scale visual data. Deep reinforcement learning (DRL) approaches require extensive training for each new objective, which is inefficient in terms of time and resources. Generalizing these models to new environments is another major hurdle, particularly when using visual inputs like monocular images to avoid collisions in unstructured environments. The variability in image quality, lighting conditions, and differences between simulated and real environments further complicates the task. | Trends in this field point to the use of high-quality simulations to train DRL models that can be effectively transferred to the real world. Models are being developed that jointly integrate the current state and the task objective to improve adaptability and reduce the need for retraining. Additionally, combining simulation training with collective learning in robot fleets and incorporating data from computer vision systems is being explored to enhance the generalization and efficiency of models in complex tasks such as navigation and manipulation in real-world environments. |

Object Recognition | Recognizing unseen objects requires vast amounts of labeled data, which is time-consuming and expensive to collect. Models often struggle to generalize to new objects not present in the training data, leading to reduced accuracy in unfamiliar scenarios. Additionally, real-world conditions like lighting, occlusion, and object orientation introduce variability that can drastically affect the model's performance, making consistent recognition difficult. | There is a growing focus on self-supervised and unsupervised learning to reduce dependency on labeled data, along with the use of synthetic data and simulation to augment real-world datasets. Advances in robustness against variability through data augmentation and multi-modal inputs are being explored, alongside the use of attention mechanisms and transformer models to enhance accuracy. Finally, integrating vision with other modalities is becoming increasingly important to improve object recognition in complex environments |

Environment Mapping | Environment mapping involves dealing with the complexity and dynamic nature of real-world environments. Robots must accurately and efficiently map environments that may contain unknown obstacles, dynamic changes, and varying levels of sensor noise, all while ensuring real-time processing. Additionally, maintaining map accuracy over long-term operations, handling large-scale environments, and integrating data from multiple sensors are significant hurdles. The need for robust algorithms that can adapt to unforeseen environmental changes and the computational load required for high-resolution mapping also present challenges. | Trends in Environment Mapping focus on the integration of advanced machine learning techniques, particularly deep learning and reinforcement learning, to enhance map accuracy and adaptability. There is a growing emphasis on creating real-time, scalable mapping solutions that can operate efficiently in large, complex environments. The use of multi-sensor fusion to improve the reliability and detail of the maps, as well as the development of methods that allow for continuous learning and updating of maps during robot operation, are also prominent. Additionally, efforts are being made to reduce the computational demands through more efficient algorithms and to improve the robustness of mapping systems against sensor noise and environmental changes. |

Navigation | Robotic navigation faces several challenges, including limitations in sensors and actuators, which impact navigation performance, especially in noisy environments. The gap between simulation and reality complicates the transfer of navigation policies to real-world applications. Integrating various navigation sub-tasks requires significant manual tuning for each new environment, while ensuring robustness to dynamic changes is difficult. Computational complexity, particularly in sampling-based motion planning, poses efficiency challenges, and designing effective reward functions in reinforcement learning is critical yet challenging. | Emerging trends in robotic navigation include the adoption of end-to-end learning systems using deep reinforcement learning to map sensors directly to actions, and efforts to improve the transferability of policies from simulation to reality. Probabilistic motion planning algorithms are being refined for better efficiency, while adaptive navigation systems are being developed to handle real-time environmental changes. There is also a growing focus on multi-objective optimization, balancing factors like energy efficiency and safety, and the use of hyperparameter optimization in reinforcement learning to enhance policy performance. |

Environmental Data Collection | The main challenges in environmental sensing for robotics include accurately perceiving complex, unstructured environments such as forests or outdoor terrains. Variability in visual appearance and lighting conditions, together with the lack of clear boundaries, makes the task difficult for traditional methods based on low-level features such as image saliency or contrast. Furthermore, the shift from structured, paved roads to unpaved, dynamic trails poses even greater challenges due to the constant changes in the environment and the difficulty in generalizing models from virtual to real-world scenarios. Moreover, robots must navigate efficiently without relying on detailed obstacle maps or dense sensors, requiring systems that can adapt quickly to unseen conditions. | Recent trends focus on leveraging deep learning and reinforcement learning techniques to improve environmental sensing. Approaches using Deep Neural Networks (DNNs) for visual trail perception and end-to-end mapless motion planning are becoming more prominent. These techniques bypass traditional hand-engineered feature extraction and instead use large real-world datasets to train systems for navigating unstructured environments. Furthermore, asynchronous deep reinforcement learning methods have enabled the training of motion planners without prior obstacle maps, improving the adaptability of robots in unseen environments. This trend emphasizes the shift towards lightweight sensing solutions and the generalization of models from virtual to real-world applications, bridging the gap between simulated environments and complex outdoor settings. |

Motion Planning | Motion planning faces significant challenges, especially in high-dimensional systems and complex environments. Traditional sampling-based methods struggle with computational costs as dimensionality increases, making it difficult to handle dynamic constraints and high-dimensional spaces, such as those involving humanoid robots or visual inputs. Furthermore, these methods rely on uniform sampling, which may inefficiently explore the space, leading to slow convergence, particularly in complex or dynamic environments. Managing real-time decision-making, like navigating among dynamic obstacles or humans, also remains a critical challenge due to the unpredictability of external agents and the limitations of current collision avoidance strategies. | Recent trends focus on leveraging learning-based techniques to enhance motion planning by embedding low-dimensional representations of state spaces, often using autoencoders and neural networks. These approaches aim to accelerate sampling-based planning by learning latent spaces where collision checking and dynamic feasibility can be efficiently evaluated. Reinforcement learning (RL) and machine learning methods are increasingly applied to improve decision-making, especially in unpredictable or dynamic environments. Combining traditional methods like SBMP with learning-based systems, such as Latent-SBMP and networks for real-time collision avoidance, is emerging as a promising direction to handle complex planning tasks in dynamic and high-dimensional environments. |

Gesture Learning | Pretrained models in robotics face significant challenges, including the difficulty of generalizing to new, unseen environments, which limits their adaptability. Task representation is complex, especially when trying to capture relationships beyond simple goal-reaching. High-dimensional environments, like those involving image data, make defining goals and designing effective reward functions difficult. Additionally, the high computational cost of training each skill individually becomes impractical for wide-ranging applications, especially in real-world settings where data collection is expensive. | The key trends involve using pretrained models that condition on goal distributions rather than specific states, enabling more flexible task representations. Fine-tuning pretrained models with small task-specific datasets significantly reduces the time and cost of learning new tasks. End-to-end reinforcement learning approaches are gaining traction, particularly those that leverage offline multi-task pre-training for faster adaptation. Distribution-conditioned RL, such as DisCo RL, is also emerging as a promising approach to improve generalization across diverse task sets. |

Specific Motion Tasks | Specific motion tasks like parkour for robots present multiple challenges, including the development of diverse, agile skills such as running, climbing, and leaping over obstacles. Traditional reinforcement learning approaches require complex reward functions and extensive tuning, which limits scalability for a wide range of agile movements. Additionally, low-cost hardware can struggle with these dynamic tasks, especially when real-time visual perception is delayed during high-speed movements. Maintaining motor efficiency while avoiding damage and managing perceptual lag during high-speed tasks also remain significant barriers. | Recent trends focus on learning-based methods for agile, dynamic tasks in robotics, aiming to replicate biological movements like parkour while minimizing hardware costs. These approaches use reinforcement learning (RL) pre-training and fine-tuning stages to optimize motion in both simulations and real-world applications. There is an increasing focus on integrating simple reward functions and combining imitation of animal behaviors with onboard visual and computational capabilities. The development of open-source platforms for motion learning and the use of vision-based policies for complex obstacle traversal are key directions, pushing the boundaries of affordable, agile robotic systems. |
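The PRM-RL trend listed in Table 1 under Long-Range Navigation pairs a roadmap for long-range planning with a learned agent for local control. The sketch below illustrates one way such a pairing can be structured: roadmap edges are added only when a local controller reports that it can traverse between two samples. Everything here is an assumption for illustration. In particular, `straight_line_clear` is a hand-written stand-in for what would be a trained RL policy evaluated through rollouts, and the names, obstacle, and parameters are hypothetical.

```python
import heapq
import math
import random


def straight_line_clear(a, b, obstacle=(5.0, 5.0, 1.5), checks=10):
    """Stand-in for a learned local policy: True if the segment a-b avoids
    a single circular obstacle, tested at evenly spaced points."""
    ox, oy, r = obstacle
    for k in range(checks + 1):
        t = k / checks
        p = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        if math.dist(p, (ox, oy)) <= r:
            return False
    return True


def build_roadmap(samples, can_traverse, radius):
    """Connect sample pairs within `radius` when the local controller succeeds."""
    edges = {i: [] for i in range(len(samples))}
    for i, a in enumerate(samples):
        for j in range(i + 1, len(samples)):
            d = math.dist(a, samples[j])
            if d <= radius and can_traverse(a, samples[j]):
                edges[i].append((j, d))
                edges[j].append((i, d))
    return edges


def shortest_path(edges, src, dst):
    """Dijkstra search over the roadmap; returns node indices or None."""
    best = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            path = [u]
            while u in prev:
                u = prev[u]
                path.append(u)
            return path[::-1]
        if d > best.get(u, math.inf):
            continue  # stale heap entry
        for v, w in edges[u]:
            nd = d + w
            if nd < best.get(v, math.inf):
                best[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    return None


rng = random.Random(0)
# Index 0 is the start and index 1 is the goal; the rest are random samples.
samples = [(1.0, 1.0), (9.0, 9.0)] + [
    (rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(150)
]
roadmap = build_roadmap(samples, straight_line_clear, radius=2.0)
route = shortest_path(roadmap, 0, 1)
print(route)
```

Because the traversal check is passed in as a function, the geometric test can be swapped for a policy-based check without changing the roadmap construction or the search.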

Progress Achieved and Areas for Improvement in Reinforcement Learning for Robotics

At a global level, research compiled from various databases shows that, although there has been an increase in studies on reinforcement learning (RL), its effective application in robotic environments continues to face significant challenges. This reflects the need to develop more robust and flexible models that do not solely rely on simulated environments but are also capable of effectively adapting to real-world applications, where conditions are less predictable and more complex.

The lack of standardization in robotic datasets and the difficulties in creating simulations that faithfully replicate real-world challenges limit the development of scalable solutions. Additionally, data fragmentation and platform diversity slow progress toward creating universal models that can be applied to a wide variety of tasks.

There are specific applications within robotics where recent advances have been so significant that, in some cases, they can be considered solved. A clear example is Point Goal Navigation, where robots can efficiently reach a predetermined point in a previously known environment or under controlled conditions. Moreover, multi-robot collision-free navigation systems, in situations without additional obstacles, have reached an advanced level of development, allowing robots to work cooperatively without interfering with each other.

Despite these achievements, numerous areas still require attention as their development has not reached the same level of maturity. Improving robotic capabilities in more complex environments, with moving obstacles or dynamic changes, remains a challenge. Similarly, the use of richer and more diverse data is essential to optimize real-time decision-making and improve the robustness of current systems.

Progress in tasks such as navigation in unpredictable environments, advanced object manipulation, and object recognition with varied characteristics continues to face considerable barriers. More research is needed, along with the collection of large volumes of higher-quality data, to train models that are more adaptable and effective in these scenarios.

Limitations

In the initial phase of the project, the goal was to evaluate the scalability of reinforcement learning models using quantitative metrics, such as training compute, measured in total floating-point operations (FLOPs), and the number of data points. However, during the literature review, it became clear that many articles do not report these training details in their methodology.

Unfortunately, most of the analyzed studies focus on describing the model architecture and reward mechanisms used in reinforcement learning, omitting crucial data such as training times and resources, which are necessary to adequately evaluate model scalability. This lack of specific training data led to a reformulation of the project’s approach, prioritizing a comprehensive qualitative analysis of emerging trends and challenges in AI applications for robotics.
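To illustrate why these omissions matter, a common back-of-the-envelope estimate of training compute multiplies the number of accelerators, their peak throughput, an assumed utilization rate, and the training time. If a paper reports none of these, the estimate cannot be made. The sketch below is illustrative only: the function name `estimate_training_flop` and all example values are hypothetical and are not taken from any of the reviewed papers.

```python
def estimate_training_flop(num_accelerators, peak_flop_per_second,
                           utilization, training_hours):
    """Estimate total training compute (FLOP) from hardware and wall-clock time.

    All four inputs must be reported (or assumed) for the estimate to exist.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    training_seconds = training_hours * 3600
    return num_accelerators * peak_flop_per_second * utilization * training_seconds


# Hypothetical example values, chosen only to show the arithmetic.
example_flop = estimate_training_flop(
    num_accelerators=8,         # assumed number of GPUs
    peak_flop_per_second=1e14,  # assumed peak throughput per GPU
    utilization=0.3,            # assumed fraction of peak actually achieved
    training_hours=24,          # assumed wall-clock training time
)
print(f"Estimated training compute: {example_flop:.2e} FLOP")
```

The utilization rate is the least certain input, so an estimate of this kind is best read as an order of magnitude.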

Tackling the project from a perspective as broad as the entire field of robotics posed a further obstacle, making it difficult to draw precise conclusions. This motivated the decision to subdivide the analysis into specific applications, allowing for greater depth and relevance in exploring each area.

Based on the above, researchers interested in AI applied to robotics who wish to explore similar topics are advised to focus their studies on specific applications within the field rather than adopting a generalist approach. This strategy will not only reduce the dispersion of research efforts but also facilitate obtaining detailed results that provide a deeper understanding of particular areas of interest within reinforcement learning applied to robotics.

Future Directions:

The field of robotics is advancing rapidly, moving toward greater integration and specialization of existing tools and technologies. It is expected that various emerging trends will play a crucial role in its evolution, offering an excellent opportunity to assess its development and generate solutions to potential risks. Some future perspectives worth highlighting include:

- Integration of robotic databases: The merging of multiple robotic databases is expected to significantly expand the datasets available for AI model training. Centralizing and exchanging information between different platforms will provide more diverse and comprehensive data, strengthening robots’ ability to operate effectively in a wide variety of environments and scenarios.

- Improvement of simulations in realistic environments: Simulations are being refined to accurately replicate robot behavior in real-world conditions. This advancement will not only reduce the gap between simulated and physical environments but will also allow for the effective development and testing of algorithms before practical implementation. Significant progress is expected in simulators capable of more reliably reproducing the physical, visual, and dynamic conditions of the real world.

- Proliferation of pre-trained models and fine-tuning: The use of pre-trained models will solidify as a key tool for tackling tasks in the robotic field. These models, previously trained with large volumes of general data, can be refined through fine-tuning techniques to adapt to specific tasks such as motion planning. This trend will allow developers to reuse efficient models and enhance their performance in specific applications, optimizing resources and accelerating development processes.

References:

Epoch AI. (2023, April 11). Machine Learning Trends. Epoch AI. https://epochai.org/trends

Gao, F., Jia, X., Zhao, Z., Chen, C., Xu, F., Geng, Z., & Song, X. (2019). Bibliometric analysis on tendency and topics of artificial intelligence over last decade. Microsystem Technologies, 27(4), 1545-1557. https://doi.org/10.1007/s00542-019-04426-y

Hilton, B. (2024, August 23). Preventing an AI-related catastrophe. 80,000 Hours. https://80000hours.org/problem-profiles/artificial-intelligence/#top

Li, X., Chen, Z., Zhang, J. M., Sarro, F., Zhang, Y., & Liu, X. (2023, August 5). Bias Behind the Wheel: Fairness Analysis of Autonomous Driving Systems. arXiv.org. https://arxiv.org/abs/2308.02935

Niu, Y., Jin, X., Li, J., Ji, G., & Hu, K. (2021). The Development Tendency of Artificial Intelligence in Command and Control: A Brief Survey. Journal of Physics: Conference Series, 1883(1), 012152. https://doi.org/10.1088/1742-6596/1883/1/012152

Preview photo:

Fousert, M. (2021, December 9). A group of people sitting in front of laptop computers. Unsplash. https://unsplash.com/es/fotos/un-grupo-de-personas-sentadas-frente-a-computadoras-portatiles-RvmTvJ6tSwA

