YouGov America released a survey of 20,810 American adults. Highlights below. Note that I didn't run any statistical tests, so any claims of group differences are just "eyeballed."

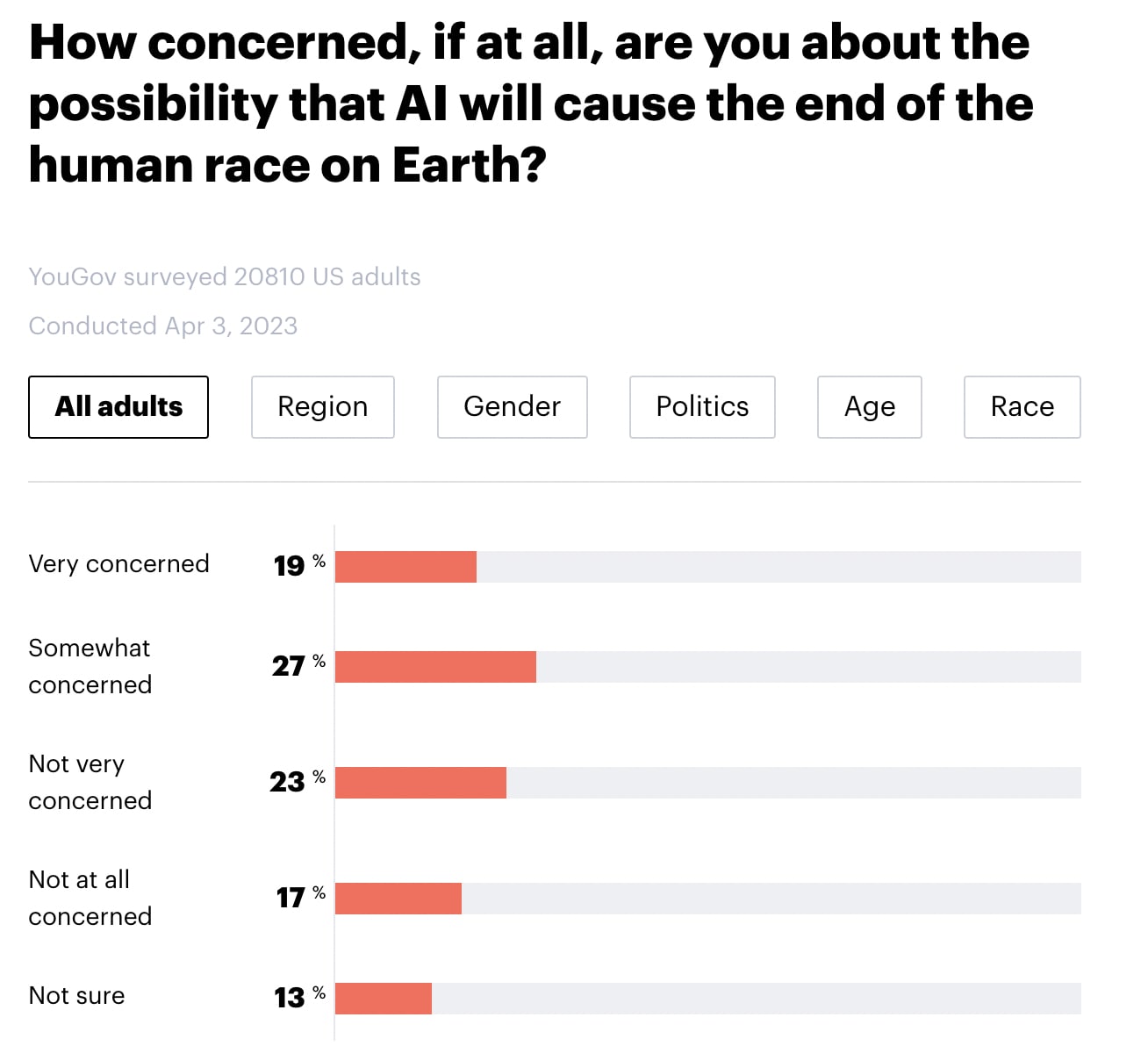

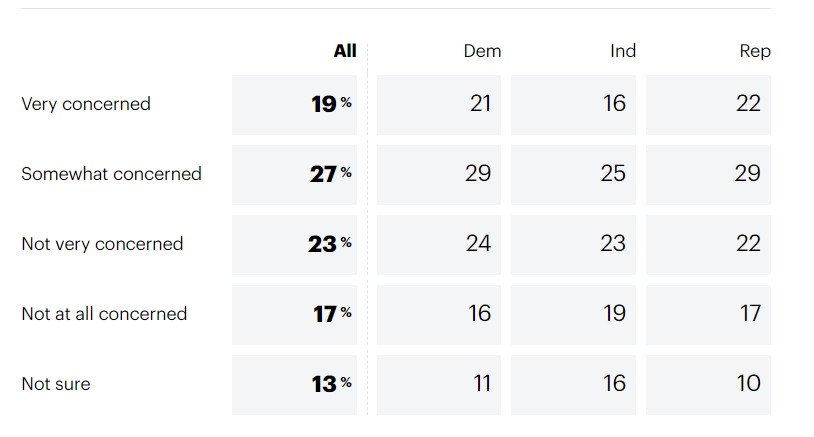

- 46% say that they are "very concerned" or "somewhat concerned" about the possibility that AI will cause the end of the human race on Earth (with 23% "not very concerned," 17% "not concerned at all," and 13% "not sure").

- There do not seem to be meaningful differences by region, gender, or political party.

- Younger people seem more concerned than older people.

- Black individuals appear to be somewhat more concerned than people who identified as White, Hispanic, or Other.

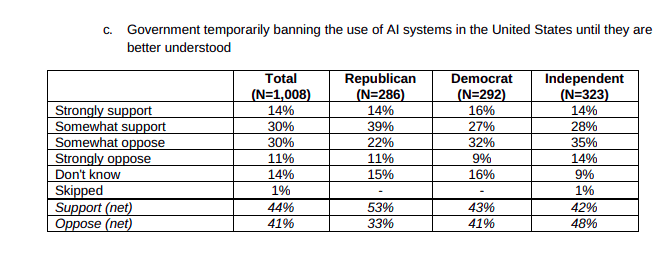

Furthermore, 69% of Americans appear to support a six-month pause in "some kinds of AI development." There doesn't seem to be a clear effect of age or race on this question, particularly if you lump "strongly support" and "somewhat support" into the same bucket. Note that the question mentions that 1,000 tech leaders signed an open letter calling for a pause and cites their concern over "profound risks to society and humanity," which may have influenced participants' responses.

In my quick skim, I haven't been able to find details about the survey's methodology (see here for info about YouGov's general methodology) or the credibility of YouGov (EDIT: Several people I trust have told me that YouGov is credible, well-respected, and widely quoted for US polls).


This survey item may represent a circumstance under which YouGov estimates would be biased upwards. My understanding is that YouGov uses quota samples of respondents who have opted in to panel membership through non-random means, such as responses to advertisements and referrals. They cannot reach people without internet access, and people who have access but are not internet-savvy are also less likely to opt in. If internet savviness is correlated with item response, then we should expect a bias in the point estimate. I would speculate that internet savviness is positively correlated with worrying about AI risk, because internet-savvy people understand the issue better (though I could imagine arguments in the opposite direction, e.g., people who are afraid of computers don't use them).

To give a concrete example, Sturgis and Kuha (2022) report that YouGov's estimate of problem gambling in the U.K. was far higher than estimates from firms that used probability sampling, which can reach people who don't use the internet; the gap was largest relative to surveys that conducted interviews in person. The presumed reasons are that online gambling is more addictive and that people at higher risk of problem gambling prefer online gambling to in-person gambling.
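The selection mechanism described above can be illustrated with a small simulation. This is a minimal sketch under entirely hypothetical assumptions: half the population is "internet-savvy," savvy people are concerned about AI risk with probability 0.55 versus 0.35 for everyone else, and savvy people opt in to the panel at a 10% rate versus 2% for everyone else. None of these numbers come from YouGov or the survey, and the function name `simulate_opt_in_bias` is illustrative. The sketch also ignores quota targets and weighting, which would reduce the bias only to the extent that the adjustment variables capture internet savviness.

```python
import random


def simulate_opt_in_bias(n_population=200_000, seed=0):
    """Compare the true share of 'concerned' people with the share among opt-in panelists.

    All rates below are hypothetical, chosen only to illustrate how selection
    on a trait that also predicts the answer biases the estimate.
    """
    rng = random.Random(seed)
    concerned_total = 0
    panel_size = 0
    concerned_in_panel = 0
    for _ in range(n_population):
        # Hypothetical: half the population is internet-savvy.
        savvy = rng.random() < 0.5
        # Hypothetical outcome model: savvy people are more likely to be concerned.
        concerned = rng.random() < (0.55 if savvy else 0.35)
        # Hypothetical selection model: savvy people are far more likely to opt in.
        opts_in = rng.random() < (0.10 if savvy else 0.02)
        concerned_total += concerned
        if opts_in:
            panel_size += 1
            concerned_in_panel += concerned
    population_share = concerned_total / n_population
    panel_share = concerned_in_panel / panel_size
    return population_share, panel_share


if __name__ == "__main__":
    pop, panel = simulate_opt_in_bias()
    print(f"true share concerned: {pop:.3f}")
    print(f"opt-in panel estimate: {panel:.3f}")
```

With these assumed rates, the true population share is 0.5 × 0.55 + 0.5 × 0.35 = 0.45, while the opt-in panel share converges to (0.05 × 0.55 + 0.01 × 0.35) / 0.06 ≈ 0.52. The panel therefore overstates concern by about 7 points, and a larger panel does not help: more respondents shrink the sampling error but leave the selection bias untouched.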