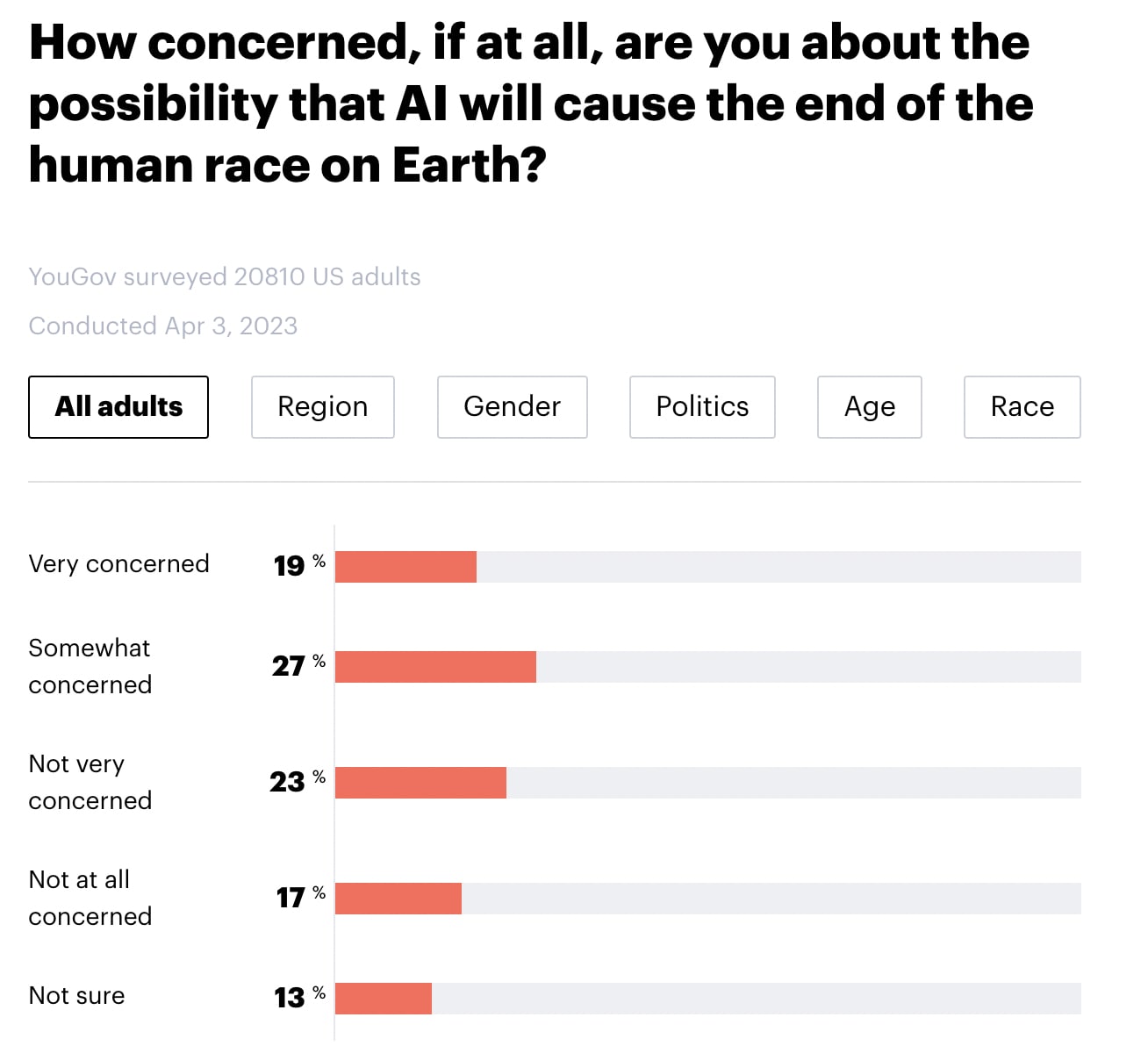

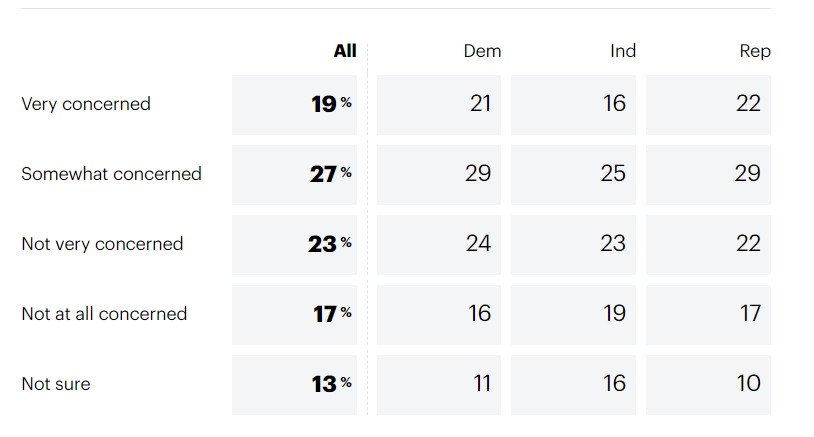

YouGov America released a survey of 20,810 American adults. Highlights below. Note that I didn't run any statistical tests, so any claims of group differences are just "eyeballed."

- 46% say that they are "very concerned" or "somewhat concerned" about the possibility that AI will cause the end of the human race on Earth (with 23% "not very concerned," 17% "not concerned at all," and 13% "not sure").

- There do not seem to be meaningful differences by region, gender, or political party.

- Younger people seem more concerned than older people.

- Black individuals appear to be somewhat more concerned than people who identified as White, Hispanic, or Other.

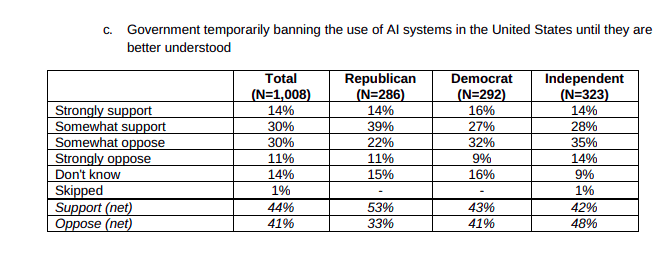

Furthermore, 69% of Americans appear to support a six-month pause in "some kinds of AI development". There doesn't seem to be a clear effect of age or race for this question, particularly if you lump "strongly support" and "somewhat support" into the same bucket. Note also that the question mentions that 1,000 tech leaders signed an open letter calling for a pause and cites their concern over "profound risks to society and humanity", which may have influenced participants' responses.

In my quick skim, I haven't been able to find details about the survey's methodology (see here for info about YouGov's general methodology) or the credibility of YouGov (EDIT: Several people I trust have told me that YouGov is credible, well-respected, and widely quoted for US polls).

See also:

I think it's plausible that the top-10,000 human range is fairly wide. E.g., in a pretty quantifiable domain like chess, the 10,000th position is not even international master (the level below GM) and will be ~2,400 Elo,[1] while the top player is ~2,850, for a difference of roughly 450 Elo points. If I'm reading the AI Impacts analysis correctly, this type of progress took on the order of 7-8 years.

The bottom 1% of chess players is maybe 500 rating.[2] So measured by Elo, the entire practical human range is about 2,400 points (2,900 - 500), or about 5.3x the width of the top-10k range.
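To make the arithmetic above easy to check, here is a minimal sketch in Python. The ratings are the rough figures quoted in this comment, not authoritative data, and the variable names are my own. As an extra illustration that is not in the original argument, it also plugs the top-10k gap into the standard Elo expected-score formula to show what a gap of that size means in practice.

```python
# Rough ratings quoted in the comment above (approximations, not official data).
TOP_PLAYER_ELO = 2850         # approximate rating of the top player
RANK_10K_ELO = 2400           # approximate rating of the 10,000th player
PRACTICAL_CEILING_ELO = 2900  # rounded ceiling used for the full human range
BOTTOM_1PCT_ELO = 500         # rough guess for the bottom 1% of players


def expected_score(rating_gap: float) -> float:
    """Standard Elo expected score for the higher-rated player."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# Width of the top-10k range and of the full practical human range.
top_10k_gap = TOP_PLAYER_ELO - RANK_10K_ELO
full_range = PRACTICAL_CEILING_ELO - BOTTOM_1PCT_ELO

# How many "top-10k gaps" fit into the full range.
ratio = full_range / top_10k_gap

# Expected score of the top player against the 10,000th player.
top_vs_10k = expected_score(top_10k_gap)

print(f"Top-10k gap: {top_10k_gap} Elo")
print(f"Full practical range: {full_range} Elo")
print(f"Ratio: {ratio:.1f}x")
print(f"Expected score across the top-10k gap: {top_vs_10k:.2f}")
```

Under these assumed numbers, the top player would be expected to score over 90% against the 10,000th player, which is one way of seeing that the top-10k range is not narrow.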

Estimates put the number of people who have ever played chess in the hundreds of millions, so this isn't an artifact of too few people playing chess.

I wouldn't be surprised if there are similarly large differences at the top in other domains.

For example, the difference between the 10,000th ML researcher and the best is probably the difference between a recent PhD graduate from a decent-but-not-amazing ML university and a Turing award winner.

[1] Deduced from the table here. By assumption, almost all top chess players have a FIDE rating. https://en.wikipedia.org/wiki/FIDE_titles#cite_ref-fideratings_5-0

[2] https://i.redd.it/s5smrjgqhjnx.png (using chess.com figures rather than FIDE because I assume FIDE ratings are pretty truncated at the low end, since very casual players do not go to clubs).