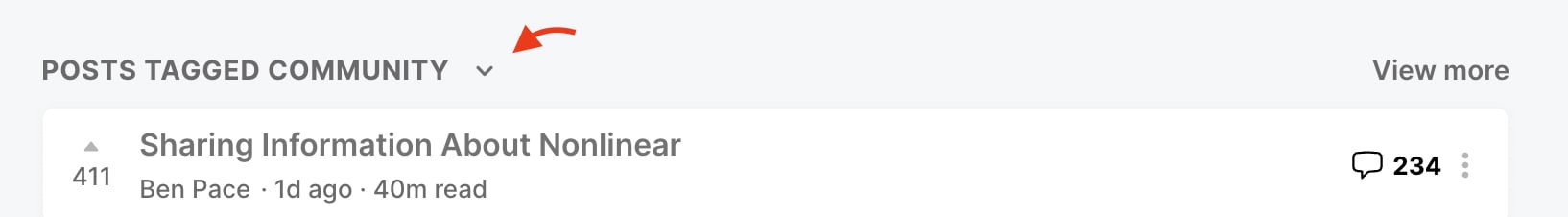

PSA: Apropos of nothing, did you know you can hide the community section?

(You can get rid of it entirely in your settings as well.)

Is there a way to snooze the community tab or snooze / hide certain posts? I would use this feature.

Here’s a puzzle I’ve thought about a few times recently:

The impact of an activity (I) is due to two factors, X and Y. Those factors combine multiplicatively to produce impact. Examples include:

- The funding of an organization and the people working at the org

- A manager of a team who acts as a lever on the work of their reports

- The EA Forum acts as a lever on top of the efforts of the authors

- A product manager joins a team of engineers

Let’s assume in all of these scenarios that you are only one of the players in the situation, and you can only control your own actions.

From a counterfactual analysis, if you can increase your contribution by 10%, then you increase the impact by 10%, end of story.

From a Shapley Value perspective, it’s a bit more complicated, but we can start with a prior that you split your impact evenly with the other players.

Both these perspectives have a lot going for them! The counterfactual analysis has important correspondences to reality. If you do 10% better at your job the world gets 0.1I better. Shapley Values prevent the scenario where the multiplicative impact causes the involved agents to collectively contribute too much.
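To make the contrast concrete, here is a minimal sketch for the two-factor case. It assumes a toy game in which impact is X times Y when both factors are present and zero otherwise; the factor levels (4 and 5) and all function names are made up for illustration.

```python
from itertools import permutations
from math import factorial

# Hypothetical factor levels; impact exists only when both factors are present.
FACTORS = {"X": 4.0, "Y": 5.0}

def impact(coalition):
    """Toy multiplicative impact: X * Y for the full coalition, else 0."""
    if set(coalition) == set(FACTORS):
        return FACTORS["X"] * FACTORS["Y"]
    return 0.0

def shapley_values(players, value):
    """Average each player's marginal contribution over all join orders."""
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: t / n_orders for p, t in totals.items()}

def counterfactual_values(players, value):
    """Impact lost if a single player is removed from the full coalition."""
    full = frozenset(players)
    return {p: value(full) - value(full - {p}) for p in players}

total = impact(frozenset(FACTORS))
shapley = shapley_values(list(FACTORS), impact)
counterfactual = counterfactual_values(list(FACTORS), impact)
```

In this toy game the counterfactual credits sum to twice the total impact, while the Shapley credits split it evenly and sum to exactly the total.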

I notice myself...

I'm annoyed at vague "value" questions. If you ask a specific question the puzzle dissolves. What should you do to make the world go better? Maximize world-EV, or equivalently maximize your counterfactual value (not in the maximally-naive way — take into account how "your actions" affect "others' actions"). How should we distribute a fixed amount of credit or a prize between contributors? Something more Shapley-flavored, although this isn't really the question that Shapley answers (and that question is almost never relevant, in my possibly controversial opinion).

Happy to talk about well-specified questions. Annoyed at questions like "should I use counterfactuals here" that don't answer the obvious reply, "use them FOR WHAT?"

I don’t feel 100% bought-in to the Shapley Value approach, and think there’s a value in paying attention to the counterfactuals. My unprincipled compromise approach would be to take some weighted geometric mean and call it a day.

FOR WHAT?

Let’s assume in all of these scenarios that you are only one of the players in the situation, and you can only control your own actions.

If this is your specification (implicitly / further specification: you're an altruist trying...

With the US presidential election coming up this year, some of y’all will probably want to discuss it.[1] I think it’s a good time to restate our politics policy. tl;dr Partisan politics content is allowed, but will be restricted to the Personal Blog category. On-topic policy discussions are still eligible as frontpage material.

[1] Or the expected UK elections.

The Forum went down this morning, for what I believe is the longest outage we've ever had. A bot was violating our robots.txt and performing a miniature (unintentional) DoS attack on us. This was perfectly timed with an upgrade to our infrastructure that made it hard to diagnose what was going on. We eventually figured out the cause and blocked the bot, and learned some lessons along the way. My apologies on behalf of the Forum team :(

Appreciation post for Saulius

I realized recently that the same author that made the corporate commitments post and the misleading cost effectiveness post also made all three of these excellent posts on neglected animal welfare concerns that I remembered reading.

Fish used as live bait by recreational fishermen

Rodents farmed for pet snake food

35-150 billion fish are raised in captivity to be released into the wild every year

For the first he got this notable comment from OpenPhil's Lewis Bollard. Honorable mention includes this post which I also remembered, doing good epistemic work fact-checking a commonly cited comparison.

Also, I feel that as the author, I get more credit than is due, it’s more of a team effort. Other staff members of Rethink Charity review my posts, help me to select topics, and make sure that I have to worry about nothing else but writing. And in some cases posts get a lot of input from other people. E.g., Kieran Greig was the one who pointed out the problem of fish stocking to me and then he gave extensive feedback on the post. My CEE of corporate campaigns benefited tremendously from talking with many experts on the subject who generously shared their knowledge and ideas.

Offer of help with hands-free input

If you're experiencing wrist pain, you might want to take a break from typing. But the prospect of not being able to interact with the world might be holding you back. I want to help you try voice input. It's been helpful for me to go from being scared about my career and impact to being confident that I can still be productive without my hands. (In fact this post is brought to you by nothing but my voice.) Right now I think you're the best fit if you:

- Have ever written code, even a small amount, or otherwise feel comfortable editing a config file

- Are willing to give it a few days

- Have a quiet room where you can talk to your computer

Make sure you have a professional microphone — order this mic if not, which should arrive the next day.

Then you can book a call with me. Make sure your mic will arrive by the time the call is scheduled.

I'd like to try my hand at summarizing / paraphrasing Matthew Barnett's interesting twitter thread on the FLI letter.[1]

The tl;dr is that trying to ban AI progress will increase the hardware overhang, and risk the ban getting lifted all of a sudden in a way that causes a dangerous jump in capabilities.

Background reading: this summary will rely on an understanding of hardware overhangs (second link), which is a somewhat slippery concept, and one I myself wish I understood at a deeper level.

***

Barnett Against Model Scaling Bans

Effectiveness of regulation and the counterfactual

It is hard to prevent AI progress. There's a large monetary incentive to make progress in AI, and companies can make algorithmic progress on smaller models. "Larger experiments don't appear vastly more informative than medium sized experiments."[2] The current proposals on the table only ban the largest runs.

Your only other option is draconian regulation, which will be hard to do well and will have unpredictable and bad effects.

Conversely, by default, Matthew is optimistic about companies putting lots of effort into alignment. It's economically incentivized. And we can see this happening: OpenAI has put more effort in...

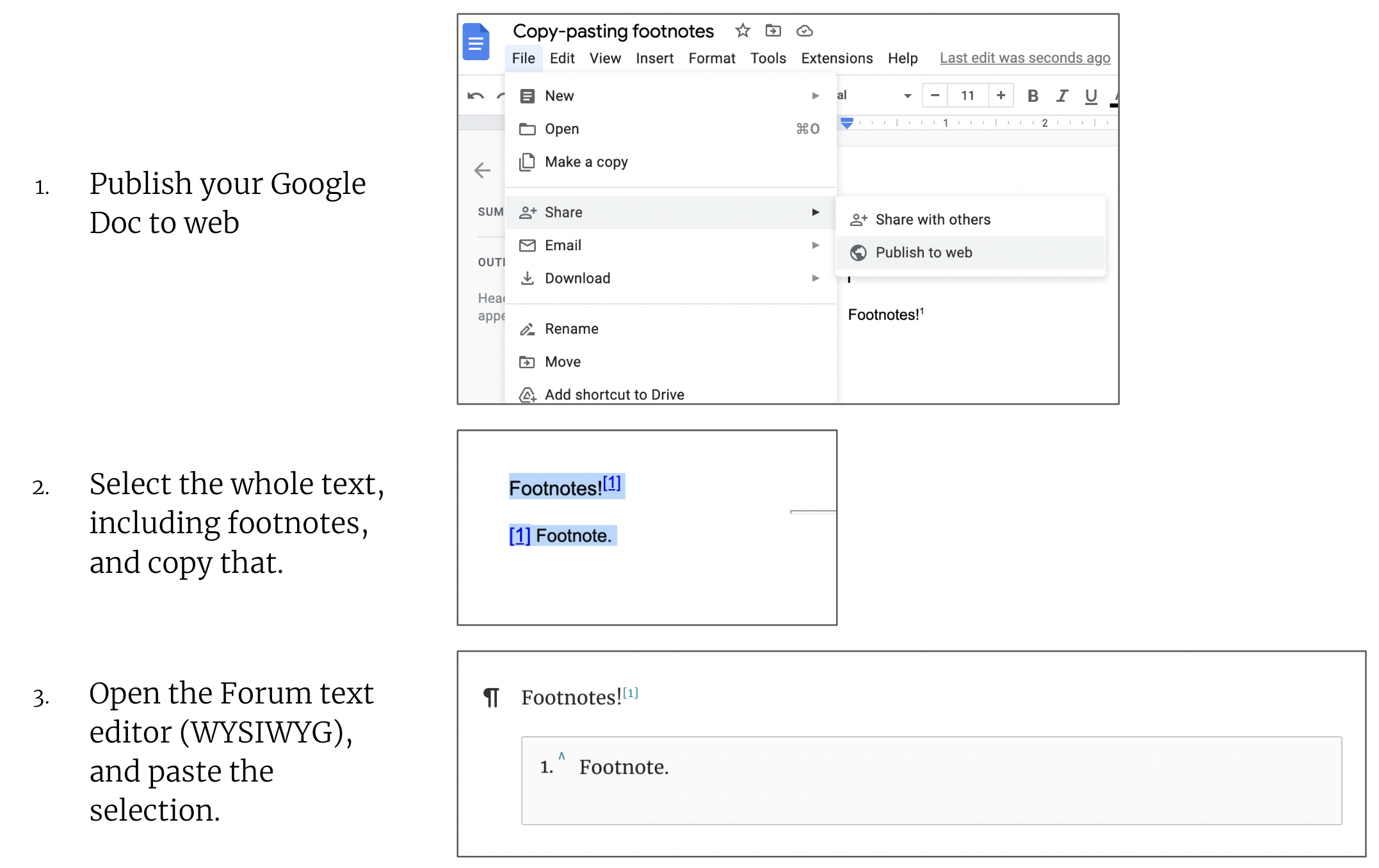

If you're copy-pasting from a Google Doc, you can now copy-paste footnotes into the WYSIWYG editor in the following way (which allowed us to get around the impossibility of selecting text + footnotes in a Google Doc):

- Publish your Google Doc to web

- You can do this by clicking on File > Share > Publish to web

- Then approve the pop-up asking you to confirm (hit "Publish")

- Then open the link that you'll be given; this is now the published-to-web version of your document.

- Select the whole text, including footnotes, and copy that. (If you'd like, you can now unpublish the document.)

- Open the Forum text editor (WYSIWYG), and paste the selection.

We'll announce this in a top-level post as part of a broader feature announcement soon, but I wanted to post this on my shortform to get this into the minds of some of the authors of the Forum.

Thanks to Jonathan Mustin for writing the code that made this work.

We now have Google Docs-style collaborative editing. Thanks a bunch to Jim Babcock of LessWrong for developing this feature.

How hard should one work?

Some thoughts on optimal allocation for people who are selfless but nevertheless human.

Baseline: 40 hours a week.

Tiny brain: Work more get more done.

Normal brain: Working more doesn’t really make you more productive, focus on working less to avoid burnout.

Bigger brain: Burnout’s not really caused by overwork. Furthermore, when you work more you spend more time thinking about your work. You crowd out other distractions that take away your limited attention.

Galaxy brain: Most EA work is creative work that benefits from:

- Real obsession, which means you can’t force yourself to do it.

- Fresh perspective, which can turn thinking about something all the time into a liability.

- Excellent prioritization and execution on the most important parts. If you try to do either of those while tired, you can really fuck it up and lose most of the value.

Here are some other considerations that I think are important:

- If you work hard you contribute to a culture of working hard, which could be helpful for attracting the most impactful people, who are more likely than average in my experience to be hardworking.

- Many people will have individual-specific reasons not to work hard. Some peopl

Note: due to the presence of spammers submitting an unusual volume of spam to the EA Topics Wiki, we've disabled creation and editing of topics for all users with <10 karma.

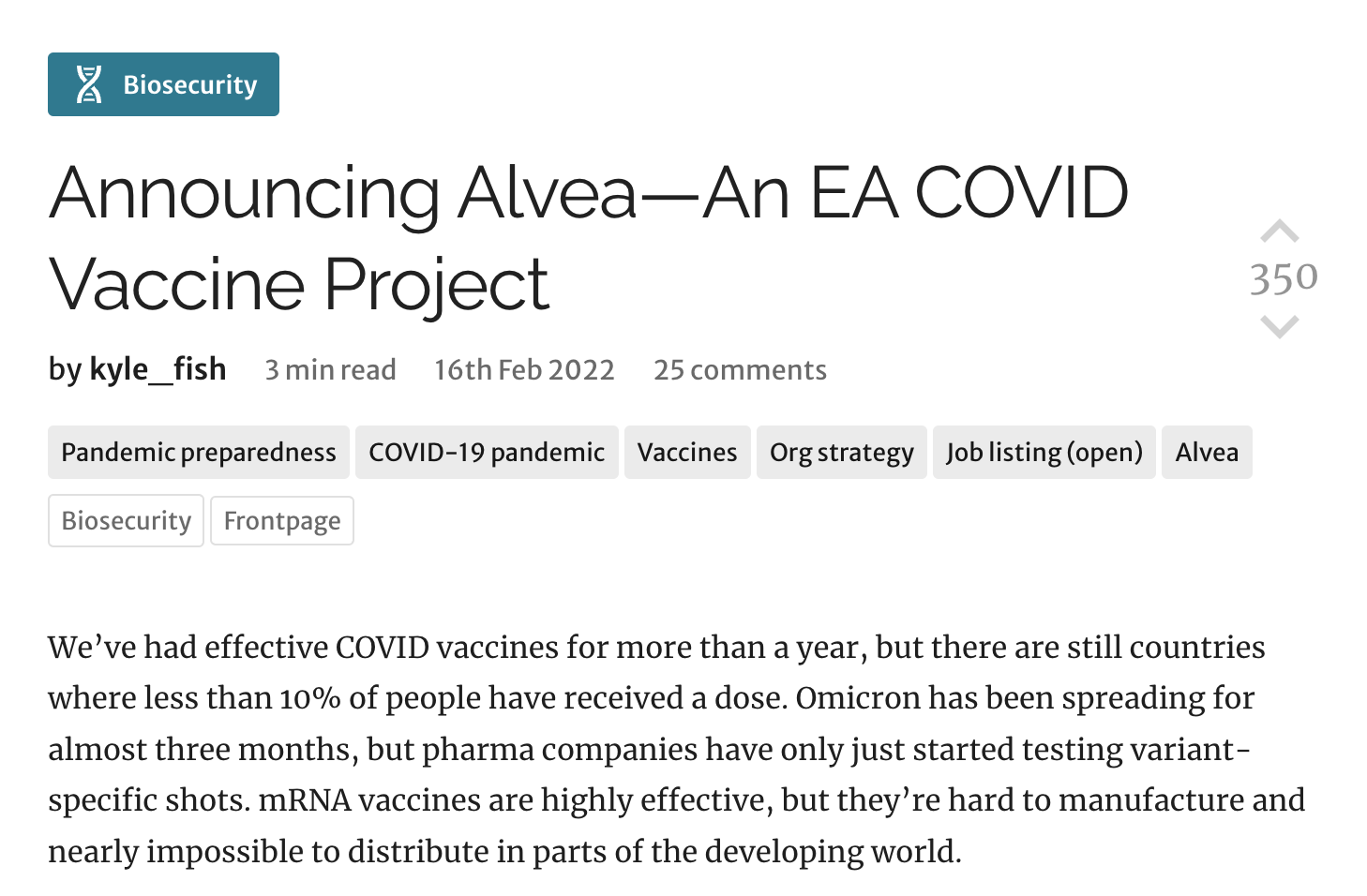

I've just released the first step towards having EA Forum tags become places where you can learn more about a topic after reading a post. Posting about it because it's a pretty noticeable change:

The tag at the top of the post makes it easier to notice that there's a category to this post. (It's the most relevant core tag to the post.) We had heard feedback from new users that they didn't know tags were a thing.

Once you get to the tag page, there's now a header image. More changes to the tag pages to give it more structure are coming.

Here's a thought you might have: "AI timelines are so short, it's significantly reducing the net present value[1] of {high school, college} students." I think that view is tempting based on eg (1, 2). However, I claim that there's a "play to your outs" effect here. Not in a "AGI is hard" way, but "we slow down the progress of AI capability development".

In those worlds, we get more time. And, with that extra time, it sure would seem great if we could have a substantial fraction of the young workforce care about X-Risk / be thinking with EA principl...

Consider time discounting in your cost-benefit calculations of avoiding tail risks

[Epistemic status: bit unendorsed, written quickly]

For many questions of tail risks (covid, nuclear war risk) I've seen EAs[1] doing a bunch of modeling of how likely the tail risk is, and what the impact will be, maybe spending tens of hours on it. And then when it comes time to compare that tail risk vs short term tradeoffs, they do some incredibly naive multiplication, like life expectancy * risk of death.

Maybe if you view yourself as a pure hedonist with zero discounting, but that's a hell of a mix. I at least care significantly about impact in my life, and the impact part of my life has heavy instrumental discounting. If I'm working on growing the EA community, which is exponentially growing at ~20% per year, then I should discount my future productivity at 20% per year.

[This section especially might be wrong] So altruistically, I should be weighing the future at a factor of about (not gonna show my work) $\int_0^{40} e^{-0.2t}\,dt$ times my productivity for a year, which turns out to be a factor of about 5.
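For anyone who does want to see the work, here is a rough sketch of that calculation. It assumes the integral runs from t = 0 to t = 40 years (my reading of the expression above) with the 20% per year discount rate from the previous paragraph; the function names are made up for illustration.

```python
import math

RATE = 0.2      # 20% per year, from the text
HORIZON = 40.0  # years; assumed upper limit of the integral

def discounted_years(rate, horizon):
    """Closed form of the integral of exp(-rate * t) for t from 0 to horizon."""
    return (1.0 - math.exp(-rate * horizon)) / rate

def discounted_years_numeric(rate, horizon, steps=100_000):
    """Midpoint-rule approximation of the same integral, as a cross-check."""
    dt = horizon / steps
    return sum(math.exp(-rate * (i + 0.5) * dt) for i in range(steps)) * dt

closed_form = discounted_years(RATE, HORIZON)
numeric = discounted_years_numeric(RATE, HORIZON)
print(f"closed form: {closed_form:.4f}, numeric: {numeric:.4f}")
```

Under these assumptions the closed form is (1 - e^(-8)) / 0.2, which is just under 5. Because almost all of the weight sits in the first couple of decades, extending the horizon past 40 years barely changes the result.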

Maybe you disagree with this! Good! But now this important part of the cost-benefit calcula...

Note from the Forum team: The Forum was intermittently down for roughly an hour and change last night/this morning, due to an issue with our domain provider.

Here's an interesting tweet from a thread by Ajeya Cotra:

But I'm not aware of anyone who successfully took complex deliberate *non-obvious* action many years or decades ahead of time on the basis of speculation about how some technology would change the future.

I'm curious for this particular line to get more discussion, and would be interested in takes here.

A tax, not a ban

In which JP tries to learn about AI governance by writing up a take. Take tl;dr: Overhang concerns and desire to avoid catchup effects seem super real. But that need not imply speeding ahead towards our doom. Why not try to slow everything down uniformly? — Please tell me why I’m wrong.

After the FLI letter, the debate in EA has coalesced into “6 month pause” vs “shut it all down” vs some complicated shrug. I’m broadly sympathetic to concerns (1, 2) that a moratorium might make the hardware overhang worse, or make competi...

Posting this on shortform rather than as a comment because I feel like it's more personal musings than a contribution to the audience of the original post —

Things I'm confused about after reading Will's post, Are we living at the most influential time in history?:

What should my prior be about the likelihood of being at the hinge of history? I feel really interested in this question, but haven't even fully read the comments on the subject. TODO.

How much evidence do I have for the Yudkowsky-Bostrom framework? I'd like to get better at comparing the strength of an argument to the power of a study.

Suppose I think that this argument holds. Then it seems like I can make claims about AI occurring because I've thought about the prior that I have a lot of influence. I keep going back and forth about whether this is a valid move. I think it just is, but I assign some credence that I'd reject it if I thought more about it.

What should my estimate of the likelihood we're at the HoH be if I'm 90% confident in the arguments presented in the post?

This first shortform comment on the EA Forum will be both a seed for the page and a description.

Shortform is an experimental feature brought in from LessWrong to allow posters a place to put quickly written thoughts down, with less pressure to make it to the length / quality of a full post.

Temporary site update: I've taken down the allPosts page. It appears we have a bot hitting the page, and it's causing the site to be slow. While I investigate, I've simply taken the page down. My apologies for the inconvenience.

Thus starts the most embarrassing post-mortem I've ever written.

The EA Forum went down for 5 minutes today. My sincere apologies to anyone whose Forum activity was interrupted.

I was first alerted by Pingdom, which I am very glad we set up. I immediately knew what was wrong. I had just hit "Stop" on the (long unused and just archived) CEA Staff Forum, which we built as a test of the technology. Except I actually hit stop on the EA Forum itself. I turned it back on and it took a long minute or two, but was soon back up.

...

Lessons learned:

* I've seen sites that, after you press the big red button that says "Delete", make you enter the name of the service / repository / etc. you want to delete. I like those, but did not think of porting it to sites without that feature. I think I should install a TAP that whenever I hit a big red button, I confirm the name of the service I am stopping.

* The speed of the fix leaned heavily on the fact that Pingdom was set up. But it doesn't catch everything. In case it doesn't catch something, I just changed it so that anyone can email me with "urgent" in the subject line and I will get notified on my phone, even if it is on silent. My email is jp at organizationwebsite.
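For tooling you control, the type-the-name check from the first lesson can be sketched in a few lines. This is only an illustration of the pattern, not how any particular hosting dashboard works; the service names and function names below are hypothetical.

```python
def confirm_by_name(service_name, typed_name):
    """Return True only if the operator typed the exact service name."""
    return typed_name.strip() == service_name

def stop_service(service_name, typed_name, stop):
    """Call `stop` only after the typed name matches; report whether it ran."""
    if not confirm_by_name(service_name, typed_name):
        return False
    stop(service_name)
    return True

stopped = []  # stands in for the real "stop" side effect

# The operator means to stop the staff forum but has the EA Forum selected:
# the typed name does not match the selected service, so nothing is stopped.
blocked = stop_service("ea-forum", "cea-staff-forum", stopped.append)

# With the intended service selected, the typed name matches and the stop runs.
allowed = stop_service("cea-staff-forum", "cea-staff-forum", stopped.append)
```

The check works because the operator has to state their intent independently of what is selected on screen, so a mismatch between the two gets caught before anything is stopped.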

On the incentives of climate science

Alright, the title sounds super conspiratorial, but I hope the content is just boring. Epistemic status: speculating, somewhat confident in the dynamic existing.

Climate science as published by the IPCC tends to

1) Be pretty rigorous

2) Not spend much effort on the tail risks

I have a model that they do this because of their incentives for what they're trying to accomplish.

They're in a politicized field, where the methodology is combed over and mistakes are harshly criticized. Also, they want to show enough damage from climate change to make it clear that it's a good idea to institute policies reducing greenhouse gas emissions.

Thus they only need to show some significant damage, not a global catastrophic one. And they want to maintain as much rigor as possible to prevent the discovery of mistakes, and it's easier to be rigorous about things that are likely than about tail risks.

Yet I think longtermist EAs should be more interested in the tail risks. If I'm right, then the questions we're most interested in are underrepresented in the literature.

Working questions

A mental technique I’ve been starting to use recently: “working questions.” When tackling a fuzzy concept, I’ve heard of people using “working definitions” and “working hypotheses.” Those terms help you move forward on understanding a problem without locking yourself into a frame, allowing you to focus on other parts of your investigation.

Often, it seems to me, I know I want to investigate a problem without being quite clear on what exactly I want to investigate. And the exact question I want to answer is quite important! And instead of ne...

Scoreboard

Or: Let impact outcomes settle debates

When American sports players try to trash talk their opponents, the opponents will sometimes retort with the word "scoreboard" along with pointing at the screen showing that they plainly have more points. This is to say, basically, "whatever bro, I'm winning". It's a cocky response, but it's hard to argue with. I'll try to keep this post not-cocky.

I think of this sometimes in the context of the EA ecosystem. Sometimes I am astounded by how disorganized someone might be, and because of that I might be tempted ...

For a long time the EA Forum has struggled with what to call its tag / wiki thing. Our interface had several references to "this wiki-tag". As part of our work to improve the tagging feature, we've now renamed it to Topics.

I should write up an update about the Decade Review. Do you have questions about it? I can answer them here, but it will also help me inform the update.

We're planning Q4 goals for the Forum.

Do you use the Forum? (Probably, considering.) Do you have feelings about the Forum?

If you send me a PM, one of the CEA staffers running the Forum (myself or Aaron) will set up a call where you can tell us all the things you think we should do.

I'm wondering about the ability to up-vote one's own posts and comments. I find that a bit of an odd system. My guess would be that someone up-voting their own post is a much weaker signal of quality than someone up-voting someone else's post.

Also, it feels a bit entitled/boastful to give a strong up-vote to one's own posts and comments. I'm therefore reluctant to vote on my own work.

Hence, I'd suggest that one shouldn't be able to vote on one's own posts and comments.

Posts are not listed in order of relevance. You need to know exact words from the post you're searching for in order to find it - preferably exact words from the title.

For example, if I wanted to find your post from four days ago on long term future grants and typed in "grants", your post wouldn't appear, because your post uses the word "grant" in the title instead.
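The "grant" vs "grants" miss is what you'd expect from exact word matching. Here is a toy sketch of the difference a stemming step makes. It does not describe the Forum's actual search; the title string and the deliberately crude stemmer are made up for illustration.

```python
def exact_match(query, title):
    """True if the query appears as a whole word in the title."""
    return query.lower() in title.lower().split()

def naive_stem(word):
    """Crude illustrative stemmer: strip a single trailing 's'."""
    word = word.lower()
    return word[:-1] if word.endswith("s") and len(word) > 3 else word

def stemmed_match(query, title):
    """True if the query and some title word share the same naive stem."""
    return naive_stem(query) in {naive_stem(w) for w in title.split()}

TITLE = "Long-Term Future Fund grant recommendations"  # hypothetical title

found_exact = exact_match("grants", TITLE)
found_stemmed = stemmed_match("grants", TITLE)
```

With exact matching the query "grants" misses the title, while the stemmed comparison finds it. Real search engines use more careful stemmers and relevance ranking than this.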

I want to write a post saying why Aaron and I* think the Forum is valuable, which technical features currently enable it to produce that value, and what other features I’m planning on building to achieve that value. However, I've wanted to write that post for a long time and the muse of public transparency and openness (you remember that one, right?) hasn't visited.

Here's a more mundane but still informative post, about how we relate to the codebase we forked off of. I promise the space metaphor is necessary. I don't know whether to apo...

Tip: if you want a way to view Will's AMA answers despite the long thread, you can see all his comments on his user profile.

We've been experiencing intermittent outages recently. We've tried fixes for several possible causes, but none has resolved the problem, so we're still working on it. If you see an error saying:

"503 Service Unavailable: No healthy endpoints to handle the request. [...]"

Try refreshing, or waiting 30 seconds and then refreshing; they're very transient errors.

Our apologies for the disruption.
