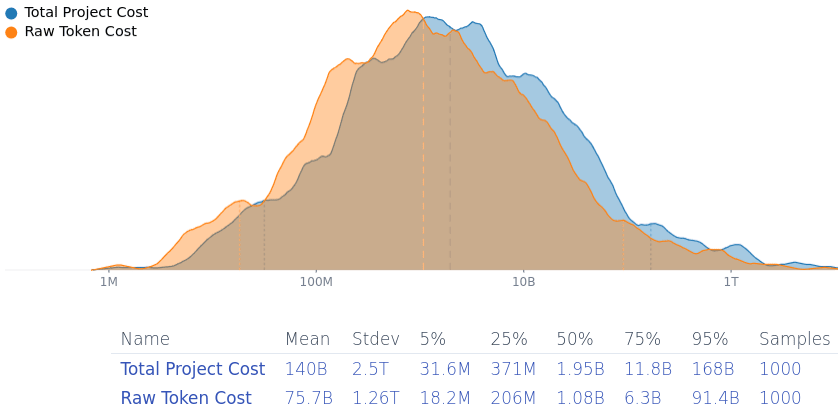

Summary: Patching all exploits in open-source software that forms the backbone of the internet would be hard on maintainers, less effective than thought, and expensive (Fermi estimate included, 5%/50%/95% cost ~$31 mio./~$1.9 bio./~$168 bio.). It's unclear who'll be willing to pay that.

Preventative measures discussed for averting an AI takeover attempt include hardening the world's software infrastructure against attacks. The plan is to use lab-internal (specialized?) software engineering AI systems to submit patches that fix all findable security vulnerabilities in open-source software (think a vastly expanded and automated version of Project Zero), likely in partnership with companies developing internet-critical software (the likes of Cisco & Huawei).

I think that that plan is net-positive. I also think that it has some pretty glaring open problems (in ascending order of exigency): (1) Maintainer overload and response times, (2) hybrid hardware/software vulnerabilities, and (3) cost as a public good (also known as "who's gonna pay for it?").

Maintainer Overload

If transformative AI is developed soon, most open source projects (especially old ones relevant to internet infrastructure) are going to be maintained by humans with human response times. That will significantly increase the time it takes for relevant security patches to be reviewed and merged into existing codebases, especially if attackers are at the same time submitting subtle exploits, AI-generated or co-developed with AI systems six to nine months behind the leading capabilities, which keeps maintainers especially vigilant.

Hybrid and Hardware Vulnerabilities

My impression is that vulnerabilities are moving from software-only vulnerabilities towards very low-level microcode or software/hardware hybrid vulnerabilities (e.g. Hertzbleed, Spectre, Meltdown, Rowhammer, Microarchitectural Data Sampling, …), for which software fixes, if they exist, have pretty bad performance penalties. GPU-level vulnerabilities get less attention, but they absolutely exist, e.g. LeftoverLocals and JellyFish. My best guess is that cutting-edge GPUs are much less secure than CPUs, since they've received less attention from researchers and their documentation is less easily accessible. (They probably have less cruft from bad design choices in early computer history.) Hence: Software-only vulnerabilities are easy to fix, software/hardware hybrid ones are more painful to fix, and hardware vulnerabilities escape quick fixes (in the extreme demanding a recall, like the Pentium FDIV bug). And don't get me started on the vulnerabilities lurking in human psychology, which are basically impossible to fix on short time-scales…

Who Pays?

Finding vulnerabilities in all the relevant security infrastructure of the internet and fixing them might be expensive. 1 mio. input tokens for Gemini 2.0 Flash cost $0.15, and 1 mio. output tokens cost $0.60, but a model able to find & fix security vulnerabilities is going to be more expensive. An AI-generated me-adjusted Squiggle model estimates that it'd cost (median estimate) ~$1.9 bio. to fix most vulnerabilities in open-source software (90% confidence-interval: ~$31 mio. to ~$168 bio., mean estimated cost is… gulp… ~$140 bio.).

(I think the analysis under-estimates the cost because it doesn't consider setting up the project, paying human supervisors and reviewers, costs for testing infrastructure & compute, finding complicated vulnerabilities that arise from the interaction of different programs…).
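
One way to see why the mean sits so far above the median: the sketch below fits a single lognormal distribution to the 90% interval quoted above. Treating the total cost as lognormal is an assumption of this sketch, not a property of the Squiggle model (which combines several distributions), and the helper name `fit_lognormal` is made up for illustration.

```python
import math
from statistics import NormalDist


def fit_lognormal(p05: float, p95: float) -> tuple[float, float]:
    """Return (mu, sigma) of the lognormal whose 5th and 95th
    percentiles equal p05 and p95 (assumption: cost is lognormal)."""
    z95 = NormalDist().inv_cdf(0.95)  # standard normal 95th percentile
    mu = (math.log(p05) + math.log(p95)) / 2
    sigma = (math.log(p95) - math.log(p05)) / (2 * z95)
    return mu, sigma


# 90% interval quoted in the post, in USD
P05, P95 = 31e6, 168e9

mu, sigma = fit_lognormal(P05, P95)
median = math.exp(mu)                 # lognormal median
mean = math.exp(mu + sigma**2 / 2)    # lognormal mean

print(f"median ~ ${median / 1e9:.1f} bio.")
print(f"mean   ~ ${mean / 1e9:.0f} bio.")
print(f"mean / median ~ {mean / median:.0f}x")
```

Under that assumption the fitted median comes out around $2.3 bio., close to the ~$1.9 bio. above, and the mean is roughly 30 times the median. With an interval spanning almost four orders of magnitude, the right tail dominates the mean, which is the same qualitative pattern as the ~$140 bio. figure.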

It was notable when Google paid $600k for open-source fuzzing, so >~$1.9 bio. is going to be… hefty. The discussion on this has been pretty far mode and "surely somebody is going to do that when it's 'so easy'", but there have been fewer remarks about the expense and who'll carry the burden. For comparison, the 6-year budget for Horizon Europe (which funds, as a tiny part of its portfolio, open source projects like PeerTube and the Eclipse Foundation) is 93.5 bio. €, and the EU Next Generation Internet programme has spent 250 mio. € (2018-2020) + 62 mio. € (2021-2022) + 27 mio. € (2023-2025) = ~339 mio. € on funding open-source software.

Another consideration is that this project would need to be finished quickly, potentially in less than a year, as open weights models catch up and frontier models become more dangerous. So humanity will not be able to wait until the frontier models become cheaper so that it'll be less expensive: as soon as automated vulnerability finding becomes feasible, attackers will be racing to exploit vulnerabilities and defenders will be racing to patch them.

So, a proposal: Whenever someone claims that LLMs will d/acc us out of AI takeover by fixing our infrastructure, they will also have to specify who will pay the costs of setting up this project and running it.

While this is a good argument against it indicating governance-by-default (if people are saying that), securing longtermist funding to work with the free software community over this (thus overcoming two of the three hurdles) still seems to be a potentially very cost-effective way to reduce AI risk to look into, particularly combined with differential technological development of AI defensive v. offensive capacities.

That's maybe a more productive way of looking at it! Makes me glad I estimated more than I claimed.

I think governments are probably the best candidate for funding this, or AI companies in cooperation with governments. And it's an intervention which has limited downside and is easy to scale up/down, with the most important software being evaluated first.

Remark in guaranteed safe AI newsletter:

Niplav writes

I’m almost centrally the guy claiming LLMs will d/acc us out of AI takeover by fixing infrastructure, technically I’m usually hedging more than that but it’s accurate in spirit.

I usually say we prove the patches correct! But Niplav is correct: it’s a hard social problem, many critical systems maintainers are particularly slop-phobic and won’t want synthetic code checked in. That’s why I try to emphasize that the two trust points are the spec and the checker, and the rest is relinquished to a shoggoth. That’s the vision anyway– we solve this social problem by involving the slop-phobic maintainers in writing the spec and conveying to them how trustworthy the deductive process is.

Niplav’s squiggle model: Median $~1b worth of tokens, plus all the “setting up the project, paying human supervisors and reviewers, costs for testing infrastructure & compute, finding complicated vulnerabilities that arise from the interaction of different programs…” etc costs. I think a lot’s in our action space to reduce those latter costs, but the token cost imposes a firm lower bound.

But this is an EA Forum post, meaning the project is being evaluated as an EA cause area: is it cost effective? To be cost effective, the savings from alleviating some disvalue have to be worth the money you’ll spend. As a programming best practices chauvinist, one of my pastimes is picking on CrowdStrike, so let’s not pass up the opportunity. The 2024 outage is estimated to have cost about $5b across the top 500 companies excluding Microsoft. A public goods project may not have been able to avert CrowdStrike, but it’s instructive for getting a flavor of the damage, and this number suggests it could be easily worth spending around Niplav’s estimate. On cost effectiveness though, even I (who works on this “LLMs driving Hot FV Summer” thing full time) am skeptical, only because open source software is pretty hardened already. Curl/libcurl saw 23 CVEs in 2023 and 18 in 2024, which it’d be nice to prevent but really isn’t a catastrophic amount. Other projects are similar. I think a lot about the Tony Hoare quote “It has turned out that the world just does not suffer significantly from the kind of problem that our research was originally intended to solve.” Not every bug is even an exploit.

I'm happy this is reaching exactly the right people :-D

As for proving invariances, that makes sense as a goal, and I like it. If I perform any follow-up I'll try to estimate how many more tokens that'll produce, since IIRC seL4 or CakeML had proofs that exceeded 10× the length of their source code.

A recent experience I've had is to try and use LLMs to generate Lean definitions and proofs for a novel mathematical structure I'm toying with, they do well with anything below 10 lines but start to falter with more complicated proofs, and `sorry` their way out of anything I'd call non-trivial. My understanding is that a lot of software formal verification is gruntwork but there also needs to be interwoven moments of brilliance.

I'm always unsure what to think of claims that markets will incentivize the correct level of investment in software security. Like, initial computer security in the aughts seems to me like it was actually pretty bad, and while it became better over time it did take at least a decade. From afar, markets look efficient, from close up you can see efficiency establish itself. And then it's the question how much of the cost is internalized, which I feel for private companies should be close to 100%? For open source projects of course that number then goes close to zero.

It'd be cool to see a time series of the number of found exploits in open source software, thanks for the `curl` numbers. You picked a fairly old/established codebase with an especially dedicated developer, I wonder what it's like with newer ones, and whether one discovers more exploits in the early, middle, or late stage of development. The adoption of better programming languages than C/C++ of course helps.

Lean synthesis capabilities aren't maximally elicited right now, because a lot of people view it as a text-to-text problem which leads to a bunch of stress about low amount of syntax in the pretraining data and the high code velocity (until about a year ago, language models still hadn't fully internalized migrations from Lean3 to Lean4). Techniques like Cobblestone or the stuff that Higher Order Company talks about (logic programming / language model API call hybrid architecture) seem really promising to me (and, especially as HOC points out, so much cheaper!).
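
For readers who haven't used Lean, here is a minimal sketch of what the `sorry` mentioned above does. The theorem names are made up for illustration, and the example assumes plain Lean 4 without Mathlib.

```lean
-- A finished proof: Lean checks that `n + 0` reduces to `n` by definition.
theorem add_zero_example (n : Nat) : n + 0 = n := by
  rfl

-- An unfinished proof: `sorry` closes any goal, so the file still compiles,
-- but Lean warns that the declaration depends on `sorry` and nothing has
-- actually been verified.
theorem add_comm_example (a b : Nat) : a + b = b + a := by
  sorry
```

A generated proof that compiles is therefore only trustworthy if it contains no `sorry`, which is something a checker can test for mechanically.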

Thanks for your comment. I had broken my ankle in three places and was on too much oxycodone to engage the first time I read it. I continue to recommend your essay a lot.

Yeah, I goofed by using Claude for math, not any of the OpenAI models, which are much better at math.

Not draft amnesty but I'll take it. Yell at me below to get my justification for the variable-values in the Fermi estimate.