- I stated “I see this mostly as an experiment into whether having a simple ‘event’ can cause people to publish more stuff” and I feel like the answer is conclusively “yes”. I put a little bit of effort into pitching people, and I’m sure that my title and personal connections didn’t hurt, but this really was not a terribly heavy lift.

- Thanks to the fortnight I have a post I can reference for EA being a do-ocracy! I would encourage other people to try to organize things like this.

- I noticed an interesting phenomenon: contributors who are less visible in EA wanted to participate because they thought it would give their writing more attention, and people who are more visible in EA wanted to participate because they thought it would give their writing less attention.[1]

- I think the average EA might underestimate the extent to which being visible in EA (e.g. speaking at EAG) is seen as a burden rather than an opportunity.

- This feels like an important problem to solve, though outside the bounds of this project.

3. Part of my goal was to get conversations that are happening in private into a more public venue. I think this basically worked, at least measured by “conversations that I personally have been having in private”. There are some ways, though, in which karma did not reflect what I personally thought was most important:

- I’ve started to worry that it might be important to get digital sentience work (e.g. legal protection for digital beings) done before we get transformative AI, and EAs seem like approximately the only people who could realistically do this in the next ~5 years.[2] So I would have liked to see more engagement with this post, although in fairness Jeff wasn’t making a strong pitch for prioritizing AI welfare.

- I also find myself making the points that Arden raised here pretty regularly, and wish there were more engagement with them.

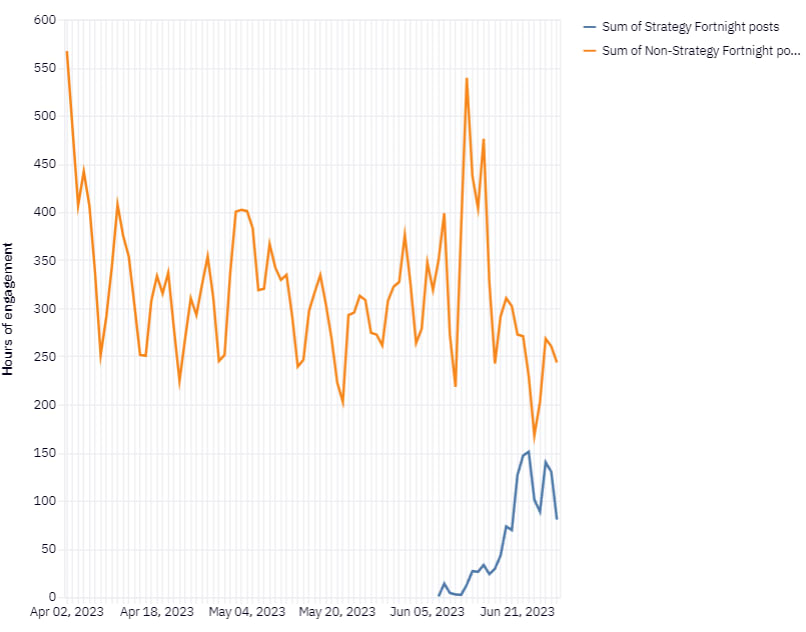

4. When doing a “special event” on the Forum, I always wonder whether the event will add to the Forum’s engagement or just cannibalize existing engagement. I think the Strategy Fortnight was mostly additive, although it’s pretty hard to know the counterfactual.

5. Some events I would be interested in seeing someone organize on the Forum:

- “Everyone change your job week”[3] – opportunity for people to think seriously about whether they should change their jobs, write up a post about it, and then get feedback from other Forum users

- Rotblat day – Joseph Rotblat was a physicist on the Manhattan Project who was originally motivated by wanting to defeat Nazi Germany, but withdrew once he realized the project was actually motivated by wanting to defeat the USSR. On Rotblat day, people post what signs they would look for to determine whether their work was counterproductive.

- “Should we have an AI moratorium?” debate week – basically the comments here, except you recruit people to give longer-form takes.

- Video week – people create and post video versions of Forum articles (or other EA content)

Firstly, thank you for stepping up at this crucial time for the EA community, Ben. I can only imagine how challenging it must be.

Some thoughts about the Strategy Fortnight: I think it was a good experiment on a platform that many in the community value highly (significantly more than I do). I enjoyed many of the posts, especially Will's. It seems to have improved the quality and coordination of more targeted, constructive posts, and it increased short-term engagement around one particular initiative/topic. Still, those most likely to contribute in this manner are those who have strong connections in the community (or are regular Forum users; just look at who published the top posts). I see this as one piece of the community engagement/feedback puzzle (the EA Community Survey is another great example).

I would love to see other initiatives tried out. Just to name a few: a parliamentary model of decision-making across EA organizations (this is a bit complicated to explain succinctly; I would be happy to discuss it further); quarterly public/live meetings/events from each large organization in the EA space that are designed to increase transparency and engagement/feedback; and a leadership summit for EA org leaders to coordinate on cross-community strategy and develop a comprehensive risk assessment (of current and future risks to organizations and "the EA brand") and relevant mechanisms to address these risks.

Thanks for your thoughtfulness about how to improve the community!

Related: https://www.lesswrong.com/posts/pDzdb4smpzT3Lwbym/my-model-of-ea-burnout?commentId=Xz2xzWEuLAiHsFWzf

I had high expectations and they were exceeded! I really appreciated the positivity and encouraging vibe, as well as gaining a better understanding of the EA community landscape from the leadership perspective (MacAskill), the funding perspective, and the different community-building movements around the world.

Love the Fortnite meme, although your hit rate on people understanding it might be under 50 percent here haha.

Can you ELI30 the meme?

There is a video game named Fortnite, and when you win you see the screenshot included at the top of this post.[1]

(I think, I've never actually played the game, I only know the memes.)

I really enjoyed the fortnight and found a lot of the discussion to be really good! I'm also grateful to Ben for noting that a lot of people, even famous people who'd agreed in advance to write posts, were likely to procrastinate. That comment was the kick that got me to finally write the post about disability issues I'd been meaning to write for quite some time.

Oh cool, I'm glad to hear that this prompted you to write that post! I thought it was helpful.

I really like this one. I think its success should be judged by the number of people quitting their jobs in the next month. Not that everyone should, but that's the proof that it's a live option people are taking seriously.

I would participate in video week!

How about May 7, the day of the German surrender?

Re: others organizing special events on the Forum:

I think it might help this happen if CEA came up with a process for it, including CEA promoting events organized by non-CEA people.

There could be a "Forum special event" form, where someone can suggest a theme, write up a description, and get feedback from CEA on it. Maybe the feedback would be to clarify the description, or to suggest a specific time so the event is synchronized (or not) with other related events. E.g. if there's an insect welfare conference happening at time X, it might work well to have an insect welfare Forum fortnight soon after that.

The promotion could then be including the event announcement in the EA Newsletter or pinning the announcement post to the Forum's home page for a while.

I was interested to see you mention this, as this is something I think is very important.

The phrasing here got me thinking a bit about what it would look like if we were to try to make meaningful changes within 5 years specifically.

But I was wondering why you used the "~5 years" phrasing here.

(Do you think transformative AI is likely within 5 years?)

Metaculus currently says 7% (for one definition of "transformative").

But I chose five years more because it's hard for me to predict things further in the future, rather than because most of my probability mass is in the <5 year range.