This essay is a personal history of the $60+ million I allocated to metascience starting in 2012 while working for the Arnold Foundation (now Arnold Ventures). It's long (22k+ words with 250+ links), so keep reading if you want to know:

- How the Center for Open Science started

- How I accidentally up working with the John Oliver show

- What kept PubPeer from going under in 2014

- How a new set of data standards in neuroimaging arose

- How a future-Nobel economist got started with a new education research organization

- How the most widely-adopted set of journal standards came about

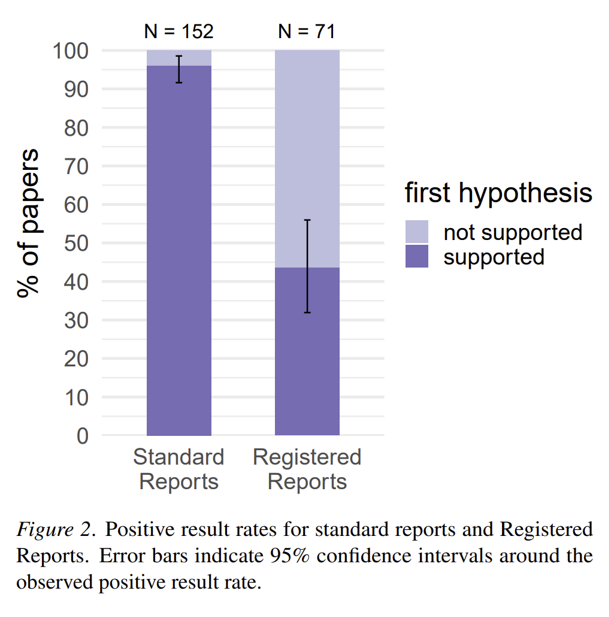

- Why so many journals are offering registered reports

- How writing about ideas on Twitter could fortuitously lead to a multi-million grant

- Why we should reform graduate education in quantitative disciplines so as to include published replications

- When meetings are useful (or not)

- Why we need a new federal data infrastructure

I included lots of pointed commentary throughout, on issues like how to identify talent, how government funding should work, and how private philanthropy can be more effective. The conclusion is particularly critical of current grantmaking practices, so keep reading (or else skip ahead).

Introduction

A decade ago, not many folks talked about “metascience” or related issues like scientific replicability. Those who did were often criticized for ruffling too many feathers. After all, why go to the trouble of mentioning that some fields weren’t reproducible or high-quality? Top professors at Harvard and Princeton even accused their own colleagues of being “methodological terrorists” and the like, just for supporting replication and high-quality statistics.

The situation seems far different today. Preregistration has become much more common across many fields; the American Economic Association now employs a whole team of people to rerun the data and code from every published article; national policymakers at NIH and the White House have launched numerous initiatives on reproducibility and open science; and just recently, the National Academies hosted the Metascience 2023 conference.

How did we get from there to here? In significant part, it was because of “metascience entrepreneurs,” to use a term from a brilliant monograph by Michael Nielsen and Kanjun Qiu. Such entrepreneurs included folks like Brian Nosek of the Center for Open Science, and many more.

But if there were metascience entrepreneurs, I played a significant part as a metascience venture capitalist—not the only one, but the most active. While I worked at what was initially known as the Laura and John Arnold Foundation (now publicly known as Arnold Ventures), I funded some of the most prominent metascience entrepreneurs of the past 11 years, and often was an active participant in their work. The grants I made totaled over $60 million dollars. All of that was thanks to the approval of Laura and John Arnold themselves (John made several billion dollars running a hedge fund, and they retired at 38 with the intention of giving away 90+% of their wealth).

In the past few months, two people in the metascience world independently asked if I had ever written up my perspective on being a metascience VC. This essay was the result. I’ve done my best not to betray any confidentialities or to talk about anyone at Arnold Ventures other than myself. I have run this essay by many people (including John Arnold) to make sure my memory was correct. Any errors or matters of opinion are mine alone.

The Origin Story: How the Arnold Foundation Got Involved in Metascience

As I have often said in public speeches, I trace the Arnold Foundation’s interest in metascience to a conversation that I had with John Arnold in mid-2012.

I had started working as the foundation’s first director of research a few months prior. John Arnold and I had a conference call with Jim Manzi, the author of a widely-heralded book on evidence-based policy.

I don’t remember much about the call itself (other than that Manzi was skeptical that a foundation could do much to promote evidence-based policy), but I do remember what happened afterwards. John Arnold asked me if I had seen a then-recent story about a psychology professor at Yale (John Bargh) whose work wouldn’t replicate and who was extraordinarily upset about it.

As it turned out, I had seen the exact same story in the news. It was quite the tempest in a teapot. Bargh had accused the folks replicating his work of being empty-headed, while Nobel Laureate Daniel Kahneman eventually jumped in to say that the “train wreck” in psychological science could be avoided only by doing more replications.

In any event, John Arnold and I had an energetic conversation about what it meant for the prospect of evidence-based policy if much of the “evidence” might not, in fact, be true or replicable (and still other evidence remained unpublished).

I began a more systematic investigation of the issue. It turned out that across many different fields, highly-qualified folks had occasionally gotten up the courage to write papers that said something along the lines of, “Our field is publishing way too many positive results, because of how biased our methods are, and you basically can’t trust our field.” And there was the famous 2005 article from John Ioannidis: “Why Most Published Research Findings Are False.” To be sure, the Ioannidis article was just an estimate based on assumptions about statistical power, likely effect sizes, and research flexibility, but to me, it still made a decent case that research standards were so low that the academic literature was often indistinguishable from noise.

That’s a huge problem for evidence-based policy! If your goal in philanthropy is to give money to evidence-based programs in everything from criminal justice to education to poverty reduction, what if the “evidence” isn’t actually very good? After all, scholars can cherrypick the results in the course of doing a study, and then journals can cherrypick what to publish at all. At the end of the day, it’s hard to know if the published literature actually represents a fair-minded and evidence-based view.

As a result, the Arnolds asked me to start thinking about how to fix the problems of publication bias, cherrypicking, misuse of statistics, inaccurate reporting, and more.

These aren’t easy problems to solve, not by any means. Yes, there are many technical solutions that are on offer, but mere technical solutions don’t address the fact that scholars still have a huge incentive to come up with positive and exciting results.

But I had to start somewhere. So I started with what I knew . . .

Brian Nosek and the Birth of the Center for Open Science

I had already come across Brian Nosek after an April 2012 article in the Chronicle of Higher Education titled “Is Psychology About to Come Undone?” I was impressed by his efforts to work on improving standards in psychology. To be sure, I don’t normally remember what articles I saw in a specific month over 11 years ago, but in searching back through my Gmail, I saw that I had emailed Peter Attia (who recently published an excellent book!) and Gary Taubes about this article at the time.

Which could lead to another long story in itself: the Arnolds were interested in funding innovative nutrition research, and I advised them on setting up the Nutrition Science Initiative led by Attia and Taubes (here’s an article in Wired from 2014). As of early 2012, I was talking with Attia and Taubes about how to improve nutrition science, and wondered what they thought of Nosek’s parallel efforts in psychology.

In any event, as of summer 2012, I had a new charge to look into scientific integrity more broadly. I liked Nosek’s idea of running psychology replication experiments, his efforts to mentor a then-graduate student (Jeff Spies) in creating the Open Science Framework software, and in particular, a pair of articles titled Scientific Utopia I and Scientific Utopia II.

The first article laid out a new vision for scientific communication: “(1) full embrace of digital communication, (2) open access to all published research, (3) disentangling publication from evaluation, (4) breaking the ‘one article, one journal’ model with a grading system for evaluation and diversified dissemination outlets, (5) publishing peer review, and, (6) allowing open, continuous peer review.” The second article focused on “restructuring incentives and practices to promote truth over publishability.”

Even with the benefit of hindsight, I’m still impressed with the vision that Nosek and his co-authors laid out. One of the first things I remember doing was setting up a call with Brian. I then invited him out to Houston to meet with the Arnolds and the then-president of the Arnold Foundation (Denis).

At that point, no one knew where any of this would lead. As Brian himself has said, his goal at that point was to get us to fund a post-doc position at maybe $50k a year.

For my part, I thought that if you find smart people doing great things in their spare time, empowering them with additional capital is a good bet. I continue to think that principle is true, whether in philanthropy, business, or science.

After the meeting, Denis said that he and Arnolds had loved Brian’s vision and ideas. He said something like, “Can’t we get him to leave the University of Virginia and do his own thing full-time?” Out of that conversation, we came up with the name “Center for Open Science.” I went back to Brian and said that the Arnolds liked his vision, and asked if he would be willing to start up a “Center for Open Science”? Brian wasn’t hard to persuade, as the offer was consistent with his vision for science, yet far beyond what he had hoped to ask for.

He and I worked together on a proposal. In fact, in my Google Docs, I still have a draft from November 2012 titled, “Center for Open Science: Scenario Planning.” The introduction and goal:

“We aim to promote open science, evaluate or improve reproducibility of scientific findings, and improve the alignment of scientific practices to scientific values. Funding will ensure, accelerate, and broaden impact of our projects. All projects are interdisciplinary or are models to replicate across disciplines. The Center for Open Science (COS) will support innovation, open practices, and reproducibility in the service of knowledge-building. COS would have significant visibility to advance an open science agenda; establish and disseminate open science best practices; coordinate and negotiate with funders, journals, and IRBs for open science principles; support and manage open infrastructure; connect siloed communities particularly across disciplines; and, support open science research objectives.”

More than 10 years later, that’s still a fairly good description of what COS has been able to accomplish.

As of September 2013, there was a Google doc that included the following:

Mission

COS aims to increase openness, integrity, and reproducibility of scientific research.

Openness and reproducibility are core scientific values because science is a distributed, non-hierarchical culture for accumulating knowledge. No individual is the arbiter of truth. Knowledge accumulates by sharing information and independently reproducing results. Ironically, the reward structure for scientists does not provide incentives for individuals to pursue openness and reproducibility. As a consequence, they are not common practices. We will nudge incentives to align scientific practices with scientific values.

Summary of Implementation Objectives

1. Increase prevalence of scientific values - openness, reproducibility - in scientific practice2. Develop and maintain infrastructure for documentation, archiving, sharing, and registering research materials

3. Join infrastructures to support the entire scientific workflow in a common framework

4. Foster an interdisciplinary community of open source developers, scientists, and organizations

5. Adjust incentives to make “getting it right” more competitive with “getting it published”

6. Make all academic research discoverable and accessible

Not bad. I eventually was satisfied that the proposal was worth bringing back to the Arnolds, and asking for $5.25 million. That’s what we gave Brian as the first of several large operational grants—$5 million was designated for operational costs for running the Center for Open Science for the first three years, and $250k was for completing 100 psychology replication experiments.

We were off to the races.

Interlude

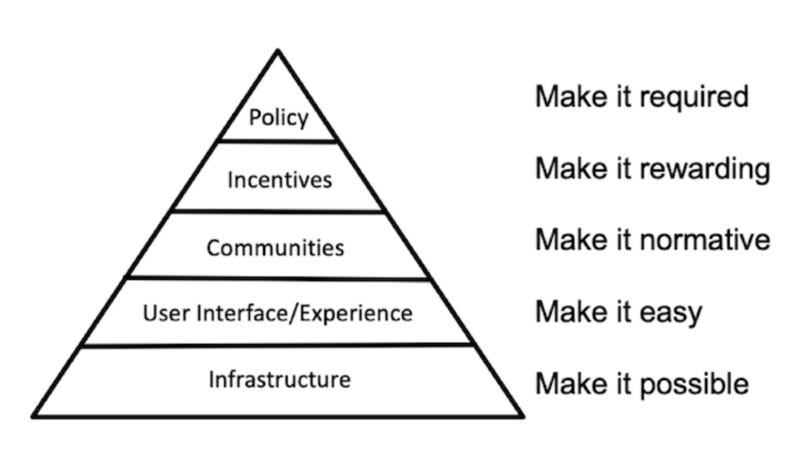

From this point forward, I won’t narrate all of the grants and activities chronologically, but according to broader themes that are admittedly a bit retrofitted. Specifically, I’m now a fan of the pyramid of social change that Brian Nosek has written and talked about for a few years:

In other words, if you want scientists to change their behavior by sharing more data, you need to start at the bottom by making it possible to share data (i.e., by building data repositories). Then try to make it easier and more streamlined, so that sharing data isn’t a huge burden. And so on, up the pyramid.

You can’t start at the top of the pyramid (“make it required”) if the other components aren’t there first. For one thing, no one is going to vote for a journal or funder policy to mandate data sharing if it isn’t even possible. Getting buy-in for such a policy would require work to make data sharing not just possible, but more normative and rewarding within a field.

That said, I might add another layer at the bottom of the pyramid: “Raise awareness of the problem.” For example, doing meta-research on the extent of publication bias or the rate of replication can make entire fields aware that they have a problem in the first place—before that, they aren’t as interested in potential remedies for improving research behaviors.

The rest of this piece will be organized accordingly:

Raise Awareness: fundamental research on the extent of irreproducibility;

Make It Possible and Make It Easy: the development of software, databases, and other tools to help improve scientific practices;

Make It Normative: journalists and websites that called out problematic research, and better standards/guidelines/ratings related to research quality and/or transparency;

Make It Rewarding: community-building efforts and new journal formats

Make It Required: organizations that worked on policy and advocacy.

Disclaimer: As applied to Arnold grantmaking in hindsight, these categories aren’t comprehensive or mutually exclusive, and are somewhat retrofitted. They won’t be a perfect fit.

I. Raise Awareness Via Fundamental Research

The Meta-Research Innovation Center at Stanford (METRICS)

Around the 2012-13 time frame, I read about Dustin Moskovitz and his partner Cari Tuna (it was something akin to this article from 2014), and their ambitions to engage in effective philanthropy. I asked John Arnold if he knew them, or how to get in touch with them. I ended up being redirected to Holden Karnofsky and Alexander Berger, who at the time worked at GiveWell (and since helped launch and run Open Philanthropy).

It turned out that they had been tentatively exploring metascience as well. They introduced me to Steve Goodman and John Ioannidis at Stanford, who had been thinking about the possibility of a center at Stanford focused on meta-research. Goodman sent along a 2-page (or so) document with the general idea, and I worked with him to produce a longer proposal.

My memory is no match for what Holden wrote at the time (more funders should do this while memories are still fresh!):

In 2012, we investigated the US Cochrane Center, in line with the then-high priority we placed on meta-research. As part of our investigation, we were connected – through our network – to Dr. Steven Goodman, who discussed Cochrane with us (notes). We also asked him about other underfunded areas in medical research, and among others he mentioned the idea of “assessing or improving the quality of evidence in the medical literature” and the idea of establishing a center for such work.

During our follow-up email exchange with Dr. Goodman, we mentioned that we were thinking of meta-research in general as a high priority, and sent him a link to our standing thoughts on the subject. He responded, “I didn’t know of your specific interest in meta-research and open science … Further developing the science and policy responses to challenges to the integrity of the medical literature is also the raison d’etre of the center I cursorily outlined, which is hard to describe to folks who don’t really know the area; I didn’t realize how far down that road you were.” He mentioned that he could send along a short proposal, and we said we’d like to see it.

At the same time, we were in informal conversations with Stuart Buck at the Laura and John Arnold Foundation (LJAF) about the general topic of meta-research. LJAF had expressed enthusiasm over the idea of the Center for Open Science (which it now supports), and generally seemed interested in the topic of reproducibility in the social sciences. I asked Stuart whether he would be interested in doing some funding related to the field of medicine as well, and in reviewing a proposal in that area that I thought looked quite promising. He said yes, and (after checking with Dr. Goodman) I sent along the proposal.

From that point on, LJAF followed its own process, though we stayed posted on the progress, reviewed a more fleshed-out proposal, shared our informal thoughts with LJAF, and joined (by videoconference) a meeting between LJAF staff and Drs. Ioannidis and Goodman.

Following the meeting with Drs. Ioannidis and Goodman, we told Stuart that we would consider providing a modest amount of co-funding (~10%) for the initial needs of METRICS. He said this wouldn’t be necessary as LJAF planned to provide the initial funding.

We (at the Arnold Foundation) ended up committing some $4.9 million dollars to launch the Meta-Research Innovation Center at Stanford, or METRICS. The goal was to produce more research (along with conferences, reports, etc.) on how to improve research quality in biomedicine, and to train the next generation of scholars in this field. METRICS did that in spades: they and their associated scholars published hundreds of papers on meta-science (mostly in biomedicine), and were able to hire quite a few postdocs to help create a future generation of scholars who focus on meta-science.

In 2015, METRICS hosted an international conference on meta-research that was well-attended by many disciplinary leaders. The journalist Christie Aschwanden was there, and she went around ambushing the attendees (including me) by asking politely, “I’m a journalist, would you mind answering a few questions on video?,” and then following that with, “In layman’s terms, can you explain what is a p-value?” The result was a hilarious “educational” video and article, still available here.

Steve Goodman has continued to do good work at Stanford, and is leading the Stanford Program on Research Rigor and Reproducibility (SPORR), a wide-ranging program whose goal is to “maximize the scientific value of all research performed in the Stanford School of Medicine.” SPORR now hands out several awards a year for Stanford affiliates who have taken significant steps to improve research rigor or reproducibility that can be used or modeled by others. It has also successfully incorporated those considerations into Stanford medical school’s promotion process and is working with leadership on institutional metrics for informative research.

The goal is to create “culture change” within Stanford and as a model for other academic institutions, so that good science practices are rewarded and encouraged by university officials (rather than just adding more publications to one’s CV). SPORR is arguably the best school-wide program in this regard, and it should be a model for other colleges and universities.

Bringing Ben Goldacre to Oxford

In the early 2010s, Ben Goldacre was both a practicing doctor in the UK and a professional advocate as to clinical trial transparency. He co-founded an EU-wide campaign called AllTrials on clinical trial transparency; wrote the book Bad Pharma (which both John Arnold and I read at the time); and wrote a nice essay on using randomized trials in education.

As I mentioned above, when someone is doing all of this in his spare time, a good bet would be to use philanthropy (or investment, depending on the sector) to enable that person to do greater things.

I reached out to Ben Goldacre in late 2013 or early 2014 (the timing is a bit unclear in my memory). I ended up sending the Arnolds an email titled, “The ‘Set Ben Goldacre Free’ Grant,” or something close to it.

My pitch was simple: We should enable Goldacre to pursue his many ideas as to data and evidence-based medicine, rather than being forced to do so in his spare time while working as a doctor by day.

I asked Goldacre if we could set him up with a university-based center or appointment. Unlike Brian Nosek, it took quite a bit of argument in this case. Goldacre was in the middle of buying a house in London. He wasn’t sure he could find such a good deal again, and he wanted to be very certain of our intentions before he backed out of the London house and moved anywhere else, such as Oxford (which itself was uncertain!). I eventually convinced him that the Arnold Foundation was good for its word, and that it was worth the move. We worked out the details with Oxford, and Arnold made a 5-year grant of $1.24 million in 2014.

Since then, Goldacre has thrived at Oxford. He spearheaded the TrialsTracker Project (which tracks which clinical trials have abided by legal obligations to report their results), and the COMPARE Project (which systematically looked at every trial published in the top 5 medical journals over a several-month period).

The latter’s findings were remarkable: out of 67 trials reviewed, only nine were perfect in their reporting. The other 58 had a collective 357 new outcomes “silently added” and 354 outcomes not reported at all (despite having been declared in advance). This is an indictment of the medical literature and of the ideal that clinical trials should register in advance and then report fully when done.

But it was an amazingly tedious project: while in the middle of all this work checking trial outcomes, Goldacre told me, “I rue the day I ever said I would do this.” It’s no wonder that hardly anyone else ever does this sort of work, and medical journals (among others) need better and more automated ways to check whether a publication is consistent with (or even contradicts) its registration, protocol, or statistical analysis plan.

Goldacre’s lab is now called the Bennet Institute for Applied Data Science, after a subsequent donor. The Institute maintains the OpenSafely project, which offers researchers access to well-curated medical records from 58 million people across the entire UK, as well as Open Prescribing, which does the same for prescription data. These efforts have led to many publications, particularly during Covid.

OpenSafely is especially interesting: despite the highly private nature of health data, Goldacre created a system in which “users are blocked from directly viewing the raw patient data or the research ready datasets, but still write code as if they were in a live data environment.” Instead, “the data management tools used to produce their research-ready datasets also produce simulated, randomly generated ‘dummy data’ that has the same structure as the real data, but none of the disclosive risks.”

Oxford professor Dorothy Bishop calls it a “phenomenal solution for using public data.” I think that federal and state governments in the US should do more to create similarly streamlined ways for researchers to easily analyze private data.

Goldacre recently gave his inaugural professorial lecture as the first Bennett Professor of Evidence Based Medicine in the Nuffield Department of Primary Care Health Sciences at Oxford. I’m pleased to have helped Goldacre along this path.

Open Trials

A significant problem with clinical trials, so it seemed to me, was that if a patient or doctor wanted to know the full spectrum of information about a drug or a trial, it required an enormous amount of effort and insider knowledge to track down. For example, you’d have to know:

- How to search the medical literature,

- How to look up trials on ClinicalTrials.gov,

- How to check the FDA approval package (which is such a cumbersome process that the British Medical Journal once literally published an entire article with instructions on how to access FDA data),

- How to check for whether the European Medicines Agency had any additional information, and more.

It was literally impossible to find the whole set of information about any drug or treatment in one place.

So, an idea I discussed with Ben Goldacre was creating a database/website that would pull in information about drugs from all of those places and more. If you were curious about tamoxifen (the cancer drug) or paroxetine (the antidepressant), you’d be able to see the entire spectrum of all scholarly and regulatory information ever produced on that drug.

I ended up making a grant of $545,630 to the Center for Open Science to work with Open Knowledge International (now called the Open Knowledge Foundation) and Ben Goldacre on creating a platform called OpenTrials. In theory, this was a great idea, as you can read in our press release at the time, or this article that Ben Goldacre published with Jonathan Gray of Open Knowledge in 2016.

In practice, it was hard to juggle and coordinate three independent groups within one grant. Folks had different ideas about the technological requirements and the path for future development. So ultimately, the grant stalled and never produced anything that was very functional.

In retrospect, I still think it’s a great idea for something that ought to exist, but it is technically difficult and would require lots of ongoing maintenance. I shouldn’t have tried to patch together three independent groups that each had their own ideas.

The 2014 Controversy Over Replication in Psychology

As of 2014, psychologists were starting to get nervous. The Reproducibility Project in Psychology was well under way, thanks to our funding, and would eventually be published in Science in 2015. A fair number of prominent psychological researchers knew far too well (or so I suspected) that their own work was quite flawed and likely to be irreplicable.

As a result, the 2014 time period was marked by a number of controversies over the role of replication in science, as well as the attitude and tone of debates on social media. I’ll spend a bit of time describing the controversies, not because they were all that strategically important, but just because I personally found it fascinating. Also, to this day, no one has fully catalogued all of the back-and-forth accusations, much of which I found only by looking for old expired links in emails or documents, and then going to the Internet Archive.

Brian Nosek and Daniel Lakens led a small replication project for a special issue of the journal Social Psychology in 2014 (with some small awards drawn out of the main COS grant). One of the replications was of a 2008 article by European researcher Simone Schnall, in which she and her colleagues had found that people who were primed to think about cleanliness or to cleanse themselves “found certain moral actions to be less wrong than did participants who had not been exposed to a cleanliness manipulation.”

The replication experiments – with much bigger sample sizes – found no such effect. One of the replication authors wrote a blog post titled, “Go Big or Go Home.” Brian Nosek, with his customary approach to openness, posted a long document with many emails back and forth amongst Schnall, the replication team, and the journal editors—all with permission, of course.

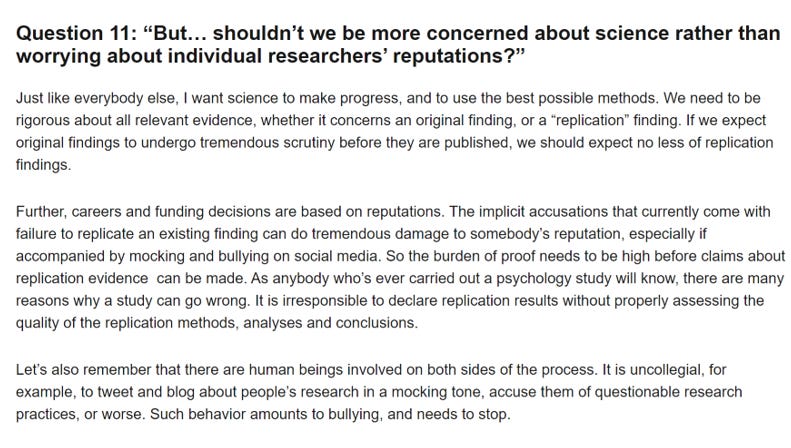

Simone Schnall then wrote a long blog post reacting to the replication and the accompanying commentary. She characterized her critics as bullies:

Here’s where it gets interesting.

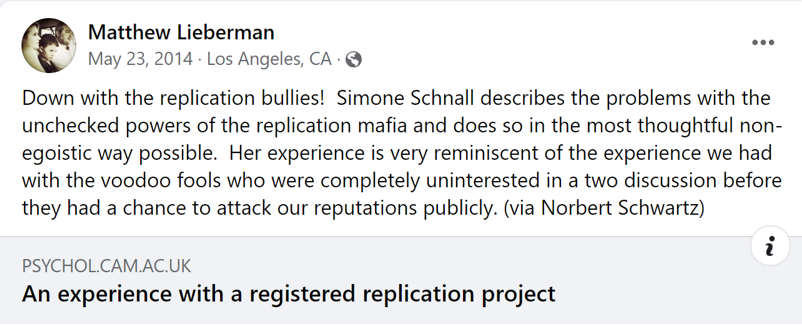

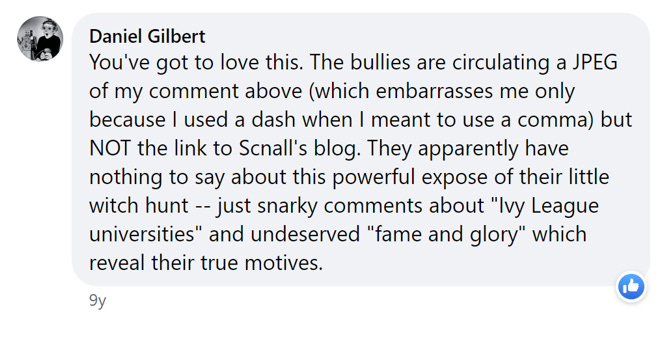

On May 23, 2014, UCLA neuroscientist Matthew Lieberman posted this on Facebook:

Dan Gilbert of Harvard—the same Dan Gilbert whose TED talks have had over 33 million views, has appeared in several television commercials for Prudential Insurance, and whose articles have been cited over 55,000 times—posted this in response:

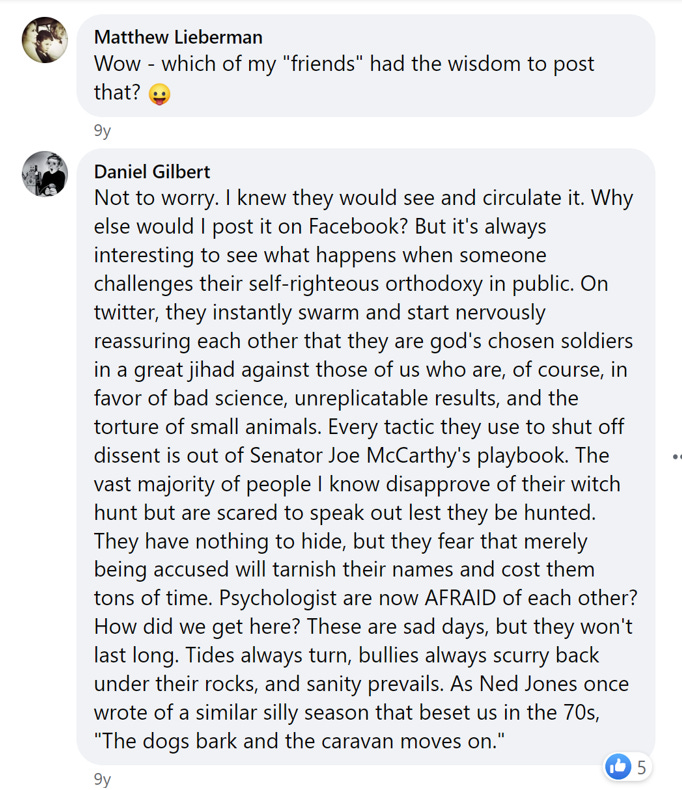

His comment about “second stringers” drew some attention elsewhere, and he then posted this:

That was followed by this:

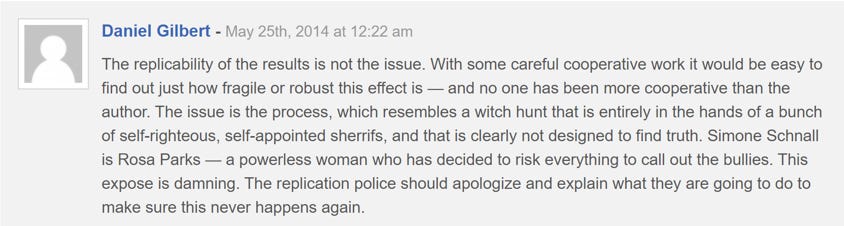

On the blog post by Schnall, Gilbert’s comments were even more inflammatory, if that were possible:

In other words, someone whose work had been replicated was—merely on that account—the equivalent of Rosa Parks standing up against bigots and segregationists.

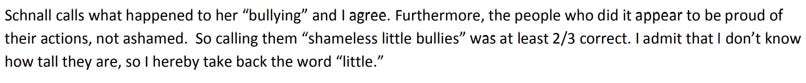

Gilbert also published an account on his own website, where he ended up concluding that calling replicators “shameless little bullies” was incorrect . . . because they might not be “little”:

I don’t bring all of this up to embarrass Gilbert—although I never saw that he retracted any insult other than the word “little,” or that he apologized for casting the replication debate in terms like “replication police,” “self-appointed sherrifs [sic],” “god’s chosen soldiers in a great jihad,” “Senator Joe McCarthy’s playbook,” “witch hunt,” “second stringers,” and “Rosa Parks.”

Instead, I bring it up to show the amount of vehement criticism in 2014 that was aimed at the Center for Open Science, Brian Nosek, and anyone trying to do replications. Since Gilbert was a prominent tenured professor at Harvard, he represented the height of the profession. We all assumed that there were other people who shared his views but were slightly less outspoken.

As for me, I not only took it in stride, but assumed that we at Arnold were doing good work. After all, replication is a core part of the scientific method. If folks like Gilbert were that upset, we must have struck a nerve, and one that deserved to be struck. To me, it was as if the IRS had announced that it would be auditing more tax returns of wealthy people, and then one wealthy person started preemptively complaining about a witch hunt while casting himself as Rosa Parks standing up against segregation—that person might well have something to hide.

It did seem important that replications were:

Led by tenured professors at major universities, and

Funded by an outside philanthropy with no particular ax to grind and no stake in maintaining the good wishes of all psychologists everywhere.

The former made the replication efforts harder to dismiss as mere sour grapes or as the work of “second stringers” (Dan Gilbert’s efforts to the contrary). The latter made us at Arnold completely impervious to the complaints. I wonder if a program officer at NSF who was on a two-year leave from being a tenured professor of psychology might feel differently about antagonizing prominent members of the psychology community.

Reproducibility Project: Psychology

That leads us to the Reproducibility Project: Psychology, which at the time was the most ambitious replication project in history (it has since been handily surpassed by a DARPA program that my friend Adam Russell launched in partnership with COS). The aim was to replicate 100 psychology experiments published in top journals in 2008. In the Center for Open Science grant, we included an extra $250,000 for completing the Reproducibility Project, which had started but was unlikely to be finished given the current trajectory.

The final results were published in Science in 2015. Only around 40% (give or take) of the 100 experiments could definitively be replicated, while the rest were mixed or else definitely not replicable. These results took the academic world by storm. As of this writing, Google Scholar estimates that the 2015 Science paper has been cited over 7,500 times. It is a rare occasion when I mention this project and someone hasn’t heard of it. At $250k, it was easily the highest impact grant I ever made.

When the project was done, I got an inspiring email from Brian Nosek (it has been publicly posted on a Google Group, so this is all public knowledge). The email thanked us for supporting the project, and forwarded an email to his team thanking them for the heroic work they had done in pushing the project forward to completion. I remember finding it powerful and emotionally moving at the time, and that remains true today. It’s long, but worth quoting.

From: bno...@gmail.com [bno...@gmail.com] on behalf of Brian Nosek [no...@virginia.edu]

Sent: Wednesday, April 22, 2015 9:58 PM

To: Stuart Buck; Denis Calabrese

Subject: Reproducibility ProjectDear Stuart and Denis --

Today we completed and submitted the manuscript presenting the Reproducibility Project: Psychology. My note to the 270 co-authors on the project is below. When you funded the Reproducibility Project (and COS more broadly), the collaboration was active, exciting, and a patchwork.

LJAF funding gave us the excuse and opportunity to make it a "serious" effort. And, even though this project represents a very small part of our operating expenses, that support made an enormous difference.

If we had not received LJAF support, this project would have been a neat experiment with maybe a few dozen completed replications and contestable results. It would have received plenty of attention because of its unique approach, but I am doubtful that it would have had lasting impact.

With LJAF support, we had one person full-time on the project for our first year (Johanna), and two people for our second (+Mallory). We gave grants to replication teams and nominal payments to many others who helped in small and large ways. We helped all of the replication teams get their projects done, and then we audited their work, re-doing all of their analyses independently. We supported a small team to make sure that the project itself demonstrated all of the values and practices that comprise our mission. And, I could devote the time needed to make sure the project was done well. The project has been a centerpiece in the office for our two years of existence. Even though few in the office are working on it directly, they are all aware of the importance that it has for the mission of the organization. LJAF's support transformed this project from one that would have gotten lots of attention, to one that I believe will have a transformative impact.

I often tell my lab and the COS team about one of my favorite quotes for how we do our business. Bill Moyers asked Steve Martin, "What's the secret of success?" Steve responded, "Be so damn good that they can't ignore you."

LJAF funding gave us the opportunity to do this project in the best way that we could. Regardless of predictions, my thanks to both of you, Laura, and John for supporting this project and giving us the opportunity to do it well. I am so proud of it. I am confident that the scientific community will not be able to ignore it.

Best,

Brian

---------- Forwarded message ----------

From: Brian Nosek <no...@virginia.edu>

Date: Wed, Apr 22, 2015 at 10:50 PM

Subject: This is the end, or perhaps the beginning?

To: "Reproducibility Project: Psychology Team" <cos-reproducibility...@lists.cos.io>

Colleagues --I am attaching the submitted version of our Reproducibility Project: Psychology manuscript. [Figures need a bit of tweaking still for final version, but otherwise done.]

The seeds of this project were brewing with a few small-scale efforts in 2009 and 2010, and officially launched on November 4, 2011. Now, 3.5 years later, we have produced what must be the largest collective effort in the history of psychology. Moreover, the evidence is strong, and the findings are provocative.

For years, psychology conferences, journals, social media, and the general media has been filled with discussion of reproducibility. Is there a problem? How big is the problem? What should we do about it? Most of that discussion has been based on anecdote, small examples, logical analysis, simulation, or indirect inferences based on the available literature. Our project will ground this discussion in data. We cannot expect that our evidence will produce a sudden consensus, nor should it. But, we should expect that this paper will facilitate and mature the debate. That alone will be a substantial contribution.

To me, what is most remarkable about this project is that we did it. 270 authors and 86 volunteers contributed some or a lot of their time to the Reproducibility Project. If researchers were just self-interested actors, this could not have occurred. In the current reward system, no one is going to get a job or keep a job as an author on this paper.

. . .

The project will get a lot of attention. That won't necessarily be positive attention all the time, but it will get attention. I will receive much more than my share of attention and credit than I deserve based on our respective contributions. For that, I apologize in advance. In part, it is an unavoidable feature of large projects/teams. There is strong desire to talk about/through a single person.

But, there are also things that we can do to reduce it, and amplify the fact that this project exists because of the collective action of all, not the individual contribution of any. One part of that will be getting as many of us involved in the press/media coverage about the project as possible. Another part will be getting many of us involved in the presentations, posters, and discussions with our colleagues. We can emphasize the team, and correct colleagues when they say "Oh, Brian's project?" by responding "Well, Brian and 269 others, including me." [And, if they hate the project, do feel free to respond "Oh, yes, Brian's project."]

We all deserve credit for this work, and we can and should do what we can to ensure that everyone receives it. Does anyone know how to easily create a short video of the author list as a credit reel that we could all put into presentations?

Here, it would be difficult to call-out the contributions of everyone. I will mention just a few:

* Elizabeth Bartmess moved this fledgling project into a real thing when she started volunteering as a project coordinator in its early days. She was instrumental in turning the project from an interesting idea to a project that people realized could actually get done.

* Alexander Aarts made countless contributions at every step of the process - coding articles, editing manuscripts, reviewing submission requirements, and on on on. Much of getting the reporting details right is because of his superb contributions.

* Marcel van Assen, Chris Hartgerink, and Robbie van Aert led the meta-analysis of all the projects, pushing, twisting, and cajoling the diverse research applications into a single dataset. This was a huge effort over the last many weeks. That is reflected in the extensive analysis report in the supplementary information.

* Fred Hasselman led the creation of the beautiful figures for the main text of the report and provided much support to the analysis team.

* And, OBVIOUSLY, Johanna Cohoon and Mallory Kidwell are the pillars of this project. Last summer, more than 2 years in, it looked we wouldn't get much past 30 completed replications. Johanna and Mallory buckled down, wrote 100 on the wall, and pursued a steady press on the rest of us to get this done. They sent you (and me) thousands of emails, organized hundreds of tasks, wrote dozens of reports, organized countless materials, and just did whatever needed to be done to hit 100. They deserve hearty congratulations for pulling this off. Your email inbox will be lonely without them.

This short list massively under-represents all of the contributions to the project. So, I just say thank you. Thank you for your contributions. I get very emotional thinking about how so many of you did so much to get this done. I hope that you are as proud of the output as I am.

Warm regards,

Brian

That was a long email. But worth it—I still remember how emotional I felt when first reading it years ago. By the way, Johanna Cohoon and Mallory Kidwell deserve more recognition as key figures who made this project a reality. To them and many others, we should be grateful.

Johanna Cohoon, Mallory Kidwell, Courtney Soderberg, and Brian Nosek, pictured in the New York Times in 2016.

Reproducibility Project: Cancer Biology

Along with psychology, cancer biology seemed like a discipline that was early to the reproducibility crisis. In 2011-12, both Amgen and Bayer published articles bemoaning how rarely they were able to reproduce the academic literature, despite wanting desperately to do so (in order to find a drug to bring to market).

The Amgen findings were particularly dismal—they could replicate the literature only 11% of the time (6 out of 53 cases). I heard a similar message in private from top executives at Pfizer and Abbvie, with the gist being: “We always try to replicate an academic finding before carrying it forward, but we can’t get it to work 2/3rds of the time.”

Keep in mind that while pharma companies have a reputation (often justified) for trying to skew the results of clinical trials in their favor, they have no incentive to “disprove” or “fail to replicate” early-stage lab studies. Quite the contrary—they have every incentive to figure out a way to make it work if at all possible, so that they can carry forward a research program with a solid drug candidate. The fact that pharma companies still have such trouble replicating academic work is a real problem.

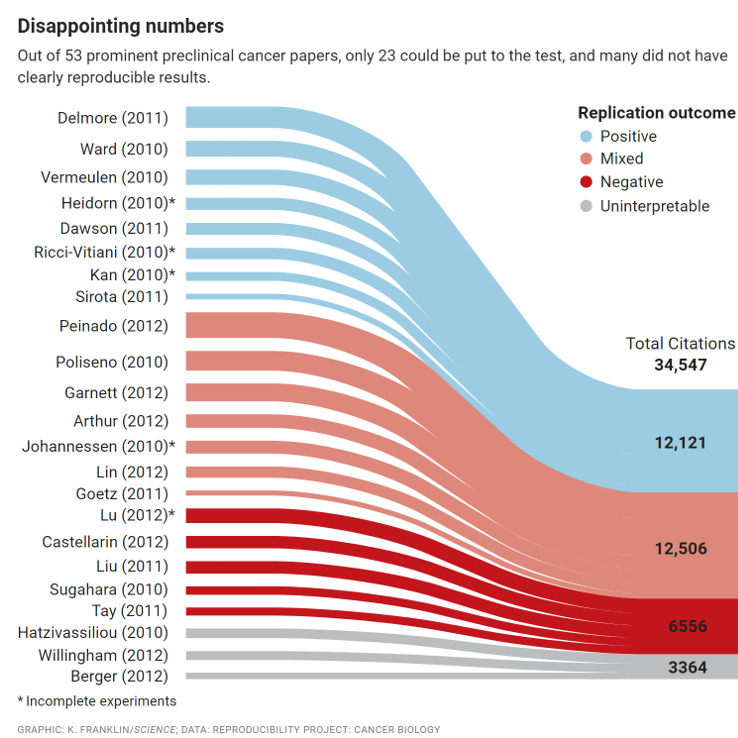

Around that time, Elizabeth Iorns launched the Reproducibility Initiative to address the problems of cancer biology. I ended up funding her in partnership with the Center for Open Science (where Tim Errington led the effort) to try to replicate 50 cancer biology papers from top journals (some coverage from Science at the time).

The project was quite a journey. It took longer than expected, cost more (they had to ask for supplemental funding at one point), and completed far fewer replications (only 23 papers, not 50). This might look like the project was inefficient in every possible way, but that wasn’t the case at all. The reason was that the underlying literature was so inadequate that the project leaders were never able to start a replication experiment without reaching out the original authors and labs. Never. Not even one case where they could just start up the replication experiment.

The original authors and labs weren’t always cooperative either—only 41% of the time. Indeed, the replicators were unable to figure out the basic descriptive and inferential statistics for 68% of the original experiments, even with the authors’ help (where provided)!

The end results? For studies where one could calculate an effect size (such as, how many days does a cancerous mouse survive with a particular drug?), the replication effect was on average 85% smaller than the original (e.g., the mouse survived 3 days rather than 20 days). And for studies where the effect was binary (yes-no), the replication rate was 46%. In any case, it looked like the original effects were overestimated compared to the replications.

As for what this implies for the field of cancer biology, see coverage in Wired, Nature, and Science. [Note: the coverage would have been far greater if not for the fact that the Washington Post, New York Times, and NPR all canceled stories at the last minute due to the Omicron wave.] Science had a useful graphic of which studies replicated and how often they have been cited in the field:

Science also quoted my friend Mike Lauer from NIH, whose thoughts mirror my own:

The findings are “incredibly important,” Michael Lauer, deputy director for extramural research at the National Institutes of Health (NIH), told reporters last week, before the summary papers appeared. At the same time, Lauer noted the lower effect sizes are not surprising because they are “consistent with … publication bias”—that is, the fact that the most dramatic and positive effects are the most likely to be published. And the findings don’t mean “all science is untrustworthy,” Lauer said.

True, not all science is untrustworthy. But at a minimum, we need to figure out how to prevent publication bias and a failure to report complete methods.

RIAT (Restoring Invisible and Abandoned Trials)

I first came across Peter Doshi due to his involvement in reanalyzing all of the clinical trial data as to Tamiflu, leading to the conclusion that governments around the world had stockpiled Tamiflu based on overestimates of its efficacy. Similarly, an effort to reanalyze the famous Study 329 on the efficacy of paroxetine (Paxil) to treat depression in adolescents showed that not only did the drug not work, there was an “increase in harms.” (GSK’s earlier attempt to market Paxil to adolescents–despite the fact that the FDA denied such approval--had been a key factor in a staggering $3 billion settlement with the federal government in 2012.) These and other examples suggested that misreported (or unreported) clinical trials could lead to significant public expense and even public harms.

Doshi and co-authors (including the famous Kay Dickersin) wrote a piece in 2013 calling for “restoring invisible and abandoned trials.” They gave numerous examples of trial information (drawn from documents made public via litigation or in Europe) that had been withheld from the public:

The documents we have obtained include trial reports for studies that remain unpublished years after completion (such as Roche’s study M76001, the largest treatment trial of oseltamivir, and Pfizer’s study A945-1008, the largest trial of gabapentin for painful diabetic neuropathy). We also have thousands of pages of clinical study reports associated with trials that have been published in scientific journals but shown to contain inaccuracies, such as Roche’s oseltamivir study WV15671, GlaxoSmithKline’s paroxetine study 329, and Pfizer’s gabapentin study 945-291. We consider these to be examples of abandoned trials: either unpublished trials for which sponsors are no longer actively working to publish or published trials that are documented as misreported but for which authors do not correct the record using established means such as a correction or retraction (which is an abandonment of responsibility) (box 1).Because abandonment can lead to false conclusions about effectiveness and safety, we believe that it should be tackled through independent publication and republication of trials.

In 2017, I was able to award a grant to Peter Doshi of $1.44 million to set up the RIAT Support Center—with RIAT standing for Restoring Invisible and Abandoned Trials. Doshi’s Center, in turn, made several smaller grants to outside researchers to reanalyze important clinical trials in areas ranging from cardiovascular disease to depression to nerve pain (gabapentin specifically).

I continue to think that this kind of work is vastly undersupplied. Few people have the resources, patience, or the academic incentive to spend years poring through potentially thousands of documents (including patient records) just to reanalyze one clinical trial to see whether the benefits and adverse events were accurately recorded. NIH should set aside, say, $5 million a year for this purpose, which be a pittance compared to what it spends on clinical trials each year (up to a thousand times more than that!).

REPEAT Initiative

I can’t remember how I first came across Sebastian Schneeweiss and his colleague Shirley Wang, both of whom holding leading roles at Harvard Medical School and at the Division of Pharmacoepidemiology and Pharmacoeconomics at Brigham and Women's Hospital.

I do remember inviting them down to Houston to meet with the Arnolds in order to pitch their idea: they wanted to review studies using “big data” in medicine (e.g., epidemiology, drug surveillance, etc.) in order to 1) assess the transparency of 250 of them, and 2) try to replicate 150 studies. Note: The replications here don’t mean rerunning experiments, but rerunning code on the same databases as in the original study.

This sort of systematic study seemed important, given that in the 21st Century Cures Act, the federal government had promoted the idea that drugs could be approved based on “real world evidence,” meaning “data regarding the usage, or the potential benefits or risks, of a drug derived from sources other than randomized clinical trials” (see section 3022 here).

How reliable is that “real world evidence” anyway? No one knew.

We ended up giving a $2 million grant to launch the REPEAT Initiative at Harvard, with REPEAT standing for “Reproducible Evidence: Practices to Enhance and Achieve Transparency.” A 2022 publication from this project noted that it was the “largest and most systematic evaluation of reproducibility and reporting-transparency for [real-world evidence] studies ever conducted.”

The overall finding was that the replication “effect sizes were strongly correlated between the original publications and study reproductions in the same data sources,” but that that there was room for improvement (since some findings couldn’t be reproduced even with the same methods and data).

This overall finding is an understatement, of course. If a drug is approved to market to the US population, what we really care about is whether the original finding still applies to new data/people/etc. If we could do a broader replication project, I’m fairly certain that we would find more discrepancies between what the FDA approved and what actually works in the real world.

The researchers at the REPEAT Initiative (Wang and Schneeweiss) since procured an award from FDA to use health claims databases to try to replicate key RCTs. Their initial results show that a “highly selected, nonrepresentative sample” of “real-world evidence studies can reach similar conclusions as RCTs when design and measurements can be closely emulated, but this may be difficult to achieve.” They also note that “emulation differences, chance, and residual confounding can contribute to divergence in results and are difficult to disentangle.”

In other words, the political enthusiasm for “real-world evidence” as a substitute for rigorous RCTs (rather than a complement) is overly naïve—at least as it would be implemented, well, in the real world.

Publication Standards at the journal Nature

Around 2014, Malcolm McLeod, a professor of Neurology and Translational Neuroscience at the University of Edinburgh (and one of the most hilariously droll people I’ve ever met) wanted to evaluate Nature’s new editorial requirements for research in the life sciences.

I gave him a grant of $82,859 to do so. He and his colleagues compared 394 articles in the Nature set of journals to 353 similar articles in other journals, including articles both before and after the change in Nature’s standards. The team looked at the number of articles that satisfied four criteria (information on randomization, blinding, sample size, and exclusions).

The findings were that in Nature-related articles, the number of articles that met all four criteria went from 0% to 16.4% after the change in guidelines, but the number of such articles in other journals stayed near zero over the whole timeframe.

In other words, a change in journal guidelines increased the number of articles that met basic quality standards, but there was still a long way to go. Thus, their findings encapsulated the entire open science movement: Some progress, but lots more to come.

Computer Science

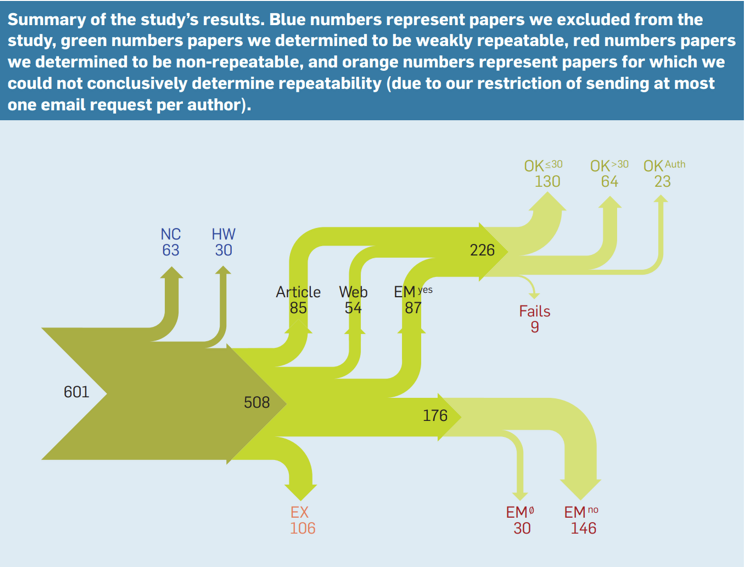

In 2015, I made a grant of $357,468 to Todd Proebsting and Christian Collberg at the University of Arizona to do a longitudinal study of how computer scientists share data and research artifacts. They published a study in 2016 (also here) on “repeatability in computer systems research,” with the findings represented in this colored/numbered flow chart:

They also produced this website cataloguing 20,660 articles from 78,945 authors (at present) and the availability of code and data. To repeat myself, this seems like the sort of thing that NSF ought to support in perpetuity.

Transcranial Direct Current Stimulation

In 2015, I gave the Center for Open Science a grant of $41,212 to work with another researcher to try to replicate one or more experiments using transcranial direct current stimulation, a popular but controversial treatment where replication seemed like it would turn up useful insights (as shown by subsequent studies). This grant was a failure: The outside researcher never completed the project. Not much more to say about this one.

II. Make It Possible and Make It Easy: software, databases, and other tools to help improve reproducibility

One of the most important ways to improve science (so I thought) was to provide better tools, software, etc., so that scientists would have more readily available ways to do good work. After all, one of the most common excuses for failing to share data/methods/code was that it was just too hard and time-consuming. If we could help remove that excuse, perhaps more scientists would engage in good practices.

Open Science Framework

A significant part of the funding for the Center for Open Science was always the development of the Open Science Framework software/website. To this day, COS’s staff includes 15+ people who work on software and product development.

What was OSF? From the beginning, I saw it as a software platform that allowed scientists to create a wiki-style page for each research project, share with collaborators, track changes over time, and more. Along the way, the platform also enabled preregistration (by freezing in time the current status of a given project) and preprints (by allowing access to PDFs or other versions of articles). Indeed, its SocArXiv preprint service currently hosts over 13,000 papers as a free and open source alternative to SSRN (the Social Science Research Network), and OSF is home to around 140,000 preregistrations.

As other examples, OSF was recently listed as one of the few data repositories that the NIH recommends for complying with its new data-sharing policy. Among many other institutional partners, the Harvard Library recommends OSF to the Harvard community, and offers ways to link OSF to a Harvard account (see here).

I’ll quote from a Twitter thread by Brian Nosek from last August on the occasion of OSF having 500,000 registered users:

How much has open science accelerated?

OSF launched in 2012. In its first year, we had less than 400 users. In 2022, OSF averages 469 new registered users per day, every day.

OSF consumers don't need an account. More than 13M people have consumed OSF content so far in 2022.

Other daily averages for 2022 so far:

- 202 new projects every day

- 9105 files posted every day

- 5964 files made publicly accessible every day

- 93 studies registered every day

Vivli

Clinical trials in medicine always seemed to me like the quintessential case where we ought to have the full evidence before us. Sure, if some trendy social psychology studies on power posing and the like were p-hacked, that would be troubling and we might end up buying the wrong book in an airport bookstore or being too credulous at a TED talk. But when medical trials were faked or p-hacked, cancer patients might end up spending thousands on a drug that didn’t actually work or that even made things worse. The stakes seemed higher.

In the 2013-14 timeframe, I noticed that there were many efforts to share clinical trial data—too many, in fact. The Yale Open Data Alliance was sharing data from Medtronic and J&J trials; Duke was sharing data from Bristol; Pfizer had its own Blue Button initiative; GSK had set up its own site to share data from GSK and other companies; and there were more.

Sharing data is most useful if done on a universal basis where everyone can search, access, and reanalyze data no matter what the source. That wasn’t occurring here. Companies and academia were all sharing clinical trial data in inconsistent ways across inconsistent websites. If someone wanted to reanalyze or do a meta-analysis on a category of drugs (say, antidepressant drugs from multiple companies), there was no way to do that.

Thus, I had the thought: What if there were a one-stop-shop for clinical trial data? A site that hosted data but also linked to and pulled in data from all the other sites, so that researchers interested in replication or meta-analysis could draw on data from everywhere? They would be far better able to do their work.

I asked around: Was anyone doing this? If not, why not? The answer was always: “No, but someone should do this!”

I ended up giving a small grant to Barbara Bierer at Harvard (and Brigham and Women’s Hospital in Boston) at the Multi-Regional Clinical Trials Center at Brigham and Women's Hospital and Harvard to have a conference on clinical trial data-sharing. The conference was well-attended, and I remember seeing folks like Deb Zarin, then the director of Clinicals.gov, and Mike Lauer, who was then at NHLBI and now is the Director of Extramural Research at NIH.

After that, I gave a planning grant of $500,000 to Bierer and her colleagues to help create a clinical trial data-sharing platform, plus a $2 million grant in 2017 to actually launch the platform. The result was Vivli. After a $200k supplemental grant in 2019, Vivli was on its own as a self-sufficient platform. As of 2023, Vivli shares data from 6,600+ clinical trials with over 3.6 million participants.

By those metrics, Vivli is by far the biggest repository for clinical trial data in the world. But not enough people request Vivli data. The last I heard, the number of data requests was far less than one per trial.

This leads to a disturbing thought about platforms and policies on data-sharing (for example, the NIH’s new data-sharing rule that went into effect in January 2023). That is, why should we spend so much effort, money, and time on sharing data if hardly anyone else wants to request and reuse someone else’s data in the first place? It’s an existential question for folks who care about research quality—what if we built it and no one came?

That said, we could improve both PhD education and the reuse of data in one fell swoop: If you’re doing a PhD in anything like biostatistics, epidemiology, etc., you should have to pick a trial or publication, download the data from a place like Vivli or various NIH repositories, and try to replicate the analysis (preferably exploring additional ideas as well).

Who knows what would happen—perhaps a number of studies would stand up, but others wouldn’t (as seen in a similar psychology project). In any event, the number of replications would be considerable, and it would be valuable training for the doctoral students. Two birds, one stone.

Declare Design

One key problem for high-quality research is that while there are many innovations as to experimental design, fixed effects, difference-in-differences, etc., there often isn’t a good way to do analyze these designs ahead of time. What will be your statistical power? The possible effects of sampling bias? The expected root mean squared error? The robustness across multiple tests? For these and many other questions, there might not be any simple numerical solution.

Enter DeclareDesign. The idea behind DeclareDesign is that you “declare” in advance the “design” of your study, and then the program does simulations that help you get a handle on how that study design will work out.

The folks behind DeclareDesign were all political scientists, whom I met through an introduction from Don Green around 2015 or so:

- Graeme Blair, Associate Professor of Political Science at UCLA.

- Jasper Cooper, then in the middle of a PhD in political science at Columbia, and now a Portfolio Lead at the Office of Evaluation Sciences at the General Services Administration.

- Alexander Coppock, Associate Professor of Political Science at Yale.

- Macartan Humphreys, at the time a Professor of Political Science at Columbia, and currently director of the Institutions and Political Inequality group at the WZB Berlin Social Science Center and honorary professor of social sciences at Humboldt University.

In 2016, they put out the first draft of an article on their idea. It was eventually published in the American Political Science Review in 2019. As they write:

Formally declaring research designs as objects in the manner we describe here brings, we hope, four benefits. It can facilitate the diagnosis of designs in terms of their ability to answer the questions we want answered under specified conditions; it can assist in the improvement of research designs through comparison with alternatives; it can enhance research transparency by making design choices explicit; and it can provide strategies to assist principled replication and reanalysis of published research.

I gave them a grant of $447,572 in 2016 to help build out their software, which is primarily written in R, but the grant was also intended to help them build a web interface that didn’t require any coding ability (DDWizard, released in 2020).

The software got a positive reception from social scientists across different disciplines. A flavor of the reactions:

- A major university professor once wrote to me DeclareDesign was an "incredible contribution to academic social science research and to policy evaluation."

- A Dartmouth professor posted on Twitter that “it’s an amazing resource by super smart people for research design and thinking through our theories and assumptions.”

- A political scientist at Washington University-St. Louis wrote: “It is remarkable how many methods questions I answer these days just by recommending DeclareDesign. Such an awesome piece of software.”

- An Oxford professor of neuropsychology wrote: “It's complex and difficult at first, but it does a fantastic job of using simulations not just to estimate power, but also to evaluate a research design in terms of other things, like bias or cost.”

I do think that this was a good investment; beyond Twitter anecdotes, DeclareDesign won the 2019 “Best Statistical Software Award” from the Society for Political Methodology. Blair, Coppock and Humphreys are about to publish a book on the whole approach: Research Design in the Social Sciences. I hope that DeclareDesign continues to help scholars design the best studies possible.

Center for Reproducible Neuroscience

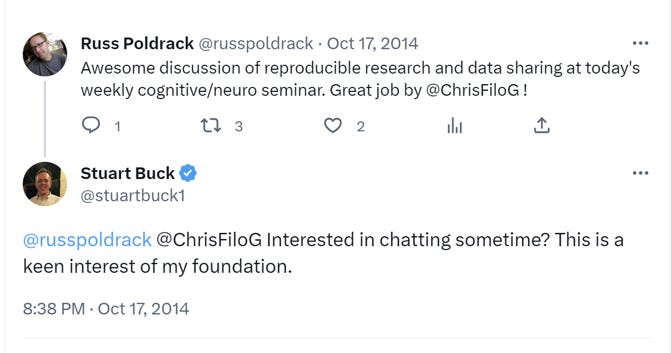

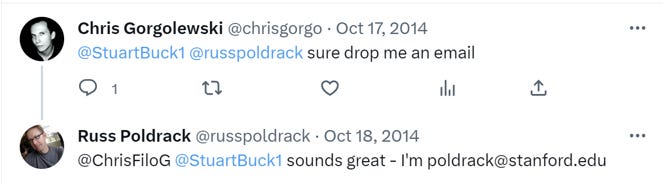

Here is a grant that can be traced to the power of Twitter. In Oct. 2014, I noticed a tweet by Russ Poldrack at Stanford. Poldrack and I had the following interaction:

Russ and I talked, along with his colleague Krzysztof (Chris) Gorgolewski. Long story short, I was able to give them a grant of $3.6 million in 2015 to launch the Stanford Center for Reproducible Neuroscience. The intent was to promote data sharing in neuroscience, as well as higher data quality standards for a field that had been plagued by criticisms for quite some time (see the infamous 2009 paper involving a brain scan of dead fish). The critiques continue to this day (see a recent Nature study showing that most brain-wide association studies are vastly underpowered).

The project has been quite successful in the years since. First, the OpenNeuro archive that was the main focus of the project was launched in 2017. It has grown consistently since then, and now hosts brain imaging data from more than 33,000 individuals and 872 different datasets. It is one of the official data archives of the BRAIN Initiative, and just received its second five-year round of NIH funding (which will go from 2023-2028).

Second, the Brain Imaging Data Structure (or BIDS) standard that was developed to support data organization for OpenNeuro has been remarkably successful. When Chris Gorgolewski left academia in 2019, it became a community project with an elected Steering Group and well over 100 contributors to date. One of the ideas that Chris developed was the "BIDS App" which is a containerized application that processes a BIDS dataset. One of these, fMRIPrep, has been widely used—according to Poldrack, there had been over 4,000 successful runs of the software per week over the last 6 months.

Indeed, a draft article on the history of the BIDS standard—which is now expected practice in neuroscience—includes this sentence: “The birth of BIDS can be traced back ultimately to a Twitter post by Russ Poldrack on October 17, 2014.” The paragraph then discusses the interaction between me and Russ (shown above).

This story shows not just the power of Twitter, but the power of publicly sharing information and ideas about what you’re working on—you never know who will read it, and start a conversation that leads to something like whole new set of data standards for neuroscience.

Stealth Software Technologies

My friend Danny Goroff (usually at the Sloan Foundation, but currently at the White House) introduced me to the topic of privacy-preserving computation, such as secure multi-party computation or fully homomorphic encryption. It would take too long to explain here, but there are ways that you could write a regression equation and compute it on data that remains entirely private to you the entire time, and there are ways to match and merge data from sources that keep their data private the whole time.

The possibilities seemed endless. One of the biggest obstacles to evidence-based policy is how hard it is to access private data on sensitive issues (health, criminal justice, etc.), let alone match and merge that data with other sources (e.g., income or schooling) so that you can research how housing/schooling affects health or criminal outcomes.

It seemed like every researcher working in these areas had to spend way too much time and money on negotiating relationships with individual state/federal agencies and then figuring out the legalities of data access. If there was a way to do all of this computation while keeping the underlying data private the entire time, it might be a shortcut around many of the legal obstacles to working with highly private data.

I spent a year (in my spare time) reading books on computation and encryption before I finally felt comfortable pitching a grant to the Arnolds on this topic. I also talked to Craig Gentry, who invented fully homomorphic encryption in his graduate dissertation (and then went to work for IBM).

I ended up making a grant of $1,840,267 to Stealth Software Technologies, a company formed by Rafail Ostrovsky of UCLA (one of the giants in the field) and Wes Skeith. Ostrovsky had primarily been funded by DARPA. The grant included working with ICPSR, the Inter-university Consortium for Political and Social Research, one of the largest data repositories in the world for social science data. Our goal was to find ways to do social science research that combined data from two different sources (e.g., an education agency and a health agency) while keeping data provably private the entire time.

The result was an open-source set of software (available here), although it still needs more explanation, marketing, and use. The team has given talks at NIST on secure computation, and has worked with Senator Ron Wyden on proposing the Secure Research Data Network Act, which would authorize a multi-million pilot at NSF using secure multi-computation to help create much more “research that could support smart, evidence-based policymaking.”

The same group (Stealth Software Technologies and George Alter) have also engaged in a DARPA-funded collaboration with the state of Virginia to produce a working version of the cryptographic software that will be used on a regular basis. The goal in the Virginia government is to get more agency participation in sharing private data and/or improving the capabilities of the Virginia Longitudinal Data System.

III. Make It Normative

Journalists/Websites

As much as everyone says that “science is self-correcting,” an ironic downside of the academic incentive system is that there are essentially no rewards for actively calling out problems in a given field (as my friend Simine Vazire and her spouse Alex Holcombe have written). There are no tenure-track positions in “noticing replicability issues with other people’s research,” no academic departments devoted to replication or the discovery of academic fraud, and very few government grants (if any). On top of that, as we’ve seen above, people who devote hundreds or thousands of hours to replication or uncovering fraud often find themselves with no academic job while being threatened with lawsuits.

It is a thankless task. “Science is self-correcting”—sure, if you don’t mind being an outcast.

Yet public correction is necessary. Otherwise, individual scientists or even entire academic fields can coast for years or decades without being held to account. Public scrutiny can create pressure to improve research standards, as has been seen in fields such as medicine and psychology. That’s why one line of Arnold funding was devoted to supporting websites and journalists that called out research problems.

Retraction Watch

One oddity in scholarly communication is that even when articles are retracted, other scholars keep citing them. Historically, there just wasn’t a good way to let everyone know, “Stop citing this piece, except as an example of retracted work.” But now that we have the Internet, that isn’t really an excuse.

As it turns out, journal websites often don’t highlight the fact that an article was retracted. Thus, one article that reviewed papers from 1960-2020 found that “retraction did not change the way the retracted papers were cited,” and only 5.4% of the citations even bothered to acknowledge the retraction. There are many more articles on this unfortunate phenomenon (see here and here).

Retraction Watch is one of the most successful journalistic outlets focused on problematic research since its launch in 2010 by Ivan Oransky and Adam Marcus. Marcus, the editorial director for primary care at Medscape, is the former managing editor of Gastroenterology & Endoscopy News, and has done a lot of freelance science journalism. Oransky, the editor in chief of Spectrum, a publication about autism research, is also a Distinguished Writer In Residence at New York University’s Carter Journalism Institute, and has previously held leadership positions at Medscape, Reuters Health, Scientific American, and The Scientist. It has published thousands of blog posts (or articles) on problematic research.

In 2014, John Arnold emailed me about this Retraction Watch post asking for donations. I looked into it, and eventually Retraction Watch pitched me on a database of retractions in the scholarly literature in order to help scholars keep track of what not to cite. In 2015, we awarded Retraction Watch a grant of $280,926 to partner with the Center for Open Science on that effort. The database is available here, and as a recent post stated:

Our list of retracted or withdrawn COVID-19 papers is up to more than 300. There are now 41,000 retractions in our database — which powers retraction alerts in EndNote, LibKey, Papers, and Zotero. The Retraction Watch Hijacked Journal Checker now contains 200 titles. And have you seen our leaderboard of authors with the most retractions lately — or our list of top 10 most highly cited retracted papers?

In other words, the Retraction Watch database has been created and populated with 41,000+ entries, and has partnered with several citation services to help scholars avoid citing retracted articles. Indeed, a recent paper found the following: "reporting retractions on RW significantly reduced post-retraction citations of non-swiftly retracted articles in biomedical sciences."

Even by 2018, there were many interesting findings from this database, as documented in a Science article. The Science article also quotes a notable metascience scholar (Hilda Bastian):

Such discussions underscore how far the dialogue around retractions has advanced since those disturbing headlines from nearly a decade ago. And although the Retraction Watch database has brought new data to the discussions, it also serves as a reminder of how much researchers still don't understand about the prevalence, causes, and impacts of retractions. Data gaps mean "you have to take the entire literature [on retractions] with a grain of salt," Bastian says. "Nobody knows what all the retracted articles are. The publishers don't make that easy."

Bastian is incredulous that Oransky's and Marcus's "passion project" is, so far, the most comprehensive source of information about a key issue in scientific publishing. A database of retractions "is a really serious and necessary piece of infrastructure," she says. But the lack of long-term funding for such efforts means that infrastructure is "fragile, and it shouldn't be."

I obviously agree. One of my perpetual frustrations was that even though NIH and NSF were spending many times Arnold’s entire endowment every single year, comparatively little is spent on infrastructure or metascience efforts that would be an enormous public good. Instead, we at Arnold were constantly in the position of awarding $300k to a project that, in any rational system, would be a no-brainer for NIH or NSF to support at $1 or $2 million a year forever.

PubPeer

PubPeer is a discussion board that was launched in 2012 as a way for anonymous whistleblowers to point out flaws or fraud in scientific research. It has since become a focal point for such discussions. While anonymous discussion boards can be fraught with difficulties themselves (i.e., people who just want to harass someone else, or throw out baseless accusations), PubPeer seemed to take a responsible approach—it requires that comments be based on “publicly verifiable information” and that they not include “allegations of misconduct.” Are there occasions where an anonymous commenter might have valuable inside information about misconduct? For sure. But valid comments could be lost in a swarm of trolls, saboteurs, etc. PubPeer decided to focus only on verifiable problems with scientific data.

I initially gave PubPeer a grant of $412,800 in 2016 as general operating support; this was followed by a $150,000 general operating grant in 2019. The 2016 grant enabled PubPeer to “hire a very talented new programmer to work on the site full-time and completely rewrite it to make it easier to add some of the ideas we have for new features in the future.” In particular, a previous lawsuit had tried to order PubPeer to provide information about commenters who had allegedly cost a professor a job due to research irregularities (or possible fraud). PubPeer used our funding to revamp so as to be completely unable “to reveal any user information if we receive another subpoena or if the site is hacked.” As well, thanks to Laura Arnold’s membership on the ACLU’s national board, I was able to introduce PubPeer to folks at the ACLU who helped defend the lawsuit.

While it can often be difficult to know the counterfactual in philanthropy (“would the same good work have happened anyway?), I’m fairly confident in this case that without our grant and the introduction to the ACLU, PubPeer would likely have been forced to shut down.

I thought, and still think, that this sort of outlet is enormously useful. Just last year, a major scandal in Alzheimer’s research was first exposed by commenters on PubPeer—not by grant peer review, journal peer review, or by the NIH grant reports that had been submitted over a decade before. And as I say throughout, it seems like a no-brainer for NIH or NSF to support this sort of effort with $1-2 million a year indefinitely. We have very few ways of collecting whistleblower information about fraud or other research problems that many people (particularly younger scientists) might be reluctant to bring up otherwise.

HealthNewsReview

HealthNewsReview (now defunct) was a website run by Gary Schwitzer, a former medical correspondent for CNN and journalism professor at the University of Minnesota. The focus was providing regular, structured critiques of health/science journalism. In 2008, Ben Goldacre had written about a HealthNewsReview study showing that out of 500 health articles in the United States, only 35% had competently “discussed the study methodology and the quality of the evidence.” The site had come up with 10 criteria for responsible journalism about health/science studies, such as whether the story discusses costs, quantifies benefits in meaningful terms, explains possible harms, compares to existing alternatives, looks for independence and conflicts of interest, reviews the study’s methodology and evidentiary quality, and more.

The site seemed to be widely respected among health journalists, but had run out of funding. I thought their efforts were important and worth reviving. As a result, in 2014, I gave a $1.24 million grant to the University of Minnesota for Gary Schwitzer’s efforts at HealthNewsReview, and this was followed by a $1.6 million grant in 2016.

One of their most widely-known successes was the case of the “chocolate milk, concussion” story. In late 2015, the University of Maryland put out a press release claiming that chocolate milk “helped high school football players improve their cognitive and motor function over the course of a season, even after experiencing concussions.” A journalist who worked for HealthNewsReview started asking questions, such as “where is the study in question?”

Boy, was he in for a surprise when the Univ. of Maryland called him back:

The next morning I received a call from the MIPS news office contact listed on the news release, Eric Schurr. What I heard astounded me. I couldn’t find any journal article because there wasn’t one. Not only wasn’t this study published, it might never be submitted for publication. There wasn’t even an unpublished report they could send me.