bruce

Bio

Doctor from NZ, independent researcher (grand futures / macrostrategy) collaborating with FHI / Anders Sandberg. Previously: Global Health & Development research @ Rethink Priorities.

Feel free to reach out if you think there's anything I can do to help you or your work, or if you have any Qs about Rethink Priorities! If you're a medical student / junior doctor reconsidering your clinical future, or if you're quite new to EA / feel uncertain about how you fit in the EA space, have an especially low bar for reaching out.

Outside of EA, I do a bit of end-of-life care research and climate change advocacy, and outside of work I enjoy some casual basketball, board games and good indie films. (Very) washed-up classical violinist and Oly-lifter.

All comments in personal capacity unless otherwise stated.

Posts 9

Comments 134

While I agree that both sides are valuable, I side with the anon here - I don't think these tradeoffs are particularly relevant to a community health team investigating interpersonal harm cases with the goal of "reduc[ing] risk of harm to members of the community while being fair to people who are accused of wrongdoing".

One downside of having the badness of, say, sexual violence[1] be mitigated by the accused person's perceived impact (how is the community health team actually measuring this? How good someone's forum posts are? Whether they work at an EA org? Whether they are "EA leadership"?) when considering the appropriate action (if this is happening) is that it plausibly leads to different standards for bad behaviour. By the community health team's own standards, taking someone's potential impact into account as a mitigating factor seems like it could increase the risk of harm to members of the community (by not taking sufficient action, justified by perceived impact), while also being less fair to people who are accused of wrongdoing. To be clear, I'm basing this off the forum post, not any non-public information.

Additionally, a common theme in basically every sexual violence scandal that I've read about is that there were (often multiple) warnings beforehand that were not taken seriously.

If there is a major sexual violence scandal in EA in the future, it will be pretty damning if the warnings and concerns were clearly raised, but the community health team chose not to act because they decided it wasn't worth the tradeoff against the person/people's impact.

Another point is that people who are considered impactful are likely to be somewhat correlated with people who have gained respect and power in the EA space, have seniority or leadership roles etc. Given the role that abuse of power plays in sexual violence, we should be especially cautious of considerations that might indirectly favour those who have power.

More weakly, even if you hold the view that it is in fact the community health team's role to "take the talent bottleneck seriously; don’t hamper hiring / projects too much" when responding to, say, a sexual violence allegation, it seems like it would be easy to overvalue the badness of the immediate action against the person's impact, and undervalue the badness of many more people opting not to get involved, or distancing themselves from the EA movement because they perceive it to be an unsafe place for women, with unreliable ways of holding perpetrators accountable.

That being said, I think the community health team has an incredibly difficult job, and while they play an important role in mediating community norms and dynamics (and thus have a corresponding amount of responsibility), it's always easier to make critical comments than to make the difficult decisions they have to make. I'm grateful they exist, and don't want my comment to come across as an attack on the community health team or its individuals!

(commenting in personal capacity etc)

If this comment is more about "how could this have been foreseen", then this comment thread may be relevant. I should note that hindsight bias makes it much easier to look back and judge problems as obvious and predictable ex post; powerful investment firms and individuals who also had skin in the game missed this too.

TL;DR:

1) There were entries that were relevant (this one also touches on it briefly)

2) They were specifically mentioned

3) There were comments relevant to this. (notably one of these was apparently deleted because it received a lot of downvotes when initially posted)

4) There have been at least two other posts on the forum prior to the contest that engaged with this specifically

My tentative take is that these issues were in fact identified by various members of the community, but there isn't a good way of turning identified issues into constructive action. The status quo is that we just have to trust that organisations have good systems in place for this, and that EA leaders are sufficiently careful and willing to make changes or consider them seriously, such that all the community needs to do is "raise the issue". I think the systems within the relevant EA orgs or leadership are what investigations or accountability questions going forward should focus on. All individuals are fallible, and we should be looking at how to put systems in place such that the community doesn't have to just trust that the people who have power and are steering the EA movement will get it right, and such that there are ways for the community to hold them accountable to their ideals or stated goals if these appear not to be, or risk not being, realised in practice.

That is, if there are good processes and systems in place, and documentation of these processes and decisions, then missing this is more acceptable (because other organisations that probably have very good due diligence processes also missed it). But if there weren't good processes, or if these decisions weren't careful and intentional, then that's comparatively more concerning, especially in the context of specific criticisms that have been raised,[1] or previous precedent. For example, I'd be especially curious about the events surrounding Ben Delo,[2] and the processes that were implemented in response. I'd be curious about whether there are people in EA orgs involved in steering who keep track of potential risks and early warning signs for the EA movement, in the same way the EA community advocates for in the case of pandemics, AI, or even general ways of finding opportunities for impact. For example, SBF, who is listed as an EtG success story on 80,000 Hours, has publicly stated he's willing to go 5x over the Kelly bet, and described yield farming in a way that Matt Levine interpreted as a Ponzi. Again, I'm personally less interested in the object-level decision (e.g. whether SBF's Kelly bet comments should be taken seriously, or whether Levine's interpretation was appropriate), and more interested in what the process was, how this was considered at the time with the information they had, etc. I'd also be curious about the documentation of any SBF-related concerns that were raised by the community, if any, and how these concerns were managed and considered (as opposed to critiquing the final outcome).
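For readers less familiar with the Kelly criterion, a minimal sketch of why "5x over the Kelly bet" stands out: for a repeated binary bet, the Kelly fraction is the stake that maximises the expected log growth of a bankroll, and staking well above it makes expected log growth negative, so the bankroll tends to shrink over many bets. The 55% / even-odds bet below is a hypothetical illustration, not anything SBF described, and the function names are my own.

```python
import math


def kelly_fraction(p: float, b: float) -> float:
    """Kelly-optimal fraction of bankroll for a binary bet.

    p: probability of winning; b: net odds (you win b per 1 staked).
    """
    q = 1.0 - p
    return (b * p - q) / b


def log_growth(f: float, p: float, b: float) -> float:
    """Expected log growth of the bankroll per bet when staking fraction f."""
    q = 1.0 - p
    if f >= 1.0:
        # Staking the whole bankroll (or more) means a single loss is ruin.
        return float("-inf")
    return p * math.log(1.0 + b * f) + q * math.log(1.0 - f)


# Hypothetical bet: 55% chance of winning at even odds (b = 1).
p, b = 0.55, 1.0
f_star = kelly_fraction(p, b)  # about 0.1 of the bankroll
for multiple in (1, 2, 5):
    f = multiple * f_star
    print(f"{multiple}x Kelly (f={f:.2f}): growth per bet = {log_growth(f, p, b):+.4f}")
```

With these hypothetical numbers, growth per bet is positive at 1x Kelly, roughly zero (very slightly negative) at 2x, and clearly negative at 5x.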

Outside of due diligence and ways to support whistleblowers, decision-making processes around the steering of the EA movement are crucial as well. When orgs make decisions that bring clear benefits to one part of the EA community while bringing clear risks that are shared across wider parts of the EA community,[3] it would probably be valuable to look at how these decisions were made and what tradeoffs were considered at the time. Going forward, it would be worth thinking about how to either diversify those risks or make decision-making more inclusive of a wider range of stakeholders,[4] keeping in mind the best interests of the EA movement as a whole.

(this is something I'm considering working on in a personal capacity along with the OP of this post, as well as some others - details to come, but feel free to DM me if you have any thoughts on this. It appears that CEA is also already considering this)

If this comment is about "are these red-teaming contests in fact worth the money and time put into them, if they miss problems like this":

I think my view here (speaking only for the red-teaming contest) is that even if this specific contest was framed in a way that missed these classes of issues, the value of the very top submissions[5] may still have made the effort worthwhile. The potential value of a different framing was mentioned by another panelist. If red-teaming contests are systematically missing this class of issues regardless of framing, then I agree that would be pretty useful to know, but I don't have a good sense of how we would investigate this.

- ^

This tweet seems to have aged particularly well. Despite supportive comments from high-profile EAs on the original forum post, the author seemed disappointed that nothing came of it in that direction. Again, without getting into the object-level discussion of the claims of the original paper, it's still worth asking questions about the processes. If there were actions planned, what did these look like? If not, was that because of a disagreement over the suggested changes, or over the extent to which it was an issue at all? How were these decisions made, and what was considered?

- ^

Apparently a previous EA-aligned billionaire (?donor) who got rich by starting a crypto trading firm, and who pleaded guilty to violating the Bank Secrecy Act

- ^

Even before this, I had heard from a primary source in a major mainstream global health organisation that there were staff who wanted to distance themselves from EA because of misunderstandings around longtermism.

As requested, here are some submissions that I think are worth highlighting, or that we considered awarding but that ultimately did not make the final cut. (This list is non-exhaustive, and should be taken more lightly than the Honorable mentions, because by definition these posts are less strongly endorsed by the judges. Also commenting in personal capacity, not on behalf of other panelists, etc.):

Bad Omens in Current Community Building

I think this was a good-faith description of some potential / existing issues that are important for community builders and the EA community, written by someone who "did not become an EA" but chose to go to the effort of providing feedback with the intention of benefitting the EA community. While these problems are difficult to quantify, they seem important if true, and pretty plausible based on my personal priors/limited experience. At the very least, this starts important conversations about how to approach community building that I hope will lead to positive changes, and a community that continues to strongly value truth-seeking and epistemic humility, which is personally one of the benefits I've valued most from engaging in the EA community.

Seven Questions for Existential Risk Studies

It's possible that the length and academic tone of this piece detract from the reach it could have, and it (perhaps aptly) leaves me with more questions than answers, but I think the questions are important to reckon with, and this piece covers a lot of (important) ground. To quote a fellow (more eloquent) panelist, whose views I endorse: "Clearly written in good faith, and consistently even-handed and fair - almost to a fault. Very good analysis of epistemic dynamics in EA." On the other hand, this is likely less useful to those who are already very familiar with the ERS space.

Most problems fall within a 100x tractability range (under certain assumptions)

I was skeptical when I read this headline, and while I'm not yet convinced that a 100x tractability range should be used as a general heuristic when thinking about tractability, I certainly updated in this direction, and I think this is a valuable post that may help guide cause prioritisation efforts.

The Effective Altruism movement is not above conflicts of interest

I was unsure about including this post, but I think this post highlights an important risk of the EA community receiving a significant share of its funding from a few sources, both for internal community epistemics/culture considerations as well as for external-facing and movement-building considerations. I don't agree with all of the object-level claims, but I think these issues are important to highlight and plausibly relevant outside of the specific case of SBF / crypto. That it wasn't already on the forum (afaict) also contributed to its inclusion here.

I'll also highlight one post that was awarded a prize and that I thought was particularly valuable:

Red Teaming CEA’s Community Building Work

I think this is particularly valuable because of the unique and difficult-to-replace position that CEA holds in the EA community, and as Max acknowledges, it benefits the EA community for important public organisations to be held accountable (and to a standard that is appropriate for their role and potential influence). Thus, even if the listed problems aren't all fully on the mark, or are less relevant today than when the mistakes happened, a thorough analysis of these mistakes and an attempt at providing reasonable suggestions at least provides a baseline against which CEA can be held accountable for similar future mistakes, and helps with assessing trends and patterns over time. I would personally be happy to see something like this on at least a semi-regular basis (though I am unsure exactly what time-frame would be most appropriate). On the other hand, it's important to acknowledge that this analysis is possible in large part because of CEA's commitment to transparency.

I don’t bite the bullet in the most natural reading of this, where very small changes in i_s do only result in very small changes in subjective suffering from a subjective qualitative POV. Insofar as that is conceptually and empirically correct, I (tentatively) think it’s a counterexample that more or less disproves my metaphysical claim (if true/legit).

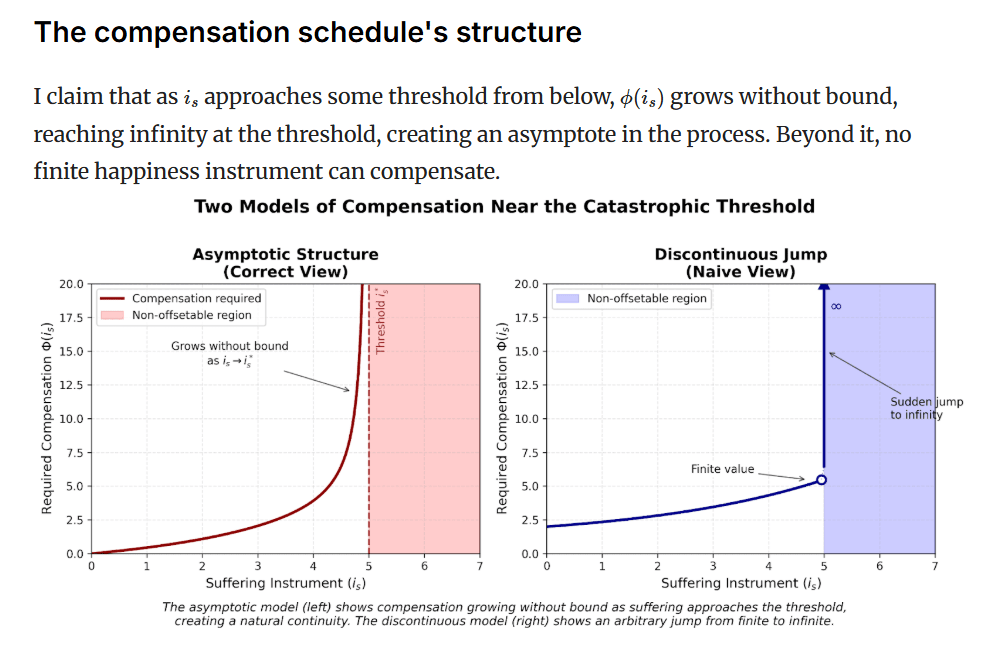

Going along with 'subjective suffering', which I think is subject to the risks you mention here: to make the claim that the compensation schedule is asymptotic (which is pretty important to your topline claim RE: offsettability), I think it's not enough to be uncertain about Ben's claim or to "not bite the bullet"; you have to make a positive case for your claim. For example:

I will add that one reason I think this might be a correct “way out” is that it would just be very strange to me if “IHE preference is to refuse 70 year torture and happiness trade mentioned in post” logically entails (maybe with some extremely basic additional assumptions like transitivity) “IHE gives up divine bliss for a very small subjective amount of suffering mitigation”

Like, is it correct that absent some categorical lexical property that you can identify, "the way out" is very dependent on you being able to support the claim "near the threshold a small change in i_s --> large change in subjective experience"?

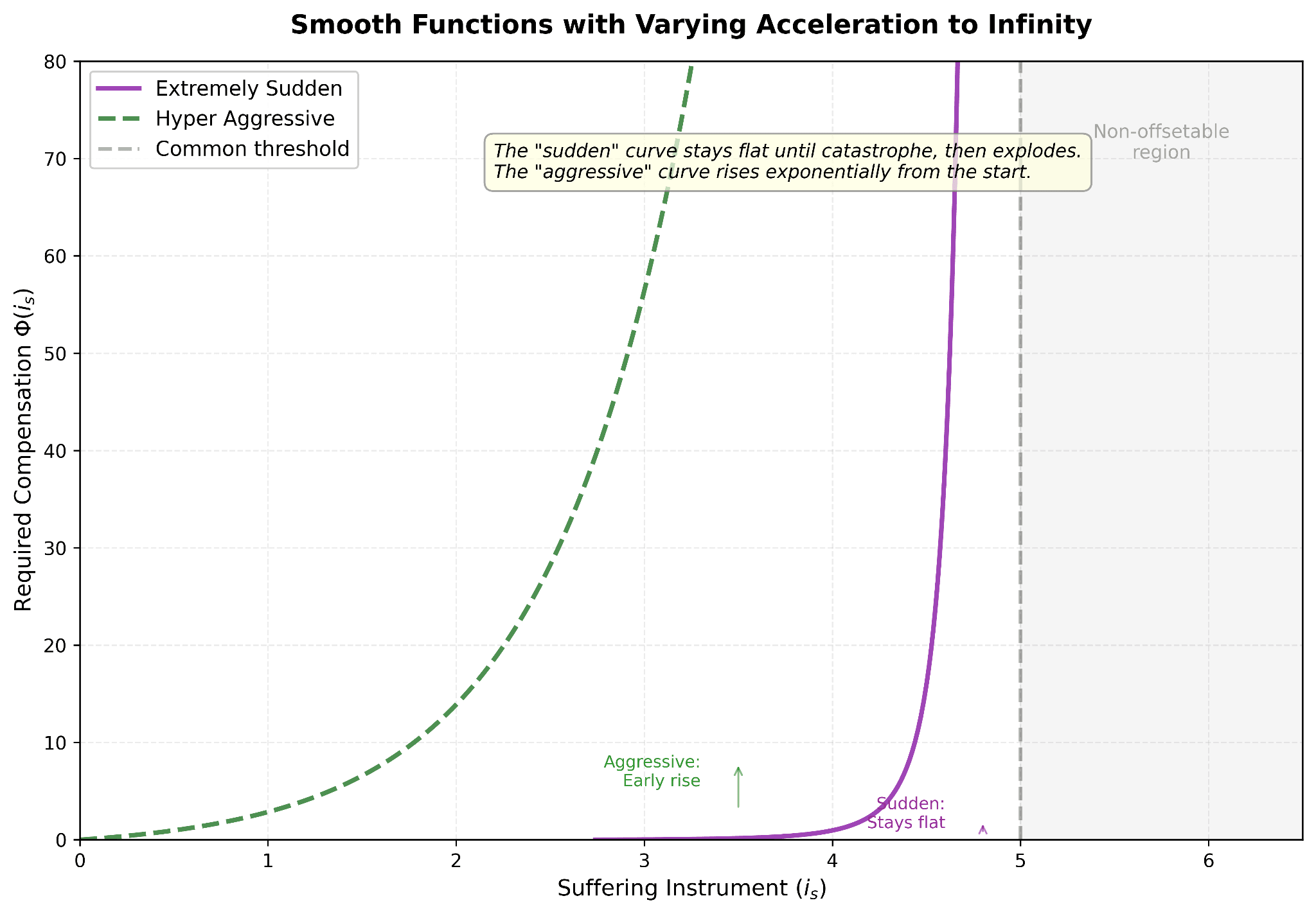

So I suspect your view is something like: "as i_s increases linearly, subjective experience increases in a non-linear way that approaches infinity at some point, earlier than 70 years of torture"?[1] If so, what's the reason you think this is the correct view / am I missing something here?

RE: the shape of the asymptote and potential risks of conflating empirical uncertainties

I think this is an interesting graph, and you might feel like you can make some rough progress on this conceptually with your methodology. For example, how many years of bliss would the IHE need to be offered to be indifferent to undergoing the equivalent experience of:

- 1 person boiled alive for an hour at 100°C

- Change the time variable to 30 minutes / 10 minutes / 5 minutes / 1 minute / 10 seconds / 1 second of the above experience[2]

- Change the exposure variable to different % of the body (e.g. just hand / entire arm / abdomen / chest / back, etc)

- (I would be separately interested in how the IHE would make tradeoffs if making a decision for others and the choice was about 10 / 10,000 / 1E6 people experiencing all of the above time/exposure variations, rather than experiencing them itself, but this is further away from your preferred methodology so I'll leave it for another time)

You could then plot the graph with different combinations of the time/exposure/temperature variables. This could help you elucidate either the shape of your graph or the location of uncertainties around your time granularity.

The reason I chose this over cluster headaches is partly that you get more variables here, but if you just wanted a time comparison then cluster headaches might be easier.

But I actually think temperature is an interesting one to consider for several additional reasons. For example, it's a real-life example of perceived discontinuities in response to continuous changes in some variable. You might be willing to tolerate 35°C water for a very long time, but as soon as it gets to 40°C+, how tolerable it is decreases very rapidly in a way that feels like a discontinuity.

But what's happening here is that heat nociceptors activate at a specific temperature (say, 40°C). So below that temperature you basically aren't moving up the suffering instrument at all, and the variable that changes is "how many nociceptors do you activate" or "how frequently do they fire" (both of which are modulated by temperature and the amount of skin exposed), which rapidly goes up as you reach / exceed 40°C.[3]

And so if you naively plot "degrees" or "person-hours" on the x-axis, you might think subjective suffering is going up exponentially compared to a linear increase in i_s, but you are not accounting for thresholds in i_s activation, or increased sensitisation or recruitment of nociceptors over time, which might make the relationship look much less asymptotic.[4]
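A toy sketch of the threshold point above, assuming (purely for illustration) a single activation threshold at 40°C and a linear response above it; real nociceptor behaviour, including sensitisation and recruitment over time, is not this simple. `THRESHOLD_C`, `GAIN` and `suffering_input` are hypothetical names, and the units are arbitrary.

```python
# Toy model (hypothetical numbers): nociceptor input i_s is zero below an
# activation threshold, then rises linearly with temperature and skin exposure.
THRESHOLD_C = 40.0  # illustrative activation temperature
GAIN = 1.0          # arbitrary units of i_s per degree above threshold


def suffering_input(temp_c: float, exposed_fraction: float) -> float:
    """Return a toy i_s value: exposed skin fraction times degrees above threshold."""
    return GAIN * exposed_fraction * max(0.0, temp_c - THRESHOLD_C)


for t in (35, 38, 40, 42, 45, 50):
    print(t, suffering_input(t, exposed_fraction=1.0))
```

Plotted against temperature, the toy i_s is flat until 40°C and then climbs, which could feel like a discontinuity. But it is continuous everywhere and linear above threshold, so a sharp change in tolerability near 40°C does not by itself imply an asymptotic relationship between i_s and suffering.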

And I think empirical uncertainties about exactly how these kinds of signals work and are processed are a potentially large limiting factor for being able to strongly support "as i_s increases linearly, subjective experience increases in a non-linear way that approaches infinity at some point"[5]

I obviously don't think it's possible to have all the empirical Qs worked out for the post, but I wanted to illustrate these empirical uncertainties because I think even if I felt it would be correct for the IHE to reject some weaker version of the torture-bliss trade package[6], it would still be unclear that this reflected an asymptotic relationship, rather than just e.g. a large asymmetry between sensitivity to i_s and i_h, or maximum amount of i_s and i_h possible. I think these possibilities could satisfy the (weaker) IHE thought experiment while potentially satisfying lexicality in practice, but not in theory. It might also explain why you feel much more confident about lexicality WRT happiness but not intra-suffering tradeoffs, and if you put the difference of things like 1E10 vs 1E50 vs 10^10^10 down to scope insensitivity I do think this explains a decent portion of your views.

- ^

And indeed 1 hour of cluster headache

- ^

I'm aware that approaching 1 second is getting towards your uncertainty for the time granularity problem, but I think if you do think 1 hour of cluster headache is NOS, then these are the kinds of tradeoffs you'd want to be able to make (and justify)

- ^

There are other heat receptors that activate at higher temperatures, but to a first approximation it's probably fine to ignore them

- ^

Because of uncertainty around how much i_s there actually is

- ^

Also worth flagging that RE: footnote 26, where you say:

Feasible happiness is bounded - there are only so many neurons that can fire, years beings can live, resources we can marshal. Call this maximum H_max.

You should also expect this to apply to the suffering instrument; there is also some upper bound for all of these variables

- ^

e.g. 1E10 years, rather than infinity, since I find that pretty implausible and hard to reason about

Note also that you can accept outweighability and still believe that extreme suffering is really bad. You could - e.g. - think that 1 second of a cluster headache can only be outweighed by trillions upon trillions of years of bliss. That would give you all the same practical implications without the theoretical trouble.

+1 to this; it echoes some earlier discussion we've had privately, and I think it would be interesting to see it fleshed out more if your current view is to reject outweighability in theory

More importantly I think this points to a potential drawback RE: "IHE thought experiment, I claim, is an especially epistemically productive way of exploring that territory, and indeed for doing moral philosophy more broadly"[1]

For example, if your intuition is that 70 years of the worst possible suffering is worse than 1E10 and 1E100 and 10^10^10 years of bliss, and these all feel like ~equally clear tradeoffs to you, there doesn't seem (to me) to be a clear way of knowing whether your conclusion should be that 70 years of the worst possible suffering is "not offsettable in theory" or "offsettable in theory but not in practice, + scope insensitivity",[2] or some other option.

- I'm much more confident about the (positive wellbeing + suffering) vs neither trade than intra-suffering trades. It sounds right that something like the tradeoff you describe follows from the most intuitive version of my model, but I'm not actually certain of this; like maybe there is a system that fits within the bounds of the thing I'm arguing for that chooses A instead of B (with no money pumps/very implausible conclusions following)

OK, interesting! I'd like to see this mapped out a bit more, because it does sound weird for BOS to be offsettable with positive wellbeing and for positive wellbeing not to be able to offset NOS, but for BOS and NOS to be offsettable with each other. Or maybe this isn't your claim and I'm misunderstanding?

2) Well the question again is "what would the IHE under experiential totalization do?" Insofar as the answer is "A", I endorse that. I want to lean on this type of thinking much more strongly than hyper-systematic quasi-formal inferences about what indirectly follows from my thesis.

Right, but if the IHE does prefer A over B in my case while also preferring the "neither" side of the [positive wellbeing + NOS] vs neither trade, then there's something pretty inconsistent, right? Or at least a missing explanation for the perceived inconsistency that a lexical threshold doesn't account for.

I think it's possible that the answer is just B because BOS is just radically qualitatively different from NOS.

I think this is plausible, but where does the radical qualitative difference come from? (See comments RE: formalising the threshold.)

Maybe most importantly I (tentatively?) object to the term "barely" here because under the asymptotic model I suggest, the value of subtracting arbitrarily small amount of suffering instrument from the NOS state results in no change in moral value at all because (to quote myself again) "Working in the extended reals, this is left-continuous: "

Sorry this is too much maths for my smooth brain but I think I'd be interested in understanding why I should accept the asymptotic model before trying to engage with the maths! (More on this below, under "On the asymptotic compensation schedule")

So in order to get BOS, we need to remove something larger than , and now it's a quasi-empirical question of how different that actually feels from the inside. Plausibly the answer is that "BOS" (scare quotes) doesn't actually feel "barely" different - it feels extremely and categorically different

Can you think of one generalisable real-world scenario here? Something like "I think this is clearly non-offsettable, and now that I've removed X, I think it is clearly offsettable"?

And I'll add that insofar as the answer is (2) and NOT 3, I'm pretty inclined to update towards "I just haven't developed an explicit formalization that handles both the happiness trade case and the intra-suffering trade case yet" more strongly than towards "the whole thing is wrong, suffering is offsetable by positive wellbeing" - after all, I don't think it directly follows from "IHE chooses A" that "IHE would choose the 70 years of torture." But I could be wrong about this! I 100% genuinely think I'm literally not smart enough to intuit super confidently whether or a formalization that chooses both A and no torture exists. I will think about this more!

Cool! Yeah, I'd be excited to see the formalisation. I'm not claiming that the whole thing is wrong; I'm claiming that I'm not currently convinced enough to hold the view that some suffering cannot be offsettable. While the intuitions and the hypotheticals are valuable, as you say later, there are a bunch of things about this that we aren't well placed to simulate or think about well. I suspect that if you find yourself in a bunch of hypotheticals where your intuitions differ and you can't find a way to resolve the inconsistencies, it is worth considering the possibility that you're not adequately modelling what it is like to be the IHE in at least one of the hypotheticals

I more strongly want to push back on (2) and (3) in the sense that I think parallel experience, while probably conceptually fine in principle, really greatly degrades the epistemic virtue of the thought experiment because this literally isn't something human brains were/are designed to do or simulate.

Yeah reasonable, but presumably this applies to answers for your main question[1] too?

Suppose the true exchange rate is 10 years of happiness afterwards; this seems easier for our brains to simulate than if the true exchange rate is 100,000 years of happiness, especially if you insist on parallel experiences. Perhaps it is just very difficult to be scope sensitive about exactly how much bliss 1E12 years of bliss is!

And likewise with (3), the self interest bit seems pretty epistemically important.

Can you clarify what you mean here? Isn't the IHE someone who is "maximally rational/makes no logical errors, have unlimited information processing capacity, complete information about experiences with perfect introspective access, and full understanding of what any hedonic state would actually feel like"?

On formalising where the lexical threshold is you say:

I agree it is imporant! Someone should figure out the right answer! Also in terms of practical implementation, probably better to model as a probability distribution than a single certain line.

This is reasonable, and I agree with using a probability distribution given uncertainty, but it feels hard to engage with the metaphysical claim "some suffering in fact cannot be morally justified (“offset”) by any amount of happiness" and its implications if you are so deeply uncertain about what counts as NOS. My view is that, conditional on physicalism, whatever combination of nociceptor / neuron firing and neurotransmitter release you can think of is a measurable amount. Some of these combinations will cross the threshold of NOS under your view, but you can decrease all of them in continuous ways that shouldn't lead to a discontinuity in the tradeoffs you're willing to make. This does NOT mean that the relationship is linear, but there seems to be some reason to believe it's continuous rather than discontinuous or asymptotic here. And contra your later point:

"I literally don't know what the threshold is. I agree it would be nice to formalize it! My uncertainty isn't much evidence against the view as a whole"

If we don't know where a reasonable threshold is, it's fine to remain uncertain about it, but I think that's much weaker than accepting the metaphysical claim! The claim currently rests on just the 70 years of worst-possible suffering vs ~infinite bliss hypothetical, and your uncertainty about the threshold means I can conjure arbitrarily many hypotheticals that would count as evidence against your view in the same way your hypothetical is considered evidence for it.

On the asymptotic compensation schedule

I disagree that it isn't well-justified in principlle, but maybe I should have argued this more thoroughly. It just makes a ton of intuitive sense to me but possibly I am typical-minding.

As far as I can tell, you just claim that it creates an asymptote and label it the correct view, right? But why should it grow without bound? Sorry if I've missed something!

And I'm pretty sure you're wrong about the second thing - see point 3 a few bullets up. It seems radically less plausible to me that the true nature of ethics involves discontinuous i_s vs i_h compensation schedules.

I was unclear about the "doesn't seem to meaningfully change the unintuitive nature of the tradeoffs your view is willing to endorse" part you're referring to here, and I agree RE: discontinuity. What I'm trying to communicate is that if someone isn't convinced by the perceived discontinuity of NOS being non-offsettable and BOS being offsettable, a large subset of them also won't be very convinced by the response that "the radical part is in the approach to infinity" (in your words: "the compensation schedule growing without bound (i.e., asymptotically) means that some sub-threshold suffering would require 10^(10^10) happy lives to offset, or 1000^(1000^1000)" (emphasis added)).

Because they could just reject the idea that an extremely bad headache (but not a cluster headache), or a short cluster headache episode, or a cluster headache managed by some amount of painkiller, etc, requires 1000^(1000^1000) happy lives to offset.

I guess this is just another way of saying "it seems like you're assuming people are buying into the asymptotic model but you haven't justified this".
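To make the asymptote question concrete, here is a sketch contrasting two hypothetical compensation schedules on a normalised i_s scale with the NOS threshold at 1.0: one that grows without bound as i_s approaches the threshold (here k·T/(T - s), my own choice of functional form, not one taken from the post), and a steep but always finite exponential. Both functions and their parameters are illustrative assumptions.

```python
import math

T = 1.0  # hypothetical NOS threshold on a normalised i_s scale


def asymptotic_compensation(s: float, k: float = 1.0) -> float:
    """Happy lives needed to offset suffering s; diverges as s approaches T."""
    if s >= T:
        return math.inf  # at or above the threshold: non-offsettable
    return k * T / (T - s)


def steep_finite_compensation(s: float, a: float = 20.0) -> float:
    """A steep but finite alternative: exponential growth that never reaches infinity."""
    return math.exp(a * s)


for s in (0.5, 0.9, 0.99, 0.999999, 1.0):
    print(s, asymptotic_compensation(s), steep_finite_compensation(s))
```

With these parameters the finite schedule actually demands more compensation than the asymptotic one at every printed point except the threshold itself. Intuitions about any particular near-threshold case struggle to tell the two shapes apart, and only the first makes anything non-offsettable, which is why the asymptotic shape itself seems to need a separate argument.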

- ^

"Would you accept 70 years of the worst conceivable torture in exchange for any amount of happiness afterward?"

Thanks for writing this up publicly! I think it's a very thought-provoking piece and I'm glad you've written it. Engaging with it has definitely helped me consider some of my own views in this space more deeply. As you know, this is basically a compilation of comments I've left on previous drafts, and I'm deferring to your preference to have these discussions in public. Some caveats for other readers: I don't have any formal philosophical background, so this is largely first-principles reasoning rather than anything philosophically grounded.[1]

All of this is focussed on (to me) the more interesting metaphysical claim that "some suffering in fact cannot be morally justified (“offset”) by any amount of happiness."

TL;DR

- The positive argument for the metaphysical claim and the title of this piece relies (IMO) too heavily on a single thought experiment, which I don't think supports the topline claim as written.

- The post illustrates an unintuitive finding about utilitarianism, but doesn't seem to provide a substantive case for why utilitarianism that includes lexicality is the least unintuitive option compared to other unintuitive utilitarian conclusions. For example, my understanding of your view is that given a choice of the following options:

A) 70 years of non-offsettable suffering, followed by 1 trillion happy human lives and 1 trillion happy pig lives, or

B) [70 years minus 1 hour of non-offsettable suffering (NOS)], followed by 1 trillion unhappy humans who are living at barely offsettable suffering (BOS), followed by 1 trillion pig lives that are living at the BOS,

You would prefer option B here. And it's not at all obvious to me that we should find this deal more acceptable or intuitive than what I understand is basically an extreme form of the Very Repugnant Conclusion, and I'm not sure you've made a compelling case for this, or that world B contains less relevant suffering.

- Thought experiment variations:

People's intuitions about the suffering/bliss trade might reasonably change based on factors like:

- Duration of suffering (70 minutes vs. 70 years vs. 70 billion years)

- Whether experiences happen in series or parallel

- Whether you can transfer the bliss to others

- Threshold problem:

Formalizing where the lexical threshold sits is IMO pretty important: there are reasonable pushbacks to both a high and a low threshold, but they feel like meaningfully different views.

- High threshold (e.g., "worst torture") means the view is still susceptible to unintuitive package deals that endorse arbitrarily large amounts of barely-offsettable suffering (BOS) to avoid small amounts of suffering that do cross the threshold

- Low threshold (e.g., "broken hip" or "shrimp suffering") seems like it functionally becomes negative utilitarianism

- Asymptotic compensation schedule:

The claim that compensation requirements grow asymptotically and approach infinity (rather than linearly, or some other way) isn't well-justified, and doesn't seem to meaningfully change the unintuitive nature of the tradeoffs your view is willing to endorse.

============

Longer

As far as I can tell, the main positive argument you have for this claim is the thought experiment where you reject "the offer of 70 years of the worst conceivable suffering in exchange for any amount of happiness afterwards". But I do think it would be rational for an IHE, as defined in the post, to accept this trade.

I agree that package deals that permit or endorse the creation of extreme suffering are an unintuitive / uncomfortable thing to accept. But AFAICT most if not all utilitarian views have some plausibly unintuitive thought experiment like this. My current view is that you still haven't made a substantive positive case for non-offsettability / negative lexical utilitarianism beyond, broadly, “here is this unintuitive result about total utilitarianism”. An additional argument for “why this is the least unintuitive result / the one we should accept out of all the unintuitive options” would be helpful for readers; otherwise I agree more with your section “not a proof” than with your topline metaphysical claim (and indeed your title, “Utilitarians Should Accept that Some Suffering Cannot be “Offset””).

The thought experiment:

I do actually think that the IHE should take this trade. But I think a lot of my pushbacks apply even if you are uncertain about whether the IHE should or not.

For example, I think whether the thought experiment stipulates 70 minutes or 70 years or 70 billion years of the worst possible suffering meaningfully changes how the thought experiment feels, but if lexicality were true we should refuse the trade regardless of the duration. I know you've weakened your position on this, but it does open up more uncertainty about the kinds of tradeoffs you should be willing to make, since the time aspect is continuous. If duration alone is sufficient to turn something from offsettable to non-offsettable, it could imply some weird things: for example, it seems a little weird to prioritise averting 1 case of a 1-hour cluster headache over 1 million cases of 5-minute cluster headaches.[2]

As Liv pointed out in a previous version of the draft, there are also versions of the thought experiment where I think people’s intuitive answers may reasonably change, even though they shouldn’t if lexicality is true:

- Is the suffering / bliss happening in parallel or in series?

- Is there the option of taking the suffering on behalf of others? (E.g. some might be more willing to take the trade if, after you take the suffering, the arbitrary amounts of bliss can be transferred to other people as well, and not just yourself.)

On the view more generally:

I’m not sure you explicitly make this claim, so if this isn’t your view let me know! But I think your version of lexicality doesn’t just say “one instance of NOS is so bad that we should avert this no matter how much happiness we might lose / give up as a result”, it also says “one instance of NOS is so bad that we should prioritise averting this over any amount of BOS”.[3]

Why I think formalising the threshold is helpful in understanding the view you are arguing for:

If the threshold is very high, e.g. "worst torture imaginable", then (like total utilitarianism) you also face uncomfortable/unintuitive package deals that require you to endorse large amounts of suffering. For example, you would prefer to avert 1 hour of the worst torture imaginable even if the price were never having any more happiness or positive value, and also actively producing arbitrarily large amounts of BOS.

My understanding of your view is that given a choice of living in series:

A) 70 years of NOS, followed by 1 trillion happy human lives and 1 trillion happy pig lives, or

B) [70 years minus 1 hour of NOS], followed by 1 trillion unhappy humans who are living at BOS, followed by 1 trillion pig lives that are living at the BOS,

you would prefer the latter. It's not at all obvious to me that we should find this deal more acceptable or intuitive than what I understand is basically an extreme form of the Very Repugnant Conclusion. It's also not clear to me that you have actually argued a world like world B would have to have "less relevant suffering" than world A (e.g. your footnote 24).
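To make the structure of the A vs. B comparison concrete, here is a minimal Python sketch of a lexical (lexicographic) ranking next to a standard total ranking. All the numbers are hypothetical assumptions for illustration only: each happy life counts as +1 welfare unit, each life at BOS as -1, 70 years is converted to hours, and the total view's weight on an hour of NOS (`nos_weight`) is an arbitrary large but finite number. The names `World`, `lexical_key`, `total_key` and `make_worlds` are illustrative, not taken from the post.

```python
from dataclasses import dataclass

# Hypothetical unit choice: 70 years of suffering expressed in hours.
HOURS_70_YEARS = 70 * 365 * 24


@dataclass(frozen=True)
class World:
    name: str
    nos_hours: float            # hours of non-offsettable suffering (NOS)
    offsettable_welfare: float  # net welfare of everything below the threshold


def lexical_key(world: World) -> tuple:
    # Lexical view: fewer NOS hours always wins; sub-threshold welfare only breaks ties.
    # Python compares tuples element by element, which gives the lexicographic order.
    return (-world.nos_hours, world.offsettable_welfare)


def total_key(world: World, nos_weight: float = 1e6) -> float:
    # Standard total view (hypothetical weight): an hour of NOS is very bad but finite.
    return world.offsettable_welfare - nos_weight * world.nos_hours


def make_worlds(bos_welfare_per_life: float = -1.0) -> list:
    lives = 2e12  # 1 trillion humans + 1 trillion pigs
    world_a = World("A", HOURS_70_YEARS, lives * 1.0)  # happy lives at +1 each (assumed)
    world_b = World("B", HOURS_70_YEARS - 1, lives * bos_welfare_per_life)
    return [world_a, world_b]


if __name__ == "__main__":
    worlds = make_worlds()
    print("lexical view prefers:", max(worlds, key=lexical_key).name)
    print("total view prefers:", max(worlds, key=total_key).name)
    # Making the BOS lives far worse does not change the lexical verdict.
    worse = make_worlds(bos_welfare_per_life=-1e9)
    print("lexical view, much worse BOS:", max(worse, key=lexical_key).name)
```

Because the lexical key compares NOS hours before anything else, the size of the sub-threshold welfare term never matters: making the BOS lives in B arbitrarily worse still leaves B preferred, which is the sense in which a high-threshold view endorses arbitrarily large amounts of BOS.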

If the threshold is lower, e.g. "broken hip", or much lower, e.g. "suffering of shrimp that has not been electrically stunned", then you might face fewer unintuitive suffering package deals, but you end up functionally very similar to negative utilitarianism, where averting one broken hip, or saving 1 shrimp, outweighs all other benefits.

Formalising the threshold:

Using your example of a specific, concrete case of extreme suffering: “a cluster headache [for one human] lasting for one hour”.

If this uncontroversially crosses the non-offsetable threshold for you, consider how you'd view the headache if you hypothetically decrease the amount of time, the number of nociceptors exposed to the stimulus, how often they fire, etc., until you get to 0 on some or all variables. This feels pretty continuous! If you think there should be a discontinuity that isn't explained by this, then it’d be helpful to map out categorically what it entails. For example, if someone is boiled alive[4] this is extreme suffering, perhaps because it involves extreme heat, confronting your perceived impending doom, loss of autonomy, or some combination of these. But you would probably still need more than this, because not all suffering involving extreme heat or loss of autonomy is necessarily extreme, and it’s not obvious how this maps onto e.g. cluster headaches. Or you might bite the bullet on "confronting your impending doom", but this might be a pretty different view with different implications etc.

On "Continuity and the Location of the Threshold"

The radical implications (insofar as you think any of this is radical) aren't at the threshold but in the approach to it. The compensation schedule growing without bound (i.e., asymptotically) means that some sub-threshold suffering would require 10^(10^10) happy lives to offset, or 1000^(1000^1000). (emphasis added)

============

This arbitrariness diminishes somewhat (though, again, not entirely) when viewed through the asymptotic structure. Once we accept that compensation requirements grow without bound as suffering intensifies, some threshold becomes inevitable. The asymptote must diverge somewhere; debates about exactly where are secondary to recognizing the underlying pattern.

It’s not clear to me that we have to accept that the compensation schedule grows asymptotically. If your response to “the discontinuity of tradeoffs caused by the lexical threshold does not seem to be well justified” is “actually the radical part isn’t the threshold, it’s the asymptotic compensation schedule”, then it would be helpful to explain why you think the asymptotic compensation schedule is the best model, or preferable to e.g. a linear one.

For example, suppose a standard utilitarian values converting 10 factory farmed pig lives to 1 happy pig life to 1 human life similarly, and they also value 1E4 happy pig lives to 1E3 human lives.

Suppose you are deeply uncertain about whether a factory farmed pig experiences NOS because it's very close to the threshold of what you think constitutes extreme / NOS suffering.

If the answer is yes, then converting 1 factory farmed pig to a happy pig life should trade off against arbitrarily high numbers of human lives. But according to the asymptotic compensation schedule, if the answer is no, then you might need 10^(10^10) human lives to offset a happy pig life. But either way, it's not obvious to the standard utilitarian why they should value 1 case of factory farmed pig experience this much!
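One way to see why the choice of schedule matters is to write two candidate compensation schedules side by side. This is a minimal sketch under loudly hypothetical assumptions: suffering intensity is normalised so that the lexical threshold sits at 1.0, compensation is measured in happy life-years, and `k` is an arbitrary scale factor. The asymptotic form `k * s / (T - s)` is just one function that diverges at the threshold; it is not claimed to be the schedule the post has in mind.

```python
import math

THRESHOLD = 1.0  # hypothetical: intensity normalised so the lexical threshold sits at 1.0


def linear_compensation(intensity: float, k: float = 1.0) -> float:
    """Happy life-years needed to offset suffering of this intensity (linear schedule)."""
    return k * intensity


def asymptotic_compensation(intensity: float, k: float = 1.0,
                            threshold: float = THRESHOLD) -> float:
    """Compensation that grows without bound as intensity approaches the threshold."""
    if intensity >= threshold:
        return math.inf  # non-offsettable: no finite amount of happiness suffices
    return k * intensity / (threshold - intensity)


if __name__ == "__main__":
    for s in (0.5, 0.9, 0.99, 0.999, 1.0):
        print(f"intensity={s}: linear={linear_compensation(s):.3g}, "
              f"asymptotic={asymptotic_compensation(s):.3g}")
```

Both schedules are continuous and increasing below the threshold; only the asymptotic one produces a lexical threshold, and it does so by construction, which is why the choice between them needs its own justification.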

Other comments:

In other words, let us consider a specific, concrete case of extreme suffering: say a cluster headache lasting for one hour.

Here, the lexical suffering-oriented utilitarian who claims that this crosses the threshold of in-principle compensability has much more in common with the standard utilitarian who thinks that in principle creating such an event would be morally justified by TREE(3) flourishing human life-years than the latter utilitarian has with the standard utilitarian who claims that the required compensation is merely a single flourishing human life-month.

I suspect this is intended to be illustrative, but I would be surprised if there were many (if any) standard utilitarians who would actually say that you need TREE(3)[5] flourishing human life-years to offset a cluster headache lasting 1 hour, so this seems like a strawman?

It does seem like the more useful Q to ask is something like:

Does the lexical suffering-oriented utilitarian who claims that this crosses the threshold of in-principle compensability have more in common with the standard utilitarian who thinks the event would be morally justified by 50 flourishing human life years (which is already a lot!), than that latter utilitarian has with another standard utilitarian who claims the required compensation is a single flourishing life month?

Like 1 month : TREE(3) vs. TREE(3) : infinity seems less likely to map to the standard utilitarian view than something like 1 month : 50 years vs. 50 years : infinity.

Thanks again for the post, and all the discussions!

- ^

I'm also friends with Aaron and have already had these discussions with him and other mutual friends in other contexts, so I have possibly put less effort into making sure the disagreements land as gently as possible than I would otherwise. I've also spent a long time on the comment already, so I have focussed on the disagreements rather than the parts of the post that are praiseworthy.

- ^

To be clear, I find the time granularity issue very confusing personally, and I think it does have important implications for e.g. how we value extreme suffering. For example, if you define extreme suffering as "not tolerable even for a few seconds + would mark the threshold of pain under which many people choose to take their lives rather than endure the pain", then much of human suffering is not extreme by definition, and the best way of reaching huge quantities of extreme suffering is by having many small creatures with a few seconds of pain (fish, shrimp, flies, nematodes). However, depending on how you discount for these small quantities of pain, it could change how you trade off between e.g. shrimp and human welfare, even without disagreements on likelihood of sentience or the non-time elements that contribute to suffering.

- ^

Here I use extreme suffering and non-offsetable suffering interchangeably, to mean anything worse than the lexical threshold and thus not offsetable, and barely offsetable suffering to mean suffering that is as close to the lexical threshold as possible but still considered offsetable. Credit to Max’s blog post for helping me word some of this, though I prefer "non-offsetable" over "extreme" as it is more robust to different lexical thresholds.

Speaking just for myself (RE: malaria), the topline figures include adjustments for various estimates of how much USAID funding might be reinstated, as well as discounts for redistribution / compensation by other actors, rather than forecasting a 100% cut over the entire time periods (which was the initial brief, and a reasonable starting point at the time, but had become a less plausible assumption by the time of publication).

My 1 year / 5 year estimates without these discounts are approx. 130k to 270k and 720k to 1.5m respectively.

You can, of course, hold that insects don’t matter at all or that they matter infinitely less than other things so that we can, for all practical purposes, ignore their welfare. Certainly this would be very convenient. But the world does not owe us convenience and rarely provides us with it. If insects can suffer—and probably experience in a week more suffering than humans have for our entire history—this is certainly worth caring about. Plausibly insects can suffer rather intensely. When hundreds of billions of beings die a second, most experiencing quite intense pain before their deaths, that is quite morally serious, unless there’s some overwhelmingly powerful argument against taking their interests seriously.

If you replace insects here with mites doesn't your argument basically still apply? A 10 sec search suggests that mites are plausibly significantly more numerous than insects. When you say "they're not conscious", is this coming from evidence that they aren't, or lack of evidence that they are, and would you consider this an "overwhelmingly powerful argument"?

Thanks for writing this post!

I feel a little bad linking to a comment I wrote, but the thread is relevant to this post, so I'm sharing in case it's useful for other readers, though there's definitely a decent amount of overlap here.

TL;DR

I personally default to being highly skeptical of any mental health intervention that claims a ~95% success rate + a PHQ-9 reduction of 12 points over 12 weeks, as this is a clear outlier among treatments for depression. The effectiveness figures from StrongMinds are also based on studies that are non-randomised and poorly controlled. There are other questionable methodology issues, e.g. surrounding adjusting for social desirability bias. The topline figure of $170 per head for cost-effectiveness is also possibly an underestimate: while ~48% of clients were treated through SM partners in 2021, and Q2 results (pg 2) suggest StrongMinds is on track for ~79% of clients treated through partners in 2022, the expenses and operating costs of the partners responsible for these clients were not included in the methodology.

(This mainly came from a cursory review of StrongMinds documents, and not from examining HLI analyses, though I do think "we’re now in a position to confidently recommend StrongMinds as the most effective way we know of to help other people with your money" seems a little overconfident. This is also not a comment on the appropriateness of recommendations by GWWC / FP)

(commenting in personal capacity etc)

Edit:

Links to existing discussion on SM. Much of this ends up touching on discussions around HLI's methodology / analyses as opposed to the strength of evidence in support of StrongMinds, but including as this is ultimately relevant for the topline conclusion about StrongMinds (inclusion =/= endorsement etc):