Announcing Fatebook: a website that makes it extremely low friction to make and track predictions.

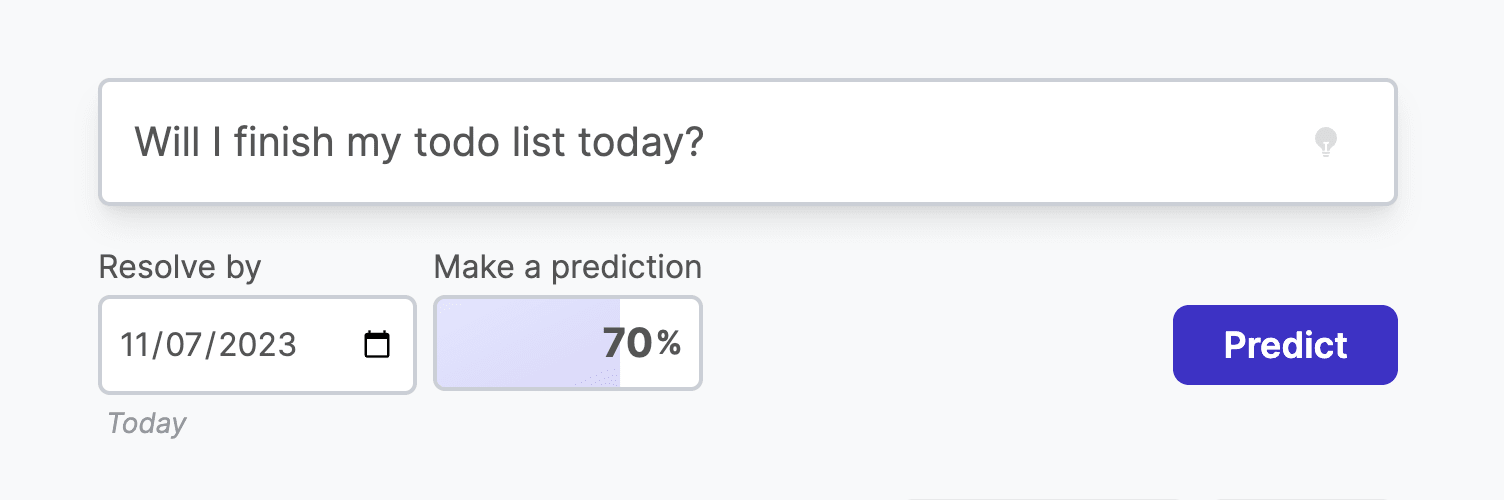

It's designed to be very fast - just open a new tab, go to fatebook.io, type your prediction, and hit enter. Later, you'll get an email reminding you to resolve your question as YES, NO, or AMBIGUOUS.

It's private by default, so you can track personal questions and give forecasts that you don't want to share publicly. You can also share questions with specific people, or publicly.

Fatebook syncs with Fatebook for Slack - if you log in with the email you use for Slack, you’ll see all of your questions on the website.

As you resolve your forecasts, you'll build a track record: you can see your Brier score, Relative Brier score, and calibration chart. You can use this to track the development of your forecasting skills.
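The post doesn't describe how Fatebook computes these numbers. As a rough illustration only, here is a minimal Python sketch of a standard Brier score and a simple calibration table. It assumes that AMBIGUOUS and unresolved questions are left out of scoring. The `Forecast` class and function names are hypothetical. Relative Brier score isn't shown because its exact definition isn't given here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Forecast:
    probability: float         # forecast probability of YES, in [0, 1]
    resolution: Optional[str]  # "YES", "NO", "AMBIGUOUS", or None if unresolved


def _scorable(forecasts):
    # Assumption: only questions resolved YES or NO count towards the track record.
    return [f for f in forecasts if f.resolution in ("YES", "NO")]


def brier_score(forecasts: list) -> Optional[float]:
    """Mean squared error between probability and outcome (YES = 1, NO = 0).
    Lower is better; always answering 50% scores 0.25."""
    scored = _scorable(forecasts)
    if not scored:
        return None
    errors = [(f.probability - (1.0 if f.resolution == "YES" else 0.0)) ** 2
              for f in scored]
    return sum(errors) / len(errors)


def calibration_buckets(forecasts: list, n_buckets: int = 10) -> dict:
    """Map each probability bin (low, high) to the fraction of its forecasts that resolved YES."""
    counts = {}
    for f in _scorable(forecasts):
        # A probability of exactly 1.0 goes into the top bin.
        i = min(int(f.probability * n_buckets), n_buckets - 1)
        hits, total = counts.get(i, (0, 0))
        counts[i] = (hits + (f.resolution == "YES"), total + 1)
    return {(i / n_buckets, (i + 1) / n_buckets): hits / total
            for i, (hits, total) in sorted(counts.items())}
```

For example, forecasts of 90% (resolved YES), 70% (NO) and 20% (NO), plus one AMBIGUOUS question, give a Brier score of (0.01 + 0.49 + 0.04) / 3 = 0.18. A well-calibrated forecaster's bins should show YES rates close to each bin's probability range.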

Some stories of outcomes I hope Fatebook will enable

I hope people interested in EA use Fatebook to track many more of the predictions they’re making!

Some example stories:

- During 1-1s at EAG, it’s common to pull out your phone and jot down predictions on Fatebook about cruxes of disagreement

- Before you start projects, you and your team make your underlying assumptions explicit and put probabilities on them - then, as your plans make contact with reality, you update your estimates

- As part of your monthly review process, you might make forecasts about your goals and wellbeing

- If you’re exploring career options and doing cheap tests like reading or interning, you first make predictions about what you’ll learn. Then you return to these periodically to reflect on how valuable more exploration might be

- Intro programs to EA (e.g. university reading groups, AGISF) and to rationality (e.g. ESPR, Atlas, Leaf) use Fatebook to make both on- and off-topic predictions. Participants get a chance to try forecasting on questions that are relevant to their interests and lives

As a result, I hope that we’ll reap some of the benefits of tracking predictions, e.g.:

- Truth-seeking incentives that reduce motivated reasoning => better decisions

- Probabilities and concrete questions reduce talking past each other => clearer communication

- Track records help people improve their forecasting skills, and help identify people with excellent abilities (not restricted to the domains typically covered on public platforms like Metaculus and Manifold, such as tech and geopolitics) => forecasting skill development and talent-spotting

Ultimately, the platform is pretty flexible - I’m interested to see what unexpected use cases people find for it, and what (if anything) actually seems useful about it in practice!

Your feedback or thoughts would be very useful - we can chat in the comments here, in our Discord, or by email.

You can try Fatebook at fatebook.io

Thanks to the Atlas Fellowship for supporting this project, and thanks to everyone who's given feedback on earlier versions of the tool.

This is great! I love the simplicity and how fast and frictionless the experience is.

I think I might be part of the ideal target market, as someone who has long wanted to get more into the habit of concretely writing out his predictions but often lacks the motivation to do so consistently.

Thank you! I'm interested to hear how you find it!

Very relatable! The 10 Conditions for Change framework might be helpful for thinking of ways to do it more consistently (if on reflection you really want to!) Fatebook aims to help with 1, 2, 4, 7, and 8, I think.

One way to do more prediction I'm interested in is integrating prediction into workflows. Here are some made-up examples:

If anyone who has prediction as part of their workflows, or would like to, is interested in chatting, lmk!

In many ways Fatebook is a successor to PredictionBook (now more than 11 years old!). If you've used PredictionBook in the past, you can import all your PredictionBook questions and scores to Fatebook.

I really love this <3

Compared to more public prediction platforms (e.g. Manifold), I think the biggest value adds for me are: (a) being ridiculously easy to set up + use, and (b) being able to make private predictions.

On (b), I saw the privacy policy is currently a canned template. I'm curious if you could say more on:

:) I'm a really big fan of Sage's work, thank you so much!

Thank you!

No - we don't look at non-anonymised user data in our analytics. We use Google Analytics events, so we can see e.g. a graph of how many forecasts are made each day. These events track the ID of each user, so we can also see e.g. how many users made forecasts each day (to distinguish a small number of power users from lots of light users). IDs are random strings of text that might look like cwudksndspdkwj. I think you'd technically call this "pseudo-anonymised" because user IDs are stored, but I'm not sure!

Your predictions are private to you unless you share them. The other two devs who have helped out with parts of the project and I have access to the production database, but we commit to not looking at users' questions unless you specifically share them with us (e.g. to help us debug something). I'm interested in encrypting the questions in the database so that we couldn't access them even in principle, but haven't got round to implementing this yet (I want to focus on some bigger user-visible improvements first!)

Hope this makes sense! Thanks for your kind words and for checking about this, let me know if you think we could improve on any of this!

Thanks for the fast response, all of this sounds very reasonable! :)

By the way, very tiny bug report: The datestamps are rendering a bit weird? I see the correct date stamp for today under the date select, but the description text in italics is rendering as 'Yesterday', and the 'data-tip' value in the HTML is wrong.

Obviously not a big deal, just passing it on :) I'm currently in PST time, where it is 9:39am on 2023.07.25, if it matters. (Let me know if you'd prefer to receive bug reports somewhere else?)

Ah thank you! I've just pushed what should be a fix for this (hard to fully test as I'm in the UK).
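The thread doesn't say what caused this bug. One common cause of a "Yesterday" label for users west of UTC is treating a date-only value as midnight UTC and then converting it to local time. This is an assumption, not a diagnosis of Fatebook's code. The minimal Python sketch below uses hypothetical functions `buggy_label` and `fixed_label` to show the effect. It needs Python 3.9+ for `zoneinfo` and access to the IANA time zone database.

```python
from datetime import date, datetime, timezone
from zoneinfo import ZoneInfo


def day_label(shown: date, today: date) -> str:
    """Describe `shown` relative to the viewer's local `today`."""
    delta = (shown - today).days
    return {0: "Today", -1: "Yesterday", 1: "Tomorrow"}.get(delta, shown.isoformat())


def local_today(now_utc: datetime, tz_name: str) -> date:
    # "Today" is the calendar date in the viewer's time zone, not the server's.
    return now_utc.astimezone(ZoneInfo(tz_name)).date()


def buggy_label(date_str: str, now_utc: datetime, tz_name: str) -> str:
    # Bug: interpret the calendar date as midnight UTC, then convert to local time.
    # West of UTC that instant falls on the previous evening, i.e. the previous day.
    as_utc_midnight = datetime.fromisoformat(date_str).replace(tzinfo=timezone.utc)
    shown = as_utc_midnight.astimezone(ZoneInfo(tz_name)).date()
    return day_label(shown, local_today(now_utc, tz_name))


def fixed_label(date_str: str, now_utc: datetime, tz_name: str) -> str:
    # Fix: keep a date-only value as a plain calendar date, with no time zone conversion.
    shown = date.fromisoformat(date_str)
    return day_label(shown, local_today(now_utc, tz_name))
```

With the bug report's timestamp (9:39am PDT on 2023-07-25, i.e. 16:39 UTC), `buggy_label("2023-07-25", ...)` returns "Yesterday" for `America/Los_Angeles` but "Today" for `Europe/London`. That would be consistent with the problem being hard to reproduce from the UK.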

Thanks so much! :) FYI, the top-level helper text seems fixed:

But the prediction-level helper text is still not locale-aware:

(Again, not a big deal at all :) )

Nice!

Readers might also be interested in the Linux utility version of this: https://github.com/NunoSempere/PredictResolveTally

Awesome! I have been wanting something like this for a while and am looking forward to trying it out.

See this previous comment of mine for some potentially interesting suggestions:

https://forum.effectivealtruism.org/posts/cbtoajkfeXqJAzhRi/metaculus-year-in-review-2022?commentId=dotzeW2wxM5Avm7jL

(Excuse formatting; on mobile)

I'm thinking of creating a Chrome extension that will let you type "/forecast Will x happen?" anywhere on the internet, and it'll create and embed an interactive Fatebook question. (EDIT: we created this, the Fatebook browser extension.)

I'm thinking of primarily focussing on Google Docs, because I think the EA community could get a lot of mileage out of making and tracking predictions embedded in reports, strategy docs, etc. This extension would also work in messaging apps, on social media, and even here on the forum (though first-party support might be better for the forum!).

Great, thanks!

I'm interested in adding power user shortcuts like this!

Currently, if your question text includes a date that Fatebook can recognise, it'll prepopulate the "Resolve by" field with that date. This works for a bunch of common phrases, e.g. "in two weeks" "by next month" "by Jan 2025" "by February" "by tomorrow".

If you play around with the site, I'd be interested to hear if you find yourself still keen for the addition of concise shortcuts like "2w" or if the current natural language date parsing works well for you.
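The comment doesn't describe how Fatebook's date parsing works. To show how natural-language phrases and terse shortcuts like "2w" could coexist, here is a hypothetical, deliberately tiny regex-based sketch in Python. The vocabulary, patterns, and the `parse_resolve_by` name are illustrative assumptions; a real parser would handle many more phrasings (months, named dates, "by February", etc.).

```python
import re
from datetime import date, timedelta
from typing import Optional

# Hypothetical vocabulary, far smaller than a real parser would need.
WORD_NUMBERS = {"a": 1, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5, "six": 6}
UNIT_DAYS = {"d": 1, "day": 1, "w": 7, "week": 7}

NUMBER = r"(\d+|" + "|".join(WORD_NUMBERS) + r")"
PHRASE = re.compile(r"\bin " + NUMBER + r" (day|week)s?\b")  # e.g. "in two weeks"
SHORTCUT = re.compile(r"\b(\d+)([dw])\b")                     # e.g. "2w", "10d"


def _to_int(token: str) -> int:
    return int(token) if token.isdigit() else WORD_NUMBERS[token]


def parse_resolve_by(text: str, today: date) -> Optional[date]:
    """Guess a "Resolve by" date from the question text, or return None."""
    t = text.lower()
    if "tomorrow" in t:
        return today + timedelta(days=1)
    # Try the natural-language phrase first, then the terse shortcut.
    for pattern in (PHRASE, SHORTCUT):
        m = pattern.search(t)
        if m:
            n, unit = _to_int(m.group(1)), m.group(2)
            return today + timedelta(days=n * UNIT_DAYS[unit])
    return None
```

With `today = date(2023, 7, 25)`, both "Will I finish the draft in two weeks?" and "Ship v2 2w" resolve to 2023-08-08, while "Will it rain?" returns `None`. In that case the "Resolve by" field would simply be left for the user to fill in.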

It looks fantastic! Great job as always

I absolutely love that it infers resolving dates from the text! I was positively delighted when the field populated itself when I wrote "by the beginning of september". This is especially important on mobile.

Excited to see if this is a useful tool. Very polished, nice work!