This is the summary of the report with additional images (and some new text to explain them). The full 90+ page report (and a link to its 80+ page appendix) is on our website.

Summary

This report forms part of our work to conduct cost-effectiveness analyses of interventions and charities based on their effect on subjective wellbeing, measured in terms of wellbeing-adjusted life years (WELLBYs). This is a working report that will be updated over time, so our results may change. This report aims to achieve six goals, listed below:

1. Update our original meta-analysis of psychotherapy in low- and middle-income countries.

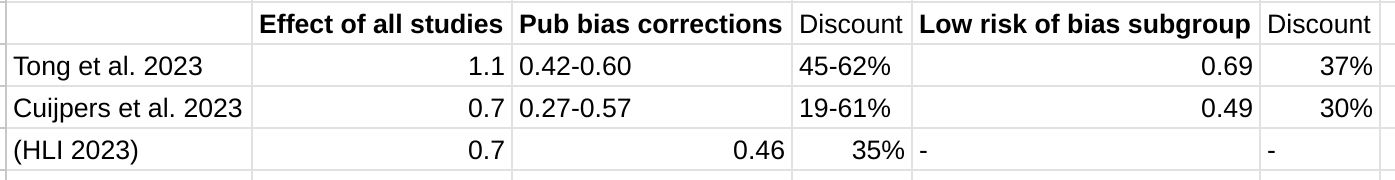

In our updated meta-analysis we performed a systematic search, screening and sorting through 9390 potential studies. At the end of this process, we included 74 randomised controlled trials (the previous analysis had 39). We find that psychotherapy improves the recipient’s wellbeing by 0.7 standard deviations (SDs), which decays over 3.4 years, and leads to a benefit of 2.69 (95% CI: 1.54, 6.45) WELLBYs. This is lower than our previous estimate of 3.45 WELLBYs (McGuire & Plant, 2021b) primarily because we added a novel adjustment factor of 0.64 (a discount of 36%) to account for publication bias.

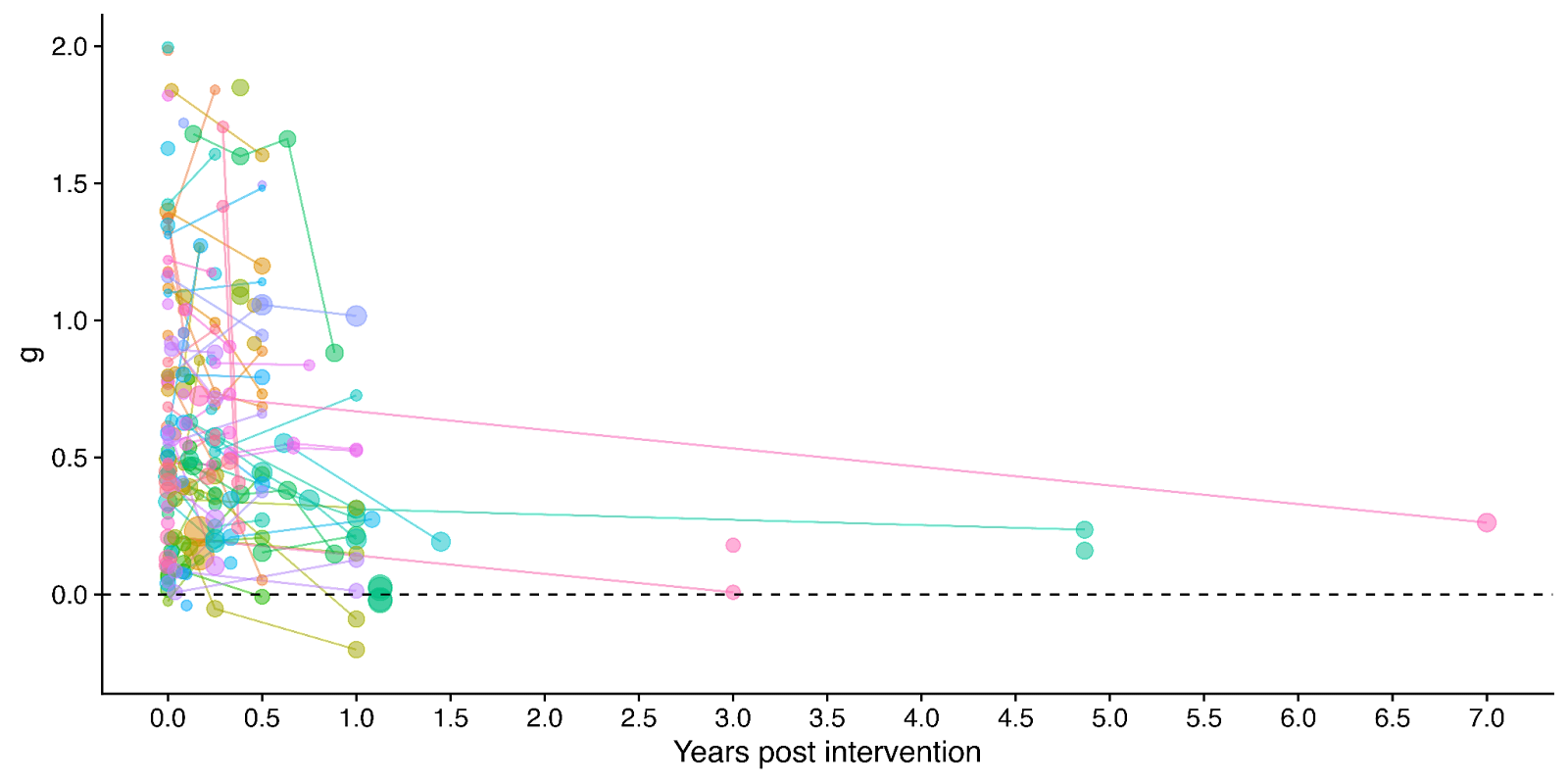

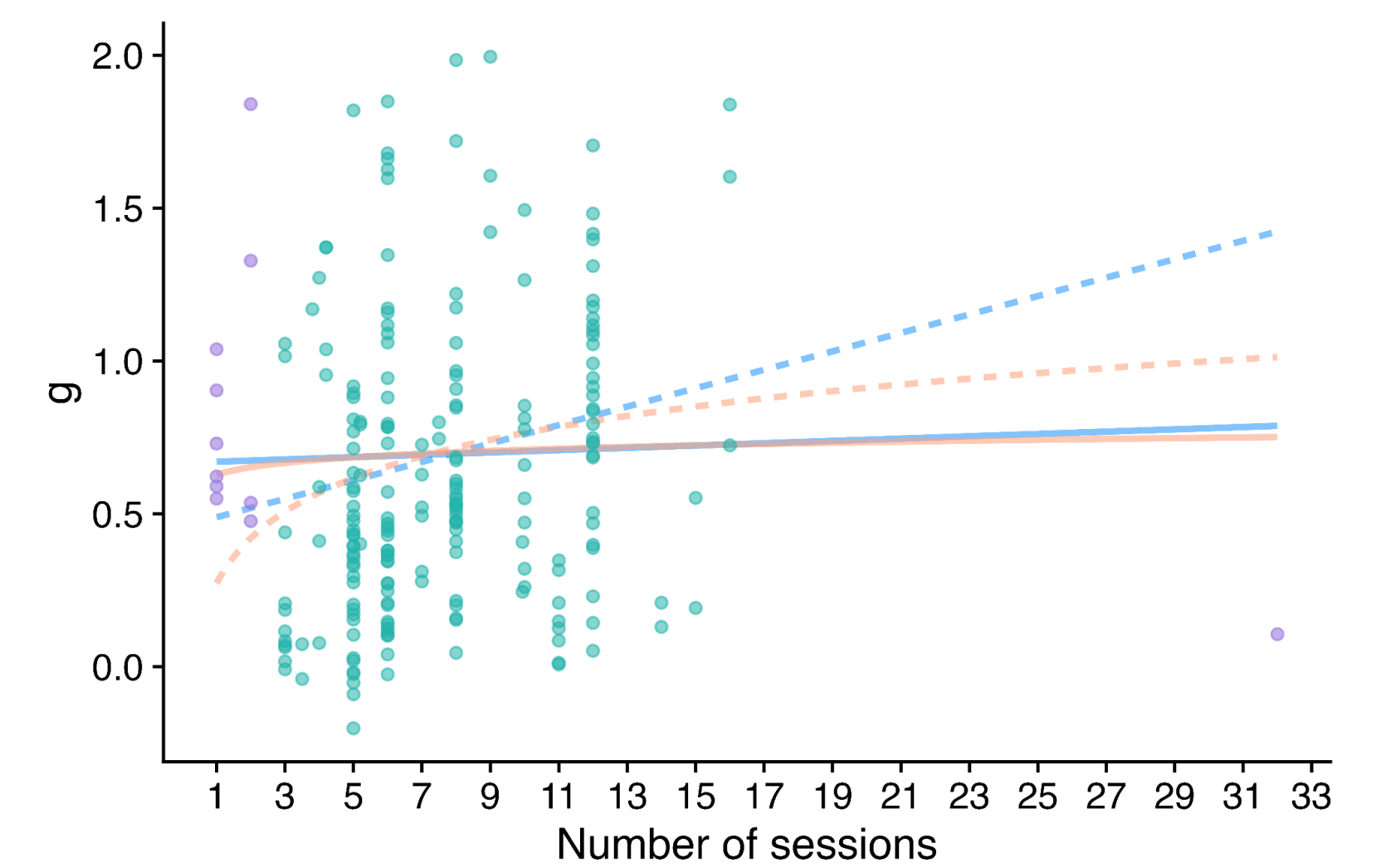

Figure 1: Distribution of the effects for the studies in the meta-analysis, measured in standard deviations change (Hedges’ g) and plotted over time of measurement. The size of the dots represents the sample size of the study. The lines connecting dots indicate follow-up measurements of specific outcomes over time within a study. The average effect is measured 0.37 years after the intervention ends. We discuss the challenges related to integrating unusually long follow-ups in Sections 4.2 and 12 in the report.

2. Update our original estimate of the household spillover effects of psychotherapy.

We collected 5 (previously 2) RCTs to inform our estimate of household spillover effects. We now estimate that the average household member of a psychotherapy recipient benefits 16% as much as the direct recipient (previously 38%). See McGuire et al. (2022b) for our previous report-length treatment of household spillovers.

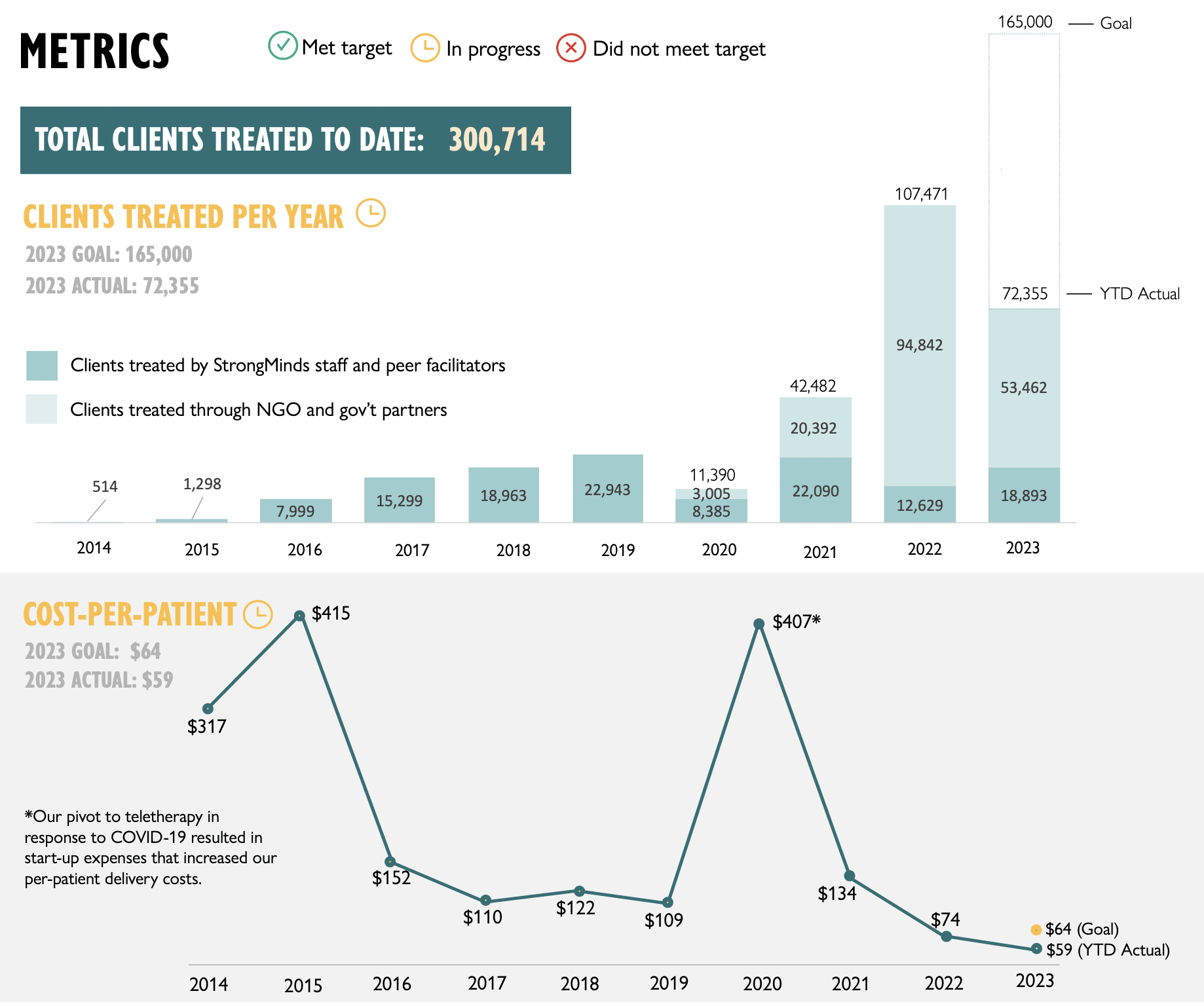

3. Update our original cost-effectiveness analysis of StrongMinds, an NGO that provides group interpersonal psychotherapy in Uganda and Zambia.

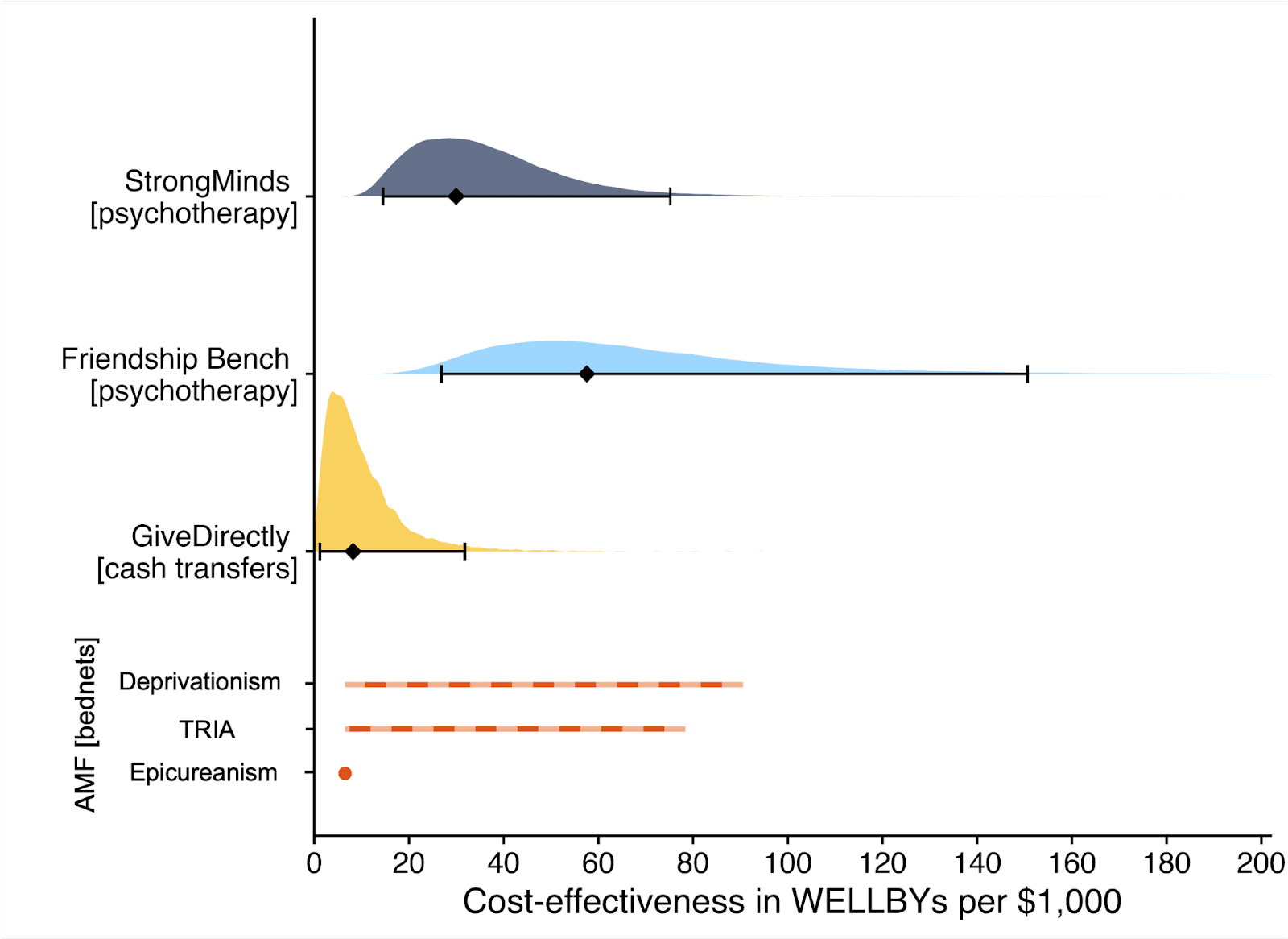

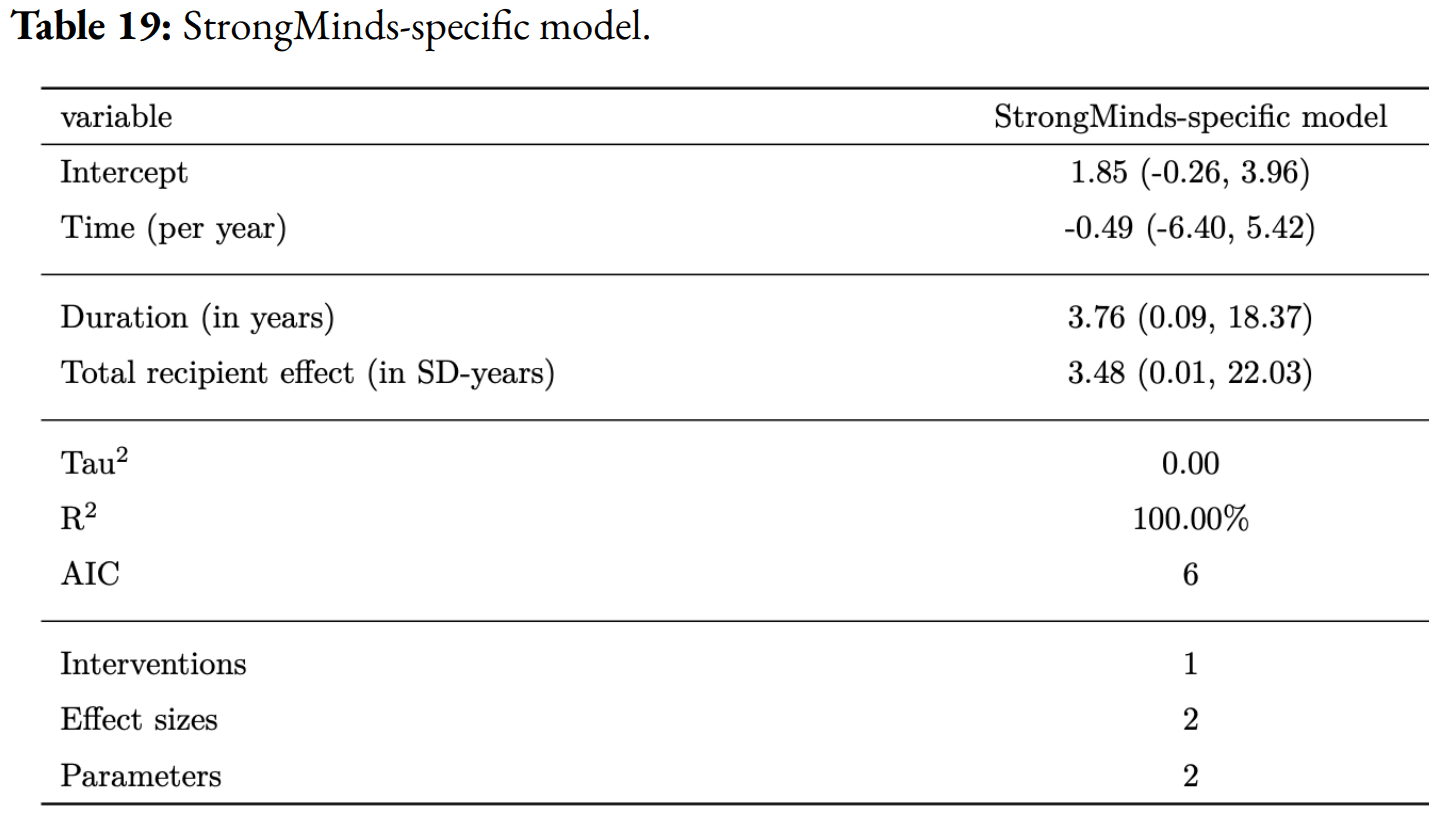

We estimate that a $1,000 donation results in 30 (95% CI: 15, 75) WELLBYs, a 52% reduction from our previous estimate of 62 (see our changelog website page). The cost per person treated for StrongMinds has declined to $63 (previously $170). However, the estimated effect of StrongMinds has also decreased because of smaller household spillovers, StrongMinds-specific characteristics and evidence which suggest smaller-than-average effects, and our inclusion of a discount for publication bias.
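The headline figures in this paragraph can be sanity-checked with a few lines of arithmetic. The sketch below uses only the rounded numbers quoted above; the "WELLBYs per person treated" it derives is an implied back-of-envelope quantity, not a figure taken from the report, and the report's own calculation may use unrounded inputs.

```python
# Back-of-envelope check using only the rounded headline figures quoted above.
donation_usd = 1_000
cost_per_person_usd = 63     # StrongMinds cost per person treated
wellbys_per_donation = 30    # new point estimate per $1,000
previous_wellbys = 62        # previous point estimate per $1,000

# How many people a $1,000 donation treats at the quoted cost.
people_treated = donation_usd / cost_per_person_usd

# Implied total effect (including household spillovers) per person treated.
wellbys_per_person = wellbys_per_donation / people_treated

# Relative change between the previous and current point estimates.
relative_reduction = (previous_wellbys - wellbys_per_donation) / previous_wellbys

print(f"People treated per $1,000: {people_treated:.1f}")
print(f"Implied WELLBYs per person treated: {wellbys_per_person:.2f}")
print(f"Reduction from previous estimate: {relative_reduction:.0%}")
```

On these inputs a $1,000 donation treats about 16 people, which implies roughly 1.9 WELLBYs per person treated, and the drop from 62 to 30 is about 52%, matching the figure stated above.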

The only completed RCT of StrongMinds is the long anticipated study by Baird and co-authors, which has been reported to have found a “small” effect (another RCT is underway). However, this study is not published, so we are unable to include its results and unsure of its exact details and findings. Instead, we use a placeholder value to account for this anticipated small effect as our StrongMinds-specific evidence.[1]

4. Evaluate the cost-effectiveness of Friendship Bench, an NGO that provides individual problem solving therapy in Zimbabwe.

We find a promising but more tentative initial cost-effectiveness estimate for Friendship Bench of 58 (95% CI: 27, 151) WELLBYs per $1,000. Our analysis of Friendship Bench is more tentative because our evaluation of their programme and implementation has been more shallow. It has 3 published RCTs which we use to inform our estimate of the effects of Friendship Bench. We plan to evaluate Friendship Bench in more depth in 2024.

5. Update our charity evaluation methodology.

We improved our methodology for combining our meta-analysis of psychotherapy with charity-specific evidence. Our new method uses Bayesian updating, which provides a formal, statistical basis for combining evidence (previously we used subjective weights). Our rich meta-analytic dataset of psychotherapy trials in LMICs allowed us to predict the effect of charities based on characteristics of their programme such as expertise of the deliverer, whether the therapy was individual or group-based, and the number of sessions attended (previously we used a more rudimentary version of this). We also applied a downwards adjustment for a phenomenon where sample restrictions common to psychotherapy trials inflate effect sizes. We think the overall quality of evidence for psychotherapy is ‘moderate’.

6. Update our comparison to other charities.

Finally, we compare StrongMinds and Friendship Bench to GiveDirectly cash transfers, which we estimated as 8 (95% CI: 1, 32) WELLBYs per $1,000 (McGuire et al., 2022b). We find here that StrongMinds is 30 (95% CI: 15, 75) WELLBYs per $1,000. Hence, comparing the point estimates, we now estimate that, in WELLBYs, StrongMinds is 3.7x (previously 8x) as cost-effective as GiveDirectly and Friendship Bench is 7.0x as cost-effective as GiveDirectly.
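As a rough check, the multiples can be recomputed from the rounded point estimates quoted in this summary. This is a minimal sketch: the helper name `multiple_of` is made up for illustration, and the small gaps between its results and the quoted 3.7x and 7.0x are presumably due to the report using unrounded inputs (that explanation is an assumption, not something the summary states).

```python
# Rounded point estimates quoted in this summary (WELLBYs per $1,000).
GIVEDIRECTLY = 8
STRONGMINDS = 30
FRIENDSHIP_BENCH = 58


def multiple_of(charity: float, benchmark: float) -> float:
    """Cost-effectiveness of a charity as a multiple of a benchmark."""
    return charity / benchmark


print(f"StrongMinds vs GiveDirectly: {multiple_of(STRONGMINDS, GIVEDIRECTLY):.2f}x")
print(f"Friendship Bench vs GiveDirectly: {multiple_of(FRIENDSHIP_BENCH, GIVEDIRECTLY):.2f}x")
```

From the rounded inputs the ratios come out at 3.75x and 7.25x, close to but not identical with the quoted multiples.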

These estimates are largely determined by our estimates of household spillover effects, but the evidence on these effects is much weaker for psychotherapy than cash transfers. It is worth noting that if we only consider the effects on the direct recipient, this increases psychotherapy’s WELLBY effects relative to cash transfers - StrongMinds and Friendship Bench move to 10x and 21x as cost-effective as GiveDirectly, respectively. But it reduces the cost-effectiveness compared to antimalarial bednets. We also present and discuss (Section 12 in the report) how sensitive these results are to the different analytical choices we could have made in our analysis.

Figure 2: Comparison of charity cost-effectiveness. The diamonds represent the central estimate of cost-effectiveness (i.e., the point estimates). The shaded areas are probability density distributions and the solid whiskers represent the 95% confidence intervals for StrongMinds, Friendship Bench, and GiveDirectly. The lines for AMF (the Against Malaria Foundation) are different from the others.[2] Deworming charities are not shown, because we are very uncertain of their cost-effectiveness.

We think this is a moderate-to-in-depth analysis, where we have reviewed most of the available evidence and made many improvements to our methodology. We view the quality of evidence as ‘moderate to high’ for understanding the effect of psychotherapy on its direct recipients in general, ‘low’ for household spillovers, and ‘low to moderate’ for the charity-specific evidence for psychotherapy (StrongMinds and Friendship Bench). Therefore, we see the overall quality of evidence as ‘moderate’.

This is a working report, and results may change over time. We welcome feedback to improve future versions.

Notes

Author note: Joel McGuire, Samuel Dupret, and Ryan Dwyer contributed to the conceptualization, investigation, analysis, data curation, and writing of the project. Michael Plant contributed to the conceptualization, supervision, and writing of the project. Maxwell Klapow contributed to the systematic search and writing.

Reviewer note: We thank, in chronological order, the following reviewers: David Rhys Bernard (for trajectory over time), Ismail Guennouni (for multilevel methodology), Katy Moore (general), Barry Grimes (general), Lily Yu (charity costs), Peter Brietbart (general), Gregory Lewis (general), Ishaan Guptasarma (general), Lingyao Tong (meta-analysis methods and results), Lara Watson (communications).

Charity evaluation note: We thank Jess Brown, Andrew Fraker, and Elly Atuhumuza for providing information about StrongMinds and for their feedback about StrongMinds specific details. We also thank Lena Zamchiya and Ephraim Chiriseri for providing information about Friendship Bench.

Appendix note: This report will be accompanied by an online appendix that we reference for more detail about our methodology and results. The appendix is a working document and will, like this report, be updated over time.

Updates note: This is the first draft of a working paper. New versions will be uploaded over time.

[1] We use a study that has similar features to the StrongMinds intervention and then discount its results by 95% in the expectation of the Baird et al. study finding a small effect. Note that we do not only rely on StrongMinds-specific evidence in our analysis but combine charity-specific evidence with the results from our general meta-analysis of psychotherapy in a Bayesian manner.

[2] They represent the upper and lower bound of cost-effectiveness for different philosophical views (not 95% confidence intervals as we haven’t represented any statistical uncertainty for AMF). Think of them as representing moral uncertainty, rather than empirical uncertainty. The upper bound represents the assumptions most generous to extending lives (a low neutral point and age of connectedness) and the lower bound represents those most generous to improving lives (a high neutral point and age of connectedness). The assumptions depend on the neutral point and one’s philosophical view of the badness of death (see Plant et al., 2022, for more detail). These views are summarised as: Deprivationism (the badness of death consists of the wellbeing you would have had if you’d lived longer); Time-relative interest account (TRIA; the badness of death for the individual depends on how ‘connected’ they are to their possible future self. Under this view, lives saved at different ages are assigned different weights); Epicureanism (death is not bad for those who die – this has one value because the neutral point doesn’t affect it).

HLI kindly provided me with an earlier draft of this work to review a couple of weeks ago. Although things have gotten better, I noted what I saw as major problems with the draft as-is, and recommended HLI take its time to fix them - even though this would take a while, and likely miss the window of Giving Tuesday.

Unfortunately, HLI went ahead anyway with the problems I identified basically unaddressed. Also unfortunately (notwithstanding laudable improvements elsewhere) these problems are sufficiently major that I think potential donors are ill-advised to follow the recommendations and analysis in this report.

In essence:

Study quality

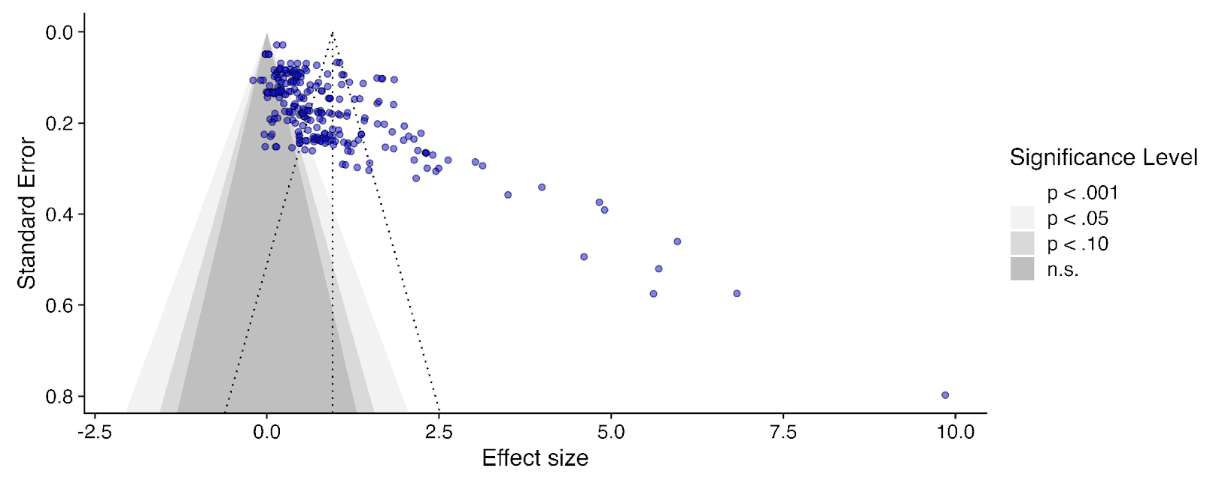

It perhaps comes as little surprise that different studies on psychotherapy in LMICs report very different results:[1]

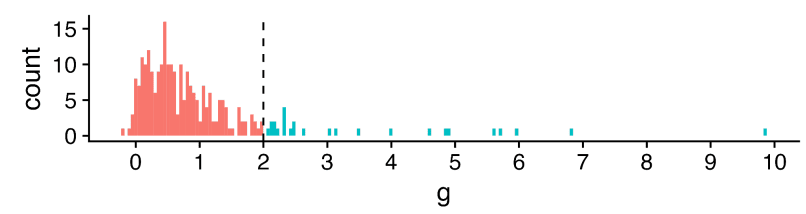

The x-axis is a standardized measure of effect size for psychotherapy in terms of wellbeing.[2] Most - but not all - show a positive effect (g > 0), but the range is vast. HLI excludes effect sizes over 2 as outliers (much more later), but 2 is already a large effect: to benchmark, it is roughly the height difference between male and female populations.

Something like a '(weighted) average effect size' across this set would look promising (~0.6) - to also benchmark, the effect size of cash transfers on (individual) wellbeing is ~0.1. Yet cash transfers (among many other interventions) have much less heterogeneous results: more like "0.1 +/- 0.1", not ~"0.6 multiply-or-divide by an integer". It seems important to understand what is going on.

One hope would be that this heterogeneity can be explained in terms of the intervention and length of follow-up. Different studies did (e.g.) different sorts of psychotherapy, did more or less of it, and measured the outcomes at different points afterwards. Once we factor these things into our analysis, the wide distribution seen when looking at the impact of psychotherapy in general sharpens into a clearer picture for any particular psychotherapeutic intervention. One can then deploy this knowledge to assess - in particular - the likely efficacy of a charity like StrongMinds.

The report attempts this in section 4. I think a fair bottom line is that, despite these efforts, the overall picture is still very cloudy: the best model explains ~12% of the variance in effect sizes. But this best model is still better than no model (more on this later), so one can still use it to make a best guess for psychotherapeutic interventions, even if there remains a lot of uncertainty and spread.

But there could be another explanation for why there's so much heterogeneity: there are a lot of low-quality studies, and low quality studies tend to report inflated effect sizes. In the worst case, the spread of data suggesting psychotherapy's efficacy is instead a mirage, and the effect size melts under proper scrutiny.

Hence why most systematic reviews do assess the quality of included studies and their risk of bias. Sometimes this is only used to give a mostly qualitative picture alongside the evidence synthesis (e.g. 'X% of our studies have a moderate to high risk of bias') or sometimes incorporated quantitatively (e.g. 'quality score' of studies included as a predictor/moderator, grouping by 'high/moderate/low' risk of bias, etc. - although all are controversial).

HLI's report does not assess the quality of its included studies, although it plans to do so. I appreciate GRADEing 90 studies or whatever is tedious and time consuming, but skipping this step to crack on with the quantitative synthesis is very unwise:[3] any such synthesis could be hugely distorted by low quality studies. And it's not like this is a mere possibility: I showed in the previous meta-analysis that study registration status (one indicator of study quality) explained a lot of heterogeneity, and unregistered studies had on average a three times [!] greater effect size than registered ones.

The report notes it has done some things to help manage this risk. One is cutting 'outliers' (g > 2, the teal in the earlier histogram); another is extensive assessment of publication bias/small study effects. These things do help: all else equal, I'd expect bigger studies to be methodologically better ones, so adjusting for small study effects does partially 'control' for study quality; I'd also expect larger effect sizes to arise from lower-quality work, so cutting them should notch up the average quality of the studies that remain.

But I do not think they help enough[4] - these are loose proxies for what we seek to understand. Thus the findings would be unreliable in virtue of this alone until this is properly looked at. Crucially, the risk that these features could confound the earlier moderator analysis has not been addressed:[5] maybe the relationship of (e.g.) 'more sessions given -> greater effect' is actually due to studies of such interventions tending to be lower quality than the rest. When I looked last time, things like 'study size' or 'registration status' explained a lot more of the heterogeneity than (e.g.) all of the intervention moderators combined. I suspect the same will be true this time too.

Publication bias

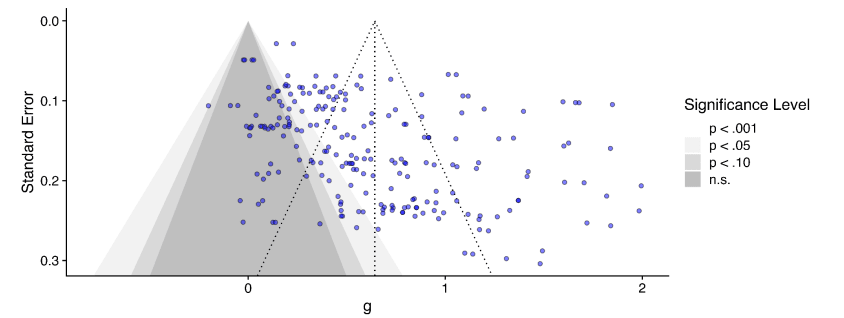

I originally suggested (6m ago?) that correction for publication bias/small study effects could be ~an integer division, so I am surprised the correction was a bit less: ~30%. Here's the funnel plot:[6]

Unsurprisingly, huge amounts of scatter, but the asymmetry, although there, does not leap off the page: the envelope of points is pretty rectangular, but you can persuade yourself it's a bit of a parallelogram, and there's a denser part of it which indeed has a trend going down and to the right (so smaller study -> bigger effect).

But this only plots effect sizes g < 2 (those red, not teal, in the histogram). If we include all the studies again, this picture looks a lot clearer - the 'long tail' of higher effects tends to come from smaller studies, which are clearly asymmetric.

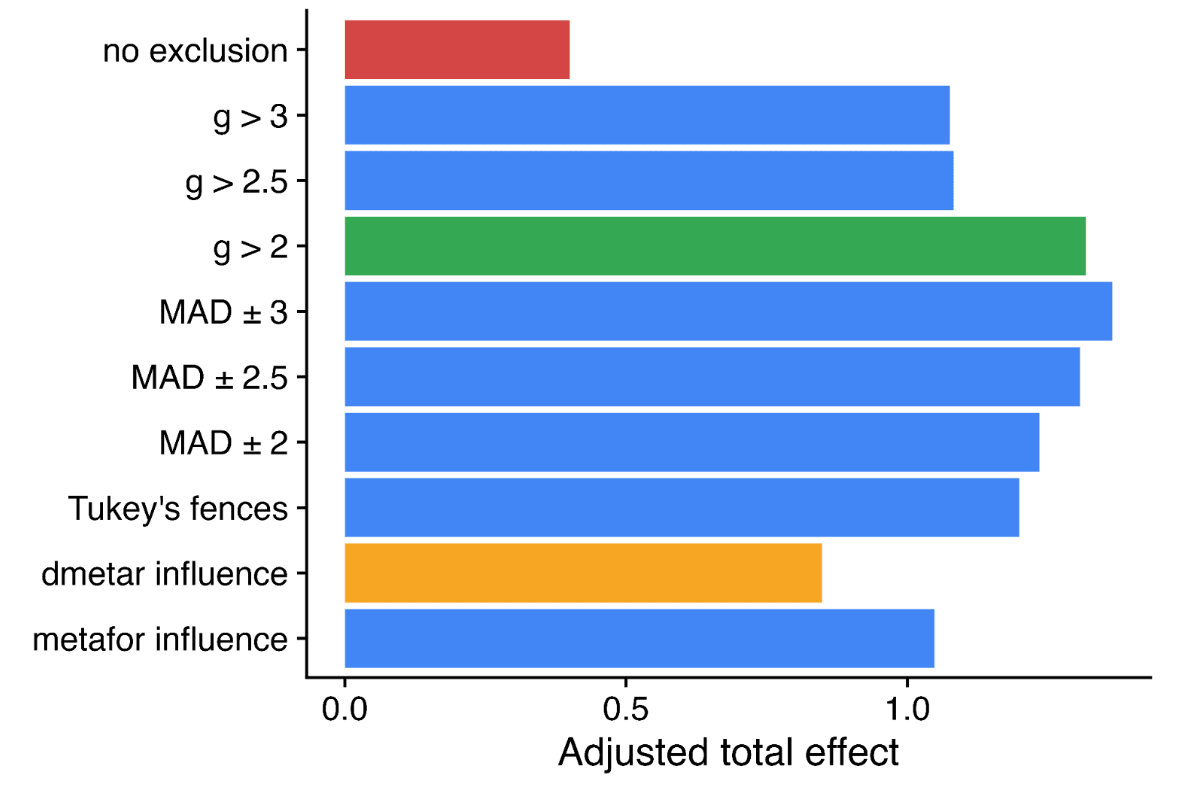

This effect, visible to the naked eye, also emerges in the statistics. The report uses a variety of numerical methods to correct for publication bias (some very sophisticated). All of them adjust the results much further downwards on the full data than when outliers are excluded to varying degrees (table B1, appendix). It would have a stark effect on the results - here's the 'bottom line' result if you take a weighted average of all the different methods, with different approaches to outlier exclusion - red is the full data, green is the outlier exclusion method the report uses.

Needless to say, this choice is highly material to the bottom line results: without excluding data, SM drops from ~3.6x GD to ~1.1x GD. Yet it doesn't get a look in for the sensitivity analysis, where HLI's 'less favourable' outlier method involves taking an average of the other methods (discounting by ~10%), but not doing no outlier exclusion at all (discounting by ~70%).[7]

Perhaps this is fine if outlier inclusion would be clearly unreasonable. But it's not: cutting data is generally regarded as dubious, and the rationale for doing so here is not compelling. Briefly:

The strongest argument given is that, in fact, some numerical methods to correct publication bias give absurd results if given the full data: i.e. one gives an adjusted effect size of -0.6, another -0.2. I could buy an adjustment that drives the effect down to roughly zero, but not one which suggests, despite almost all the data being fairly or very positive, we should conclude from these studies the real effect is actually (highly!) negative.

One could have a long argument on what the most appropriate response is: maybe just keep it, as the weighted average across methods is still sensible (albeit disappointing)? Maybe just drop those methods in particular and do an average of those giving sane answers on the full data? Should we keep g < 2 exclusion but drop p-curve analysis, as it (absurdly?) adjusts the effect slightly upwards? Maybe we should reweigh the averaging of different numerical methods by how volatile their results are when you start excluding data? Maybe pick the outlier exclusion threshold which results in the least disagreement between the different methods? Or maybe just abandon numerical correction, and just say "there's clear evidence of significant small study effects, which the current state of the art cannot reliably quantify and correct"?

So a garden of forking paths opens before us. All of these are varying degrees of 'arguable', and they do shift the bottom line substantially. One reason pre-specification is so valuable is that it ties you to a particular path before you get to peek at the results, avoiding any risk of a subconscious finger on the scale pushing you down a path of still-defensible choices that nonetheless favour a particular bottom line. Even if you didn't pre-specify, presenting your first cut as the primary analysis helps for 'nothing up my sleeve' reasons.

It may be the prespecified or initial stab doesn't do a good job of expressing the data, and a different approach does better. Yet making it clear this subsequent analysis is post-hoc cautions a reader about potential risk of bias in analysis.

Happily, HLI did make a protocol for this work before they conducted the analysis. Unfortunately, it is silent on whether outlying data would be excluded, or by what criteria. Also unfortunately, because of this (and other things, like the extensive discussion in the appendix of the value of outlier removal principally in virtue of its impact on publication bias correction), I am fairly sure the analysis with all data included was the first analysis conducted. Only after seeing the initial publication bias corrections did HLI look at the question of whether some data should be excluded. Maybe it should, but if it came second, the initial analysis should be presented first (and definitely included in the sensitivity analysis).

There's also a risk the cloud of quantification buries the qualitative lede. Publication bias is known to be very hard to correct, and despite HLI compiling multiple numerical state of the art methods, they starkly disagree on what the correction factor should be (i.e. from <~0 to > 100%). So perhaps the right answer is we basically do not know how much to discount the apparent effects seen in the PT literature given it also appears to be an extremely compromised one, and if forced to give an overall number, any 'numerical bottom line' should have even wider error bars because of this.[9]
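For readers unfamiliar with how such numerical corrections work, here is a minimal sketch of one of the simplest members of the family: an inverse-variance weighted regression of effect size on standard error, reading off the intercept as the predicted effect for a hypothetical study with zero standard error. This illustrates the general idea only; it is not claimed to be one of the methods the report uses. The function name `pet_estimate` and every data point below are invented for illustration.

```python
def pet_estimate(effects, std_errors):
    """Inverse-variance weighted regression of effect size on standard error.

    Returns (intercept, slope). The intercept is the predicted effect for a
    hypothetical study with zero standard error; a positive slope means
    smaller (noisier) studies report larger effects.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    # Weighted means of the predictor (standard error) and outcome (effect).
    x_bar = sum(w * se for w, se in zip(weights, std_errors)) / total
    y_bar = sum(w * g for w, g in zip(weights, effects)) / total
    # Weighted covariance and variance terms of the least-squares solution.
    sxy = sum(w * (se - x_bar) * (g - y_bar)
              for w, se, g in zip(weights, std_errors, effects))
    sxx = sum(w * (se - x_bar) ** 2 for w, se in zip(weights, std_errors))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical (effect size g, standard error) pairs, invented for illustration.
studies = [(0.25, 0.08), (0.40, 0.12), (0.35, 0.15), (0.70, 0.22),
           (0.90, 0.30), (1.10, 0.35), (2.60, 0.45), (3.20, 0.50)]

for label, subset in [("all studies", studies),
                      ("g < 2 only", [s for s in studies if s[0] < 2])]:
    effects, std_errors = zip(*subset)
    intercept, slope = pet_estimate(effects, std_errors)
    print(f"{label}: corrected effect = {intercept:.2f}, slope = {slope:.2f}")
```

On these made-up numbers the fit on the full data gives a lower corrected effect than the fit restricted to g < 2, which is the kind of sensitivity to the exclusion rule discussed above. Real corrections are more elaborate, but share this dependence on which small, large-effect studies are kept.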

Bayesian methods

I previously complained that the guesstimate/BOTEC-y approach HLI used in integrating information from the meta-analysis and the StrongMinds trial data couldn't be right, as it didn't pass various sanity tests: e.g. still recommending SM as highly effective even if you set the trial data to zero effect. HLI now has a much cleverer Bayesian approach to combining sources of information. On the bright side, this is mechanistically much clearer as well as much cleverer. On the downside, the deck still looks pretty stacked.

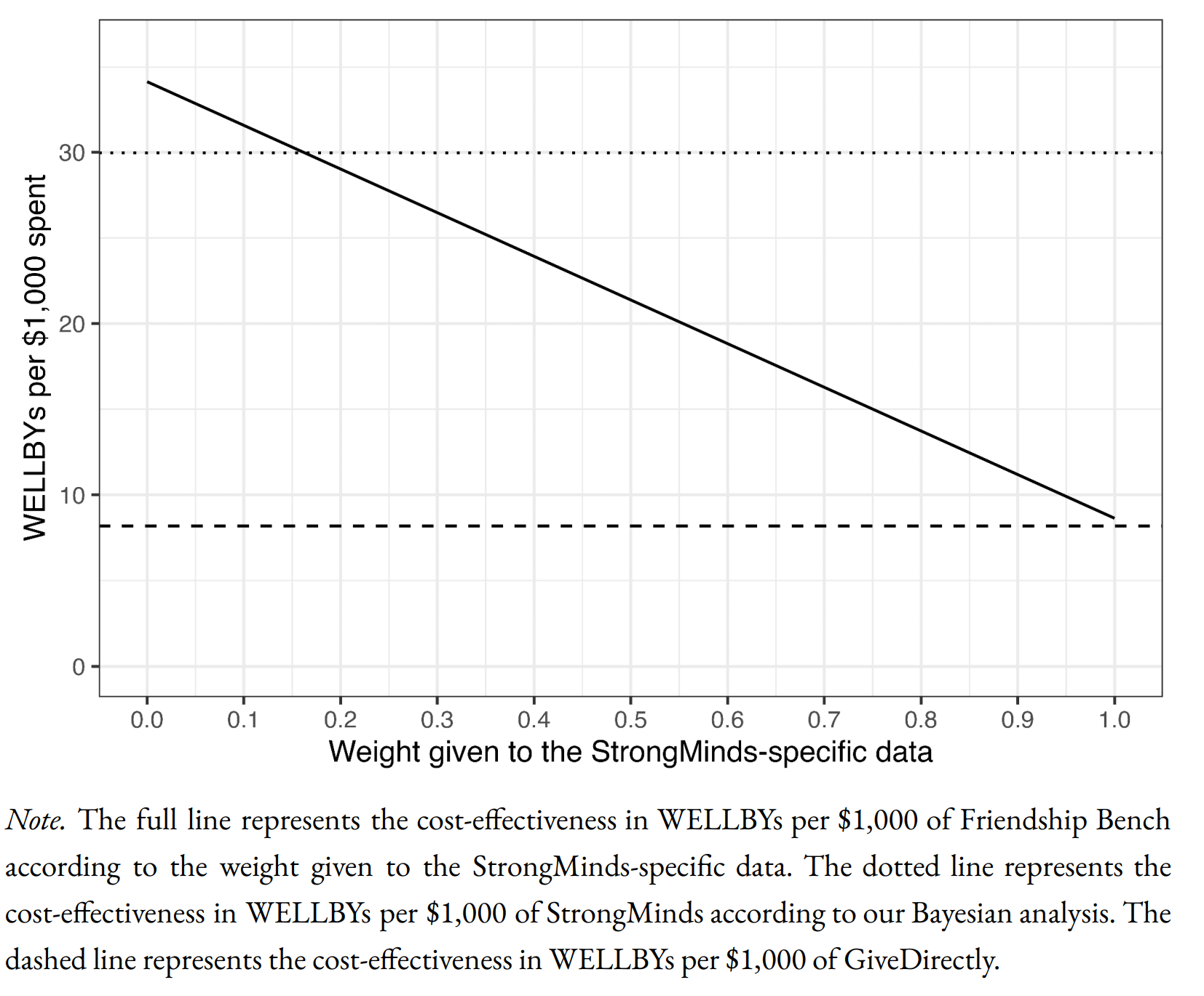

Starting at the bottom, here's how HLI's Bayesian method compares SM to GD:

The informed prior (in essence) uses the meta-analysis findings with some Monte Carlo to get an expected effect for an intervention with StrongMinds-like traits (e.g. same number of sessions, same deliverer, etc.). The leftmost point of the solid line gives the expectation for the prior: so the prior is that SM is ~4x GD's cost-effectiveness (dashed line).

The x axis is how much weight one gives to SM-specific data. Of interest, the line slopes down, so the data gives a negative update on SM's cost-effectiveness. This is because HLI - in anticipation of the Baird/Ozler RCT likely showing disappointing results - discounted the effect derived from the original SM-specific evidence by a factor of 20, so the likelihood is indeed much lower than the prior. Standard theory gives the appropriate weighting of this vs. the prior, so you adjust down a bit, but not a lot, from the prior (dotted line).

Despite impeccable methods, these results are facially crazy. To illustrate:

I take it most readers would disagree with the model here too - e.g. if indeed the only RCT on StrongMinds is basically flat, that should be sufficient to demote SM from putative 'top charity' status.

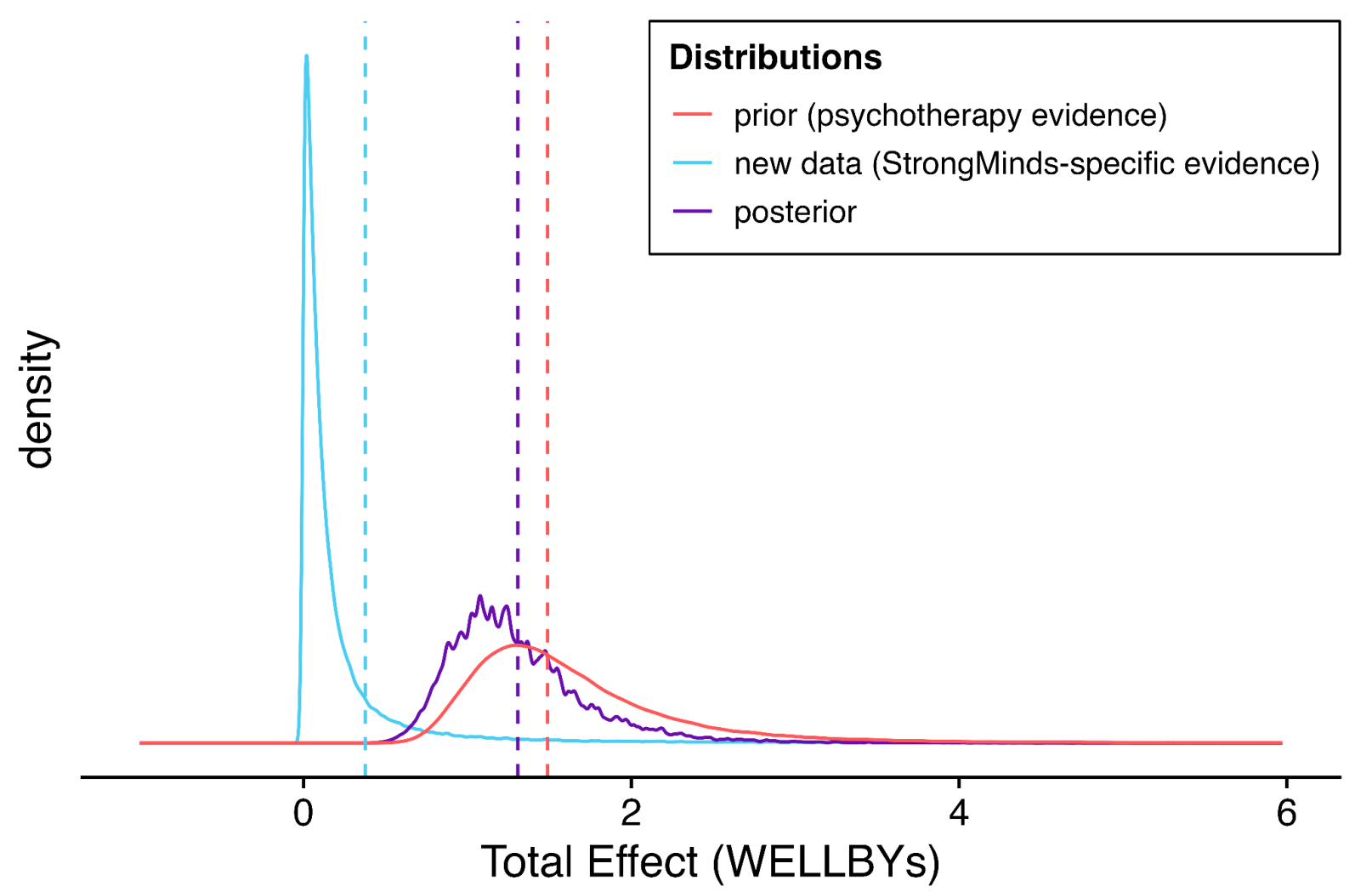

I think I can diagnose the underlying problem: Bayesian methods are very sensitive to the stipulated prior. In this case, the prior is likely too high, and definitely too narrow/overconfident. See this:

Per the dashed and dotted lines in the previous figure, the 'GiveDirectly bar' is fractionally below the blue dashed line (the point estimate of the stipulated-SM data). The prior distribution is given in red. So the expectation (red dashed line) is indeed ~4x further from the origin (see above).

The solid red curve gives the distribution. Eyeballing the integrals reveals the problem: the integral of this distribution from the blue dashed line to infinity gives the model's confidence that psychotherapy interventions would be more cost-effective than GD. This is at least 99% of the area, if not 99.9% - 99.99%+. A fortiori, this prior asserts it is essentially certain the intervention is beneficial (total effect >0).

I don't think anyone should think that any intervention is P > 0.99 more cost-effective than GiveDirectly (or P < 0.0001, or whatever, that it is in fact harmful) as a prior,[12] but if one did, it would indeed take masses of evidence to change one's mind. Hence the very sluggish moves in response to adverse data (the purple line suggests the posterior is also 99%+ confident SM is better than GiveDirectly).
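The sluggishness can be illustrated with the textbook conjugate normal-normal update. This is a sketch under stated assumptions, not HLI's model: cost-effectiveness is expressed in multiples of GiveDirectly, the prior mean of 4 echoes the '~4x' read off the figure, and the prior widths and the 'disappointing data' values (mean 1.0, SD 2.0) are hypothetical numbers chosen purely for illustration.

```python
import math


def normal_update(prior_mean, prior_sd, data_mean, data_sd):
    """Conjugate normal-normal update; returns (posterior_mean, posterior_sd)."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / data_sd ** 2
    post_precision = prior_precision + data_precision
    post_mean = (prior_precision * prior_mean
                 + data_precision * data_mean) / post_precision
    return post_mean, math.sqrt(1.0 / post_precision)


def prob_above(threshold, mean, sd):
    """P(X > threshold) for X ~ Normal(mean, sd)."""
    z = (threshold - mean) / sd
    return 0.5 * (1.0 - math.erf(z / math.sqrt(2.0)))


GD_BAR = 1.0        # GiveDirectly's cost-effectiveness, by definition of the unit
PRIOR_MEAN = 4.0    # '~4x GD' prior expectation (read off the figure)
DATA_MEAN, DATA_SD = 1.0, 2.0   # hypothetical disappointing charity-specific data

for label, prior_sd in [("narrow prior", 1.0), ("broad prior", 4.0)]:
    post_mean, post_sd = normal_update(PRIOR_MEAN, prior_sd, DATA_MEAN, DATA_SD)
    print(f"{label}: P(prior > GD) = {prob_above(GD_BAR, PRIOR_MEAN, prior_sd):.3f}, "
          f"posterior mean = {post_mean:.2f}x, "
          f"P(posterior > GD) = {prob_above(GD_BAR, post_mean, post_sd):.3f}")
```

With the narrow prior (SD 1), the prior already puts more than 99% of its mass above the GiveDirectly bar, and the same data only moves the mean from 4.0x to 3.4x. With the broad prior (SD 4), identical data pulls the mean down to 1.6x. The data are the same in both cases; only the stipulated confidence of the prior differs.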

I think I can also explain the underlying problem of this underlying problem. HLI constructs its priors exclusively from its primary meta-analytic model (albeit adapted to match the intervention of interest, and recalculated excluding any studies done on this intervention to avoid double counting). Besides the extra uncertainty (so spread) likely implied by the variety of factors covered in the sensitivity analysis, in real life our prior would be informed by other things too: the prospect that entire literatures can be misguided, a general sense (at least for me) that cash transfers are easy to beat in principle, but much harder in practice, and so on.

In reality, our prior-to-seeing-the-metaanalysis prior would be very broad and probably reasonably pessimistic, and (even if I'm wrong about the shortcomings I suggest earlier), the 'update' on reading it would be a bit upwards, and a little narrower, but not by that much. In turn, the 'update' on seeing (e.g.) disappointing RCT results for a given PT intervention would be a larger shift downwards, netting out that this was unlikely better than GiveDirectly after all.

If the Bayesian update was meant only to be a neat illustration, I would have no complaint. But instead the bottom line recommendations and assessments rely upon it - that readers should indeed adopt the supposed prior the report proposes about the efficacy of PT interventions in general. Crisply, I doubt the typical reader seriously believes (e.g.) basically any psychotherapy intervention in LMICs, so long as cost per patient is <$100, is a ~certain bet to beat cash transfers. If not, they should question the report's recommendations too.

Summing up

Criticising is easier than doing better. But I think this is a case where a basic qualitative description tells the appropriate story, the sophisticated numerical methods are essentially a 'bridge too far' given the low quality of what they have to work with, and so confuse rather than clarify the matter. In essence:

All of the figures are taken from the report and appendix. The transparency is praiseworthy, although it is a pity that, despite largely looking at the right things, the report often mistakes the right conclusions to draw.

With all the well-worn caveats about measuring well-being.

The Cochrane handbook section on meta-analysis is very clear on this (but to make it clearer, I add emphasis)

As a WIP, the data and code for this report are not yet out, but in my previous statistical noodling on the last one both study size and registration status significantly moderated the effect downwards when included together, suggesting indeed the former isn't telling you everything re. study quality.

The report does mention later (S10.2) controlling a different analysis for study quality, when looking at the effect of sample size itself:

I don't think this analysis is included in the appendix or similar, but later text suggests the 'study quality' correction is a publication bias adjustment. This analysis is least fruitful when applied to study scale, as measures of publication bias are measures of study size: so finding the effects of study scale are attenuated when you control for a proxy of study scale is uninformative.

What would be informative is the impact measures of 'study scale' or publication bias have on the coefficients for the primary moderators. Maybe they too could end up 'controlled away with quality variables, more of which that we haven't considered might be included'?

There are likely better explanations of funnel plots etc. online, but my own attempt is here.

The report charts a much wiser course on a different "Outlier?" question: whether to include very long follow-up studies, where exclusion would cut the total effect in half. I also think including everything here is fine too, but the report's discussion in S4.2 clearly articulates the reason for concern, displays what impact inclusion vs. exclusion has, and carefully interrogates the outlying studies to see whether they have features (beyond that they report 'outlying' results) which warrants exclusion. They end up going 'half-and-half', but consider both full exclusion and inclusion in sensitivity analysis.

If you are using study size as an (improvised) measure of study quality, excluding the smallest studies because on an informal read they are particularly low quality makes little sense: this is the trend you are interested in.

A similar type of problem crops up when one is looking at the effect of 'dosage' on PT efficacy.

The solid lines are the fit (blue linear, orange log) on the full data, whilst the dashed lines are fits with extreme values of dosage - small or large - excluded (purple). The report freely concedes its choices here are very theory led rather than data driven. It is also worth saying that getting more of a trend here makes a rod for SM and Friendship Bench's back, as these deliver smaller numbers of sessions than the average, so adjusting with the dashed lines and not the solid ones reduces the expected effect.

Yet the main message I would take from the scatter plot is the data indeed looks very flat, and there is no demonstrable dose-response relationship of PT. Qualitatively, this isn't great for face validity.

To its credit, the write-up does highlight this, but does not seem to appreciate the implications are crazy: any PT intervention, so long as it is cheap enough, should be thought better than GD, even if studies upon it show very low effect size (which would usually be reported as a negative result, as almost any study in this field would be underpowered to detect effects as low as are being stipulated):

The report describes this clearly itself, but seems to think this is a feature rather than a bug (my emphasis):

I'm not even sure that "P > 0.99 better than GD" would be warranted as a posterior even for a GiveWell recommended top charity, and I'd guess the GW staff who made the recommendation would often agree.

I'm feeling confused by these two statements:

The first statement says HLI's recommendation is unsafe, but the second implies it is reasonable as long as the sensitivity is clearly explained. I'm grateful to Greg for presenting the analysis paths which lead to SM < GD, but it's unclear to me how much ...