This is the summary of the report with additional images (and some new text to explain them). The full 90+ page report (and a link to its 80+ page appendix) is on our website.

Summary

This report forms part of our work to conduct cost-effectiveness analyses of interventions and charities based on their effect on subjective wellbeing, measured in terms of wellbeing-adjusted life years (WELLBYs). This is a working report that will be updated over time, so our results may change. This report aims to achieve six goals, listed below:

1. Update our original meta-analysis of psychotherapy in low- and middle-income countries.

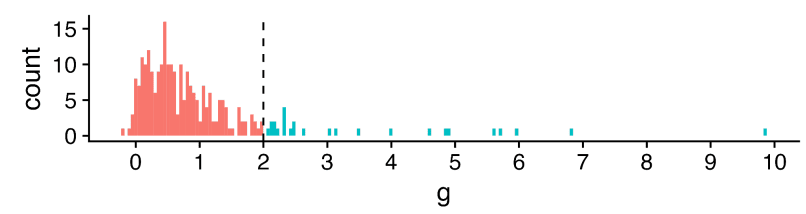

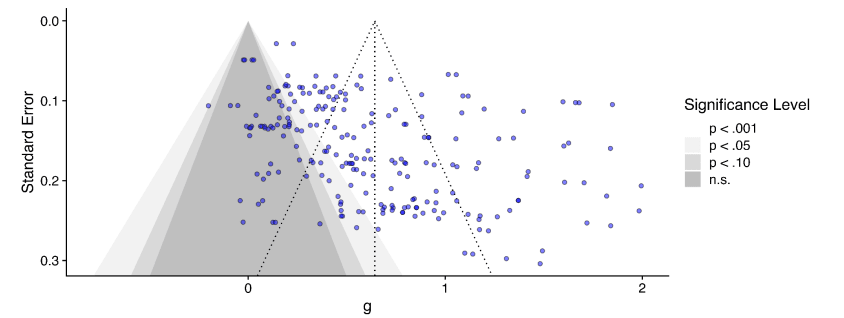

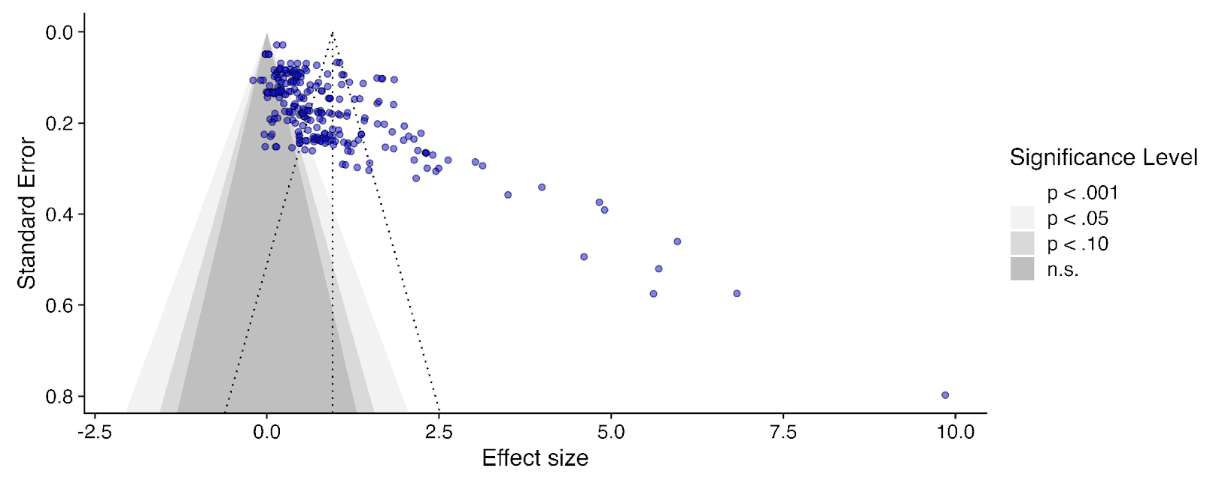

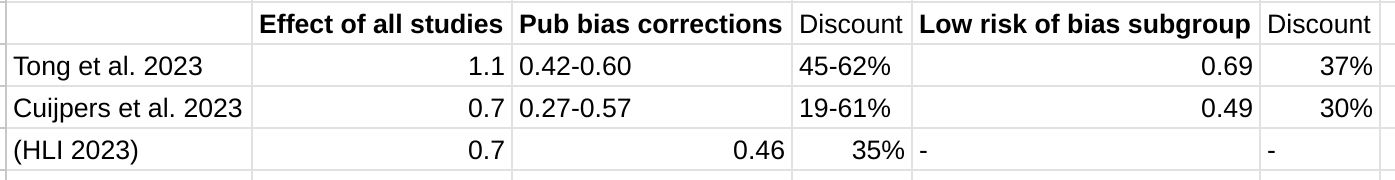

In our updated meta-analysis we performed a systematic search, screening and sorting through 9390 potential studies. At the end of this process, we included 74 randomised controlled trials (the previous analysis had 39). We find that psychotherapy improves the recipient’s wellbeing by 0.7 standard deviations (SDs), which decays over 3.4 years, and leads to a benefit of 2.69 (95% CI: 1.54, 6.45) WELLBYs. This is lower than our previous estimate of 3.45 WELLBYs (McGuire & Plant, 2021b) primarily because we added a novel adjustment factor of 0.64 (a discount of 36%) to account for publication bias.

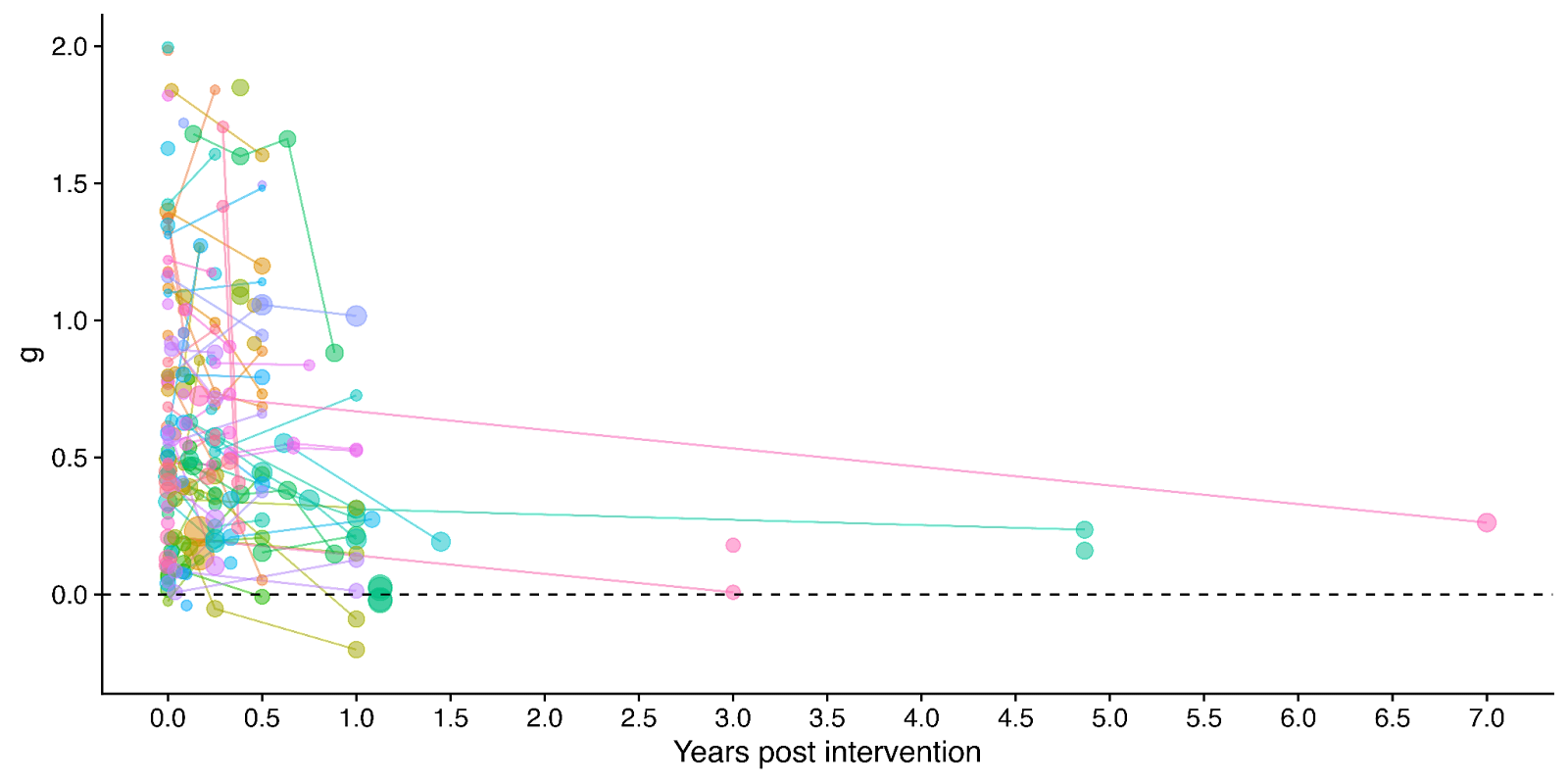

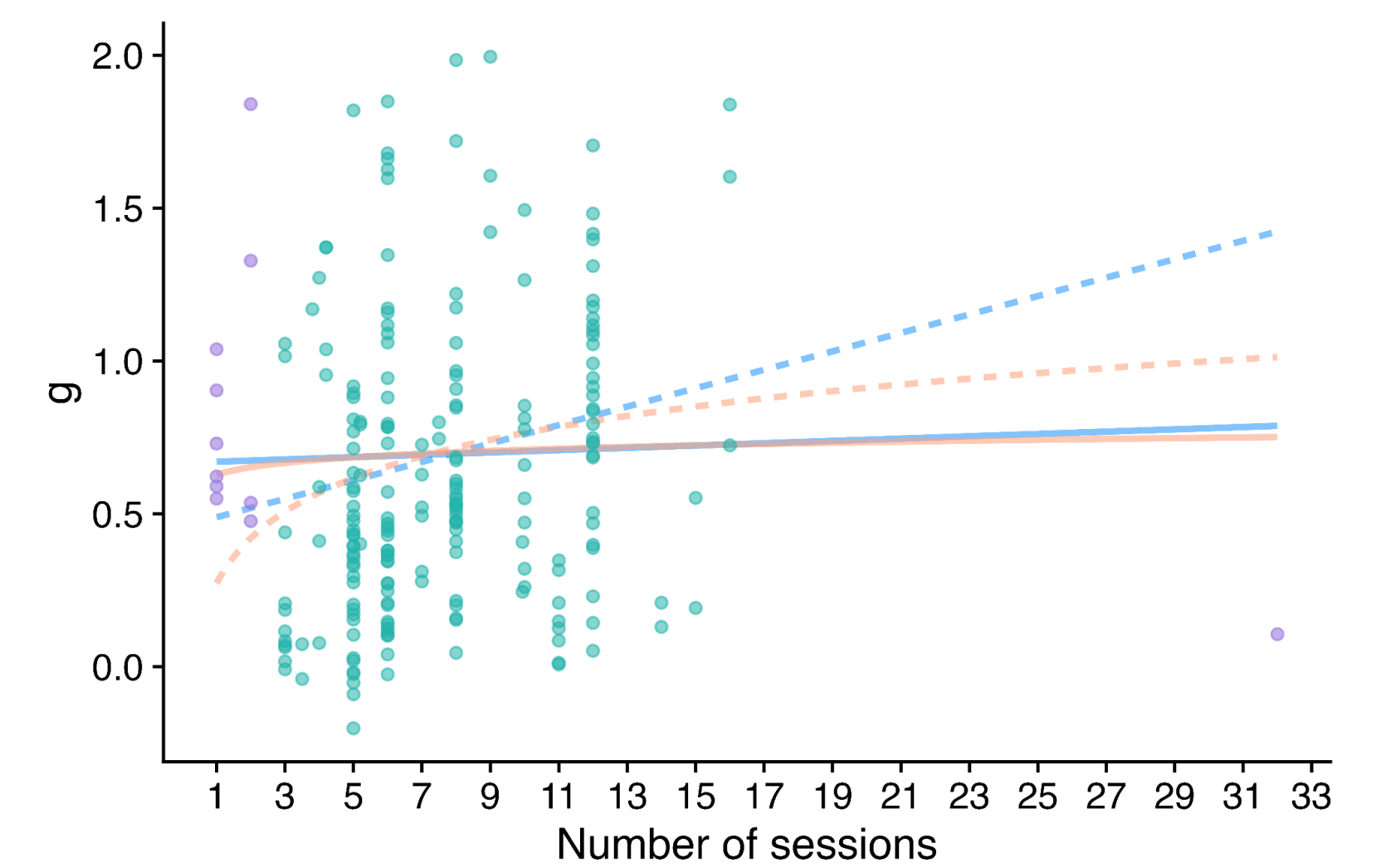

Figure 1: Distribution of the effects for the studies in the meta-analysis, measured in standard deviations change (Hedges’ g) and plotted over time of measurement. The size of the dots represents the sample size of the study. The lines connecting dots indicate follow-up measurements of specific outcomes over time within a study. The average effect is measured 0.37 years after the intervention ends. We discuss the challenges related to integrating unusually long follow-ups in Sections 4.2 and 12 in the report.

2. Update our original estimate of the household spillover effects of psychotherapy.

We collected 5 (previously 2) RCTs to inform our estimate of household spillover effects. We now estimate that the average household member of a psychotherapy recipient benefits 16% as much as the direct recipient (previously 38%). See McGuire et al. (2022b) for our previous report-length treatment of household spillovers.

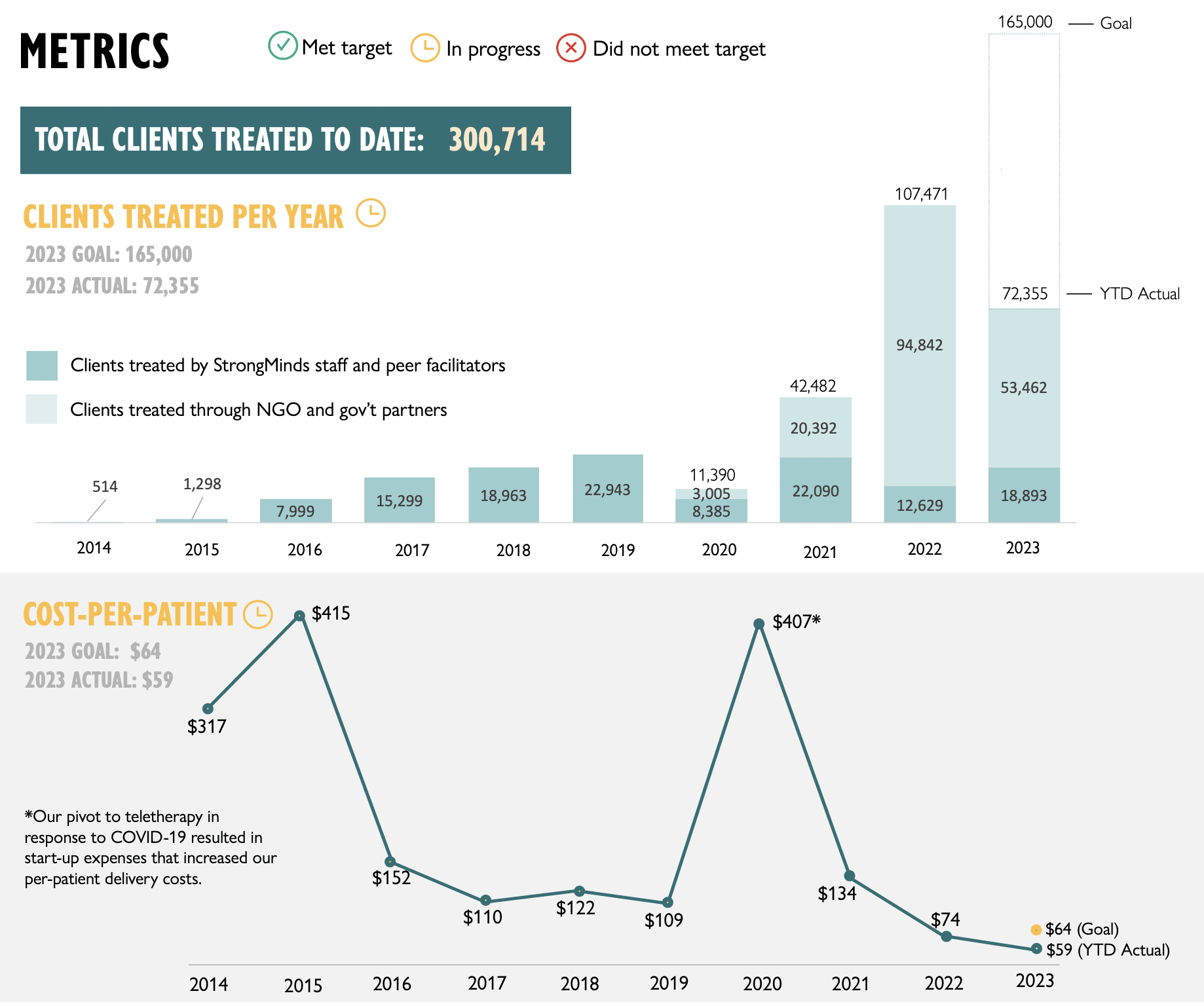

3. Update our original cost-effectiveness analysis of StrongMinds, an NGO that provides group interpersonal psychotherapy in Uganda and Zambia.

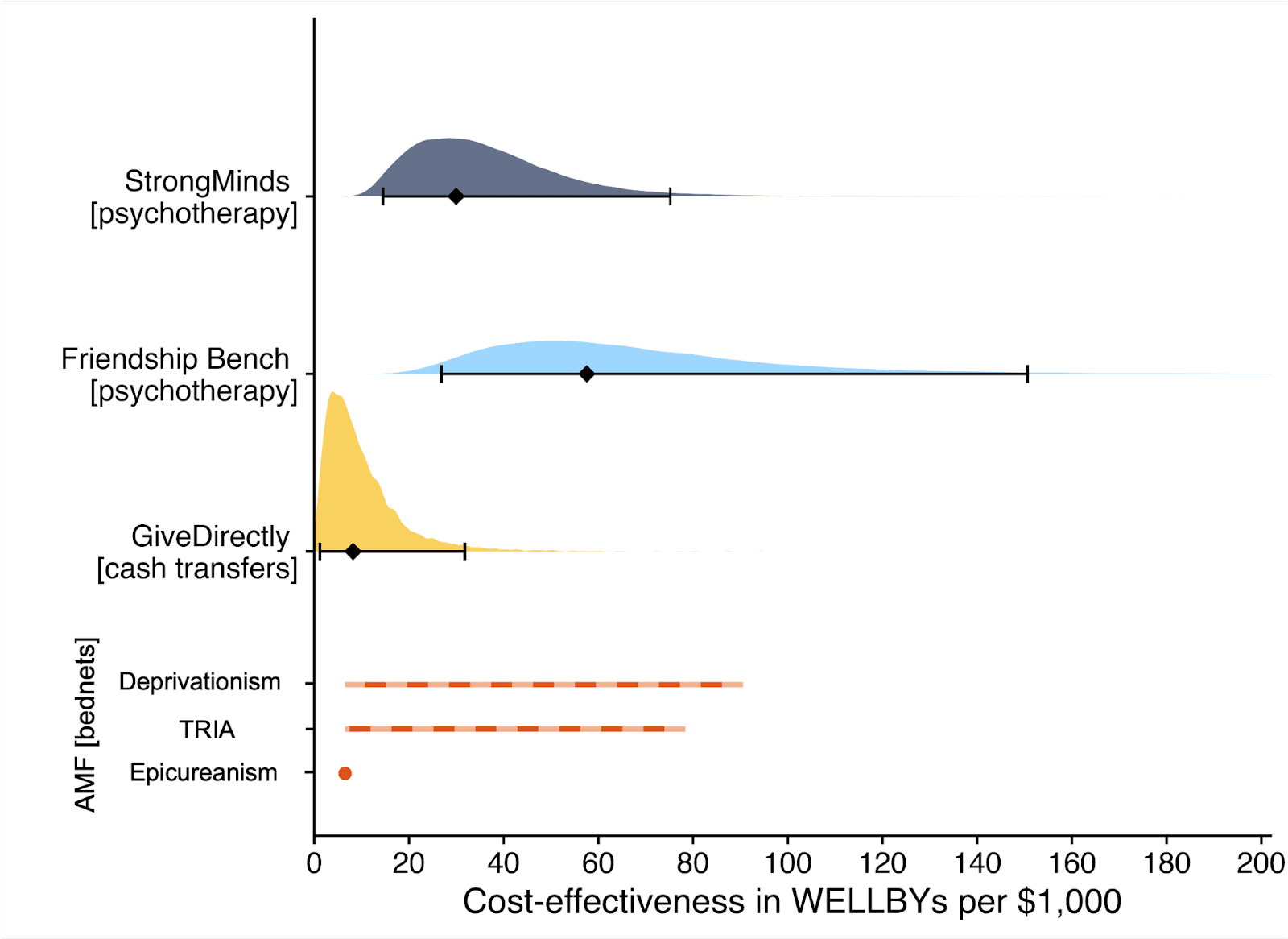

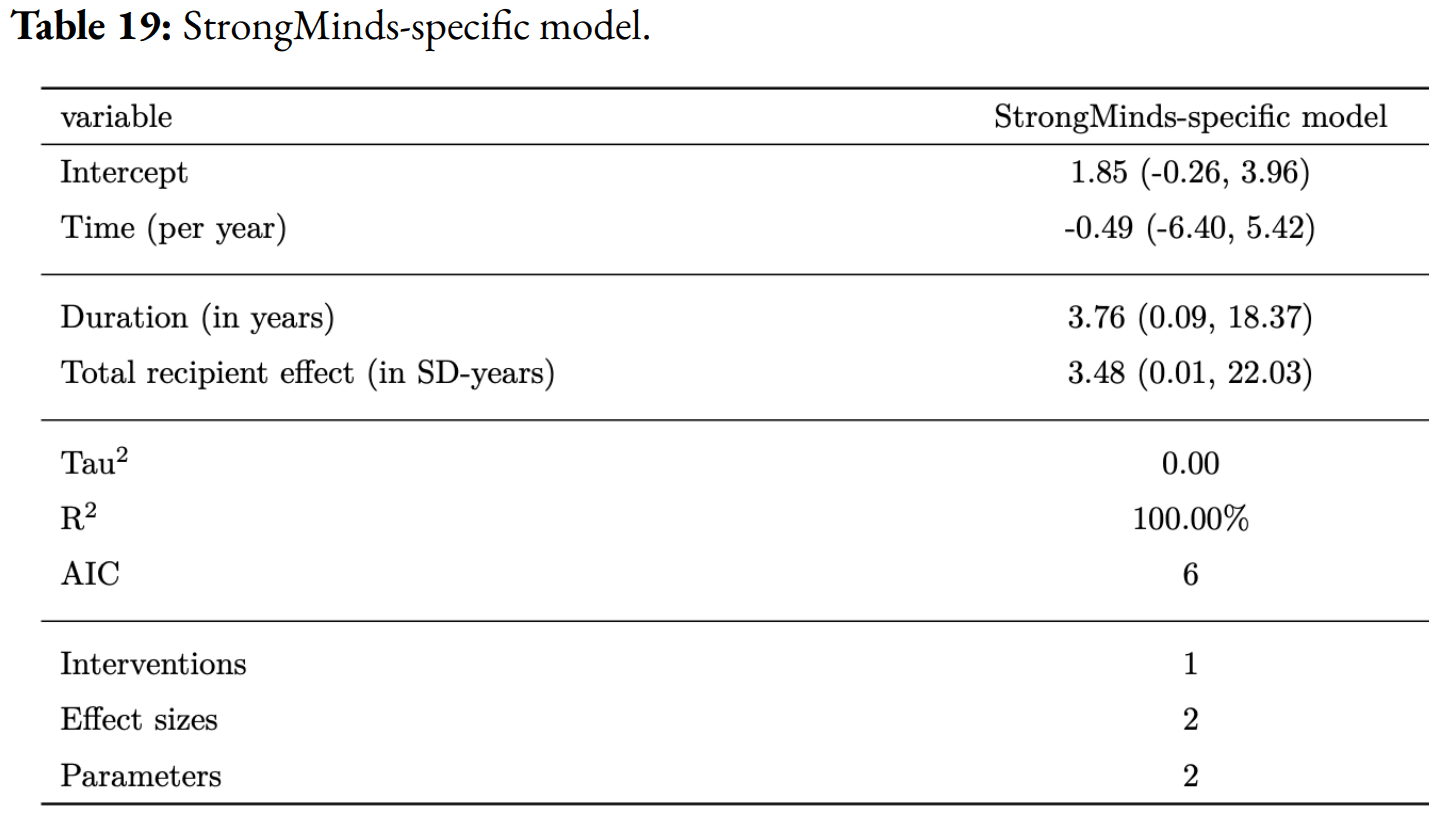

We estimate that a $1,000 donation results in 30 (95% CI: 15, 75) WELLBYs, a 52% reduction from our previous estimate of 62 (see our changelog website page). The cost per person treated for StrongMinds has declined to $63 (previously $170). However, the estimated effect of StrongMinds has also decreased because of smaller household spillovers, StrongMinds-specific characteristics and evidence which suggest smaller-than-average effects, and our inclusion of a discount for publication bias.
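As a rough check on how these figures fit together, the sketch below recomputes the reduction from the previous estimate and backs out the implied WELLBYs per person treated. It uses only the rounded numbers quoted in this summary; the "implied per-treatment" figure is a derived quantity for illustration (it bundles the recipient and household effects after all adjustments), not a number stated in the report.

```python
# Rounded figures quoted in the summary above.
donation_usd = 1_000
cost_per_person_usd = 63        # current StrongMinds cost per person treated
wellbys_per_1000_now = 30       # current point estimate
wellbys_per_1000_before = 62    # previous point estimate

# How many people a $1,000 donation treats at the current cost.
people_treated = donation_usd / cost_per_person_usd

# Implied total WELLBYs per person treated (recipient plus household),
# derived here for illustration only.
implied_wellbys_per_treatment = wellbys_per_1000_now / people_treated

# Relative reduction from the previous cost-effectiveness estimate.
reduction = 1 - wellbys_per_1000_now / wellbys_per_1000_before

print(f"People treated per $1,000: {people_treated:.1f}")
print(f"Implied WELLBYs per person treated: {implied_wellbys_per_treatment:.2f}")
print(f"Reduction from previous estimate: {reduction:.0%}")
```

On these rounded inputs, the lower cost per person pushes cost-effectiveness up, so the net 52% fall reflects the per-person effect shrinking by more than the cost did.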

The only completed RCT of StrongMinds is the long-anticipated study by Baird and co-authors, which has been reported to have found a “small” effect (another RCT is underway). However, this study is not published, so we are unable to include its results and are unsure of its exact details and findings. Instead, we use a placeholder value to account for this anticipated small effect as our StrongMinds-specific evidence.[1]

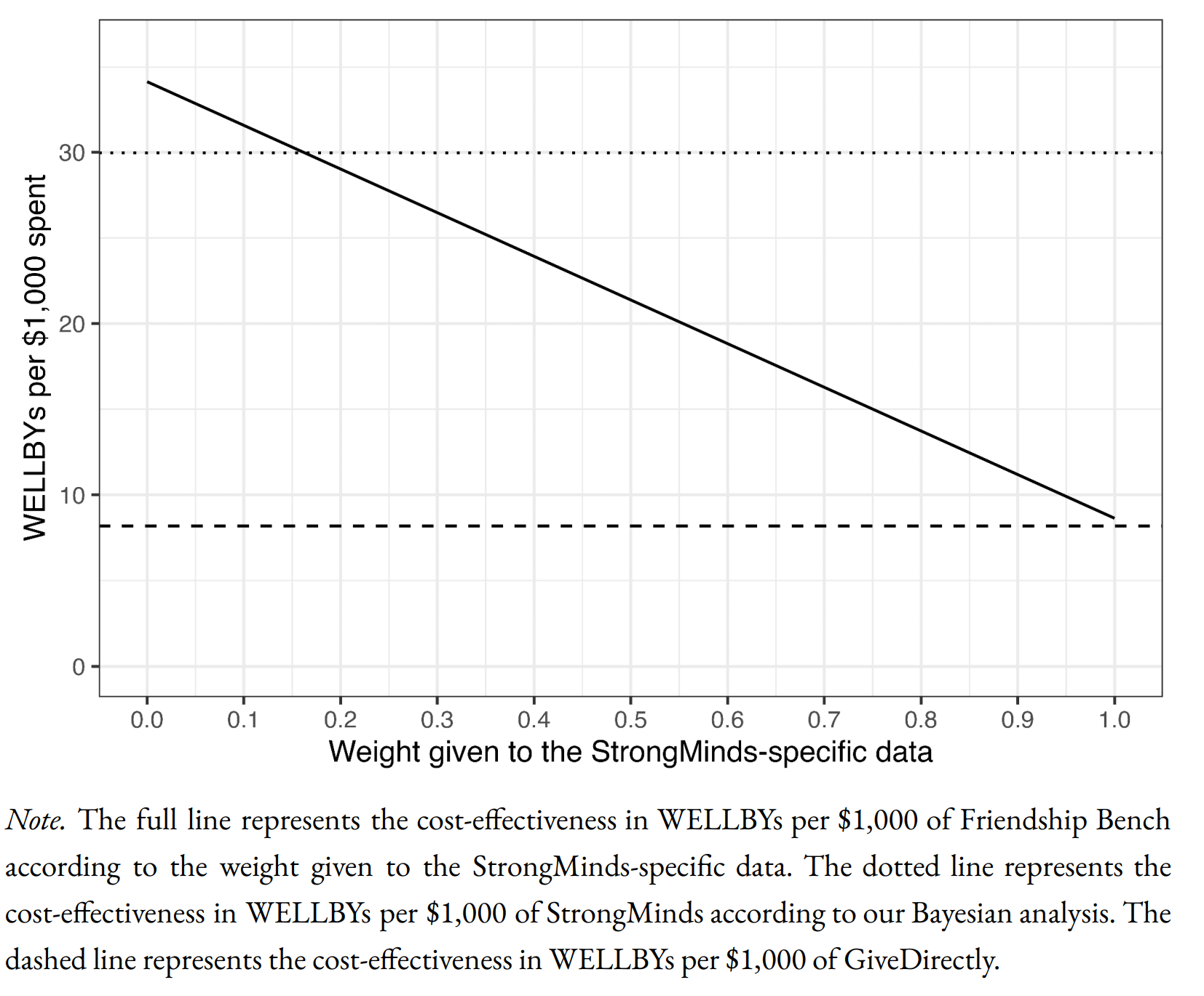

4. Evaluate the cost-effectiveness of Friendship Bench, an NGO that provides individual problem solving therapy in Zimbabwe.

We find a promising but more tentative initial cost-effectiveness estimate for Friendship Bench of 58 (95% CI: 27, 151) WELLBYs per $1,000. Our analysis of Friendship Bench is more tentative because our evaluation of their programme and implementation has been shallower. Friendship Bench has 3 published RCTs, which we use to inform our estimate of its effects. We plan to evaluate Friendship Bench in more depth in 2024.

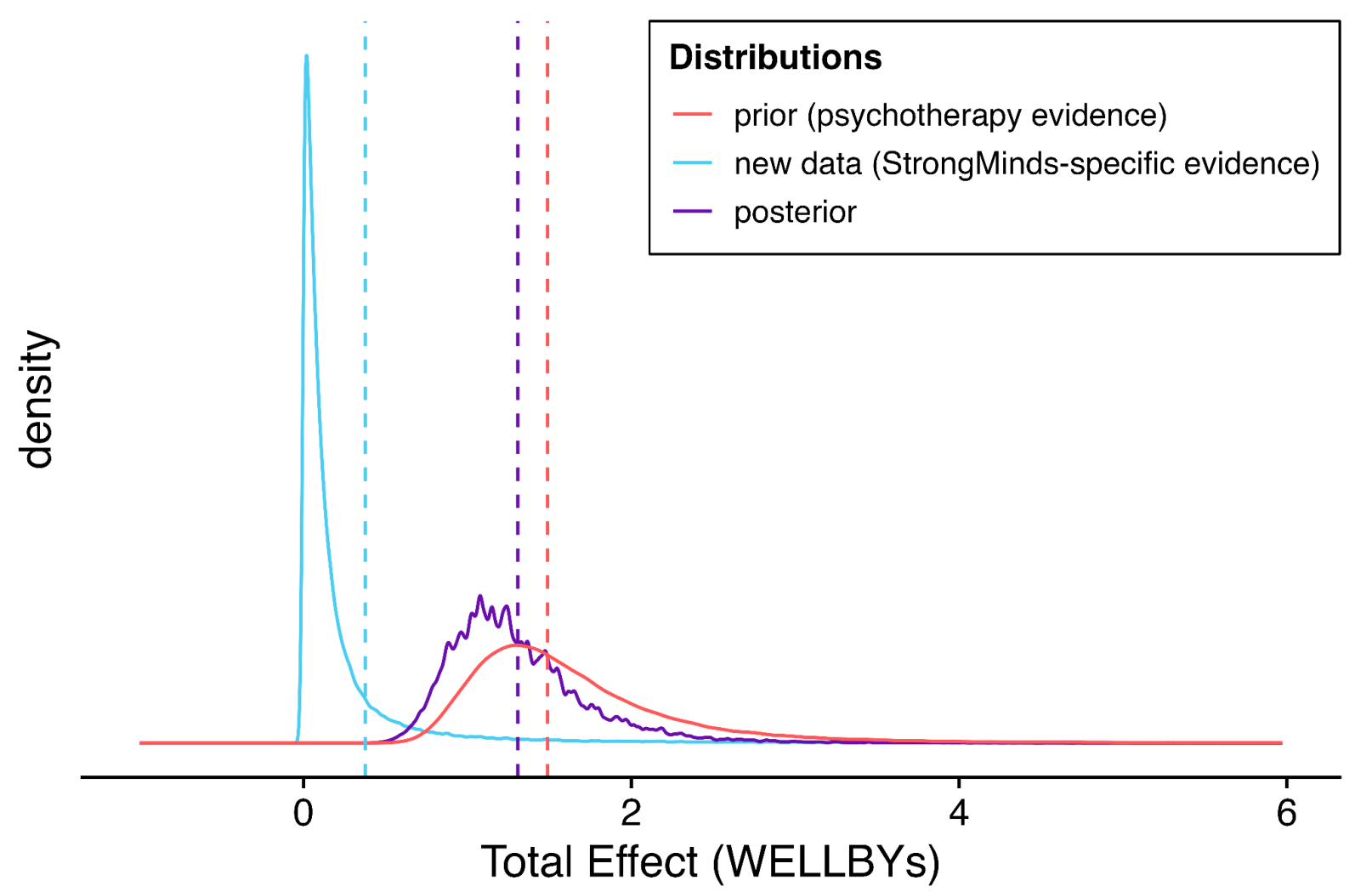

5. Update our charity evaluation methodology.

We improved our methodology for combining our meta-analysis of psychotherapy with charity-specific evidence. Our new method uses Bayesian updating, which provides a formal, statistical basis for combining evidence (previously we used subjective weights). Our rich meta-analytic dataset of psychotherapy trials in LMICs allowed us to predict the effect of charities based on characteristics of their programme such as expertise of the deliverer, whether the therapy was individual or group-based, and the number of sessions attended (previously we used a more rudimentary version of this). We also applied a downwards adjustment for a phenomenon where sample restrictions common to psychotherapy trials inflate effect sizes. We think the overall quality of evidence for psychotherapy is ‘moderate’.
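To give a feel for what Bayesian updating does here, the following is a minimal sketch of the textbook normal-normal case, where a prior (standing in for the meta-analytic prediction) is combined with a charity-specific estimate by precision weighting. This summary does not specify the distributions or implementation used in the report, so the function `normal_update` and all numbers below are hypothetical and chosen purely for illustration.

```python
def normal_update(prior_mean, prior_sd, data_mean, data_se):
    """Conjugate update of a normal prior with a normal likelihood.

    Each source of evidence is weighted by its precision (1 / variance),
    so the more precise source pulls the posterior harder.
    """
    prior_precision = 1 / prior_sd**2
    data_precision = 1 / data_se**2
    post_precision = prior_precision + data_precision
    post_mean = (
        prior_precision * prior_mean + data_precision * data_mean
    ) / post_precision
    post_sd = post_precision**-0.5
    return post_mean, post_sd


# Hypothetical inputs (NOT taken from the report), in SD units:
# a prior predicted from general evidence, and a more precise
# charity-specific estimate that is much smaller.
post_mean, post_sd = normal_update(
    prior_mean=0.5, prior_sd=0.2, data_mean=0.1, data_se=0.1
)
print(f"Posterior mean: {post_mean:.2f} SDs, posterior SD: {post_sd:.3f}")
```

Unlike subjective weights, the weight each source receives follows directly from its stated uncertainty, so the posterior is always at least as precise as either input.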

6. Update our comparison to other charities.

Finally, we compare StrongMinds and Friendship Bench to GiveDirectly cash transfers, which we estimated as 8 (95% CI: 1, 32) WELLBYs per $1,000 (McGuire et al., 2022b). We find here that StrongMinds is 30 (95% CI: 15, 75) WELLBYs per $1,000. Hence, comparing the point estimates, we now estimate that, in WELLBYs, StrongMinds is 3.7x (previously 8x) as cost-effective as GiveDirectly and Friendship Bench is 7.0x as cost-effective as GiveDirectly.
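The multiples are simple ratios of point estimates, as the short sketch below shows using the rounded figures quoted in this summary. Because the inputs are rounded, the ratios it produces differ slightly from the quoted 3.7x and 7.0x; our assumption is that the quoted multiples were computed from unrounded estimates, which this summary does not give.

```python
# Rounded point estimates quoted above, in WELLBYs per $1,000.
wellbys_per_1000 = {
    "StrongMinds": 30,
    "Friendship Bench": 58,
    "GiveDirectly": 8,
}

baseline = wellbys_per_1000["GiveDirectly"]

# Cost-effectiveness of each psychotherapy charity relative to GiveDirectly.
ratios = {
    name: value / baseline
    for name, value in wellbys_per_1000.items()
    if name != "GiveDirectly"
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x GiveDirectly")
```

Note that this compares point estimates only; the wide and overlapping confidence intervals are not reflected in a single ratio.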

These estimates are largely determined by our estimates of household spillover effects, but the evidence on these effects is much weaker for psychotherapy than for cash transfers. It is worth noting that if we only consider the effects on the direct recipient, this increases psychotherapy’s WELLBY effects relative to cash transfers: StrongMinds and Friendship Bench move to 10x and 21x as cost-effective as GiveDirectly, respectively. However, it also reduces their cost-effectiveness relative to antimalarial bednets. We also present and discuss (Section 12 in the report) how sensitive these results are to the different analytical choices we could have made in our analysis.

Figure 2: Comparison of charity cost-effectiveness. The diamonds represent the central estimate of cost-effectiveness (i.e., the point estimates). The shaded areas are probability density distributions and the solid whiskers represent the 95% confidence intervals for StrongMinds, Friendship Bench, and GiveDirectly. The lines for AMF (the Against Malaria Foundation) are different from the others.[2] Deworming charities are not shown, because we are very uncertain of their cost-effectiveness.

We think this is a moderate-to-in-depth analysis, where we have reviewed most of the available evidence and made many improvements to our methodology. We view the quality of evidence as ‘moderate to high’ for understanding the effect of psychotherapy on its direct recipients in general, ‘low’ for household spillovers, and ‘low to moderate’ for the charity-specific evidence for psychotherapy (StrongMinds and Friendship Bench). Therefore, we see the overall quality of evidence as ‘moderate’.

This is a working report, and results may change over time. We welcome feedback to improve future versions.

Notes

Author note: Joel McGuire, Samuel Dupret, and Ryan Dwyer contributed to the conceptualization, investigation, analysis, data curation, and writing of the project. Michael Plant contributed to the conceptualization, supervision, and writing of the project. Maxwell Klapow contributed to the systematic search and writing.

Reviewer note: We thank, in chronological order, the following reviewers: David Rhys Bernard (for trajectory over time), Ismail Guennouni (for multilevel methodology), Katy Moore (general), Barry Grimes (general), Lily Yu (charity costs), Peter Brietbart (general), Gregory Lewis (general), Ishaan Guptasarma (general), Lingyao Tong (meta-analysis methods and results), Lara Watson (communications).

Charity evaluation note: We thank Jess Brown, Andrew Fraker, and Elly Atuhumuza for providing information about StrongMinds and for their feedback about StrongMinds specific details. We also thank Lena Zamchiya and Ephraim Chiriseri for providing information about Friendship Bench.

Appendix note: This report will be accompanied by an online appendix that we reference for more detail about our methodology and results. The appendix is a working document and will, like this report, be updated over time.

Updates note: This is the first draft of a working paper. New versions will be uploaded over time.

[1] We use a study that has similar features to the StrongMinds intervention and then discount its results by 95% in the expectation of the Baird et al. study finding a small effect. Note that we do not only rely on StrongMinds-specific evidence in our analysis but combine charity-specific evidence with the results from our general meta-analysis of psychotherapy in a Bayesian manner.

[2] They represent the upper and lower bound of cost-effectiveness for different philosophical views (not 95% confidence intervals as we haven’t represented any statistical uncertainty for AMF). Think of them as representing moral uncertainty, rather than empirical uncertainty. The upper bound represents the assumptions most generous to extending lives (a low neutral point and age of connectedness) and the lower bound represents those most generous to improving lives (a high neutral point and age of connectedness). The assumptions depend on the neutral point and one’s philosophical view of the badness of death (see Plant et al., 2022, for more detail). These views are summarised as: Deprivationism (the badness of death consists of the wellbeing you would have had if you’d lived longer); Time-relative interest account (TRIA; the badness of death for the individual depends on how ‘connected’ they are to their possible future self. Under this view, lives saved at different ages are assigned different weights); Epicureanism (death is not bad for those who die – this has one value because the neutral point doesn’t affect it).

Would it have been better to start with a stipulated prior based on evidence of short-course general-purpose[1] psychotherapy's effect size generally, update that prior based on the LMIC data, and then update that on charity-specific data?

One of the objections to HLI's earlier analysis was that it was just implausible in light of what we know of psychotherapy's effectiveness more generally. I don't know that literature well at all, so I don't know how well the effect size in the new stipulated prior compares to the effect size for short-course general-purpose psychotherapy generally. However, given the methodological challenges with measuring effect size in LMICs on available data, it seems like a more general understanding of the effect size should factor into the informed prior somehow. Of course, the LMIC context is considerably different than the context in which most psychotherapy studies have been done, but I am guessing it would be easier to manage quality-control issues with the much broader research base available. So both knowledge bases would likely inform my prior before turning to charity-specific evidence.

[Edit 6-Dec-23: Greg's response to the remainder of this comment is much better than my musings below. I'd suggest reading that instead!]

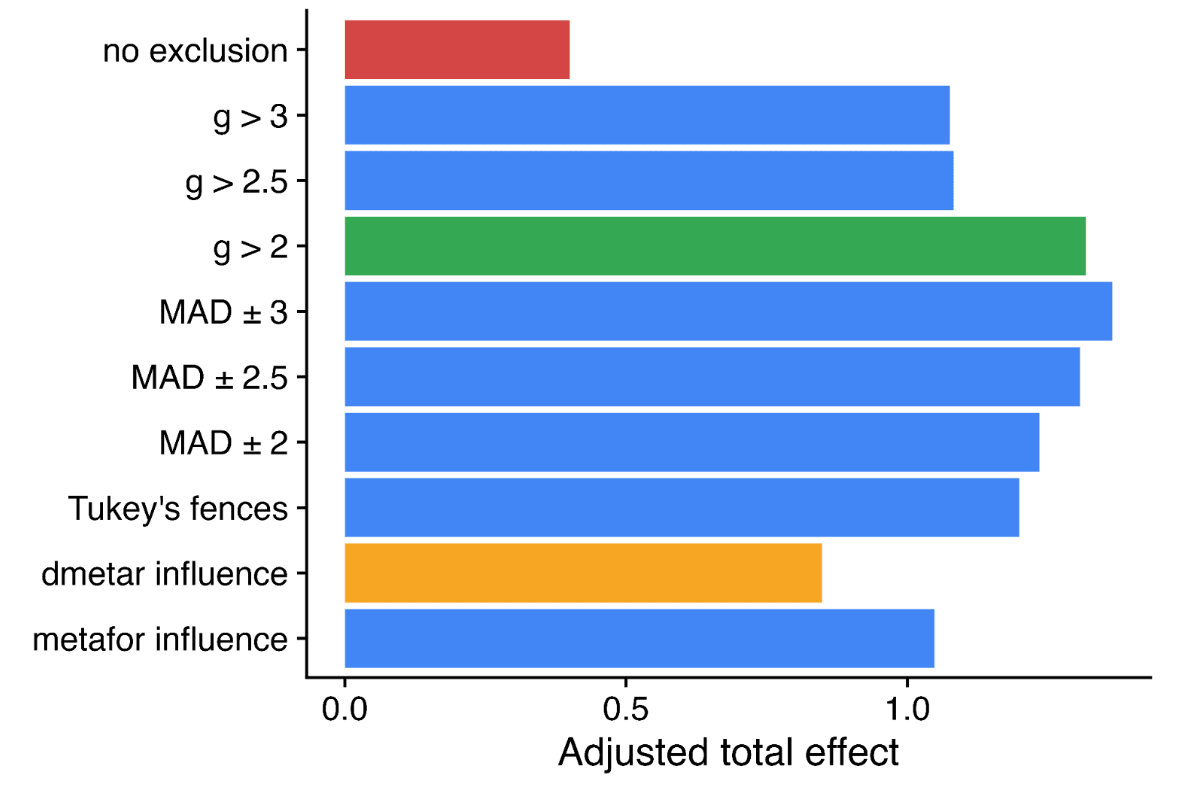

To my not-very-well-trained eyes, one hint that there's an issue with the application of Bayesian analysis here is the failure of the LMIC effect-size model to come anywhere close to predicting the effect size suggested by the SM-specific evidence. If the model were sound, it would seem very unlikely that the first organization evaluated to the medium-to-in-depth level would happen to have charity-specific evidence suggesting an effect size that diverged so strongly from what the model predicted. I think most of us, when faced with such a circumstance, would question whether the model was sound and would put it on the shelf until performing other charity-specific evaluations at the medium-to-in-depth level. That would be particularly true to the extent the model's output depended significantly on the methodology used to clean up some problems with the data.[2]

[1] By which I mean not psychotherapy for certain narrow problems (e.g., CBT-I for insomnia, exposure therapy for phobias).

[2] If Greg's analysis is correct, it seems I shouldn't assign the informed prior much more credence than I have credence in HLI's decision to remove outliers (and to a lesser extent, its choice of a method). So, again to my layperson way of thinking, one partial way of thinking about the crux could be that the reader must assess their confidence in HLI's outlier-treatment decision vs. their confidence in the Baird/Ozler RCT on SM.