TL;DR:

You might save up to two lives per year using Cognitive Behavioral Treatment methods on one EA. And you might make a meaningful difference by helping us to do just that! In 2023, we developed an 8-week program and saw an increase of 5-9 productive hours per week for the 42 attending EAs, on top of improved mental health. In 2024, we aim to scale this program within the EA community with a randomized controlled trial (RCT). Is this cost-effective? Probably. Potentially, the program might outperform GiveWell’s recommended top charities. Below you can find out about who is behind this initiative, what our program looks like, what our outcomes have been so far, and how we aim to have an impact in 2024. We are thirsty for your ideas and thoughts on this!

You want to support us or get involved? You can do so by:

- Telling others about us and our program, e.g., talking about us on social media, sharing, commenting, or upvoting this post

- Considering donating to Rethink Wellbeing via our GWWC sub-website

- Your donation will move the needle significantly for us as a small org to help us survive past Jan 2024 and to offer our program again

- Staying in touch and up-to-date with Rethink Wellbeing: leave your email address here

You can alternatively consider donating a few hours per week to become a group facilitator in 2024 (apply in ~20 min via this form).

Watch an 8-minute demo overview of our CBT Lab program.

Who We Are

Rethink Wellbeing is a Dutch Stichting (Dutch for “foundation”) founded in February 2023 based on the belief that the global mental health crisis is in need of cost-effective interventions. We are a young nonprofit organization in the Effective Altruism space (meta), along with organizations like CEA, 80k Hours, and Rethink Priorities. Our mission is to spread mental resilience and wellbeing at scale. To do so, we use engaging, proven, and low-cost programs tailored to the needs of communities aimed at having a high impact. We believe the largest initial impact we can have is supporting changemakers such as people in the Effective Altruism community.

- Core Team

- Dr. Sam Bernecker:

- Program & Training Manager

- Ex-Harvard University postdoc and ex-BCG, in HLI board

- Psychologist with 7+ years experience in online mental health programs and research, e.g., at BetterUp

- Dr. Inga Grossmann:

- Org & Research Manager

- Former psychology professor, and ex-BCG DV

- 10+ years experience building online health businesses and in clinical & market research

- John Drummond:

- Ops & Comms Manager

- Former TEDx coordinator

- 10+ years international experience in content, project and operations management

- Volunteers

- Fundraising consultant: Jennifer Schmitt

- Business consultant: Edmo Gamelin

- Research support: Robert Reason

- Operations support: Amine Challouf

- 6 program facilitators (more to be trained)

- 3 programmers to automate processes (>50 applied)

- Advisors:

- 5 EA-informed practitioners:

- Ewelina Tur, Damon Pourtahmaseb-Sasi, Dr. Hannah Boettcher, Dave Cortright, Samantha Robins

- People from other initiatives in the field (e.g., Vida Plena, Action for Happiness, Happier Lives Institute, CEA Mental Health Team, MHN)

- Some of our direct references:

- Peter Brietbart, Dr. Kate Niederhoffer, Dr. Laurin Rötzer, Kristina Wilms, Sara Ness, Spencer Greenberg

What We Do

Problem

An estimated 10-30% of people in changemaker and Effective Altruism (EA) communities experience poor mental health (Our World in Data, 2021; Slate Star Codex, 2020; EA MHN & Rethink Wellbeing)², which is related to a 35% loss in productivity for affected individuals (e.g., for depression; see Beck et al., 2011). Therefore, 5-10% of the overall work and resources devoted to EA causes may be lost due to this problem.
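The arithmetic behind this estimate can be sketched roughly as follows. This is a minimal illustration that assumes the share of work lost is simply prevalence multiplied by the productivity loss per affected person; the function and variable names are hypothetical, and the original estimate may rest on additional considerations.

```python
# Back-of-the-envelope check: share of total work lost
# = prevalence of poor mental health x productivity loss per affected person.
# Assumption (ours): the two factors simply multiply.
PRODUCTIVITY_LOSS = 0.35  # loss for affected individuals, as quoted in the text


def share_of_work_lost(prevalence: float, loss: float = PRODUCTIVITY_LOSS) -> float:
    """Return the fraction of overall work lost across the whole community."""
    return prevalence * loss


low = share_of_work_lost(0.10)   # lower prevalence bound from the text
high = share_of_work_lost(0.30)  # upper prevalence bound from the text
print(f"Estimated share of work lost: {low:.1%} to {high:.1%}")
```

Under this simplification the range comes out at roughly 3.5% to 10.5%, which is in the same ballpark as the 5-10% quoted above.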

At the same time, the resulting demand doesn’t seem to be met. In 2023, 74% of EAs stated that their mental health support needs are not met or only partly met, and >60% prefer peer group support. In particular, support tailored to changemakers, such as EAs, is sparse (e.g., there are only a few therapists who are EA-informed at all). Tailoring, though, has been shown to be essential to the success of interventions and can multiply engagement and effects by up to 4x (e.g., Griner & Smith, 2006; Wildeboer et al., 2016).

Solution

What our program entails

In our 8-week online program, participants learn cutting-edge psychotherapeutic and behavior change techniques.

- Weekly group sessions: with 5-7 well-matched peers and a trained facilitator, for co-learning, accountability, and bonding

- In between sessions: read one chapter of a CBT workbook, practice tools learned, utilize Discord to share and troubleshoot

- Weekly evaluations: of wellbeing and the program to foster reflection, engagement, and deliberate practice

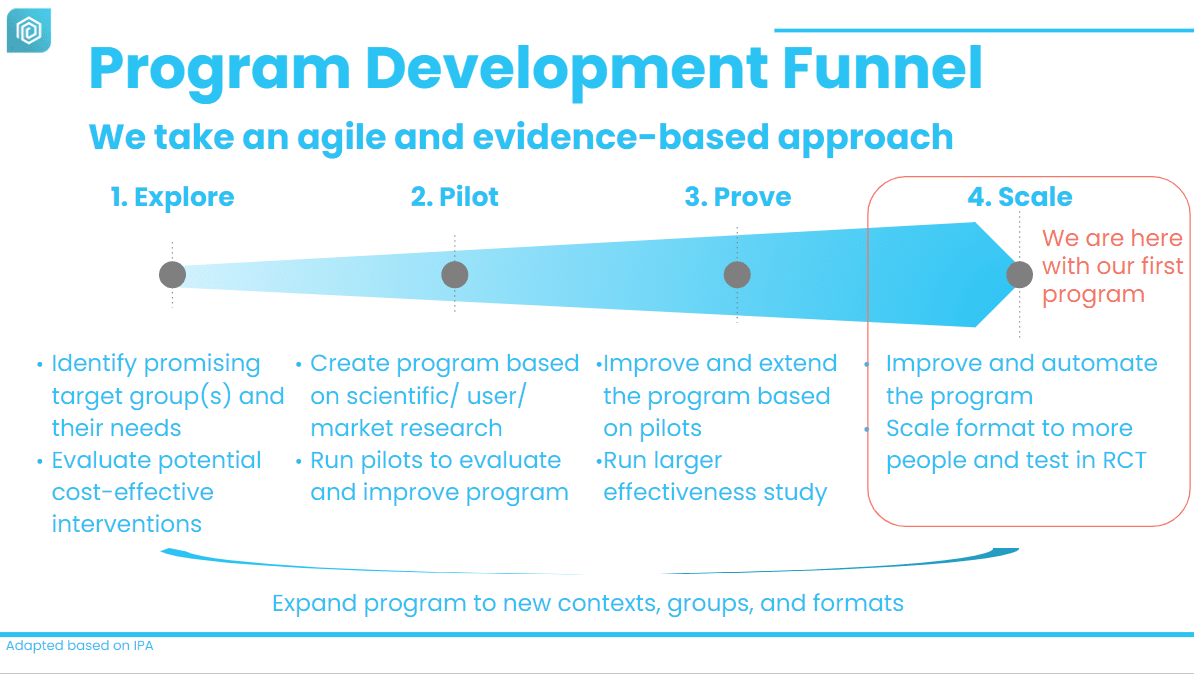

All in all, our program is ready to scale (see Program Development Funnel image below). Though tailored to the needs of EAs, it will be easily adaptable to other communities.

Want to see more of it? You can watch an 8-minute high-level overview of our CBT Lab program here.

Our program is likely effective and engaging

Our program is wanted and needed

- >350 EAs applied to participate in our program in 2023 despite relatively little advertising (see EA forum post)

- >120 EAs applied to become facilitators (see EA forum post). We have trained 6 already and plan to train 15 more

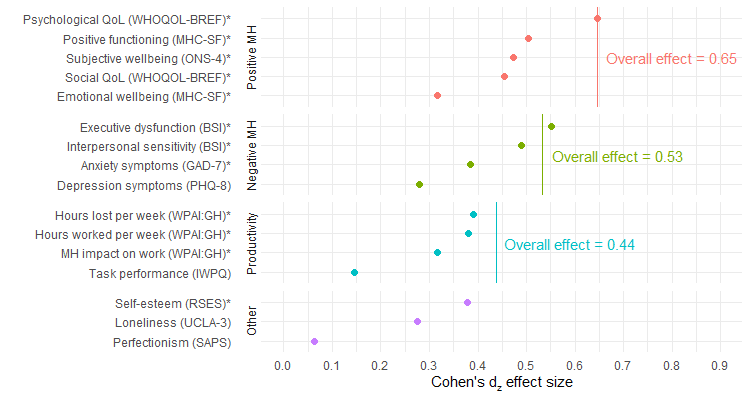

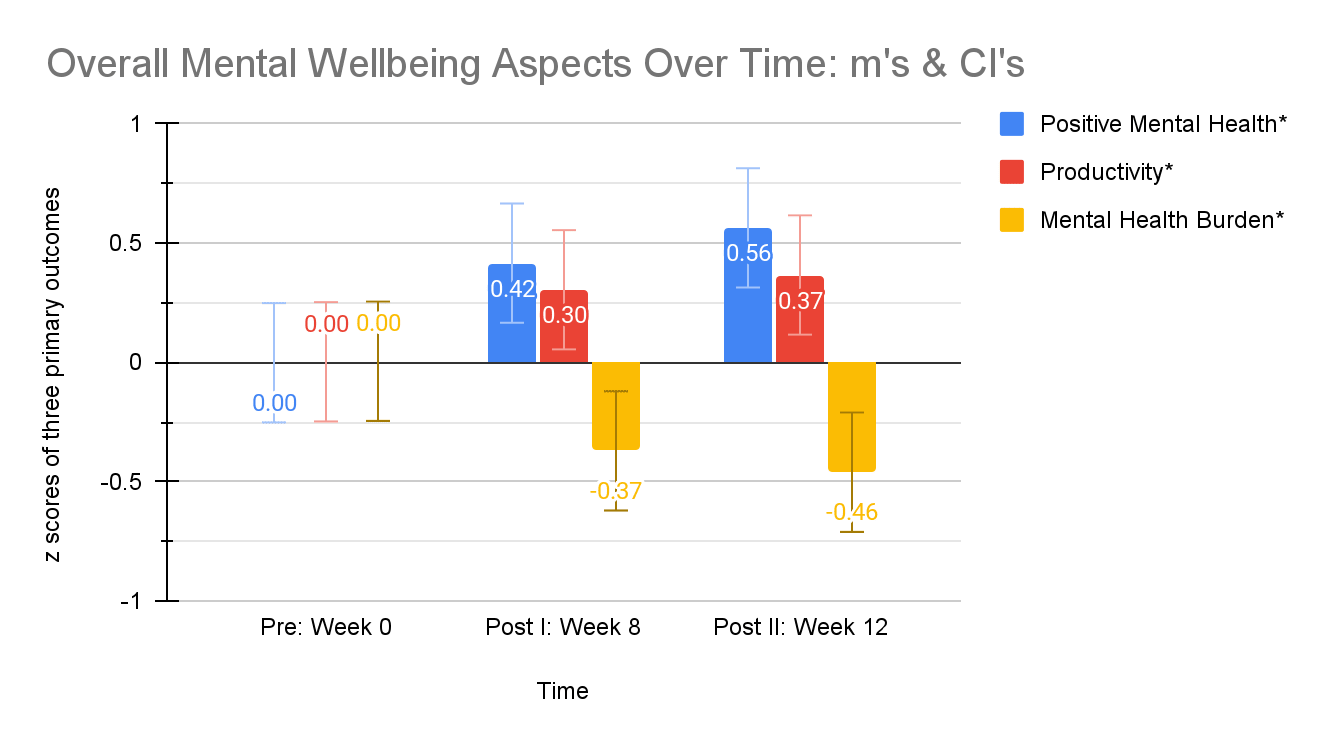

Results from our pre-post effectiveness study (7 groups, N=42):

- Participants significantly improved after 12 weeks (all p<.05)

- Increase in productivity ~9 hours per week

- Roughly half of it refers to an increase in working hours, and the other half to an increase in the subjective productivity experienced during those worked hours (self-assessment)

- Plus ~1 point on 0-10 point life satisfaction scales

- This is greater than the increases seen from becoming partnered (+0.59) or finding employment (+0.70) in other cross-sectional studies. If sustained for 1 year, this would equate to one WELLBY per participant

- Decrease in mental health burden by 16-28%

- Including symptoms of depression, anxiety, executive dysfunction, and interpersonal sensitivity

- Only 7% (3 of 42) dropped out, which is lower than in 1:1 therapy

- Very high user satisfaction for both participants and facilitators, >90% would recommend the program, 30% of participants willing and able to pay at least partly

- More than 50% of participants feel significantly more positive about or committed to the EA community after the program

Please be aware that while this evidence might not be causal, the results are suggestive! We need to run a randomized controlled trial (RCT) as a next step to make sure the improvements are caused by the program, and not by other factors such as time.

This diagram shows how much participants got better between the start and the end of the program after 8 weeks - in measures of positive mental health, negative mental health (symptom burden), productivity, and other variables.

Notes: What does this figure mean? It shows the average change the 42 participants reported in each of the variables we measured, expressed as a standardized effect size. Higher values represent greater improvements in the pre-post comparison (week zero versus week eight). MH: Mental Health.

Interpretation of Cohen’s d: small (0.2), medium (0.5), and large (0.8). Effect sizes of 0.5-0.8 are thus considered "medium," while a Cohen’s d of 1 reflects a difference of 1 standard deviation. Why are the overall effects larger than the component-based effects? We calculated a standardized composite score, which leads to less noise, smaller SDs, and, thereby, larger effect sizes.
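To make the effect-size scale concrete, here is a minimal sketch of one common way to compute Cohen's d for a pre-post comparison: the mean change divided by the pooled standard deviation of the pre and post scores. This convention, the function names, and the scores are all assumptions for illustration; the data are made up and this is not necessarily the exact formula used in the analysis above.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d_pre_post(pre, post):
    """Mean change divided by the pooled SD of pre and post scores.

    Assumption: one common convention among several for pre-post designs.
    """
    pooled_sd = sqrt((stdev(pre) ** 2 + stdev(post) ** 2) / 2)
    return (mean(post) - mean(pre)) / pooled_sd


def interpret(d):
    """Map |d| to the conventional labels quoted in the text."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Hypothetical scores for six participants (not real study data).
pre = [4, 5, 6, 5, 4, 6]
post = [5, 6, 7, 6, 5, 7]

d = cohens_d_pre_post(pre, post)
print(f"d = {d:.2f} ({interpret(d)})")
```

In this toy example every participant improves by one point while the spread stays the same, so d is a little above 1, i.e., a shift of just over one standard deviation.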

Changes that were statistically significant—in other words, ones that we are reasonably confident are not due to random chance—are marked with an asterisk (*). We used mixed-effects models to test for significance because these models most rigorously account for the way participants were placed in groups.

Four weeks after the program, the effects had increased even further. The effect sizes align with those commonly reported for evidence-based mental health interventions such as cognitive behavioral therapy (e.g., Cuijpers et al., 2016).

Notes: What does this figure mean? It shows the average change the 42 participants reported in each of the variables we measured, expressed as standardized scores. Higher values represent greater improvements across the comparison (week zero versus week eight versus week twelve). A Z-score is measured in standard deviations from the mean. A Z-score of 0 indicates that the data point's score is identical to the mean score. A Z-score of 1.0 indicates a value that is one standard deviation from the mean. Z-scores may be positive or negative, with a positive value indicating a score above the mean and a negative value a score below the mean. *p<.05, significant changes.
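As a small illustration of the Z-score definition above, the sketch below standardizes a list of made-up scores. It assumes the population standard deviation is used; the figure's underlying analysis may have standardized differently, and the function name and data are hypothetical.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Express each value as its distance from the mean in standard deviations.

    Assumption: population SD (pstdev); a sample SD would give slightly
    smaller absolute Z-scores.
    """
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


# Hypothetical raw scores with mean 5 and population SD 2.
scores = [2, 4, 4, 4, 5, 5, 7, 9]
z = z_scores(scores)
print(z)
```

Here a raw score of 5 maps to a Z-score of 0 (identical to the mean), 7 maps to 1.0 (one standard deviation above), and 2 maps to -1.5 (one and a half standard deviations below).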

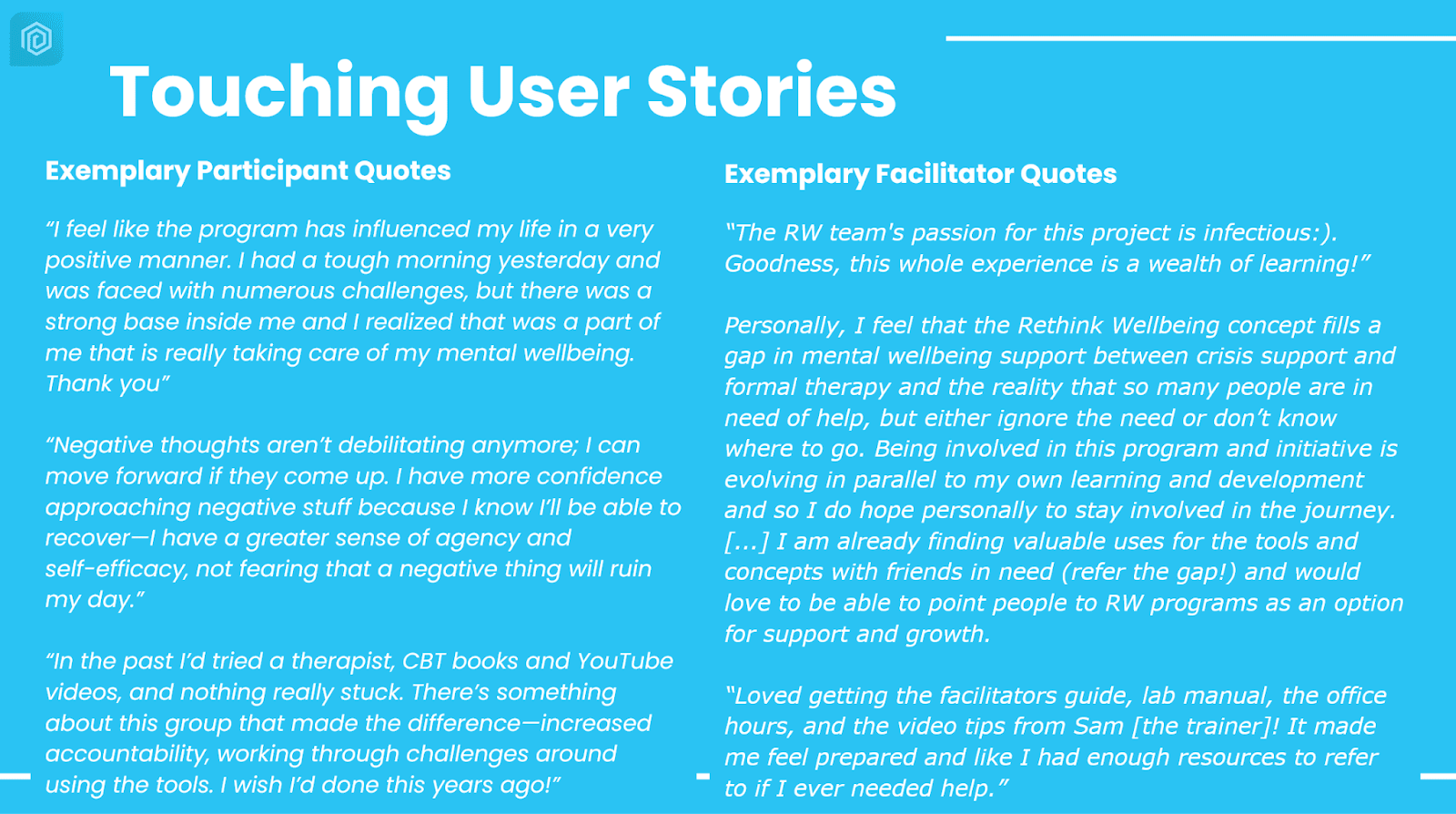

We also collected some heartwarming user stories for you so that you can get an impression of what the whole experience is like:

Cost-Effectiveness

The program is designed to maximize effects while minimizing the resources needed. We use cutting-edge psychotherapeutic and behavior change methods and deliver them via a layperson-guided self-help program. This format has been shown to be as effective and engaging as professional 1:1 therapy in multiple meta-analyses while costing 3 times less in our case (e.g., Baumeister et al., 2014). We are not yet operating at full scale and are using only a minimum viable product of our CBT program, but we offer a “back of the envelope” cost-effectiveness estimate for the full-scale scenario below and, in more detail and with reasoning transparency, in this sheet.

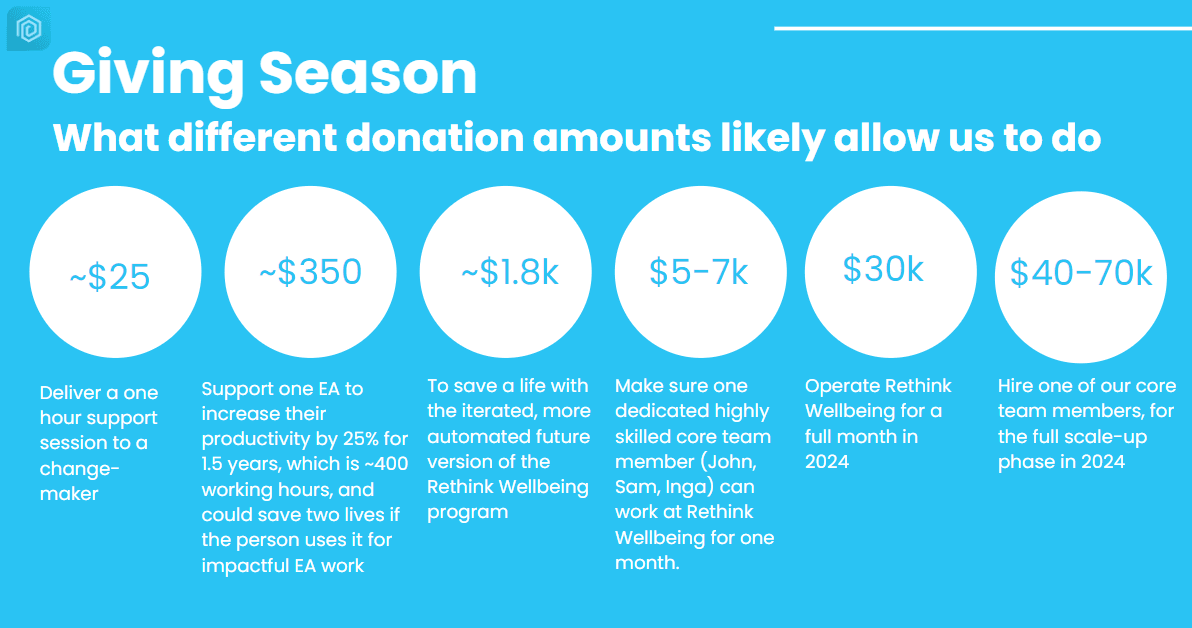

By our estimates, after further iterations and scaling efforts as intended for 2024, we will likely be able to deliver our program to 500 participants yearly at a cost of ~$350 per participant (including overhead costs). This would generate a total of ~100 yearly full-time equivalent (FTE) EAs, or $5 million worth of work, per year that Rethink Wellbeing is in operation. If just 10% of the value of this additional productivity is donated to top charities or to equally cost-effective activities, the program’s overall cost-effectiveness, at a cost of <~$2,000 per life saved, will outperform GiveWell’s top charities. Put another way, if only one in ten participants donated their additional income of ~$10,000, two additional lives could be saved for a total of ~$3,500 (the cost of the program for 10 participants)³. We are aware that this is more of a promising thought experiment based on the little data we have than a prediction with high certainty. We plan to increase confidence in this estimate through data gathered in 2024.
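The thought experiment above can be traced step by step. The sketch below only recombines figures quoted in the paragraph; the variable names are hypothetical, and the "implied" cost per life is derived here from the stated donation and lives saved rather than taken from GiveWell.

```python
# Figures quoted in the text.
COST_PER_PARTICIPANT = 350     # USD, including overhead
PARTICIPANTS_PER_YEAR = 500
DONATION_PER_DONOR = 10_000    # USD of additional income donated
DONOR_SHARE = 0.10             # one in ten participants donates
LIVES_SAVED_PER_COHORT = 2     # lives saved per 10 participants, as stated

COHORT_SIZE = 10

# Yearly program cost at full scale.
yearly_cost = COST_PER_PARTICIPANT * PARTICIPANTS_PER_YEAR

# Cost and donations for a cohort of ten participants.
cohort_cost = COHORT_SIZE * COST_PER_PARTICIPANT
cohort_donations = COHORT_SIZE * DONOR_SHARE * DONATION_PER_DONOR

# Cost per life implied for the receiving charity (derived, an assumption).
implied_charity_cost_per_life = cohort_donations / LIVES_SAVED_PER_COHORT

# Program cost per life saved under these assumptions.
program_cost_per_life = cohort_cost / LIVES_SAVED_PER_COHORT

print(f"Yearly program cost:          ${yearly_cost:,.0f}")
print(f"Cost for 10 participants:     ${cohort_cost:,.0f}")
print(f"Donations from that cohort:   ${cohort_donations:,.0f}")
print(f"Implied charity cost / life:  ${implied_charity_cost_per_life:,.0f}")
print(f"Program cost / life saved:    ${program_cost_per_life:,.0f}")
```

Under these inputs the program cost per life saved comes out at $1,750, consistent with the "<~$2,000" figure, while the yearly program cost at full scale would be $175,000. The result is highly sensitive to the donor share and donation size, which are the least certain inputs.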

Plan for 2024

- Q1: Iterate and expand program and make it more scalable via automation and delegation

- Q2 & Q3: Run RCT to more accurately assess (cost)-effectiveness and be assessed by GiveWell (N~150)

- Q4: Deliver the program to more EAs (N~150), determine potential next high-impact audiences, grow the team

- Q3-Q4: Run pilot tests in new target groups of potential changemakers, selected from communities such as leaders, founders, and students

Our current runway will end at the end of January 2024. For 2023, we had raised ~$250k in 2022 from Open Philanthropy, the Clearer Thinking Regrant Program, the Future Forum, and individual donors to develop and validate our program.

To execute the plan above and to enable us to keep running our charity, we aim to collect at least $200k by the end of December. This is how we will distribute the funding:

- Hire 3-4 core team members full-time

- +20% outsourcing + overhead (e.g., HR, legal, advice)

- +15% buffer

Achievements in 2023

How to Get Involved

We are thankful for every small action you take!

Make a difference by donating

Help us continue to help others! Without your donation, Rethink Wellbeing won’t be able to offer this program again. But with your contribution, we will be able to:

- Continue serving the program to altruistically-minded people, creating a waterfall of goodness

- Improve the program to make it even more powerful and efficient

- Test the improved program in a rigorous, gold-standard randomized controlled trial

You can consider donating to Rethink Wellbeing via our GWWC sub-website, and share your Giving Story on social media if you like. Since we are still a small organization and the EA funding landscape is currently quite funding-constrained, our survival will depend on the donations we are able to raise by the end of December.

Get in touch & talk about us

Share our story. Tell your peers about us and our program. You can:

- Share, comment, or upvote this post to get more eyes on our work

- Recommend our program, as many participants do, to friends who might be struggling, and our facilitator roles to people who’d like to upskill in that area or donate their facilitation skills to doing good

- Simply talk about us on social media, starting off with one of our generated insights, such as “you might save a life by using CBT on a changemaker”

You can stay tuned by leaving your name and email address here. We are happy to send you updates! Feel free to follow our LinkedIn for job postings and other business-related info.

Get involved in our program

You can donate a few hours per week as a program facilitator in 2024 (apply in ~20 min via this form), or apply as a participant for a potential future round (apply in 15 min via this form). You can learn more about the facilitator roles in this forum post.

Is This Too Good to Be True?

Is it really the case that the full-scale program will likely outperform GiveWell's top charities? It's a surprising prospect, especially for a project based in the Western world. We shared this initial skepticism. To ensure reasonable accuracy, we meticulously analyzed our numbers, turning them upside down and inside out multiple times for a conservative and robust analysis. Additionally, three senior researchers independently reviewed our assumptions and reasoning. We're pleased to report that the cost-effectiveness analysis appears sound, and the results, thus far, are promising. Nonetheless, we remain committed to rigorously challenging our thinking and assumptions. If you have any questions or feedback, your input would be invaluable to us!

A Big Thank You

... goes to the reviewers of this post for all the helpful comments you made: Thijs Loggen, Stefan Müller, Justis Millis, Joel McGuire, Victor Wang, and Jennifer Schmitt!

... goes to all our funders, participants, facilitators, advisors, and other supporters for making this initiative happen.

Thank you for being a part of this journey to empower mental wellbeing. Let’s flourish!

Last but not least, take good care of yourself and the people around you!

² We are aware of a potential selection bias for the latter two surveys but expect the prevalence to be at least as high as in the general population.

³ We work with the underlying assumption that productivity is related to outcomes such as salary and quality of work. Based on a standard working year of 2,000 hours (assuming full-time employment), a 6-hour-per-week increase over 1.5 years would result in a 25% salary uplift for the additional hours worked. This means the equation is more applicable for freelancers, students, and employees in EA organizations than for employees in non-EA organizations.
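The arithmetic in footnote ³ can be sketched as follows. The footnote does not state how many working weeks per year it assumes, so the 52 weeks used here is an assumption, as are the variable names.

```python
# Figures from footnote 3.
HOURS_GAINED_PER_WEEK = 6
YEARS = 1.5
STANDARD_WORKING_YEAR_HOURS = 2000

# Assumption (not stated in the footnote): 52 working weeks per year.
WEEKS_PER_YEAR = 52

# Total additional hours worked over the period.
extra_hours = HOURS_GAINED_PER_WEEK * WEEKS_PER_YEAR * YEARS

# Additional hours expressed as a share of one standard working year.
uplift = extra_hours / STANDARD_WORKING_YEAR_HOURS

print(f"Additional hours over {YEARS} years: {extra_hours:.0f}")
print(f"Share of a standard working year:   {uplift:.1%}")
```

With these inputs the additional hours amount to about 23% of a standard working year, which is close to the ~25% uplift stated in the footnote; a slightly different number of working weeks would shift the result by a point or two.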

I am so excited to read this summary and these results! Thank you and everyone involved for bringing and sharing the gift of group therapy with EA.

I'm excited about this!

One question, I notice a bit of a tension with the EA justification of this project ("improving EA productivity") and the common EA mental health issues around feeling pressure to be productive. I know CBT is more about providing thinking tools rather than giving concrete advice on what to do/try, but might there be a risk that people who take part will feel like they are expected to show a productivity increase? Would you still recommend to EA clients to take time off generously if someone is having burnout symptoms? I'm curious to hear your thoughts on this.

Hi Lukas,

Thank you for this thoughtful comment. I hope you allow me to quote our program manager, Sam. She crafted a beautifully phrased answer to something similar in a former post:

"I’m the Mental Health Program Manager at Rethink Wellbeing, and I’d like to offer my perspective on framing the program as a way to increase productivity. My thoughts are my own, not an official RW statement, but I have given my colleagues a chance to review this message before sending it.

I agree that basing one’s self-worth on one’s productivity can be a recipe for poor mental health (and rarely is effective at increasing productivity!).[...]

Despite agreeing with you, there are several reasons why RW highlights productivity in some of our marketing materials.

With all that said, because we agree that obsessing about impact is often harmful, we aren’t planning to emphasize productivity as a goal throughout the program. We are measuring it as one outcome, since it is a meaningful part of flourishing, even if just one; we also may briefly invite people to reflect on the effects of mental health on productivity if that is an effective source of motivation for them. But during the core of the program, we want to give people a chance to work on exactly this: the dysfunctional relationship they may have with productivity and impact, such as believing one needs to be productive, or close to perfect to be worthy.[1]

Talking about productivity is a way of getting people in the door by addressing a common core concern for EA community members. However, our model is that helping people increase their well-being, including developing a well-rounded life and engaging in self-care, should have a side effect of increasing their capacity to do good for others … without needing to focus on it or put any pressure on people. Rather, we hope to alleviate pressure. And participation in the program does not “obligate” people to do any particular EA work or change the world.

I expect that the team will continue to reflect on how to walk this tightrope in our marketing. It’s tough to get it right, because the messaging lands different ways with different people, so feedback like yours is incredibly valuable. Thank you."

Is it also possible to read the materials for the sessions without participating? I guess this could also help many people - at least more than not having any access to that kind of information at all.

Thanks for the concise summary and for your work! It looks thoughtfully executed and promising.

Congratulations on running the pilot and getting these results!

They seem rather promising to me and above a threshold for testing more rigorously and scaling. However, a couple of questions:

Thanks, Sebastian.

1. We measured once right at the end of the program at week 8 and once 4 weeks after the program, i.e., 12 weeks after the start of it. A follow-up is planned, likely after 3 but, at the latest, after 6 months, so that we can better estimate the course of the effect decline. In the post above, we present the 8 and 12-week results compared to the pre-course measurements.

2. Hopefully, I remember the research correctly, so take my answer below with some caution. As far as I know:

- professional 1:1 CBT-Psychotherapy for depression and anxiety shows very similar effect sizes to guided self-help CBT.

- 1:1 CBT-based coaching can help just as much as professional 1:1 CBT-Psychotherapy

- our effects look very similar to the average of typical professional 1:1 CBT-Psychotherapy / guided self-help programs

I am uncertain if 1:1 psychotherapy reached the effects that quickly.

2.

- How do you define guided self-help? Do you mean facilitated group sessions?

- Do you have any specific papers/references that you've used for those estimates?

How do you manage participants who fear being judged and may be afraid to interact with the group?