I'm interested in figuring out what skill profiles will be most leveraged for altruistic work after we get the first competent AI agents.

I think this might be one of the most important questions for field-building orgs to work on (in any cause area but particularly AI safety). I think 80k and other AIS-motivated careers groups should try to think hard about this question and what it means for their strategy going forward.

I'm optimistic about making progress on the question of "what skill profiles are most leveraged after we get the first competent AI agents" because:

- few people have actually tried to think about what managing an AI workforce would look like

- this doesn't feel conceptually abstract (e.g. some people have already adopted LMs in their day-to-day workflows)

- something like drop-in replacements for human remote workers are more likely than AIs less analogous to human workers if timelines are short (and if timelines are short, this work is more urgent than if timelines are long)

One important consideration is whether human managers are going to be good proxies for AI-agent managers. It's plausible to me that the majority of object-level AI safety work that has ever been done wi...

Some AI research projects that (afaik) haven't had much work done on them and would be pretty interesting:

- If the US were to co-build secure data centres in allied countries, would that be geopolitically stabilising or destabilising?

- What AI safety research agendas could be massively sped up by AI agents? What properties do they have (e.g. easily checkable, engineering > conceptual ...)?

- What will the first non-AI R&D uses of powerful and general AI systems be?

- Are there ways to leverage cheap (e.g. 100x lower than present-day cost) intelligence or manual labour to massively increase the US's electricity supply?

- What kinds of regulation might make it easier to navigate an intelligence explosion (e.g. establishing quick pathways to implement policy informed by AI experts, or establishing zones where compute facilities can be quickly built without navigating a load of red tape)?

Some quick thoughts on AI consciousness work; I may write up something more rigorous later.

Normally when people have criticisms of the EA movement they talk about its culture or point at community health concerns.

The aspect of EA that makes me more sad is that there seem to be a few issues that are extremely important on an impartial welfarist view but that don’t get much attention at all, despite having been identified at some point by some EAs. I do think that EA has done a decent job of pointing at the most important issues relative to basically every other social movement that I’m aware of, but I’m going to complain about one of its shortcomings anyway.

It looks to me like we could build advanced AI systems in the next few years, and in most worlds we have little idea of what’s actually going on inside them. The systems may tell us they are conscious, or say that they don’t like the tasks we tell them to do, but right now we can’t really trust their self-reports. There’ll be a clear economic incentive to ignore self-reports that create a moral obligation to use the systems in less useful/efficient ways. I expect the number of deployed systems to be very large and that it’ll be ...

(quick thoughts, may be missing something obvious)

Relative to the scale of the long-term future, the number of AIs deployed in the near term is very small, so to me it seems like there's pretty limited upside to improving that. In the long term, it seems like we will have AIs to figure out the nature of consciousness for us.

Maybe I'm missing the case that lock-in is plausible. It currently seems pretty unlikely to me because the singularity seems like it will transform the ways the AIs are run. So in my mind it mostly matters what happens after the singularity.

I'm also not sure about the tractability, but the scale is my major crux.

I do think understanding AI consciousness might be valuable for alignment; I'm just arguing against work on near-term AI suffering.

I'm really glad you wrote this; I've been worried about the same thing. I'm particularly worried about how few people are working on it given the potential scale and urgency of the problem. It also seems like an area where the EA ecosystem has a strong comparative advantage: it deals with issues many in this field are familiar with, requires a blend of technical and philosophical skills, and is still too weird and nascent for the wider world to touch (for now). I'd be very excited to see more research and work done here, ideally quite soon.

Companies often hesitate to grant individual raises due to potential ripple effects:

The true cost of a raise may exceed the individual amount if it:

- Necessitates adjustments to other salaries via organizational policies

- Sets precedents for other employees

- Sparks salary discussions among colleagues

An alternative for altruistic employees: Negotiate for charitable donation matches

- Generally less desirable for most employees

- Similarly attractive to altruists (Assumption: Current donations to eligible charities exceed the proposed raise)

This approach allows altruists to increase their impact without triggering company-wide salary adjustments.
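The ripple-effect argument above is basically arithmetic, so here is a minimal sketch of it. Everything in it is an assumption for illustration: the function names, the idea that knock-on adjustments can be summarised as "number of colleagues affected times an expected adjustment each", and all of the numbers. It also ignores tax treatment entirely.

```python
def total_cost_of_raise(raise_amount, colleagues_affected, expected_adjustment_each):
    """Direct raise plus hypothetical knock-on salary adjustments for colleagues."""
    return raise_amount + colleagues_affected * expected_adjustment_each


def total_cost_of_match(match_amount):
    """A donation match is assumed here to trigger no knock-on adjustments."""
    return match_amount


# Hypothetical numbers, chosen only to illustrate the comparison.
raise_cost = total_cost_of_raise(5_000, colleagues_affected=3, expected_adjustment_each=2_000)
match_cost = total_cost_of_match(5_000)
print(raise_cost, match_cost)
```

Under these made-up numbers the raise costs the company more than its face value while the match does not, which is the whole of the claim. Whether the knock-on terms are actually zero for a match is an assumption, not something the sketch establishes.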

(About 85% confident, not a tax professional)

At least in the US I'm pretty sure this has very poor tax treatment. The company match portion would be taxable to the employee while also not qualifying for the charitable tax deduction. The idea is they can offer a consistent match as policy, but if they're offering a higher match for a specific employee that's taxable compensation. And the employee can only deduct contributions they make, which this isn't quite.

I feel a bit confused about how much I should be donating.

- On the one hand there’s just a straightforward case that donating could help many sentient beings to a greater degree than it helps me. On the other hand, donating 10% for me feels like it’s coming from a place of fitting in with the EA consensus, gaining a certain kind of status and feeling good rather than believing it’s the best thing for me to do.

- I’m also confused about whether I’m already donating a substantial fraction of my income.

- I’m pretty confident that I’m taking at least a 10% pay cut in my current role. If nothing else, my salary right now is not adjusted for inflation, which was ~8% last year, so it feels like I’m underpaid by at least that amount (though it’s possible they were overpaying me before). Many of my friends earn more than twice as much as I do, and I think if I negotiated hard for a 100% salary increase the board would likely comply.

- So how much of my lost salary should I consider to be a donation? I think numbers between 0% and 100% are plausible. -50% also isn’t insane to me, as my salary does funge with other people’s donations to charities.

- One solution is that I should just negotiate

A couple of considerations I've thought about, at least for myself

(1) Fundamentally, giving helps save/improve lives, and that's a very strong consideration that we need equally strong philosophical or practical reasons to overcome.

(2) I think value drift is a significant concern. For less engaged EAs, the risk is about becoming non-EA altogether; for more engaged EAs, it's more about becoming someone less focused on doing good and more concerned with other considerations (e.g. status). This doesn't have to be an explicit thing; rather, it biases the way we reason and decide, so that we end up rationalizing choices that help ourselves over the greater good. Giving (e.g. at the standard 10%) helps anchor against that.

(3) From a grantmaking/donor advisory perspective, I think it's hard to have moral credibility, which can be absolutely necessary (e.g. advising grantees to put up modest salaries in their project proposals, not just to increase project runway but also the chances that our donor partners approve the funding request). And it is both psychologically and practically hard to do this if you're not just earning more but earning far more and not giving to charity...

Hey Caleb!

(I'm writing this in my personal capacity, though I work at GWWC)

On 1: While I think that giving 10% is a great norm for us to have in the community (and to inspire people worldwide who are able to do the same), I don't think there should be pressure for people to take a pledge or donate who don't feel inspired to do so - I'd like to see a community where people can engage in ways that make sense for them and feel welcomed regardless of their donation habits or career choices, as long as they are genuinely engaging with wanting to do good effectively.

On 3: I think it makes sense for people to build up some runway or sense of financial stability, and that they should generally factor this in when considering donating or taking a pledge. I personally only increased my donations to >10% after I felt I had enough financial stability to manage ongoing health issues.

I do think that people should consider how much runway or savings they really need though, and whether small adjustments in lifestyle could increase their savings and allow for more funds to donate - after all, many of us are still in the top few % of global income earners even after taking jobs that pay less than we would get in the private sector.

I guess there are two questions it might be helpful to separate.

- what is the best thing to do with my money if I am purely optimising for the good?

- how much of my money does the good demand?

Looking at the first question (1), I think engaging with the cost of giving (as opposed to the cost of building runway) wrt doing the most good is also helpful. It feels to me like donating $10K to AMF could make me much less able to transition my career to a more impactful path, costing me months, which could mean that several people die that I could have saved via ...

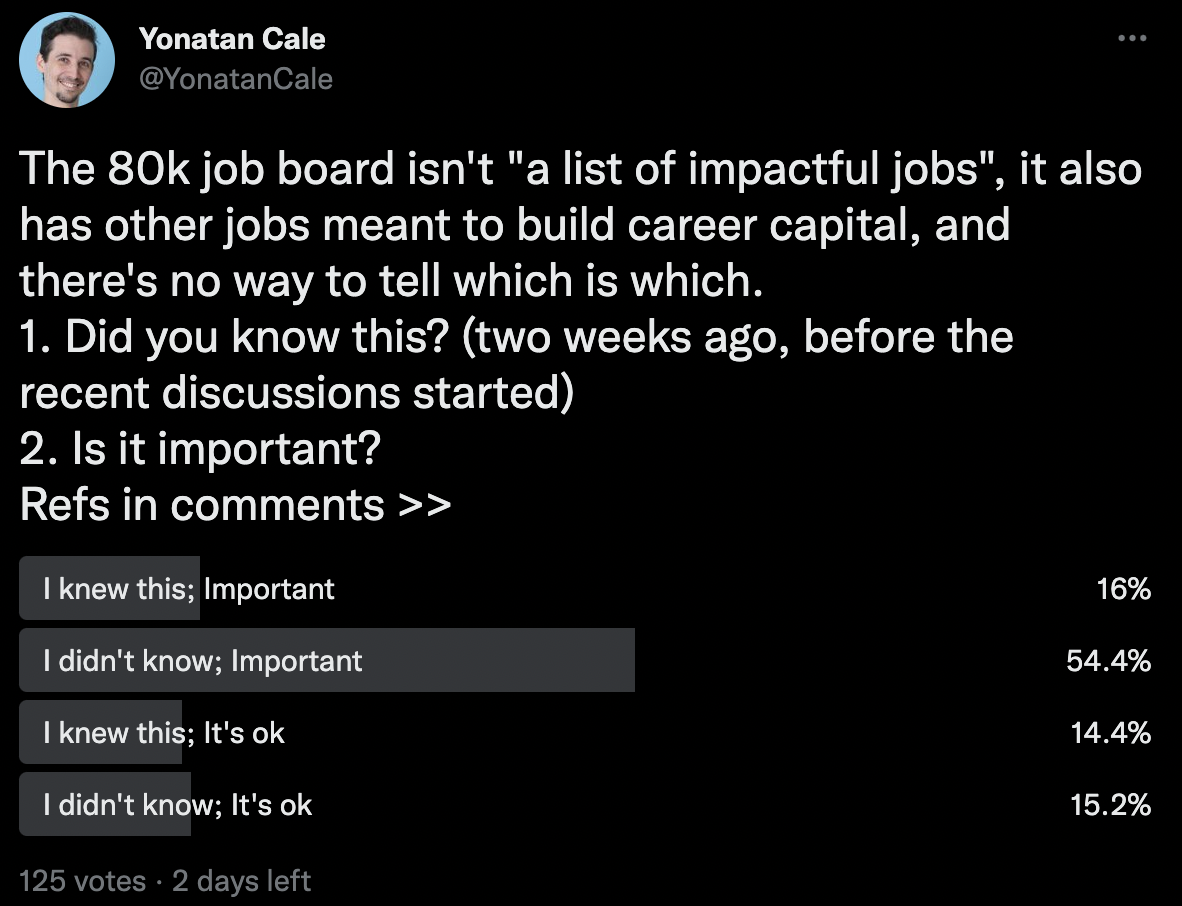

Update 2024-Jul-5 as this seems to be getting some attention again: I am not sure whether I endorse the take below anymore - I think 80k made some UI changes that largely address my concerns.

The 80k job board has too much variance.

(Quickly written, will probably edit at some point in future)

Jobs on the main 80k job board can range from (in my estimation) negligible value to among the best opportunities I'm aware of. I have also seen a few jobs that I think are probably actively harmful (e.g., token alignment orgs trying to build AGI where the founders haven't thought carefully about alignment—based on my conversations with them).

I think a helpful orientation towards jobs on the job board is "at least one person with EA values who happens to work at 80k thinks it's worth signal boosting", and NOT "EA/80k endorses all of these jobs without a lot more thought from potential applicants".

Jobs are also on the board for a few different reasons (e.g., building career capital vs. direct impact vs. ...), and there isn't much info about why a given job is there in the first place.

I think 80k does try to give more of this vibe than people get. I don't mean to imply that they are falling short in an obvi...

TL;DR: I think this is very under-communicated

You have that line there, but I didn't notice it in years, and I recently talked to other people who didn't notice it and were also very surprised. The only person I think I talked to who maybe knew about it is Caleb, who wrote this shortform.

Everyone (I talked to) thinks 80k is the place to find an impactful job.

Maybe the people I talk to are a very biased sample somehow. That could be, but they do include many people who are trying to have a high impact with their career right now.

Very half baked

Should recent AI progress change the plans of people working on global health who are focused on economic outcomes?

It seems like many more people are on board with the idea that transformative AI may come soon, let’s say within the next 10 years. This pretty clearly has ramifications for people working on longtermist cause areas, but I think it should probably affect some neartermist cause prioritisation as well.

If you think that AI will go pretty well by default (which I think many neartermists do) I think you should expect to see extremely rapid economic growth as more and more of industry is delegated to AI systems.

I’d guess that you should be much less excited about interventions like deworming or other programs that are aimed at improving people’s economic position over a number of decades. Even if you think the economic boosts from deworming and AI will stack, and you won’t have sharply diminishing returns on well-being with wealth, I think you should be especially uncertain about your ability to predict the impact of actions in the crazy advanced AI world (which would generally make me more pessimistic about how useful the thing I’m working on is).

I don’t have...

If you want there to be more great organisations, don’t lower the bar

I sometimes hear a take along the lines of “we need more founders who can start organisations so that we can utilise EA talent better”. People then propose projects that make it easier to start organisations.

I think this is a bit confused. I think the reason that we don’t have more founders is that few people have both deep models in some high-leverage area and a vision for a project. I don’t think many projects aimed at starting new organisations are really tackling this bottleneck at its core; instead they lower the bar by helping people access funding, or appear better positioned than they actually are.

I think in general people that want to do ambitious things should focus on building deep domain knowledge, often by working directly with people with deep domain knowledge. The feedback loops are just too poor within most EA cause areas to be able to learn effectively by starting your own thing. This isn’t always true, but I think it’s more often than not true for most new projects that I see.

I don’t think the normal startup advice where running a startup will teach you a lot applies well here. Most start...

A quickly written model of epistemic health in EA groups I sometimes refer to

I think that many well-intentioned EA groups do a bad job cultivating good epistemics. By this I roughly mean the culture of the group does not differentially advantage truth-seeking discussions or other behaviours that help us figure out what is actually true as opposed to what is convenient or feels nice.

I think that one of the main reasons for this is poor gatekeeping of EA spaces. I do think groups do more and more gatekeeping, but they are often not selecting on epistemics as hard as I think they should be. I’m going to say why I think this is and then gesture at some things that might improve the situation. I’m not going to talk at this time about why I think it’s important - but I do think it’s really really important.

EA group leaders often exercise a decent amount of control over who should be part of their group (which I think is great). Unfortunately, it’s much easier to evaluate what conclusion a person has come to than how good their reasoning processes were. So "what a person says they think" becomes the filter for who gets to be in the group, as opposed to how they think. Intuitively I ex...

Why handing over vision is hard.

I often see projects of the form [come up with some ideas] -> [find people to execute on ideas] -> [hand over the project].

I haven't really seen this work very much in practice. I have two hypotheses for why.

- The skills required to come up with great projects are pretty well correlated with the skills required to execute them. If someone wasn't able to come up with the idea in the first place, it's evidence against them having the skills to execute well on it.

- Executing well looks less like firing a cannon and more like deploying a heat-seeking missile. In reality, most projects are a sequence of decisions that build on each other, and the executors need to have the underlying algorithm to keep the project on track. In general, when someone explains a project, they communicate roughly where the target is and the initial direction to aim in, but it's much harder to hand off the algorithm that keeps the missile on track.

I'm not saying separating out ideas and execution is impossible, just that it's really hard and good executors are rare and very valuable. Good ideas are cheap and easy to come by, but good execution is expensive.

A formula that I more often see work well is [a person has an idea] -> [person executes well on their own idea until they are doing something they understand very well "from the inside" or that is otherwise hand-over-able] -> [person hands over the project to a competent executor].

The importance of “inside view excitement”

Another model I regularly refer to when advising people on projects to pursue. Quickly written - may come back and try to clarify this later.

I think it’s generally really important for people to be inside view excited about their projects. By which I mean, they think the project is good based on their own model of how the project will interact with the world.

I think this is important for a few reasons. The first obvious one is that it’s generally much more motivating to work on things you think are good.

The second, and more interesting reason, is that if you are not inside view excited I think (generally speaking) you don’t actually understand why your project will succeed. Which makes it hard to execute well on your project. When people aren’t inside view excited about their project I get the sense they either have the model and don’t actually believe the project is good, or they are just deferring to others on how good it is which makes it hard to execute.

One of my criticisms of criticisms

I often see public criticisms of EA orgs claiming poor execution on some object level activity or falling short on some aspect of the activity (e.g. my shortform about the 80k jobs board). I think this is often unproductive.

In general I think we want to give feedback to change the organisation's policy (decision-making algorithm), and maybe the EA movement's policy. When you publicly criticise an org on some activity, you should be aware that you are disincentivising the org from generally doing stuff.

Imagine the case where the org was choosing between scrappily running a project to get data and some of the upside value strategically as opposed to carefully planning and failing to execute fully. I think in these cases you should react differently and from the outside it is hard to know which situation the org was in.

If we also criticised orgs for not doing enough stuff I might feel differently, but this is an extremely hard criticism to make unless you are on the inside. I'd only trust a few people who didn't have inside information to do this kind of analysis.

Maybe a good idea would be to describe the amount of resources that would have had to have g...

(crosspost of a comment on imposter syndrome that I sometimes refer to)

I have recently found it helpful to think about how important and difficult the problems I care about are and recognise that on priors I won't be good enough to solve them. That said, the EV of trying seems very very high, and people that can help solve them are probably incredibly useful.

So one strategy is to just try and send lots of information that might help the community work out whether I can be useful, into the world (by doing my job, taking actions in the world, writing p...

More EAs should give rationalists a chance

My first impression of rationalists came from an AI safety retreat a few years ago. I had a bunch of conversations that were decidedly mixed and made me think that they weren’t taking the project of doing a large amount of good seriously, weren’t reasoning carefully (as opposed to just parroting rationalist memes), and weren’t any better at winning than the standard EA types that I felt were more ‘my crowd’.

I now think that I just met the wrong rationalists early on. The rationalists that I most admire:

- Care deeply about their va

I adjust upwards on EAs who haven't come from excellent groups

I spend a substantial amount of my time interacting with community builders and doing things that look like community building.

It's pretty hard to get a sense of someone's values, epistemics, agency .... by looking at their CV. A lot of my impression of people that are fairly new to the community is based on a few fairly short conversations at events. I think this is true for many community builders.

I worry that there are some people who were introduced to some set of good ideas first, and then ...

‘EA is too elitist’ criticisms seem to be more valid from a neartermist perspective than a longtermist one

I sometimes see criticisms around

- EA is too elitist

- EA is too focussed on exceptionally smart people

I do think that you can have a very outsized impact even if you're not exceptionally smart, dedicated, driven etc. However I think that from some perspectives focussing on outliery talent seems to be the right move.

A few quick claims that push towards focusing on attracting outliers:

- The main problems that we have are technical in nature (particularl

I guess there are two questions it might be helpful to separate.

Looking at the first question (1), I think engaging with the cost of giving (as opposed to the cost of building runway) wrt doing the most good is also helpful. It feels to me like donating $10K to AMF could make me much less able to transition my career to a more impactful path, costing me months, which could mean that several people die that I could have saved via donating to GiveWell charities.

It feels like the "cost" applies symmetrically to the runway and donating cases and pushes towards "you should take this seriously" instead of having a high bar for spending money on runway/personal consumption.

Looking at (2) - Again I broadly agree with the overall point, but it doesn't really push me towards a particular percentage to give.