Key insights


Findings

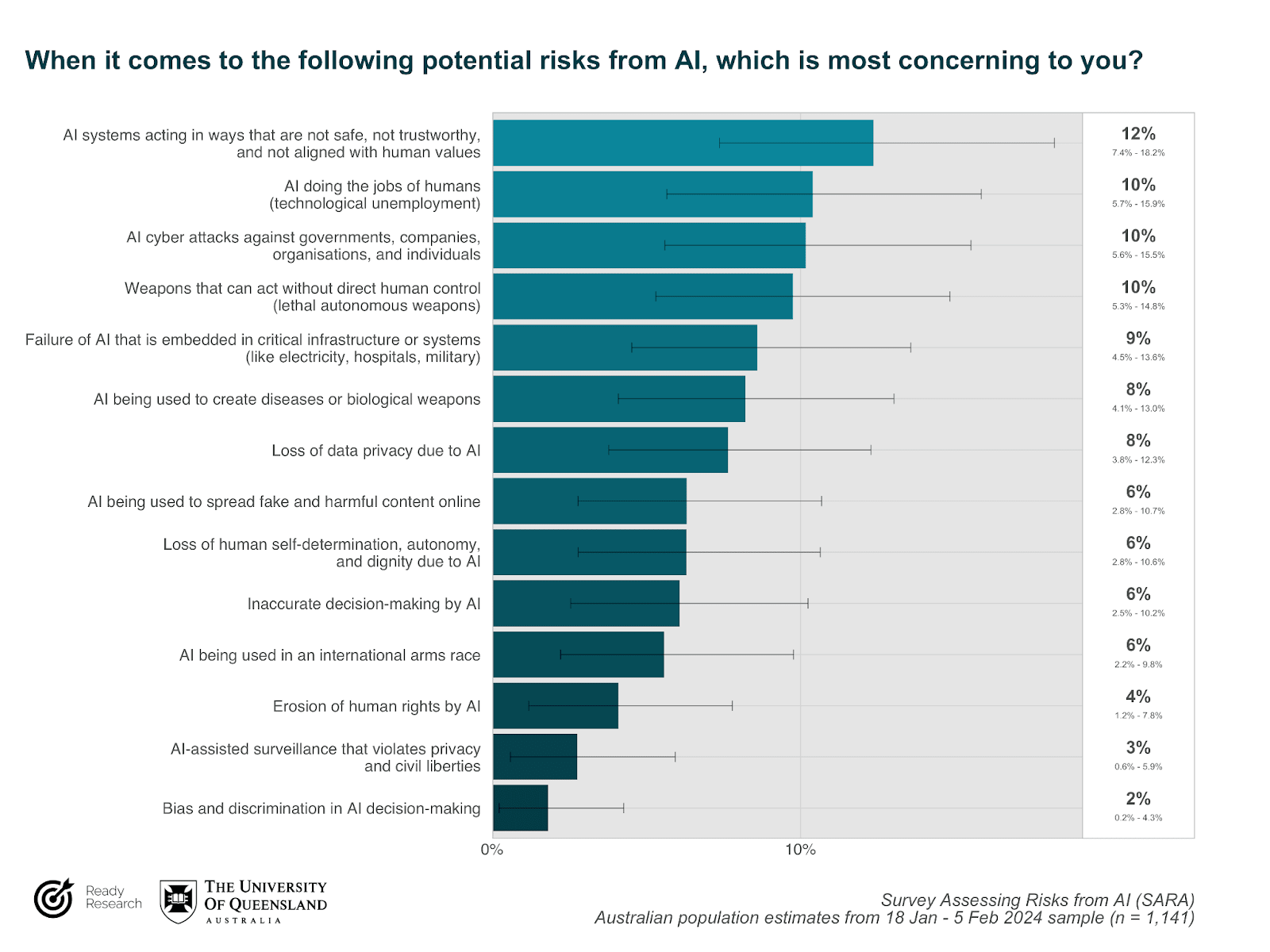

Australians are concerned about diverse risks from AI

Australians are sceptical of the promise of artificial intelligence: 4 in 10 support the development of AI, 3 in 10 oppose it, and opinions are divided about whether AI will be a net good (4 in 10) or a net harm (4 in 10).

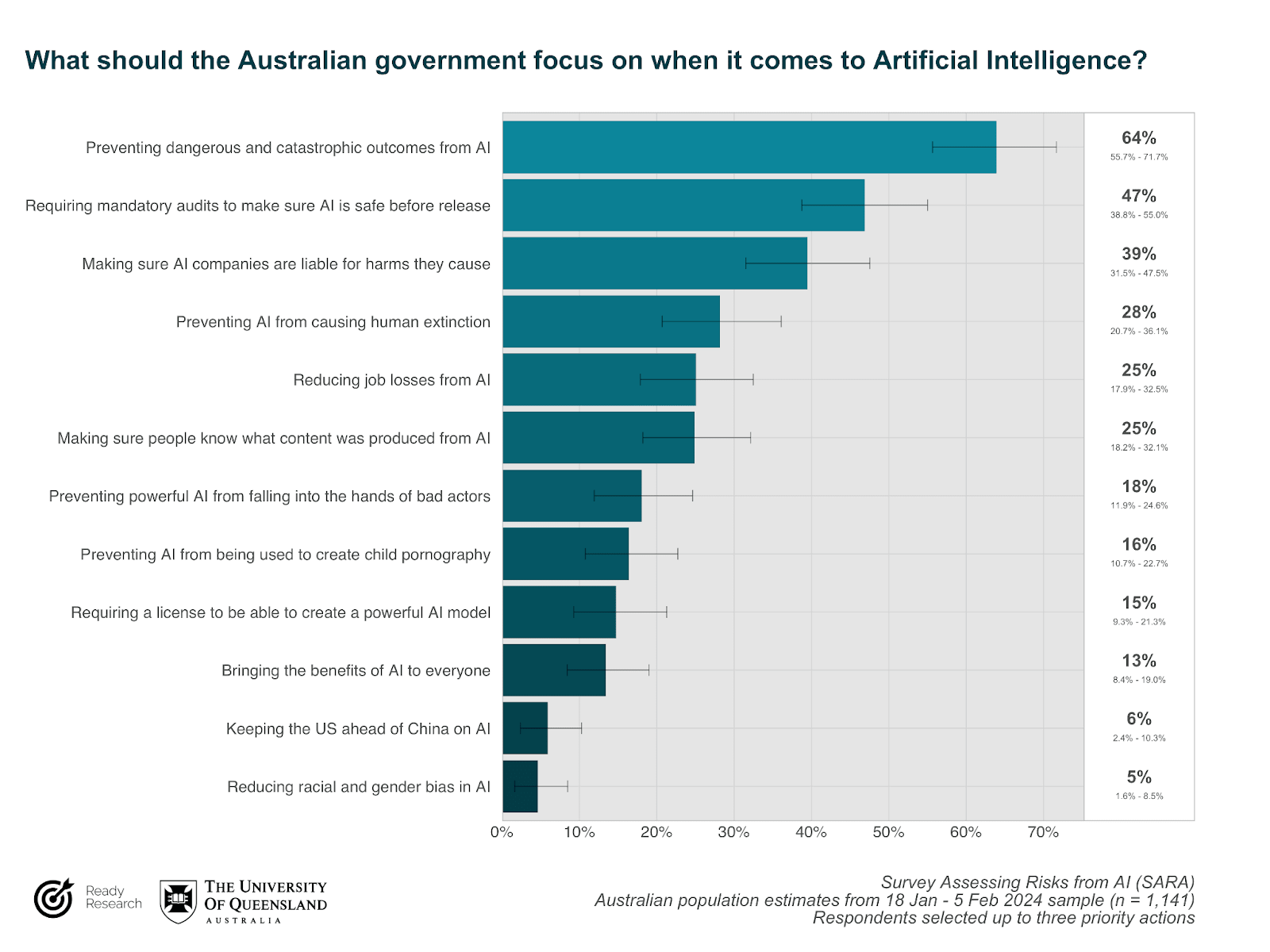

Australians support regulatory and non-regulatory action to address risks from AI

When asked to choose the top 3 AI priorities for the Australian Government, the #1 selected priority was preventing dangerous and catastrophic outcomes from AI. Other actions prioritised by at least 1 in 4 Australians included (1) requiring audits of AI models to make sure they are safe before being released, (2) making sure that AI companies are liable for harms, (3) preventing AI from causing human extinction, (4) reducing job losses from AI, and (5) making sure that people know when content is produced using AI.

Almost all (9 in 10) Australians think that AI should be regulated by a national government body, similar to how the Therapeutic Goods Administration acts as a national regulator for drugs and medical devices. 8 in 10 Australians think that Australia should lead the international development and governance of AI.

Australians take catastrophic and extinction risks from AI seriously

Australians consider the prevention of dangerous and catastrophic outcomes from AI the #1 priority for the Australian Government. In addition, a clear majority (8 in 10) of Australians agree with AI experts, technology leaders, and world political leaders that preventing the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war [1].

AI was judged the third most likely cause of human extinction, after nuclear war and climate change, and more likely than a pandemic or an asteroid impact. About 1 in 3 Australians think it is at least ‘moderately likely’ that AI will cause human extinction in the next 50 years.

Implications and actions supported by the research

Findings from SARA show that Australians are concerned about diverse risks from AI, especially catastrophic risks, and expect the Australian Government to address these through strong governance action.

Australians’ ambivalence about AI, and their expectation of strong governance action to address its risks, is a consistent theme of public opinion research in this area [2–4].

Australians are concerned about a wider range of AI risks than the Government is addressing

The Australian Government published an interim response to its Safe and Responsible AI consultation [5]. As part of its interim response, the Government plans to address known risks and harms from AI by strengthening existing laws, especially in areas of privacy, online safety, and mis/disinformation.

Findings from SARA show that some Australians are concerned about privacy, online safety, and mis/disinformation risks, so government action in these areas is a positive step. However, the risks that Australians are most concerned about are not a focus of the Government’s interim response. These priority risks include AI systems being misused or accidentally acting in ways that harm people, AI-enabled cyber attacks, and job loss due to AI. The Government must broaden its consideration of AI risks to include those identified as high priority by Australians.

Australians want Government to establish a national regulator for AI, require pre-release safety audits, and make companies liable for AI harms

The Government plans to develop a voluntary AI Safety Standard and voluntary watermarking of AI-generated materials. Findings from SARA show that Australians support stronger Government action, including mandatory audits to make sure AI is safe before release [6] and making AI companies liable for harms caused by AI [7]. Australians show strong support for a national regulatory authority for AI; this support has been consistently high since at least 2020 [4]. To meet these expectations, the Government should establish a national regulator for AI and implement strong action to limit harms from AI.

Australians want Government action to prevent dangerous and catastrophic outcomes from frontier and general-purpose models

In its interim response, the Government described plans to establish mandatory safeguards for ‘legitimate, high-risk settings’ to ‘ensure AI systems are safe when harms are difficult or impossible to reverse’, as well as for ‘development, deployment and use of frontier or general-purpose models’.

Findings from SARA indicate that Australians want the Government, as its #1 priority action, to prevent dangerous and catastrophic outcomes from AI. Frontier and general-purpose models carry the greatest risk of catastrophic outcomes [8], and their capabilities are advancing without clear safety measures. Australians believe preventing the risk of extinction from AI should be a global priority. To meet Australians’ expectations, the Government must ensure it can understand and respond to emergent and novel risks from these AI models.

Research context and motivation

The development and use of AI technologies is accelerating. Across 2022 and 2023, new large-scale models were announced monthly and could perform increasingly complex and general tasks [9]; this trend has continued into 2024 with Google DeepMind’s Gemini, OpenAI’s Sora, and others. AI experts forecast that the development of powerful AI models could lead to radical changes in wealth, health, and power on a scale comparable to the nuclear and industrial revolutions [10,11].

Addressing the risks and harms from these changes requires effective AI governance: forming robust norms, policies, laws, processes and institutions to guide good decision-making about AI development, deployment and use [12]. Effective governance is especially crucial for managing extreme or catastrophic risks from AI that are high impact and uncertain, such as harm from misuse, accident or loss of control [8].

Understanding public beliefs and expectations about AI risks, and about possible responses to those risks, is important for ensuring that the ethical, legal, and social implications of AI are addressed through effective governance. We conducted the Survey Assessing Risks from AI (SARA) to generate ‘evidence for action’, to help public and private actors make the decisions needed for safer AI development and use.

About the Survey Assessing Risks from AI

Ready Research and The University of Queensland collaborated to design and conduct the Survey Assessing Risks from AI (SARA). This briefing presents topline findings. Visit the website or read the technical report for more information on the project, or contact Dr Alexander Saeri (a.saeri@uq.edu.au).

Between 18 January and 5 February 2024, The University of Queensland surveyed 1,141 adults living in Australia online, using the Qualtrics survey platform. Participants were recruited through the Online Research Unit's panel, with nationally representative quota sampling by gender, age group, and state/territory. Multilevel regression with poststratification (MRP) was used to create Australian population estimates and confidence intervals, using 2021 Census information about sex, age, state/territory, and education. The research project was reviewed and approved by UQ Research Ethics (Project 2023/HE002257).
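The final step of MRP is poststratification: estimates for each demographic cell are weighted by how many people in the population fall into that cell, then averaged. Below is a minimal Python sketch of that weighting step only, assuming the cell-level probabilities (`theta`) have already been produced by a fitted multilevel model. The `Cell` type, the `poststratify` helper, and all counts and probabilities are hypothetical placeholders, not SARA data or SARA's code (the comments below note the project's analysis was written in R), and the sketch omits model fitting and the confidence intervals the survey reports.

```python
from collections import defaultdict
from typing import Callable, Iterable, NamedTuple


class Cell(NamedTuple):
    """One poststratification cell (hypothetical structure for illustration)."""
    state: str
    age_group: str
    sex: str
    education: str
    census_count: int  # population count for this cell, e.g. from Census tables
    theta: float       # modelled probability of agreement in this cell


def poststratify(
    cells: Iterable[Cell],
    group_by: Callable[[Cell], str] = lambda c: "Australia",
) -> dict:
    """Return the census-weighted average of cell estimates for each group."""
    population = defaultdict(float)
    weighted_sum = defaultdict(float)
    for cell in cells:
        key = group_by(cell)
        population[key] += cell.census_count
        weighted_sum[key] += cell.census_count * cell.theta
    return {key: weighted_sum[key] / population[key] for key in population}


# Made-up cells for illustration only; a real analysis covers every combination
# of the poststratification variables, with theta taken from a fitted model.
cells = [
    Cell("NSW", "18-34", "F", "degree", 300, 0.6),
    Cell("NSW", "55+", "M", "no degree", 100, 0.2),
    Cell("QLD", "18-34", "M", "degree", 100, 0.5),
    Cell("QLD", "55+", "F", "no degree", 100, 0.3),
]

national = poststratify(cells)
by_state = poststratify(cells, group_by=lambda c: c.state)
print(national)
print(by_state)
```

With these placeholder cells, the national estimate is 280/600 (about 0.467), and the state estimates are 0.5 for NSW and 0.4 for QLD. Grouping by a different key reuses the same cell estimates, which is one reason MRP is attractive for producing subnational figures from a single national sample.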

This project was funded by the Effective Altruism Infrastructure Fund.

References

1. Center for AI Safety. Statement on AI risk. https://www.safe.ai/statement-on-ai-risk (2023).

2. Lockey, S., Gillespie, N. & Curtis, C. Trust in Artificial Intelligence: Australian Insights. https://espace.library.uq.edu.au/view/UQ:b32f129 (2020) doi:10.14264/b32f129.

3. Gillespie, N., Lockey, S., Curtis, C., Pool, J. & Akbari, A. Trust in Artificial Intelligence: A Global Study. https://espace.library.uq.edu.au/view/UQ:00d3c94 (2023) doi:10.14264/00d3c94.

4. Selwyn, N., Cordoba, B. G., Andrejevic, M. & Campbell, L. AI for Social Good - Australian Attitudes toward AI and Society. https://bridges.monash.edu/articles/report/AI_for_Social_Good_-_Australian_Attitudes_Toward_AI_and_Society_Report_pdf/13159781/1 (2020) doi:10.26180/13159781.V1.

5. Safe and Responsible AI in Australia Consultation: Australian Government’s Interim Response. https://consult.industry.gov.au/supporting-responsible-ai (2024).

6. Shevlane, T. et al. Model evaluation for extreme risks. arXiv [cs.AI] (2023) doi:10.48550/arXiv.2305.15324.

7. Weil, G. Tort Law as a Tool for Mitigating Catastrophic Risk from Artificial Intelligence. (2024) doi:10.2139/ssrn.4694006.

8. Anderljung, M. et al. Frontier AI Regulation: Managing Emerging Risks to Public Safety. arXiv [cs.CY] (2023) doi:10.48550/arXiv.2307.03718.

9. Maslej, N. et al. Artificial Intelligence Index Report 2023. arXiv [cs.AI] (2023).

10. Grace, K., Stein-Perlman, Z., Weinstein-Raun, B. & Salvatier, J. 2022 Expert Survey on Progress in AI. https://aiimpacts.org/2022-expert-survey-on-progress-in-ai/ (2022).

11. Davidson, T. What a Compute-Centric Framework Says about Takeoff Speeds. https://www.openphilanthropy.org/research/what-a-compute-centric-framework-says-about-takeoff-speeds/ (2023).

12. Dafoe, A. AI governance: Opportunity and theory of impact. https://www.allandafoe.com/opportunity (2018).

Nice! I was surprised that more present-day harms were not more front of mind for respondents (e.g. job losses, AI pornography, and racial and gender bias were far below preventing catastrophic outcomes). Interesting.

Great that you got it into The Conversation! And I appreciate the key takeaways box at the start here.

Just FYI, the link at the top doesn't work for me.

Thanks Peter. Fixed!

Thanks for sharing. This is a very insightful piece. I'm surprised that folks were more concerned about larger-scale, abstract risks than about better-defined, smaller-scale risks (like bias). I'm also surprised that they are this pro-regulation (including a six-month pause). Given this, I'm a bit confused that they mostly support the development of AI, and I wonder what most shaped their views.

Overall, I mildly worry that the survey led people to express more concern than they feel, because this seems surprisingly close to my perception of the views of many existential risk "experts". What do you think?

Would love to see this for other countries too. How feasible do you think that would be?

Thanks Seb. I'm not that surprised: public surveys in the Existential Risk Persuasion Tournament gave pretty high estimates (5% for AI). I don't think most people are good at calibrating probabilities between 0.001% and 10% (myself included).

I don't have strong hypotheses about why people 'mostly support' something they also want treated with such care. My weak one would be: 'people like technology, but when asked what the government should do, they want it to keep them safe (remove the biggest threats).' For example, Australians support acquiring nuclear submarines but also support the ban on nuclear weapons. I don't necessarily see this as a contradiction: "keep me safe" priorities would lead to both. I don't know whether our results would have changed if we had made the trade-offs more salient (e.g., here's what you'd lose if we took this policy action prioritising risks). Interested in suggestions for how we could do that better.

It'd be easy for us to run it in other countries. We'll put the data and code online soon. If someone's keen to run the 'get it into the hands of people who want to use it' piece, we could do the 'run the survey and write a technical report' piece. It's all in R, so the marginal cost of another country is low. We'd need access to census data to do the statistical adjustment that estimates population agreement (but it should be easy to check whether that's available).

Thanks. Hmm. The vibe I'm getting from these answers is P(extinction) > 5% (which is higher than the XPT you linked).

Ohh that's great. We're starting to do significant work in India and would be interested in knowing similar things there. Any idea of what it'd cost to run there?

I'll look into it. The census data part seems okay. Collecting a representative sample would be harder (e.g., literacy rates are lower, so I don't know how to estimate responses for those groups).

That makes sense. We might do some more strategic outreach later this year where a report like this would be relevant, but for now I don't have a clear use case in mind, so it's probably better to wait. Approximately how much time would you need to run this?

Our project took approximately 2 weeks FTE for 3 people (most was parallelisable). Probably the best reference class.

Very helpful. I'll keep it in mind if the use case/need emerges in the future.

Executive summary: A survey of Australians found high levels of concern about risks from AI, especially catastrophic risks, and strong support for government action to regulate AI and prevent dangerous outcomes.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.