I didn't want to read all of @Vasco Grilo🔸's post on the "meat eating" problem and all 80+ comments, so I expanded all the comments and copy/pasted the entire webpage into Claude with the following prompt: "Please give me a summary of the authors argument (dot points, explained simply) and then give me a summary of the kinds of push back he got (dot points, explained simply, thematised, giving me a sense of the concentration/popularity of themes in the push back)"

Below is the result (the Forum team might want to consider how posts with large numbers of comments can be read quickly):

- The author claims that saving lives in developing countries might cause more harm than good in the short term because:

- When people are saved from death, they consume animal products

- The suffering of farm animals (especially chickens and farmed fish/shrimp) from being raised and killed outweighs the happiness of the human life saved

- Using specific calculations, they estimate that one average person causes 15.5 times more animal suffering than human happiness globally (with higher ratios in some countries)

- The author specifically criticizes two organizations:

- GiveWell (for granting $1.09 billion without consid

I met Australia's Assistant Minister for Defence last Friday. I asked him to write an email to the Minister in charge of AI, asking him to establish an AI Safety Institute. He said he would. He also seemed on board with not having fully autonomous AI weaponry.

All because I sent one email asking for a meeting + had said meeting.

Advocacy might be the lowest hanging fruit in AI Safety.

I don't know how to say this in a way that won't come off harsh, but I've had meetings with > 100 people in the last 6 months in AI Safety and I've been surprised how poor the industry standard is for people:

- Planning meetings (writing emails that communicate what the meeting will be about, its purpose, my role)

- Turning up to scheduled meetings (like literally, showing up at all)

- Turning up on time

- Turning up prepared (i.e. with informed opinions on what we're going to discuss)

- Turning up with clear agendas (if they've organised the meeting)

- Running the meetings

- Following up after the meeting (clear communication about next steps)

I do not have an explanation for what is going on here but it concerns me about the movement interfacing with government and industry. My background is in industry and these just seem to be things people take for granted?

It is possible I give off a chill vibe, leading to people thinking these things aren't necessary, and they'd switch gears for more important meetings!

When AI Safety people are also vegetarians, vegans or reducetarian, I am pleasantly surprised, as this is one (of many possible) signals to me they're "in it" to prevent harm, rather than because it is interesting.

I am skeptical that exhibiting one of the least cost-effective behaviors for the purpose of reducing suffering would be correlated much with being "in it to prevent harm". Donating to animal charities, or being open to trade with other people about reducing animal suffering, or other things that are actually effective seem like much better indicators that people are actually in it to prevent harm.

My best guess is that most people who are vegetarians, vegans or reducetarians, and are actually interested in scope-sensitivity, are explicitly doing so for signaling and social coordination reasons (which to be clear, I think has a bunch going for it, but seems like the kind of contingent fact that makes it not that useful as a signal of "being in it to prevent harm").

Have you actually just talked to people about their motives for reducing their animal product consumption, or read about them? Do you expect them to tell you it's mostly for signaling or social coordination, or (knowingly or unknowingly) lie that it isn't?

I'd guess only a minority of EAs go veg mostly for signaling or social coordination.

Other reasons I find more plausibly common, even among those who make bigger decisions in scope-sensitive ways:

- Finding it cost-effective in an absolute sense, e.g. >20 chickens spared per year, at small personal cost (usually ignoring opportunity costs to help others more). (And then maybe falsely thinking you should go veg to do this, rather than just cut out certain animal products, or finding it easier to just go veg than navigate exceptions.)

- Wanting to minimize the harm they personally cause to others, or to avoid participating in overall harmful practices, or deontological or virtue ethics reasons, separately from actively helping others or utilitarian-ish reasons.

- Not finding not eating animal products very costly, or not being very sensitive to the costs. It may be easy or take from motivational "budgets" psychologically separate from wo

This is an old thread, but I'd like to confirm that a high fraction of my motivation for being vegan[1] is signaling to others and myself. (So, n=1 for this claim.) (A reasonable fraction of my motivation is more deontological.)

[1] I eat fish rarely as I was convinced that the case for this improving productivity is sufficiently strong.

Happy new year everyone! I’m extremely grateful to have this community in my life!

A psychological trap EAs might want to watch out for: don't mistake indecision for wisdom.

Sometimes the better option is to just put something out into the world in a small format and get information back.

Of course the reverse works: don't mistake decisiveness for bravery. But I don't think this is a problem for EAs :)

People in EA are ridiculously helpful. I've been able to get a Careers Conference off the ground because people in other organisations are happy to help out in significant ways. In particular, Elliot Teperman and Bridget Loughhead from EA Australia, Greg Sadler from the Good Ancestors Project and Chris Leong. Several people have also volunteered to help at the Conference as well as on other tasks, such as running meetups and creating content.

In theory I'm running an organisation by myself (via an LTFF grant), but because it is nested within the EA/AIS space, I've got the support of a big network. Otherwise this whole project would be insane to start.

If someone is serious about doing Movement Building, but worries about doing it alone, this feels like important information.

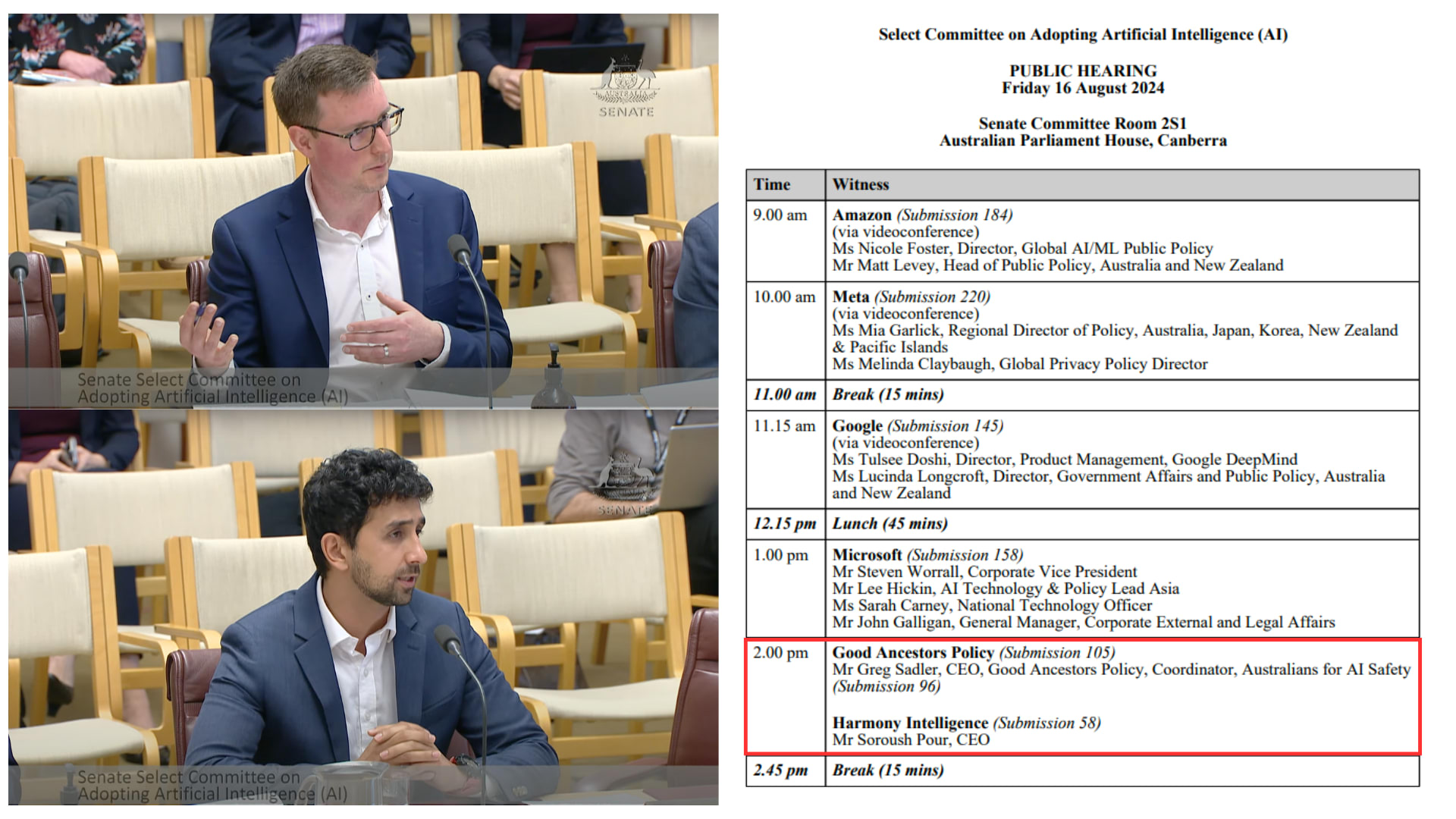

[IMAGE] Extremely proud and excited to watch Greg Sadler (CEO of Good Ancestors Policy) and Soroush Pour (Co-Founder of Harmony Intelligence) speak to the Australian Federal Senate Select Committee on Artificial Intelligence. This is a big moment for the local movement. Also, check out who they ran after.

AI Safety has less money, talent, political capital, tech and time. We have only one distinct advantage: support from the general public. We need to start working that advantage immediately.

I think it is good to have some ratio of upvoted/agreed : downvoted/disagreed posts in your portfolio. I think if all of your posts are upvoted/high agreement then you're either playing it too safe or you've eaten the culture without chewing first.

I think some kinds of content are uncontroversially good (e.g. posts that are largely informational rather than persuasive), so I think some people don't have a trade-off here.

What is your AI Capabilities Red Line Personal Statement? It should read something like "when AI can do X in Y way, then I think we should be extremely worried / advocate for a Pause*".

I think it would be valuable if people started doing this; we can't feel when we're on an exponential, so it's likely we will have powerful AI creep up on us.

@Greg_Colbourn just posted this and I have an intuition that people are going to read it and say "while it can do Y it still can't do X"

*in the case you think a Pause is ever optimal.

I'm pretty confident that Marketing is in the top 1-3 skill bases for aspiring Community / Movement Builders.

When I say Marketing, I mean it in the broad sense it used to mean. In recent years "Marketing" = "Advertising", but I use the classic Four P's of Marketing to describe it.

The best places to get such a skill base are FMCG / mass marketing organisations such as the below. Second best would be consulting firms (McKinsey & Company):

- Procter & Gamble (P&G)

- Unilever

- Coca-Cola

- Amazon

1. Product

- What you're selling (goods or services)

- Features and benefits

- Quality, design, packaging

- Brand name and reputation

- Customer service and support

2. Price

- Retail/wholesale pricing

- Discounts and promotions

- Payment terms

- Pricing strategy (premium, economy, etc.)

- Price comparison with competitors

3. Place (Distribution)

- Sales channels

- Physical/online locations

- Market coverage

- Inventory management

- Transportation and logistics

- Accessibility to customers

4. Promotion

- Advertising

- Public relations

- Sales promotions

- Direct marketing

- Digital marketing

- Personal selling

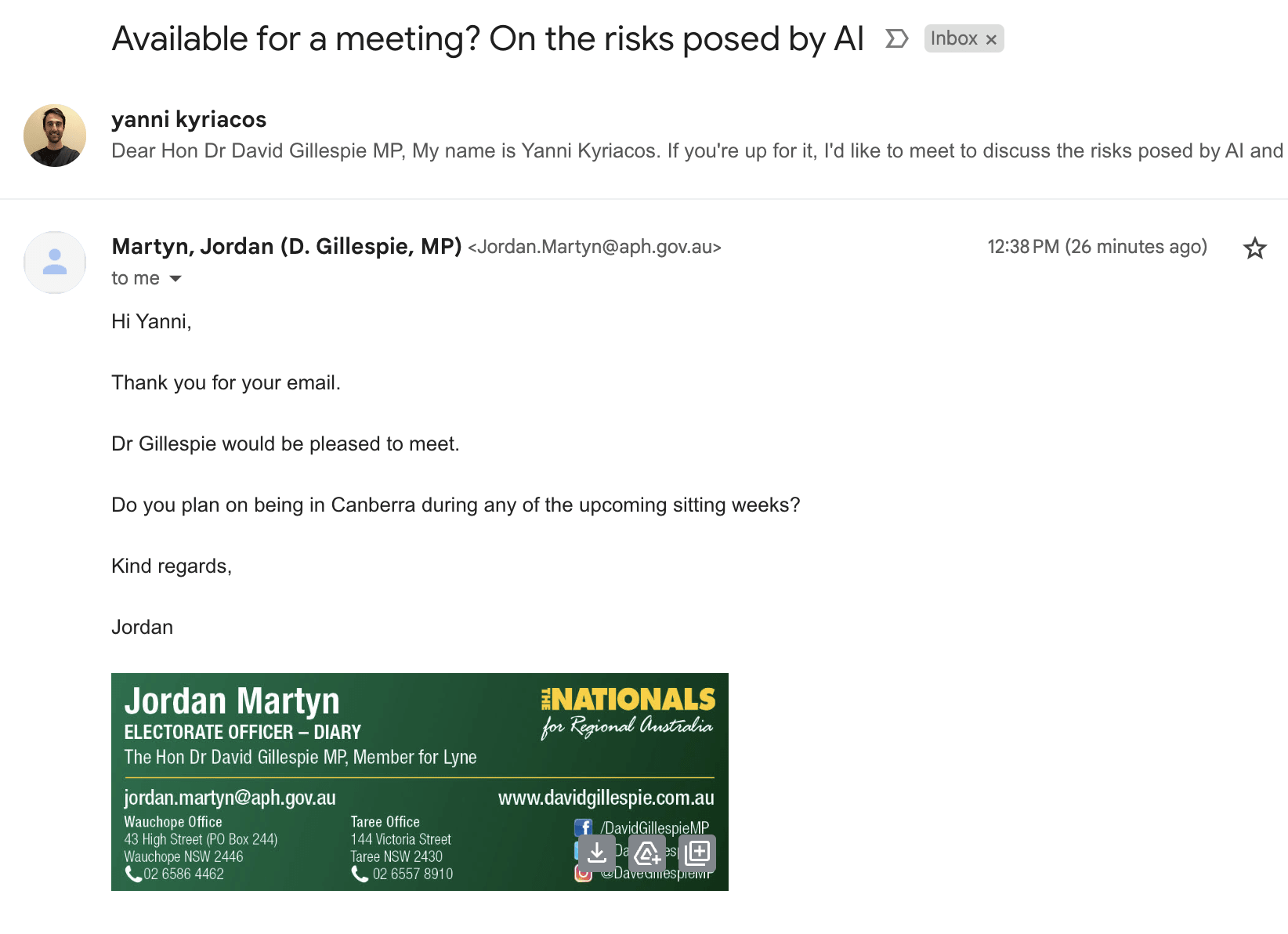

A periodic reminder that you can just email politicians and then meet them (see screenshot below).

Big AIS news imo: “The initial members of the International Network of AI Safety Institutes are Australia, Canada, the European Union, France, Japan, Kenya, the Republic of Korea, Singapore, the United Kingdom, and the United States.”

H/T @shakeel

Flaming hot take: I wonder if some EAs suffer from Scope Oversensitivity - essentially the inverse of the identifiable victim effect. Take the animal welfare vs global health debate: are we sometimes biased by the sheer magnitude of animal suffering numbers, rather than other relevant factors? Just as the identifiable victim effect leads people to overweight individual stories, maybe we're overweighting astronomical numbers.

EAs pride themselves on scope sensitivity to combat emotional biases, but taken to an extreme, could this create its own bias? Are we sometimes too seduced by bigger numbers = bigger problem? The meta-principle might be that any framework, even one designed to correct cognitive biases, needs wisdom and balance to avoid becoming its own kind of distortion.

Another shower thought - I’ve been vegetarian for 7 years and vegan for 6.5.

I find it very surprising and interesting how little it has nudged anyone in my friends / family circle to reduce their consumption of meat.

I would go as far as to say it has made literally no difference!

Keep in mind I’m surrounded by extremely compassionate and mostly left leaning people.

I also have relatively wide networks and I normally talk about animal welfare in a non annoying way.

You’d have thought in that time one person would have been nudged - nope!

My intention going vegan wasn’t as some social statement, but if you’d asked me 7 years later would there have been some social effect, I would have guessed yes.

I'm surprised!

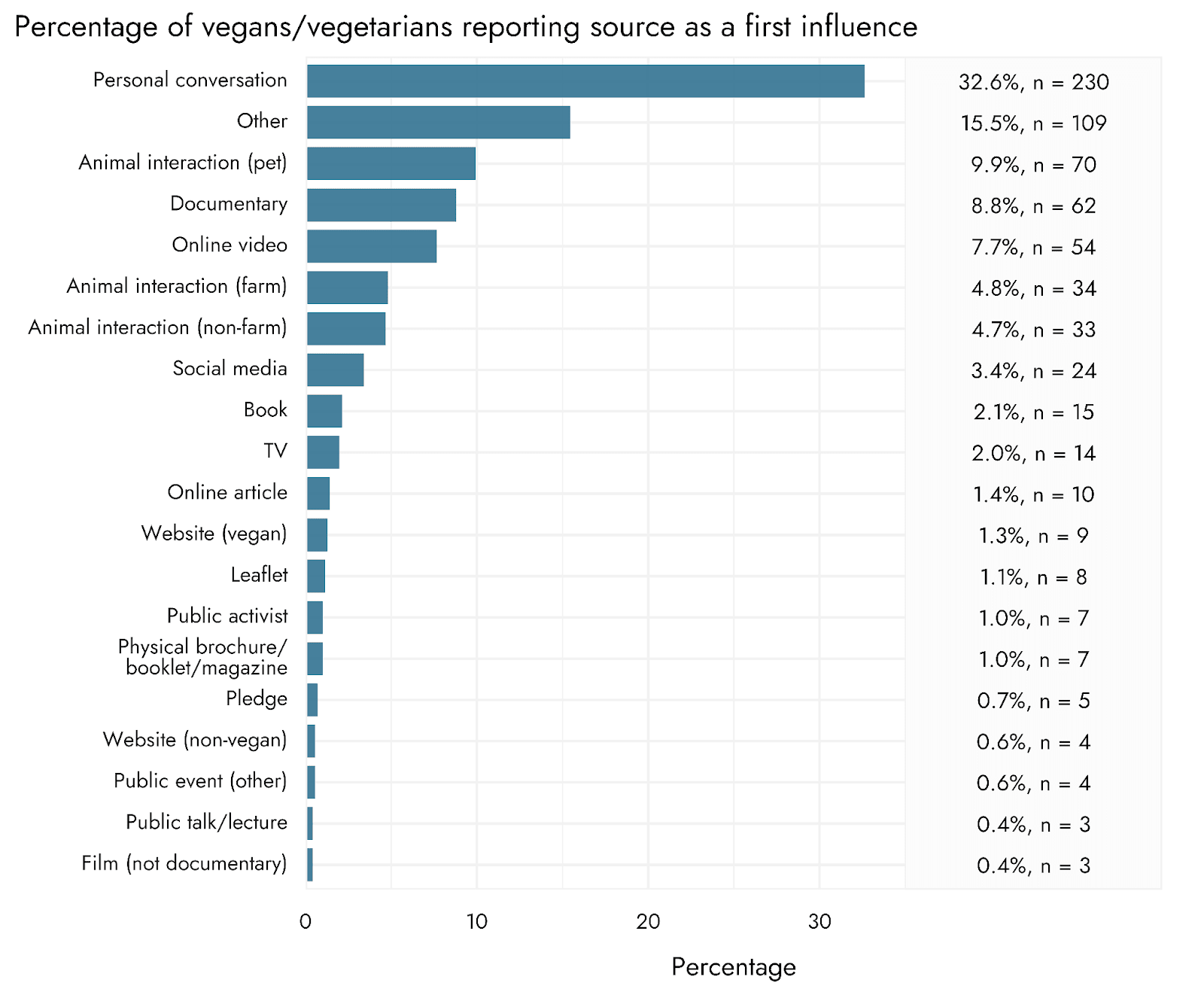

When we asked current vegetarians/vegans what influenced them to adopt their diet, personal conversations (primarily with friends/family) were among the top influences.

So your surprise/expectation seems reasonable! Of course, I don't know whether it's actually surprising, since presumably whether anyone actually converts depends on lots of other features of a given social network (do your networks contain a lot of people who were already vegetarian/vegan?).

My previous take on writing to Politicians got numbers, so I figured I'd post the email I send below.

I am going to make some updates, but this is the latest version:

---

Hi [Politician]

My name is Yanni Kyriacos, I live in Coogee, just down the road from your electorate.

If you're up for it, I'd like to meet to discuss the risks posed by AI. In addition to my day job building startups, I do community / movement building in the AI Safety / AI existential risk space. You can learn more about AI Safety ANZ by joining our Facebook group here or the PauseAI movement here. I am also a signatory of Australians for AI Safety - a group that has called for the Australian government to set up an AI Commission (or similar body).

Recently I worked with Australian AI experts (such as Good Ancestors Policy) in making a submission to the recent safe and responsible AI consultation process. In the letter, we called on the government to acknowledge the potential catastrophic and existential risks from artificial intelligence. More on that can be found here.

There are many immediate risks from already existing AI systems like ChatGPT or Midjourney, such as disinformation or improper implementation in various ...

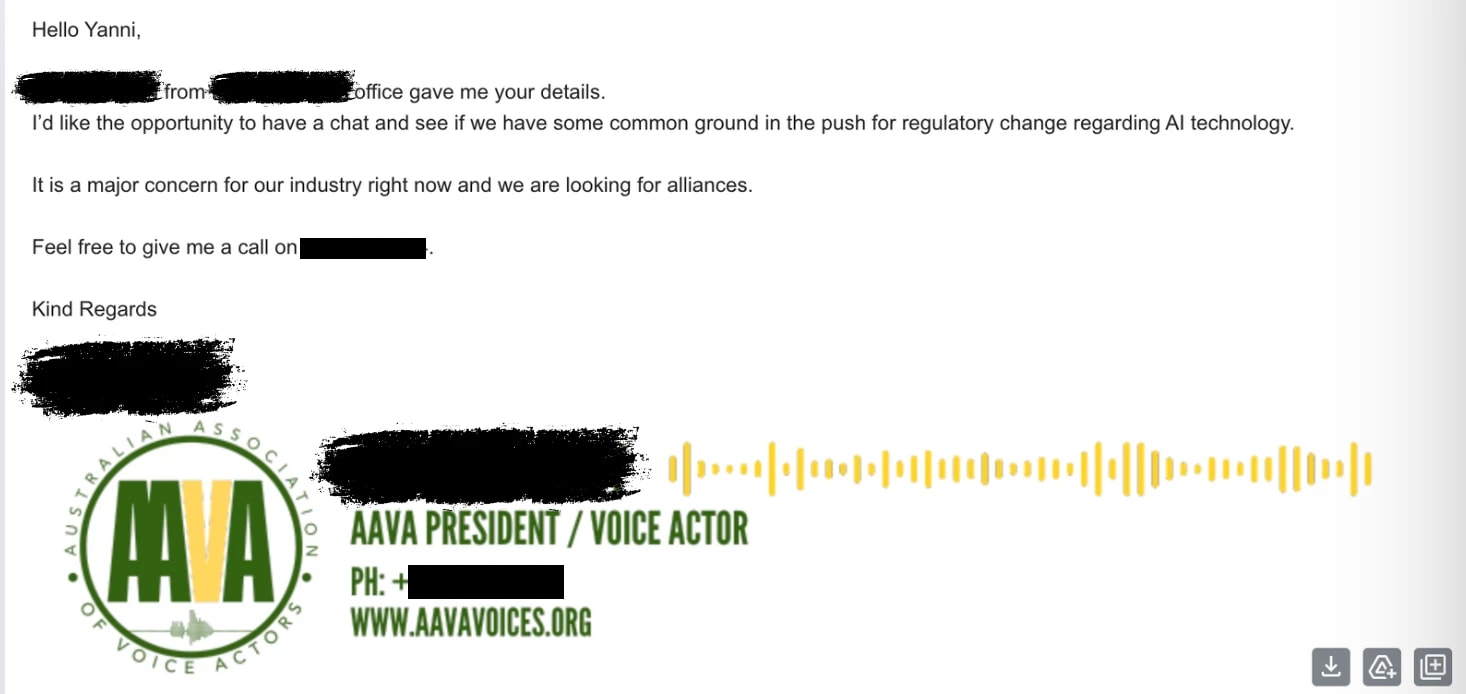

[PHOTO] I sent 19 emails to politicians, had 4 meetings, and now I get emails like this. There is SO MUCH low hanging fruit in just doing this for 30 minutes a day (I would do it but my LTFF funding does not cover this). Someone should do this!

You should probably also blank their job title (which would make it easy to work out who they are) and their phone number (!)

Larry Ellison, who will invest tens of billions in Stargate said uberveillance via AGI will be great because then police and the populace would always have to be on their best behaviour. It is best to assume the people pushing 8 billion of us into the singularity have psychopathy (or similar disorders). This matters because we need to know who we're going up against: there is no rationalising with these people. They aren't counting the QALYs!

Footage of Larry’s point of view starts around 12.00 on Matt Wolf’s video

RIP to any posts on anything earnest over the last 48 hours. Maybe in future we don't tag anything April Fools and it is otherwise a complete blackout on serious posts 😅

Something(!) needs to be done. Otherwise, it's just a mess for clarity and the communication of ideas.

The Australian Federal Government is setting up an "AI Advisory Body" inside the Department of Industry, Science and Resources.

IMO that means Australia has an AISI in:

9 months (~ 30% confidence)

12 months (~ 50%)

18 months (~ 80%)

Ten months ago I met Australia's Assistant Defence Minister about AI Safety because I sent him one email asking for a meeting. I wrote about that here. In total I sent 21 emails to Politicians and had 4 meetings. AFAICT there is still no organisation with significant funding that does this as their primary activity. AI Safety advocacy is IMO still extremely low hanging fruit. My best theory is EAs don't want to do it / fund it because EAs are drawn to spreadsheets and google docs (it isn't their comparative advantage). Hammers like nails etc.

I think if you work in AI Safety (or want to) it is very important to be extremely skeptical of your motivations for working in the space. This applies to being skeptical of interventions within AI Safety as well.

For example, EAs (like most people!) are motivated to do things they're (1) good at (2) see as high status (i.e. people very quietly ask themselves 'would someone who I perceive as high status approve of my belief or action?'). Based on this, I am worried that many EAs (1) find protesting AI labs (and advocating for a Pause in general) cringy and/or awkward and (2) ignore the potential impact of organisations such as PauseAI.

We might all literally die soon because of misaligned AI, so what I'm recommending is that anyone seriously considering AI Safety as a career path spends a lot of time on the question of 'what is really motivating me here?'

One axis where Capabilities and Safety people pull apart the most, with high consequences, is "asking for forgiveness instead of permission."

1) Safety people need to get out there and start making stuff without their high prestige ally nodding first

2) Capabilities people need to consider more seriously that they're building something many people simply do not want

I have written 7 emails to 7 Politicians aiming to meet them to discuss AI Safety, and already have 2 meetings.

Normally, I'd put this kind of post on twitter, but I'm not on twitter, so it is here instead.

I just want people to know that if they're worried about AI Safety, believe more government engagement is a good thing and can hold a decent conversation (i.e. you understand the issue and are a good verbal/written communicator), then this could be an underrated path to high impact.

Another thing that is great about it is you can choose how many emails to send and how many meetings to have. So it can be done on the side of a "day job".

Is anyone in the AI Governance-Comms space working on what public outreach should look like if lots of jobs start getting automated in < 3 years?

I point to Travel Agents a lot not to pick on them, but because they're salient and there are lots of them. I think there is a reasonable chance that in 3 years that industry loses 50% of its roughly 3 million workers globally.

People are going to start freaking out about this. Which means we're in "December 2019" all over again, and we all remember how bad Government Comms were during COVID.

Now is the time to start ...

AI Safety Monthly Meetup - Brief Impact Analysis

For the past 8 months, we've (AIS ANZ) been running consistent community meetups across 5 cities (Sydney, Melbourne, Brisbane, Wellington and Canberra). Each meetup averages about 10 attendees with about a 50% new participant rate, driven primarily through LinkedIn and email outreach. I estimate we're driving unique AI Safety related connections for around $6 each.

Volunteer Meetup Coordinators organise the bookings, pay for the Food & Beverage (I reimburse them after the fact) and greet attendees. This ini...

Solving the AGI alignment problem demands a herculean level of ambition, far beyond what we're currently bringing to bear. Dear Reader, grab a pen or open a google doc right now and answer these questions:

1) What would you do right now if you became 5x more ambitious?

2) If you believe we all might die soon, why aren't you doing the ambitious thing?

[GIF] A feature I'd love on the forum: while posts are read back to you, the part of the text that is being read is highlighted. This exists on Naturalreaders.com and I would love to see it here (great for people who have wandering minds like me)

I think acting on the margins is still very underrated. E.g. I think 5x the amount of advocacy for a Pause on capabilities development of frontier AI models would be great. I also think in 12 months' time it would be fine for me to reevaluate this take and say something like 'ok that's enough Pause advocacy'.

Basically, you shouldn't feel 'locked in' to any view. And if you're starting to feel like you're part of a tribe, then that could be a bad sign you've been psychographically locked in.

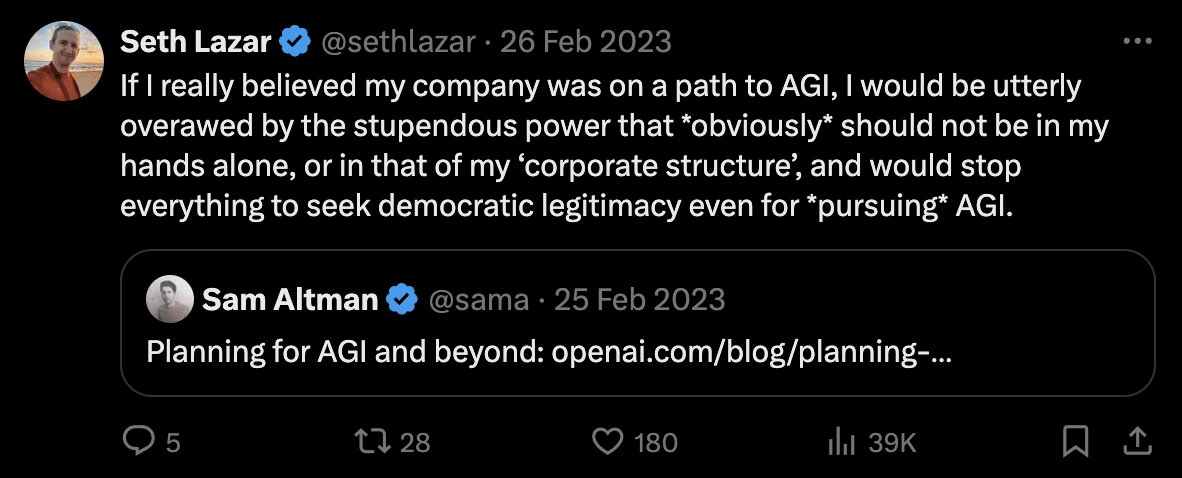

I believe we should worry about the pathology of people like Sam Altman, mostly because of what they're trying to achieve, without the consent of all governments and their respective populace. I found a tweet that summarises my own view better than I can. The pursuit of AGI isn't normal or acceptable and we should call it out as such.

Yesterday Greg Sadler and I met with the President of the Australian Association of Voice Actors. Like us, they've been lobbying for more and better AI regulation from government. I was surprised how much overlap we had in concerns and potential solutions:

1. Transparency and explainability of AI model data use (concern)

2. Importance of interpretability (solution)

3. Mis/dis information from deepfakes (concern)

4. Lack of liability for the creators of AI if any harms eventuate (concern + solution)

5. Unemployment without safety nets for Australians (concern)

6. Rate of capabilities development (concern)

They may even support the creation of an AI Safety Institute in Australia. Don't underestimate who could be allies moving forward!

NotebookLM is basically magic. Just take whatever Forum post you can't be bothered reading but know you should and use NotebookLM to convert it into a podcast.

It seems reasonable that in 6 - 12 months there will be a button inside each Forum post that converts said post into a podcast (i.e. you won't need to visit NotebookLM to do it).

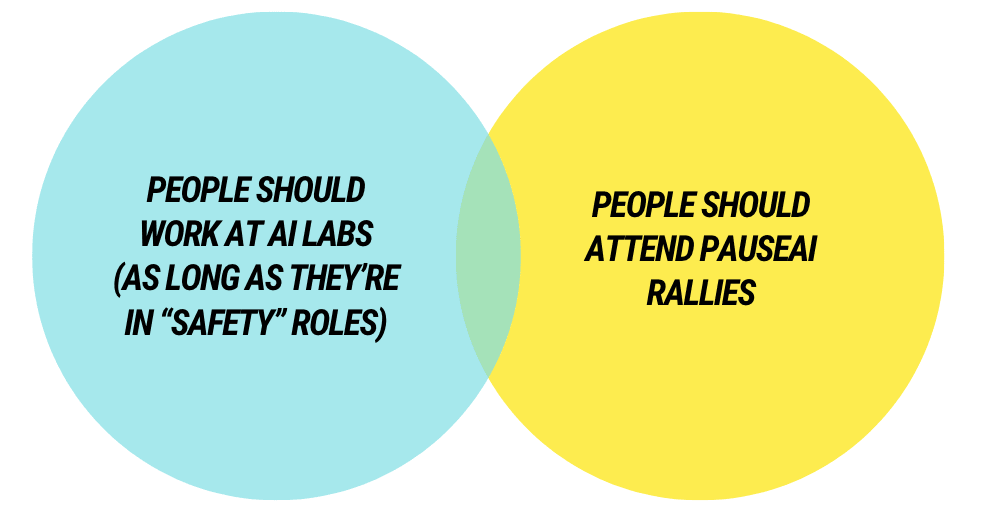

Remember: EA institutions actively push talented people into the companies making the world changing tech the public have said THEY DON'T WANT. This is where the next big EA PR crisis will come from (50%). Except this time it won’t just be the tech bubble.

The general public wants frontier AI models regulated and there don't seem to be grassroots-focussed orgs attempting to capture and funnel this energy into influencing politicians. E.g. via this kind of activity. This seems like massively low hanging fruit. An example of an organisation that does this (but for GH&W) is Results Australia. Someone should set up such an org.

So I did a quick check today - I've sent 19 emails to politicians about AI Safety / x-risk and received 4 meetings. They've all had a really good vibe, and I've managed to get each of them to commit to something small (e.g. email XYZ person about setting up an AI Safety Institute). I'm pretty happy with the hit rate (4/19). I might do another forum quick take once I've sent 50.

I expect (~ 75%) that the decision to "funnel" EAs into jobs at AI labs will become a contentious community issue in the next year. I think that over time more people will think it is a bad idea. This may have PR and funding consequences too.

Possibly a high effort low reward suggestion for the forum team but I’d love (with a single click) to be able to listen to forum posts as a podcast via Google’s NotebookLM. I think this could increase my content consumption of long form posts by about 2x.

I often see EA orgs looking to hire people to do Z, where applicants don't necessarily need experience doing Z to be successful.

E.g. instead of saying "must have minimum 4 years as an Operations Manager", they say "we are hiring for an Operations Manager. But you don't need experience as an Operations Manager, as long as you have competencies/skills (insert list) A - F."

This reminds me of when Bloomberg spent over $10M training a GPT-3.5 class AI on their own financial data, only to find that GPT-4 beat it on almost all finance tasks.

They thought proprietary ...

I beta tested a new movement building format last night: online networking. It seems to have legs.

V quick theory of change:

> problem it solves: not enough people in AIS across Australia (especially) and New Zealand are meeting each other (this is bad for the movement and people's impact).

> we need to brute force serendipity to create collabs.

> this initiative has v low cost

quantitative results:

> I purposefully didn't market it hard because it was a beta. I literally got more people than I hoped for

> 22 RSVPs and 18 attendees

> this s...

A piece of career advice I've given a few times recently to people in AI Safety, which I thought worth repeating here, is that AI Safety is so nascent a field that the following strategy could be worth pursuing:

1. Write your own job description (whatever it is that you're good at / brings joy into your life).

2. Find organisations that you think need that job but don't yet have it. This role should solve a problem they either don't know they have or haven't figured out how to solve.

3. Find the key decision maker and email them. Explain the (their) pro...

If transformative AI is defined by its societal impact rather than its technical capabilities (i.e. TAI as process not a technology), we already have what is needed. The real question isn't about waiting for GPT-X or capability Y - it's about imagining what happens when current AI is deployed 1000x more widely in just a few years. This presents EXTREMELY different problems to solve from a governance and advocacy perspective.

E.g. 1: compute governance might no longer be a good intervention

E.g. 2: "Pause" can't just be about pausing model development. It should also be about pausing implementation across use cases

It breaks my heart when I see eulogy posts on the forum. And while I greatly appreciate people going to the effort of writing them (while presumably experiencing grief), it still doesn't feel like enough. We're talking about people that dedicated their lives to doing good, and all they get is a post. I don't have a suggestion to address this 'problem', and some may even feel that a post is enough, but I don't. Maybe there is no good answer and death just sucks. I dunno.

Something bouncing around my head recently ... I think I agree with the notion that "you can't solve a problem at the level it was created".

A key point here is the difference between "solving" a problem and "minimising its harm".

- Solving a problem = engaging with a problem by going up a level from the one at which it was created

- Minimising its harm = trying to solve it at the level it was created

Why is this important? Because I think EA and AI Safety have historically focussed on (and have their respective strengths in) harm-minimisation.

This applies obviously the micro. ...

I am 90% sure that most AI Safety talent aren't thinking hard enough about Neglectedness. The industry is so nascent that you could look at 10 analogous industries, see what processes or institutions are valuable and missing and build an organisation around the highest impact one.

The highest impact job ≠ the highest impact opportunity for you!

AI Safety (in the broadest possible sense, i.e. including ethics & bias) is going to be taken very seriously soon by Government decision makers in many countries. But without high quality talent staying in their home countries (i.e. not moving to UK or US), there is a reasonable chance that x/c-risk won’t be considered problems worth trying to solve. X/c-risk sympathisers need seats at the table. IMO local AIS movement builders should be thinking hard about how to either keep talent local (if they're experiencing brain drain) OR increase the amount of local talent coming into x/c-risk Safety, such that outgoing talent leakage isn't a problem.

What is your best guess of the overall impact of 80k interviewing AI labs on their podcast + listing AI lab vacant roles?

Poll: https://www.guidedtrack.com/programs/qj0ykwn/run

Three million people are employed by the travel (agent) industry worldwide. I am struggling to see how we don't lose 80%+ of those jobs to AI Agents in 3 years (this is ofc just one example). This is going to be an extremely painful process for a lot of people.

I'm not sure it's going to feel like a zoom call with a colleague any time soon. That's a pretty high bar IMO that we ain't anywhere near yet. Many steps aren't there yet, including:

(I'm probably misrepresenting a couple of these due to lack of expertise but something like...)

1) Video quality (especially being rendered real time)

2) Almost insta-replying

3) Facial warmth and expressions

4) LLM sounding exactly like a real person (this one might be closest)

5) Reduction in processing power required for this to be a norm. Having thousands of these going simultaneously is going to need a lot. (again less important)

I would bet against Avatars being this high fidelity in 3 years and in common use because I think LLM progress is tailing off and there are multiple problems to be solved to get there - but maybe I'm a troglodyte...

This is an extremely "EA" request from me but I feel like we need a word for people (i.e. me) who are Vegans but will eat animal products if they're about to be thrown out. OpportuVegan? UtilaVegan?

I think https://www.wakingup.com/ should be considered for effective organisation status. It donates 10% of revenue to the most effective causes and I think reaching nondual states of awakening could be one of the most effective ways for people in rich countries to improve their wellbeing.

If I found a place that raised cows that had predictably net positive lives, what would be the harm in eating beef from this farm?

I've been ostrovegan for ~ 7 years but open to changing my mind with new information.

Imagine running 100 simulations of humanity's story. In every single one, the same pattern emerges: The moment we choose agriculture over hunting and gathering, we unknowingly start a countdown to our own extinction through AGI. If this were true, I think it suggests that our best chance at long-term survival is to stay as hunter-gatherers - and that what we call 'progress' is actually a kind of cosmic trap.

Two jobs in AI Safety Advocacy that AFAICT don't exist, but should and probably will very soon. Will EAs be the first to create them though? There is a strong first mover advantage waiting for someone -

1. Volunteer Coordinator - there will soon be a groundswell from the general population wanting to have a positive impact in AI. Most won't know how to. A volunteer manager will help capture and direct their efforts positively, for example, by having them write emails to politicians

2. Partnerships Manager - the President of the Voice Actors guild reached out...

More thoughts on "80,000 hours should remove OpenAI from the Job Board"

- - - -

A broadly robust heuristic I walk around with in my head is "the more targeted the strategy, the more likely it will miss the target".

Its origin is from when I worked in advertising agencies, where TV ads for car brands all looked the same because they needed to reach millions of people, while email campaigns were highly personalised.

The thing is, if you get hit with a TV ad for a car, worst case scenario it can't have a strongly negative effect because of its generic...

A potential failure mode of 80k recommending EAs work at AI labs:

- 80k promotes a safety related job within a leading AI lab.

- 80k's audience (purposefully) skews to high prospect candidates (HPC) - smarter, richer, better connected vs average.

- HPC applies for and gets safety role within AI lab.

- HPC stays at the lab but moves roles.

- Now we have a smart, rich, well connected person no longer in safety but in capabilities.

I think this is sufficiently important / likely that 80k should consider tracking these people over time to see if this is a real issue.

I always get downvoted when I suggest that (1) if you have short timelines and (2) you decide to work at a Lab then (3) people might hate you soon and your employment prospects could be damaged.

What is something obvious I'm missing here?

One thing I won't find convincing is someone pointing at the Finance industry post GFC as a comparison.

I believe the scale of unemployment could be much higher. E.g. 5% ->15% unemployment in 3 years.

What is your "Pens Down" moment?

"Pens Down" to mean 'Artificial Super Intelligence in my opinion is close enough that it no longer makes sense to work on whatever else I'm currently working on, because we're about to undergo radical amounts of change very soon/quickly'.

For me, it is probably when we have something as powerful as GPT-4 except it is agentic and costs less than $100 / month. So, that looks like a digital personal assistant that can execute an instruction like "have a TV delivered for me by X date, under Y price and organise installation and wall mounting."

This is obviously a question mainly for people who don't work full time on AI Safety.

I'm pretty confident that a majority of the population will soon have very negative attitudes towards big AI labs. I'm extremely unsure about what impact this will have on the AI Safety and EA communities (because we work with those labs in all sorts of ways). I think this could increase the likelihood of "Ethics" advocates becoming much more popular, but I don't know if this necessarily increases catastrophic or existential risks.

The Greg / Vasco bet reminded me of something: I went to buy a ceiling fan with a light in it recently. There was one on sale that happened to also tick all my boxes, joy! But the salesperson warned me "the light in this fan can't be replaced and only has 10,000 hours in it. After that you'll need a new fan. So you might not want to buy this one." I chuckled internally and bought two of them, one for each room.

Has anyone seen an analysis that takes seriously the idea that people should eat some fruits, vegetables and legumes over others based on how much animal suffering they each cause?

I.e. don't eat X fruit, eat Y one instead, because X fruit is [e.g.] harvested in Z way, which kills more [insert plausibly sentient creature].

A judgement I'm attached to is that a person is either extremely confused or callous if they work in capabilities at a big lab. Is there some nuance I'm missing here?

I think the average EA worries too much about negative PR related to EA. I think this is a shame because EA didn't get to where it is now because it concerned itself with PR. It got here through good-old-fashioned hard work (and good thinking ofc).

Two examples:

1. FTX.

2. OpenAI board drama.

On the whole, I think there was way too much time spent thinking and talking about these massive outliers and it would have been better if 95%+ of EAs put their heads down and did what they do best - get back to work.

I think it is good to discuss and take action...

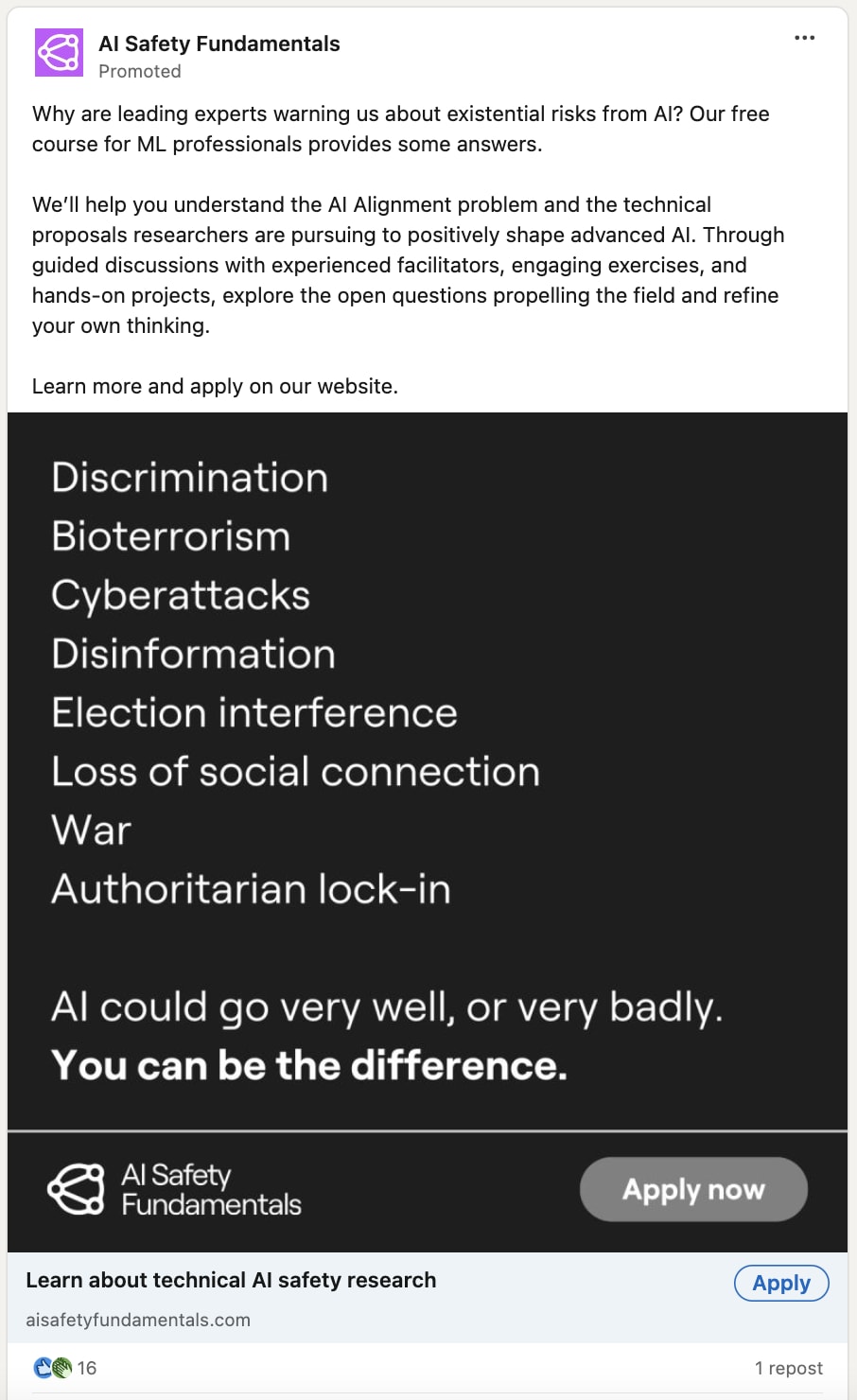

I really like this ad strategy from BlueDot. 5 stars.

90% of the message is still x / c-risk focussed, but by including "discrimination" and "loss of social connection" they're clearly trying to either (or both);

- create a big tent

- nudge people that are in "ethics" but sympathetic to x / c-risk into x / c-risk

This feels like misleading advertising to me, particularly "Election interference" and "Loss of social connection". Unless BlueDot is running a very different curriculum now and has a very different understanding of what alignment is. These bait-and-switch tactics might not be a good way to create a "big tent".

I expect that over the next couple of years GenAI products will continue to improve, but concerns about AI risk won't. For some reason we can't "feel" the improvements. Then (e.g. around 2026) we will have pretty capable digital agents and there will be a surge of concern (similar to when ChatGPT was released, maybe bigger), and then possibly another perceived (but not real) plateau.

Even though I've been in the AI Safety space for ~ 2 years, I can't shake the feeling that every living thing dying painlessly in its sleep overnight (due to AI killing us) isn't as bad as (i.e. is 'better' than) hundreds of millions of people living in poverty and/or hundreds of billions of animals being tortured.

This makes me suspicious of my motivations. I think I do the work partly because I kinda feel the loss of future generations, but mainly because AI Safety still feels so neglected (and my counterfactual impact here is larger).

I don't think...

I'm pleased to announce the launch of a brand new Facebook group dedicated to AI Safety in Wellington: AI Safety Wellington (AISW). This is your local hub for connecting with others passionate about ensuring a safe and beneficial future with artificial intelligence / reducing x-risk. To kick things off, we're hosting a super casual meetup where you can:

- Meet & Learn: Connect with Wellington's AI Safety community.

- Chat & Collaborate: Discuss career paths, upcoming events, and

I’d like to quickly skill up on ‘working with people that have a kind of neurodivergence’.

This means;

- understanding their unique challenges

- how I can help them get the best from/for themselves

- but I don’t want to sink more than two hours into this

Can anyone recommend some online content for me please?

What would be the pros and cons of adding a semi-hidden-but-permanent Hot Takes section to the Forum? All of my takes are Hot and due to time constraints I would otherwise not post at all. Some would argue that someone like me should not post Hot Takes at all. Anyway, in true lazy fashion here is ChatGPT on the pros and cons:

Pros:

- Encourages diverse perspectives and stimulates debate.

- Can attract more engagement and interest from users.

- Provides a platform for expressing unconventional or controversial ideas.

- Fosters a culture of intellectual curiosity and ope

The catchphrase I walk around with in my head regarding the optimal strategy for AI Safety is something like: Creating Superintelligent Artificial Agents* (SAA) without a worldwide referendum is ethically unjustifiable. Until a consensus is reached on whether to bring into existence such technology, a global moratorium is required (*we already have AGI).

I thought it might be useful to spell that out.

I think it would be good if lots of EAs answered this twitter poll, so we could get a better sense for the community's views on the topic of Enlightenment / Awakening: https://twitter.com/SpencrGreenberg/status/1782525718586413085?ref_src=twsrc%5Egoogle%7Ctwcamp%5Eserp%7Ctwgr%5Etweet

One of the seminal texts in marketing science is The Long and the Short of It by Field and Binet. They argue that for maximum effectiveness, marketing should aim to create two distinct work streams and results: immediate sales and longer-term sales.

They argue the tactics that go into either are distinct (e.g. short term = create a sense of urgency, long term = match brand associations with category associations).

This feels like a good analogy for AI Safety Advocacy / Governance - keep talking about short term things people can buy now (in an Au...

I have heard rumours that an AI Safety documentary is being made. Separate to this, a good friend of mine is also seriously considering making one, but he isn't "in" AI Safety. If you know who this first group is and can put me in touch with them, it might be worth getting across each other's plans.

Not sure if this type of concern has reached the meta yet, but if someone approached me asking for career advice, tossing up whether to apply for a job at a big AI lab, I would let them know that it could negatively affect their career prospects down the track because so many people now perceive such a move as either morally wrong or just plain wrong-headed. And those perceptions might only increase over time. I am not making a claim here beyond this should be a career advice consideration.

Something I'm confused about: what is the threshold that needs meeting for the majority of people in the EA community to say something like "it would be better if EAs didn't work at OpenAI"?

Imagining the following hypothetical scenarios over 2024/25, I can't predict confidently whether they'd individually cause that response within EA:

- Ten-fifteen more OpenAI staff quit for varied and unclear reasons. No public info is gained outside of rumours

- There is another board shakeup because senior leaders seem worried about Altman. Altman stays on

- Superalignment team

What are some historical examples of a group (like AI Safety folk) getting something incredibly wrong about an incoming Technology? Bonus question: what led to that group getting it so wrong? Maybe there is something to learn here.

In the 1990s and 2000s, many people such as Eric Drexler were extremely worried about nanotechnology and viewed it as an existential threat through the "gray goo" scenario. Yudkowsky predicted Drexler-style nanotech would occur by 2010, using very similar language to what he is currently saying about AGI.

It turned out they were all being absurdly overoptimistic about how soon the technology would arrive, and the whole Drexlerite nanotech project flamed out by the end of the 2000s and has pretty much not progressed since. I think a similar dynamic playing out with AGI is less likely, but still very plausible.

I recently discovered the idea of driving all blames into oneself, which immediately resonated with me. It is relatively hardcore; the kind of thing that would turn David Goggins into a Buddhist.

Gemini did a good job of summarising it:

This quote by Pema Chödrön, a renowned Buddhist teacher, represents a core principle in some Buddhist traditions, particularly within Tibetan Buddhism. It's called "taking full responsibility" or "taking self-blame" and can be a bit challenging to understand at first. Here's a breakdown:

What it Doesn't Mean:

- Self-Flagellation:

Feels kinda obnoxious to write a quick take along the lines of "I'm thinking about writing a post on X, does anyone actually give a sh*t? Otherwise I won't write it."

I just wanted to check, since I can't place my finger on why it feels obnoxious but it certainly does.

Is there a Slack group or something similar for Founders of early-stage (EA) startups?

How impactful could non-dual meditation be for improving wellbeing?

Are there any EAs out there who have practiced non-dual meditation? Specifically, anything from the Dzogchen or Mahamudra traditions of Tibetan Buddhism?

More secular teachers would be Loch Kelly, Sam Harris, Michael Taft.

This has been a life changing experience for me and I'm wondering whether it could be a blind spot for EA.

I'd also just love to chat with someone else who has experienced non-duality / awakening through this form of meditation :)

I didn't want to read all of @LintzA's post "The Game Board has been Flipped" and all 43+ comments, so I copy/pasted the entire webpage into Claude with the following prompt: "Please give me a summary of the authors argument (dot points, explained simply) and then give me a summary of the kinds of support and push back they got (dot points, explained simply, thematised, giving me a sense of the concentration/popularity of themes in the push back)"

Below is the result (the Forum team might want to consider how posts with large numbers of comments can ...

I think > 40% of AI Safety resources should be going into making Federal Governments take seriously the possibility of an intelligence explosion in the next 3 years due to proliferation of digital agents.

I spent some time with Claude this morning trying to figure out why I find it cringe calling myself an EA (I never call myself an EA, even though many in EA would call me an EA).

The reason: calling myself "EA" feels cringe because it's inherently a movement/community label - it always carries that social identity baggage with it, even when I'm just trying to describe my personal philosophical views.

I am happy to describe myself as a Buddhist or Utilitarian because I don't think it does those things (at least, not within the broader community context I find myself in - Western, Online, Democratic, Australia, etc).

[IMAGE] there is something about the lack of overlap between these two audiences that makes me uneasy. WYD?

Prediction: In 6-12 months people are going to start leaving DeepMind and Anthropic for similar sounding reasons to those currently leaving OpenAI (50% likelihood).

> Surface level read of what is happening at OpenAI; employees are uncomfortable with specific safety policies.

> Deeper, more transferable, harder to solve problem; no person that is sufficiently well-meaning and close enough to the coal face at Big Labs can ever be reassured they're doing the right thing continuing to work for a company whose mission is to build AGI.

Basically, this is less about "OpenAI is bad" and more "Making AGI is bad".

Very quick thoughts on setting time aside for strategy, planning and implementation, since I'm into my 4th week of strategy development and experiencing intrusive thoughts about needing to hurry up on implementation;

- I have a 52 week LTFF grant to do movement building in Australia (AI Safety)

- I have set aside 4.5 weeks for research (interviews + landscape review + maybe survey) and strategy development (segmentation, targeting, positioning),

- Then 1.5 weeks for planning (content, events, educational programs), during which I will get feedback from others on th

What would stop you from paying for an LLM? Take an extreme case; Sam Altman turns around tomorrow and says "We're racing to AGI, I'm not going to worry about Safety at all."

Would that stop you from throwing him $20 a month?

(I currently pay for Gemini)

Help clear something up for me: I am extremely confused (theoretically) how we can simultaneously have:

1. An Artificial Superintelligence

2. It be controlled by humans (therefore creating misuse or concentration of power issues)

My intuition is that once it reaches a particular level of power it will be uncontrollable. Unless people are saying that we can have models 100x more powerful than GPT-4 without them having any agency??

I don't think we need to explicitly alert the reader when we've received help from an LLM to write something (even if it wrote the bulk of the post). That's it, my quickest ever Quick Take.

There have been multiple occasions where I've copy and pasted email threads into an LLM and asked it things like:

- What is X person saying?

- What are the cruxes in this conversation?

- Summarise this conversation

- What are the key takeaways?

- What views are being missed from this conversation?

I really want an email plugin that basically brute forces rationality INTO email conversations.

Tangentially - I wonder if LLMs can reliably convert people's claims into a % through sentiment analysis? This would be useful for Forecasters I believe (and rationality in general).

How bad does factory farming need to get before we WANT TO accelerate AI capabilities?

This article suggests that the DAILY "total painfulness of fish suffering is equivalent to the painfulness of one hundred fifty million human deaths" and that most of these deaths are via "slow suffocation, crushing, or live disemboweling".

Let's assume the creation of AGI had a >80% probability of leading to two outcomes for non-human animals: extinction or liberation.

How might we do the math on this?

Is there an Animal Advocacy Careers (AAC) for AI Safety? If not, what is the most analogous org?

Having a nondual Awakening was the second most important thing to happen to me (after my daughter's birth). It has led to incredibly low levels of suffering and incredibly high levels of wellbeing. I write this because I think it is still incredibly under-appreciated and attainable for most people (maybe literally anyone).

There are traditions (Dzogchen, Zen, modern nonduality) where this shift in consciousness can be experienced simply by hearing the right combination of words and insights. As our understanding and tools for communicating these insights evolve, including through advances in AI, I believe this transformative experience could become accessible to many more people.

At some point over the next 5 years virtually every person reading this will become a Pause advocate, the only question is whether that occurs now, in 2025, 2026, 2027, 2028 or 2029.

Flaming hot take: if you think Digital Sentience should be taken seriously but not Human Awakening / Enlightenment, then EA culture might have its hooks in a bit deep.

One axis where Capabilities and Safety people pull apart the most, with high consequences, is "asking for forgiveness instead of permission."

1) Safety people need to get out there and start making stuff without their high-prestige allies nodding first

2) Capabilities people need to consider more seriously that they're building something many people simply do not want