I am organizing an EA university group. I am wondering if, in general, putting this on my resume would be good or bad for getting a non-EA job.

Absolutely do so! In the eyes of the vast majority of employers, organizing a university group centered around charity shows character and energy, highly positive qualities in an employee.

I agree completely! However, I feel obliged to point out that some EAs I know intentionally play down their EA associations because they think it will harm their careers. Often, these people are thinking of working in government.

I weakly think this is a mistake, for two reasons. First, as Mathias said, EA appears to be generally seen as a positive thing (similar to climate change action, according to this study). Second, I think Ord is right when he says we could do with more earnestness and sincerity in EA.

Alix, ex-co-director at EA Switzerland, wrote up some interesting thoughts on this general subject here.

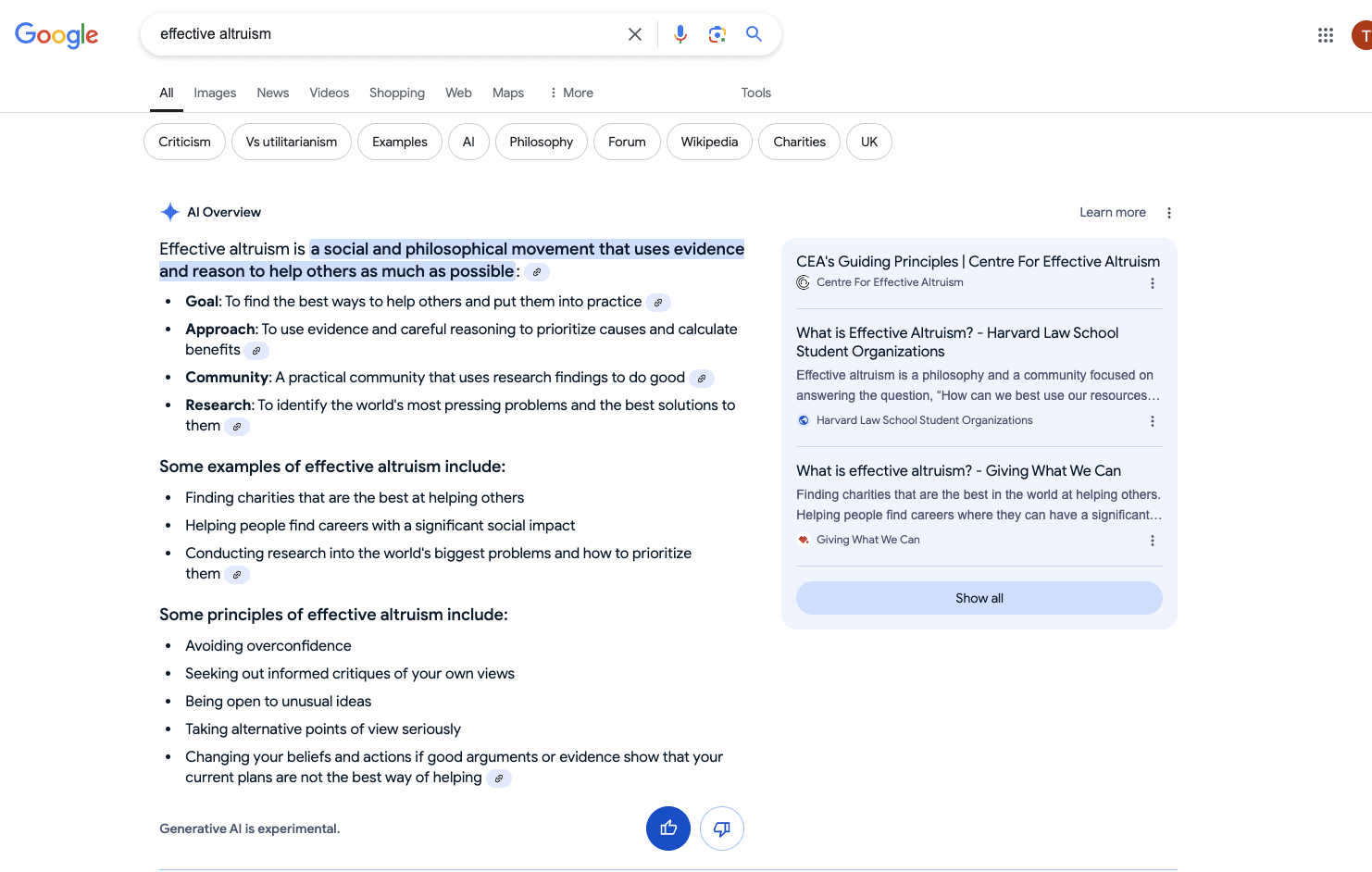

I'd also add that basically no one knows what EA is, and currently, when you do a quick google, you get a good impression (criticism tab aside):

(Interested to know if others get the same AI summary; I'm not sure whether it regenerates for each user or only for each search term.)

I'll further add that most people aren't going to bother doing the quick Google search. They're going to see "organised university society" and whatever two-sentence summary you've written about it being charity-related and see it as a positive, although not necessarily any more positive than organising the RAG week charity or a sports team.

The bigger question is whether and how you bring it up when answering interview questions about your life experiences.

(FWIW my ad-blocked Google results for Effective Altruism are this website, the Wikipedia link and a BBC article about SBF)

If you are trying to get a US policy job, then probably not, but it also depends on which area of US policy.