This is Part 2.2 (third post) in a series. The previous post is here.

I’ve heard a lot about “nuclear close calls.” Stanislav Petrov was, at one point, one human and one uncomfortable decision away from initiating an all-out nuclear exchange between the US and the USSR. Then that happened several dozen more times. As described in Part 1, there were quite a few large state biological weapons programs after WWII. Was a similar situation unfolding there, behind the scenes like the nuclear near-misses? Were any biological apocalypses-that-weren’t quietly hidden in the pages of history? What were the actual usage plans for biological weapons, and how close did any of them come to deployment?

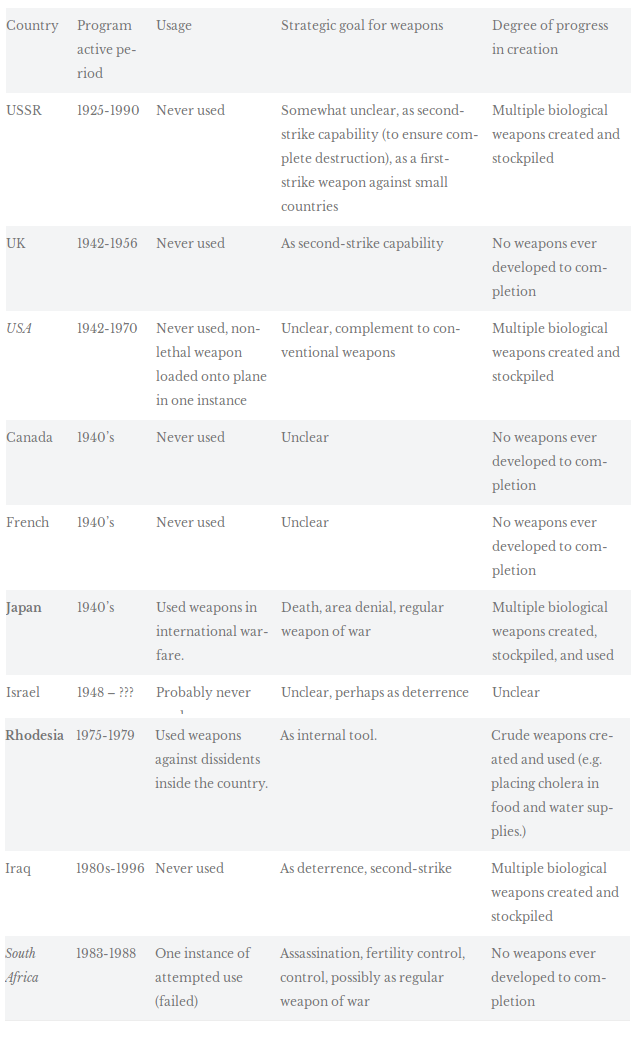

First, note that there are a few examples of state use of BW in modern times - the most significant being the Japanese BW program's use of lethal weapons against Chinese civilians in the 1940’s. More recently, the Rhodesian BW program used weapons against its own citizens on small scales in the 1970’s, and South African program operatives attempted to contaminate a refugee camp’s water supply with cholera in 1989 (but failed). There are also a few ambiguous incidents, and the possibility of unnoticed usage. These incidents and possibilities are concerning, but are rare and small in scale.

The claim I assert is that since the 1940’s, there have been no instances of the sort of large-scale usage of BW in international warfare that the imagination conjures upon hearing the phrase “biological weapons.” My research suggests, more tentatively, that not only were there no such uses, but there were no close calls - that even in major state programs that created and stockpiled weapons in quantity, these weapons were virtually never included in war plans, prepared for usage or, especially, used. The strategies they were intended for were generally vague and retaliatory, responding to offensive uses of biological or nuclear weapons. Looking at planned usage has another benefit: it shows which biological weapons were ever made in mass quantity, or were ever seen to have particular strategic value.

Ways of classifying BW

Note two failure modes that are common in existing literature that tries to extrapolate from past bioweapons plans: first, an overly narrow reference class limited to weapons that were actually used in combat, of which there are very few; second, an overly broad class composed of every pathogen or plan that programs ever experimented with. The latter would include many plans that were impractical or undesirable and eventually abandoned. No literature thus far has focused on the middle ground between these extremes, or analyzed the strategic goals of such usages in depth.

Addressing BW under a framework of planned usage is rare. Categorizations of weapon usage are also uncommon. The most common approach sorts BW strategies by theater of war – strategic, tactical, and operational uses (Koblentz 2009, Leitenberg et al 2012). This categorization system is broadly applicable and not agent-based, and thus may apply to future weapons with novel agents as well as to past programs. On the other hand, three abstract categories are not enough to be predictive, and many usages could plausibly be described as affecting multiple theaters. Any new categorization system should factor in historical strategies and motivations as a reference class to understand possible future uses.

No concrete intentions

To illustrate why considering usage plans is valuable, note that many sophisticated BW programs did not in fact make war plans that incorporated their weapons. In many cases, this is because early conceptual research was time-consuming and unsuccessful, and the results were considered inferior to conventional or nuclear weapons. The UK, South African, Canadian, and French programs never resulted in mass-production of a finalized weapon, much less strategies for such a weapon’s use (Balmer 1997, Wheelis et al 2006). The South African and UK programs both explored unusual intended uses (assassinations and fertility control (Purkitt and Burgess 2002), and area-denial (Wheelis et al 2006), respectively), but no weapons were created which would fulfill them. Even the USSR program, which weaponized more agents than any other BW program in history (Leitenberg et al 2012), failed to make concrete war plans involving BW (Wheelis et al 2006). The broader intents of these programs are still interesting, but clearly the relative importance of various programs changes when considering concrete war plans.

“No first use” of BW

Many state biological weapons programs post-WWII, and their strategies for weapons usage, were heavily intertwined with nuclear policy. The UK BW program, since 1946, was guided by a principle of “no first use” that echoes that of their nuclear program – that they would not use biological weapons unless the UK was first attacked with biological or nuclear weapons (Balmer 1997). This was also the original stated purpose of the US program, although the “no first use” doctrine was officially rescinded in 1956 (Wheelis et al 2006). Findings released in 2004, after the Iraq Survey Group’s investigation of the Iraq BW program, revealed Saddam Hussein’s intent in 1991 to use BW to retaliate against “unconventional” weapon attacks by the US ("Iraq Survey Group" 2004). The USSR was likely also interested in using weapons after a nuclear exchange with the US (Leitenberg et al 2012). In this way, BW join nuclear weapons as a facet of mutually-assured destruction dynamics.

BW have long been considered “the poor man’s nuclear weapon”. Numerous studies and documents have suggested that biological weapons are cheaper per death-caused than nuclear weapons (Koblentz 2004), are relatively simple to construct, and could be developed by less-wealthy states to give them the MAD protection or bargaining capability of a full-fledged nuclear power. This idea has been criticized in several ways – for instance, by Gregory Koblentz, who points out that biological attacks are not reliable in the same way that nuclear attacks are and thus do not fulfil the major goals of deterrence (Koblentz 2009), and by Sonia Ben Ouagrham-Gormley, who observes that biological weapons are in fact significantly more difficult to produce than commonly believed (Ben Ouagrham-Gormley 2014).

Nonetheless, the perception that biological weapons are an alternative to nuclear weapons is widespread, including among powerful figures like Hillary Clinton (Reuters 2011) and Bill Gates (Farmer 2017). In light of this, it is interesting to observe that all three programs for which “no first use” was a primary strategic goal – the UK, US, and Iraq programs – belonged to states that were also nuclear powers or pursuing nuclear weapons. The UK and US BW programs were indeed later abandoned in favor of developing and relying on nuclear weapons programs for defense and deterrence (Balmer 1997).

Non-lethal weapons

The popular image of BW as lethal antipersonnel weapons does not describe the whole of BW development, but certainly describes large swathes of it. The USSR, US, and Iraq programs all mass-produced lethal weapons; for example, anthrax-filled munitions. Of these, it seems that the Iraq program was the only one that actually deployed such weapons. Saddam Hussein was particularly interested in anthrax, not just for its virulence, but for its ability to remain active in soil and potentially cause death over many years.

Despite the prominence of the above, both in actuality and in the public eye, a great deal of biological weapons effort has focused on non-lethal weapons. These may be incapacitating antipersonnel weapons, or weapons with non-human targets such as plants or animals to attack the enemy’s agricultural system. The desiderata distinguishing lethal from non-lethal biological weapons have not been well explored in the literature. It has been suggested that caring for incapacitated soldiers, particularly those who are sick over a long period of time, can be more burdensome on an enemy than simply burying and replacing killed soldiers (Pappas et al 2006). While strongly dependent on the pathogen and expected death rates, this is a practical reason these weapons may be preferred. Inflicting nonlethal or economic damage is also seen as more humane than lethal damage, and perhaps more politically acceptable. Political acceptability was, for instance, part of the internal US motivation for using anti-plant chemical weapons rather than human-targeted weapons in the Vietnam War (Pearson 2006). However, humanitarian or political motivations for developing biological weapons have not been well described or documented in research.

Some of the first modern BW production was for weapons aimed at cattle: during WWII, the US and UK collaborated on producing anthrax-infected cow cakes, meant to be scattered over German fields to damage agriculture. Later, the US program explored agricultural weapons until 1958 (Hay 1999), and two of the twelve weapons finalized by the US program were agricultural – rice blast and wheat stem rust (Wheelis et al 2006). The USSR did not stockpile anti-agriculture weapons, but a variety of them were developed by a sub-program of the BW apparatus code-named Ecology (Koblentz 2009), and production was designed to scale quickly if needed (Alibek 1999). These would not be used in conditions of total war with the US, but in smaller wars with smaller nations (Alibek 1999).

Both the US and the USSR also invested in incapacitating weapons for use against humans. The USSR weaponized the rarely-lethal Brucella sp. and Burkholderia mallei, as well as Venezuelan equine encephalitis virus (VEEV), which causes flu-like symptoms. The US also invested heavily in incapacitating weapons. Four out of ten standardized antipersonnel weapons produced by the US BW program were not intended to be lethal: Brucella suis, Coxiella burnetii and VEEV, as well as the staphylococcus-derived Enterotoxin B (Wheelis et al 2006). In what may have been the closest call to major use of BW since WWII, airplanes were loaded with VEEV-containing weapons during the Cuban missile crisis (Guillemin 2004). This was unauthorized, but nonetheless seems to have come very close to real battlefield usage.

Here's a summary of my findings by program:

(for this table in text rather than an image, see the version on my website.)

References

Alibek, Kenneth. “The Soviet Union’s Anti-Agricultural Biological Weapons.” Annals of the New York Academy of Sciences 894, no. 1 (December 1, 1999): 18–19. https://doi.org/10.1111/j.1749-6632.1999.tb08038.x.

Balmer, Brian. “The drift of biological weapons policy in the UK 1945–1965.” The Journal of Strategic Studies 20, no. 4 (1997): 115-145.

Farmer, Ben. “Bioterrorism Could Kill More People than Nuclear War, Bill Gates to Warn World Leaders.” The Telegraph, February 17, 2017. https://www.telegraph.co.uk/news/2017/02/17/biological-terrorism-could-kill-people-nuclear-attacks-bill/.

Guillemin, Jeanne. Biological weapons: From the invention of state-sponsored programs to contemporary bioterrorism. Columbia University Press, 2004.

Hay, Alastair. “Simulants, Stimulants and Diseases: The Evolution of the United States Biological Warfare Programme, 1945–60.” Medicine, Conflict and Survival 15, no. 3 (July 1, 1999): 198–214. https://doi.org/10.1080/13623699908409459.

“Iraq Survey Group Final Report.” Iraq Survey Group, September 30, 2004. https://www.globalsecurity.org/wmd/library/report/2004/isg-final-report/.

Koblentz, Gregory. Living Weapons. Ithaca: Cornell University Press, 2009.

Koblentz, Gregory. “Pathogens as weapons: The international security implications of biological warfare.” International security 28, no. 3 (2004): 84-122.

Leitenberg, Milton, Raymond Zilinskas, and Jens Kuhn. The Soviet Biological Weapons Program: A History. Cambridge: Harvard University Press, 2012.

Ouagrham-Gormley, Sonia Ben. Barriers to Bioweapons: The Challenges of Expertise and Organization for Weapons Development. Cornell University Press, 2014.

Pappas, G., P. Panagopoulou, L. Christou, and N. Akritidis. “Brucella as a Biological Weapon.” Cellular and Molecular Life Sciences 63 (2006): 2229–36.

Pearson, Alan. “Incapacitating Biochemical Weapons.” The Nonproliferation Review 13, no. 2 (July 1, 2006): 151–88. https://doi.org/10.1080/10736700601012029.

Purkitt, Helen E., and Stephen Burgess. “South Africa’s Chemical and Biological Warfare Programme: A Historical and International Perspective.” Journal of Southern African Studies 28, no. 2 (2002): 229–53.

Reuters, Thomas. “Hillary Clinton Warns Terror Threat from Biological Weapons Is Growing.” The National Post, December 7, 2011. https://nationalpost.com/news/biological-weapons-threat-growing-clinton-says.

Wheelis, Mark, Lajos Rozsa, and Malcolm Dando. Deadly Cultures: Biological Weapons since 1945. Cambridge, MA: Harvard University Press, 2006.

The link to the previous post is broken

Thanks, fixed.

Do China and North Korea have bioweapons?

Thanks for writing this! It's good to know that there probably weren't recent close calls for governments using bioweapons on a large scale on purpose. The history linked in the last post mentions a few times when testing accidents might have hurt nearby people - do you think there were other close calls for testing accidents, including ones that might have affected many more people?

It seems quite possible. Japan aside (and those largely weren't "accidents"), the anthrax accidentally released at Sverdlovsk by the USSR program is a major one. I think Alibek describes more in his book Biohazard, but I'm not certain, and I don't know of anything else on the scale of that incident. It seems possible that there have been more accidental releases to civilian populations than just these.