tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

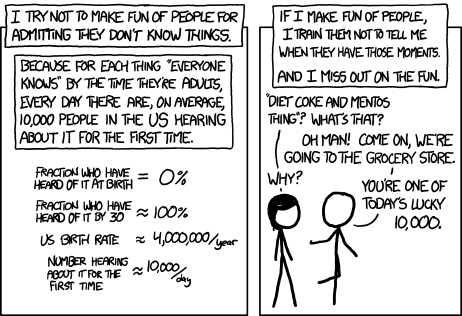

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and you don't need to have done a careful search before asking.

AISafety.info - Interactive FAQ

Additionally, this will serve as a way to spread the word about the project Rob Miles' team[1] has been working on: Stampy and his professional-looking face aisafety.info. This will provide a single point of access into AI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that!

You can help by adding questions (type your question and click "I'm asking something else") or by editing questions and answers. We welcome feedback and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase and volunteer developers to help with the conversational agent and front end that we're building.

We've got more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite for asking a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

- In case you're not comfortable posting a question under your own name, you can use this form to send a question anonymously and I'll post it as a comment.

Guidelines for Answerers:

- Linking to the relevant answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

I've seen people already building AI 'agents' using GPT. One crucial component seems to be giving it a scratchpad to have an internal monologue with itself, rather than forcing it to immediately give you an answer.

If the path to agent-like AI ends up emerging from this kind of approach, wouldn't that make AI safety really easy? We can just read their minds and check what their intentions are?

Holden Karnofsky talks about 'digital neuroscience' being a promising approach to AI safety, where we figure out how to read the minds of AI agents. And for current GPT agents, it seems completely trivial to do that: you can literally just read their internal monologue in English and see exactly what they're planning!

I'm sure there are lots of good reasons not to get too hopeful based on this early property of AI agents, although for some of the immediate objections I can think of I can also think of responses. I'd be interested to read a discussion of what the implications of current GPT 'agents' are for AI safety prospects.

A few reasons I can think of for not being too hopeful, and my thoughts:

A final thought/concern/question: if 'digital neuroscience' did turn out to be really easy, I'd be much less concerned about the welfare of humans, and I'd start to be a lot more concerned about the welfare of the AIs themselves. It would make them very easily exploitable, and if they were sentient as well then it seems like there's a lot of scope for some pretty horrific abuses here. Is this a legitimate concern?

Sorry this is such a long comment, I almost wrote this up as a forum post. But these are very uninformed naive musings that I'm just looking for some pointers on, so when I saw this pinned post I thought I should probably put it here instead! I'd be keen to read comments from anyone who's got more informed thoughts on this!

Since these developments are really bleeding edge, I don't know who is really an "expert" I would trust to evaluate them.

The closest thing to an answer to your question is maybe this recent article I came across on Hacker News, where the comments are often more interesting than the article itself:

https://news.ycombinator.com/item?id=35603756

If you read through the comments, which mostly come from people who have followed the field for a while, they seem to agree that it's not just "scaling up the existing model we have now", mainly because of cost reasons, but that it's go...