This post is the executive summary for Rethink Priorities’ report on our work in 2023 and our strategy for the coming year.

Please click here to download a 6-page PDF summary or click here to read the full report.

Executive Summary

Rethink Priorities (RP) is a research and implementation group. We research pressing opportunities to make the world better and act on them by developing and implementing strategies, projects, and solutions to address key issues. We do this work in close partnership with a variety of organizations, including foundations and impact-focused nonprofits. This year’s highlights include:

- Gaining early traction on AI governance work

- Exploring how risk aversion influences cause prioritization

- Creating a cost-effectiveness tool to compare different causes

- Foundational work on shrimp welfare

- Consulting with GiveWell and Open Philanthropy (OP) on top global health and development opportunities

Key updates for us this year include:

- Launching a new Worldview Investigations team, which over the course of the year completed initial work on the Moral Weight Project and then a sequence on “Causes and Uncertainty: Rethinking Value in Expectation”

- Launching the Institute for AI Policy & Strategy (IAPS), which evolved out of our AI Governance and Strategy Team. More information can be found in IAPS's announcement post

- Commencing four new fiscal sponsorships for unaffiliated groups (e.g., Apollo Research and the Effective Altruism Consulting Network)

- Finding fundraising comparatively more difficult this year; we think funding gaps are the key bottleneck on our impact

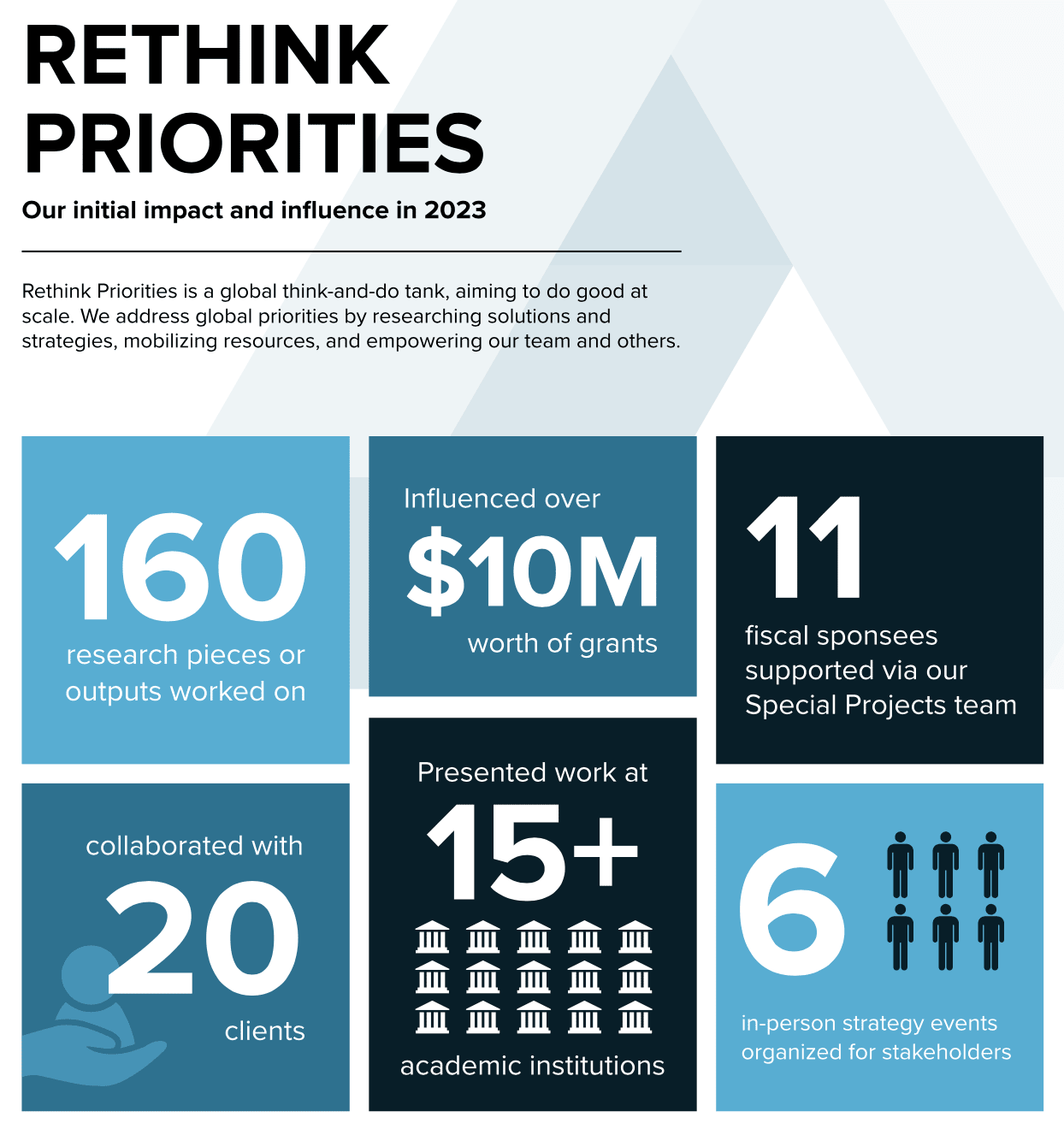

All our published research can be found here.[1] Over 2023, we worked on approximately 160 research pieces or outputs. Our research directly informed grants made by other organizations totaling at least as much as our own operating budget (i.e., over $10M).[2] Further, through our Special Projects program, we supported 11 external organizations and initiatives with $5.1M in associated expenditures. We also have reason to think we influence grantmakers, implementers, and other key stakeholders in ways not captured by either the grants-influenced figure or the Special Projects expenditure figure. We have also completed work for ~20 different clients, presented at more than 15 academic institutions, and organized six of our own in-person convenings of stakeholders.

By the end of 2023, RP will have spent ~$11.4M.[3] We project revenue of ~$11.7M for 2023 and assets of ~$10.3M at year's end. We will have made 14 new hires over 2023, for a total of 72 permanent staff at year's end,[4] corresponding to ~70 full-time equivalent (FTE) staff.[5] Over the year, our expenditure was distributed across focus areas as follows: 29% on animal welfare, 23% on artificial intelligence, 16% on global health and development, 11% on Worldview Investigations, 10% on our existential security work,[6] and 9% on surveys and data analysis, which span various causes.[7]

Some of RP’s key strategic priorities for 2024 are: 1) continuing to strengthen our reputation and relations with key stakeholders, 2) diversifying our funding and stakeholders to scale our impact, and 3) investing more resources in parts of our theory of change beyond producing and disseminating research, in order to increase others’ impact. To accomplish these priorities, we aim to hire for new senior positions.

Some of our tentative plans for next year are:

- Creating key pieces of animal advocacy research, such as a cost-effectiveness tracking database for chicken welfare campaigns and an annual state-of-the-movement report for the farmed animal advocacy movement.

- Addressing potentially critical windows for AI regulation by producing and disseminating research on compute governance and lab governance.

- Consulting with more clients on global health and development interventions to help direct large sums of money effectively.

- Helping launch new projects that aim to reduce existential risk from AI.

- Being an excellent option for any promising projects seeking a fiscal sponsor.

- Providing rapid surveys and analysis to inform high-priority strategic questions.

- Examining how foundations may best allocate resources across different causes, perhaps by creating a tool that takes users' values as inputs across different views.

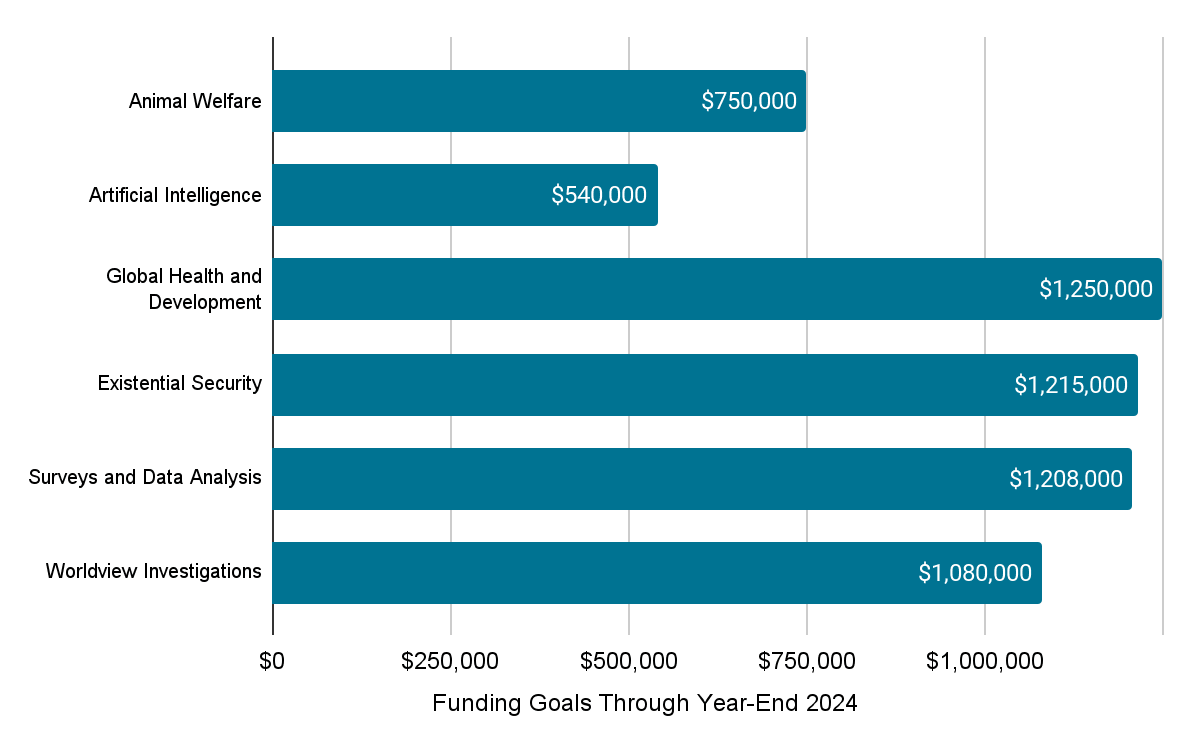

The gap between our current funding and the funding we would need to achieve our 2024 plans is several hundred thousand dollars. To further quantify our funding gaps, this report outlines three scenarios over two years: 1) no growth, 2) low growth (~7.5% growth next year), and 3) moderate growth (~15% growth next year). For each scenario, we roughly estimate the total gap, including our goals for diversifying our funding: receiving grants from funders other than OP (currently our largest funder) and meeting targets for donors giving less than $100,000. Our total current funding goals for non-OP funders under the growth scenarios through year-end 2024 range from ~$500,000 to ~$1.4M. These amounts assume we maintain 12 months of reserves at the end of 2024 for our work throughout 2025.

Our funding goals by cause area, excluding OP funding, through year-end 2024 under the no-growth scenario are shown above.[8]

This report concludes with some reasons to consider funding RP.

The appendix provides background on the organization, a fuller list of outputs by area, and financial statements (balance sheet and 2023 expenses).

***

Some readers may also be interested in our upcoming webinars:

[1] Please subscribe to our newsletter if you want to hear about job openings, events, and research. A little over half of our research is not publicly accessible, either because of client confidentiality or because we lack the capacity to publish it.

[2] This and all other dollar amounts in this review are in USD.

[3] This $11.4M and the other amounts in this paragraph do not include spending by Special Projects of Rethink Priorities (e.g., Epoch, The Insect Institute, Apollo).

[4] This exact number may be slightly off due to staff transitions late this year. We also worked, to differing extents, with close to 30 contractors throughout the year.

[5] Roughly 47 FTE focus on research, 19 FTE on operations and communications, and five FTE on Special Projects (fiscal sponsorship and new project incubation).

[6] Formerly called General Longtermism.

[7] The time allocation across departments closely matches the financial distribution.

[8] We have split our operations team's costs proportionately across all these areas.

Thanks for sharing, Kieran!

Is it possible to donate specifically to a single area of RP? If so, to what extent would the donation be fungible with donations to other areas?

Thanks for the question, Vasco :)

Yes. Donors can restrict their donations to RP to a specific area. When donating, the donor should simply state the restriction, and we will restrict those funds to that use in our accounting.

The only way this would be fungible is if it changes how we allocate unrestricted money. Based on our current plans, this would not happen for donations to our animal welfare or longtermist work but could happen for donations to other areas. If this is a concern for you, please flag it, and we can increase the area's budget by the size of your donation, fully eliminating fungibility concerns.

We take donor preferences very seriously and do not think fungibility concerns should be a barrier to giving to RP. That said, we appreciate those who trust us to allocate money where we think it is needed most.

Thanks for clarifying!

The priorities section requires permission to access.

Should be fixed now.

Has RP published anything laying out its current plans for funding and/or executing work on AI governance and existential risk? I was surprised to see that no RP team members are listed under existential security or AIGS anymore. Is RP's plan going forward that all work in these areas will be carried out by special projects initiatives rather than the core RP team? And how does that relate to funding? When RP receives unrestricted donor funds, are those sometimes regranted to incubated orgs within special projects?

Thanks for your comment and questions!

RP is still involved in work on AI and existential risk. This work now takes place internally at RP on our Worldview Investigations Team and externally via our special projects program.

Across the special projects program in particular, we are supporting over 50 staff in total working on various AI-related projects! RP is still very involved with these groups, from fundraising to comms to strategic support, and I personally dedicate almost all of my time to AI-related initiatives.

As part of this strategy, our team members who were formerly working in our "Existential Security Team" and our "AI Governance and Strategy" department are doing their work under a new banner that is better positioned to have the impact that RP wants to support.

We don't grant RP unrestricted funds to special projects, so if you want to donate to them, you would have to restrict your donation to them. RP unrestricted funds could be used to support our Worldview Investigations Team. Feel free to reach out to me or to Henri Thunberg (henri@rethinkpriorities.org) if you want to learn more.