About three hours ago I posted a brief argument about why one of the US presidential candidates is strongly preferable to the other on five criteria of concern to EAs: AI governance, nuclear war prevention, climate change, pandemic preparedness and overall concern for people living outside the United States. I concluded by urging readers who were American citizens to vote accordingly, and to encourage anyone they might influence to do the same. After about an hour the post had a karma of 23; shortly after that, it was removed from the front page and relegated to 'personal blogposts'. Not surprisingly, at that point it started to attract less attention.

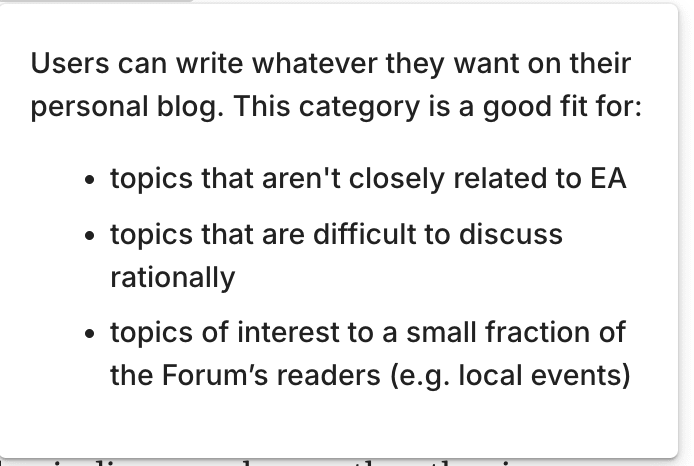

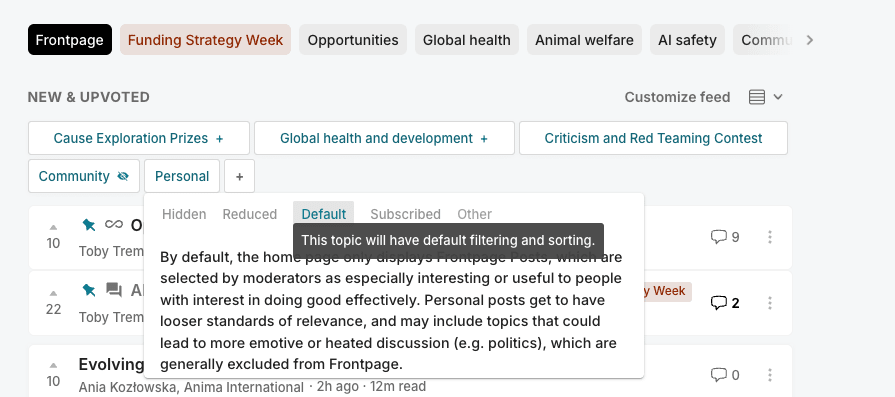

Not having heard of 'personal blogposts', I checked the description, which suggested that they were appropriate for:

- topics that aren't closely related to EA;
- topics that are difficult to discuss rationally;
- topics of interest to a small fraction of the forum's readers (e.g. local events).

Frankly, I can't see how my blogpost fit any of these descriptors. It focused on the candidates' positions on issues of core concern to EAs, and since it had a karma score of 23 after an hour, it was obviously of interest to the forum's readership. The remaining possibility (unless there were other, unstated criteria) is that it was judged to be on a topic that was 'difficult to discuss rationally'.

If so, I think that's a troubling commentary on EA, or on the moderator's conception of EA. My post was clearly partisan, but I don't think any reasonable observer would have called it a rant. This election will almost surely make more difference to most of the causes that EAs hold dear than any other event this year, and perhaps than any other event this decade. Shouldn't the EA community, if anybody, be able to discuss these issues in a reasonably rational manner? I'd be grateful for a response from the moderator justifying the decision to exclude them from the front page.

You can read our politics policy here.

Thanks! Yeah, I thought maybe this was what Larks was referring to. Putting to one side the question of whether that was a valuable discussion or not, I wouldn't put it in the same category as OP's post. The Manifest discussion was about whether an organisation such as Manifest should give a platform to people with views some consider racist; OP's post is an analysis of the policy platform of a leading candidate in what is arguably the world's most important election. I wouldn't describe the former discussion as 'political' in the same way that I would describe OP's post. But perhaps others see it differently?