Sequence summary

This sequence investigates the expected loss of value from non-extinction global catastrophes. This post is a criticism of the biases and ambiguities inherent in longtermist terminology (including ‘global catastrophes’). The next post, A proposed hierarchy of longtermist concepts, lays out the terms which I intend to use for the rest of this sequence, and which encourage less heuristic, more expected-value thinking. Finally, for now, Modelling civilisation after a catastrophe lays out the structure of a proposed model which will inform the direction of my research for the next few months. If feedback on the structure is good, later parts will populate the model with some best-guess values, and present it in an editable form.

Introduction

Longtermist terminology has evolved haphazardly, so that much of it is misleading or noncomplementary. Michael Aird wrote a helpful post attempting to resolve inconsistencies in our usage, but that post’s very necessity, along with its use of partially overlapping Venn diagrams (which imply no formal relationships between the terms), itself highlights these problems. Moreover, as longtermism has evolved, assumptions that started out as heuristics seem to have become locked into the discussion via the terminology, biasing us towards those heuristics and away from expected value analyses.

In this post I discuss these concerns. I expect it to be relatively controversial, and it is less a prerequisite for the rest of the sequence than an explanation of why I’m not using standard terms, so I would emphasise that it is strictly optional reading: think of it as a 'part 0' of the sequence. Feel free to skip ahead if you disagree strongly or just aren’t particularly interested in a discussion of terminology.

Concepts under the microscope

Existential catastrophe

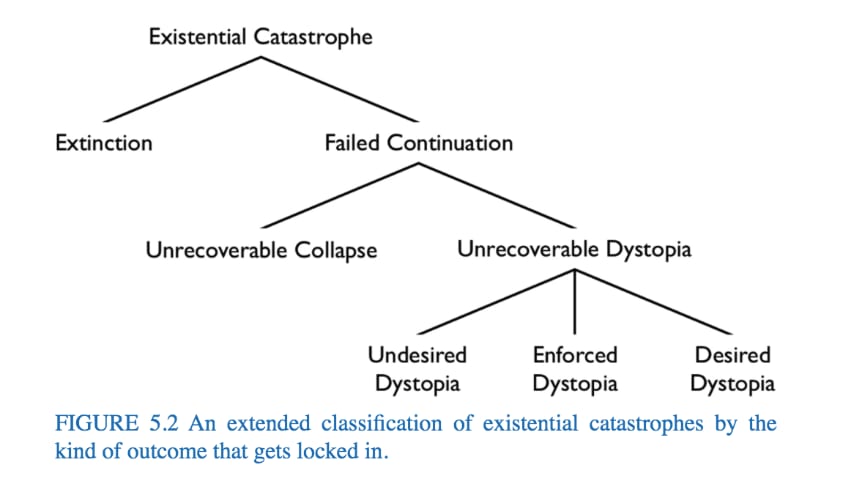

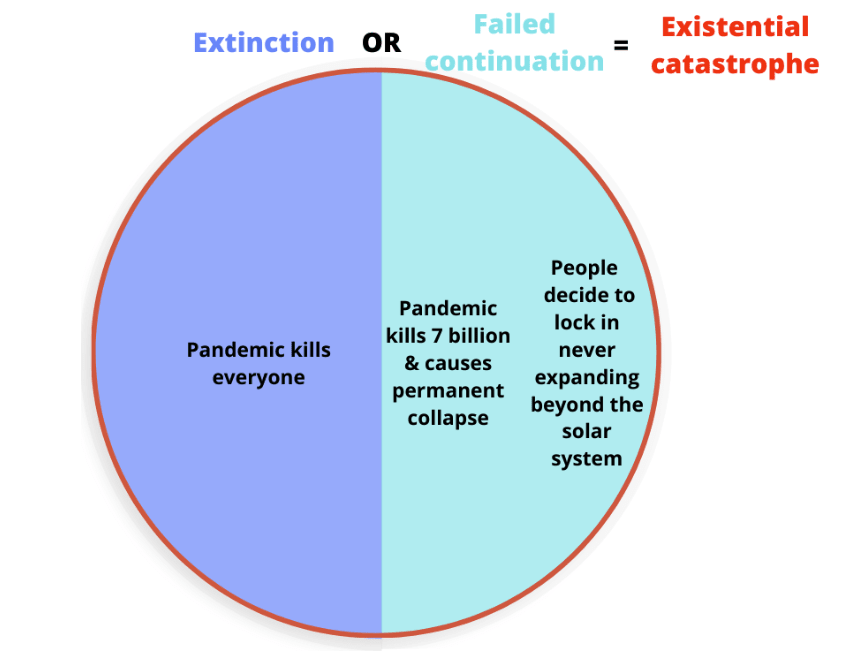

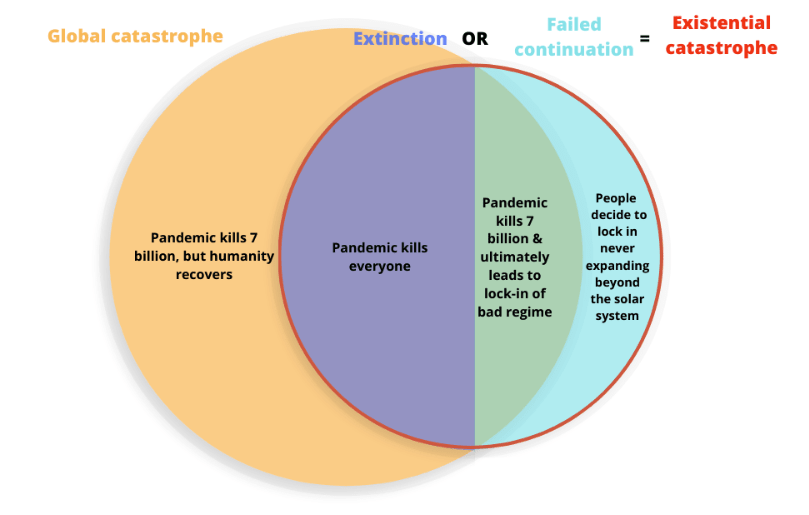

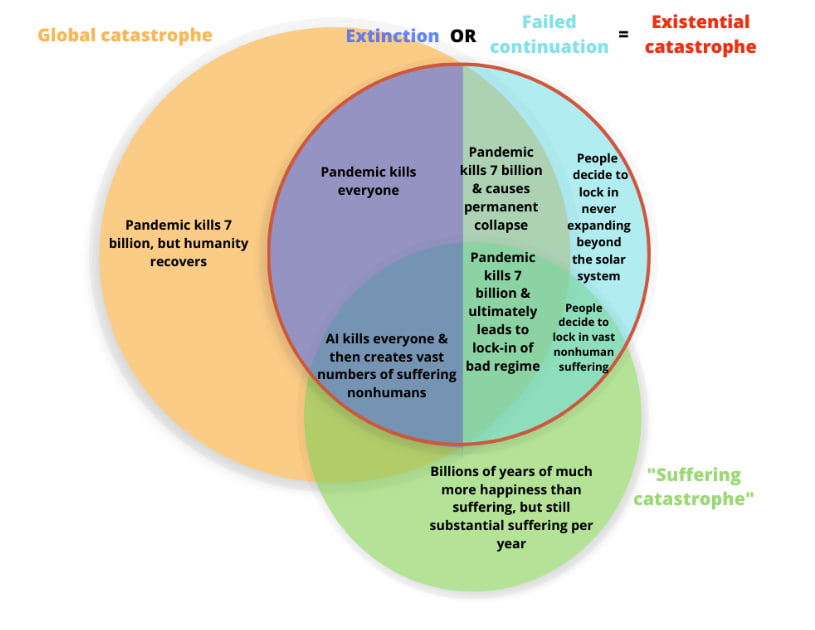

Recreating Ord and Aird’s diagrams of the anatomy of an existential catastrophe here, we can see an ‘existential catastrophe’ has various possible modes:

Venn diagram figures all from Aird’s post

It’s the ‘failed continuation’ branch which I think needlessly muddies the waters.

An ‘existential catastrophe’ doesn’t necessarily relate to existence…

In theory an existential catastrophe can describe a scenario in which civilisation lasts until the end of the universe, but has much less net welfare than we imagine it could have had.

It seems odd to consider this an ‘existential’ risk - there are many ways in which we can imagine positive or negative changes to expected future quality of life (see for example Beckstead’s idea of trajectory change). Classing low-value-but-interstellar outcomes as existential catastrophes seems unhelpful for two reasons: it introduces definitional ambiguity over how much net welfare must be lost for an outcome to qualify, and questions of expected future quality of life are very distinct from questions of future quantity of life, so they seem like they should be asked separately.

… nor involve a catastrophe that anyone alive recognises

The concept also encompasses a civilisation that lives happily on Earth until the sun dies, perhaps even finding a way to survive that, but never spreads out across the universe. This means that, for example, universal adoption of a non-totalising population ethic would be an existential catastrophe. I’m strongly in favour of totalising population ethics, but building that view into the definition seems needlessly biasing.

‘Unrecoverable’ or ‘permanent’ states are a superfluous concept

In the diagram above, Ord categorises ‘unrecoverable dystopias’ as a type of existential risk. He actually seems to consider them necessarily impermanent, but (in their existentially riskiest form) irrevocably harmful to our prospects, saying ‘when they end (as they eventually must), we are much more likely than we were before to fall down to extinction or collapse than to rise up to fulfill our potential’.[1] Bostrom imagines related scenarios in which ‘it may not be possible to climb back up to present levels if natural conditions are less favorable than they were for our ancestors, for example if the most easily exploitable coal, oil, and mineral resources have been depleted.’

The common theme in these scenarios is that they lock humanity onto Earth, meaning we go extinct prematurely (as in, much sooner than we could have done if we’d expanded into the universe). Understood this way, the vast majority of the loss of value from either scenario comes from that premature extinction, not from the potentially lower quality of life until then or (following Bostrom’s original calculation) from a delay of even 10 million years on the path to success. So at the big-picture level to which an ‘existential catastrophe’ applies, we can class ‘permanent’ states as ‘premature extinction’.
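A toy Python sketch of that comparison, under loudly stated assumptions: value accrues uniformly over a fixed horizon whose end date a delay doesn't move, and an Earth-bound future is worth a tiny fixed fraction of an interstellar one. The function names and all parameter values (a 10-billion-year horizon, an Earth-to-stars value ratio of 1e-10) are hypothetical illustrations, not figures from Bostrom or from this sequence.

```python
# Toy comparison of value lost to a delay versus value lost to Earth lock-in.
# All parameter values are illustrative assumptions, not estimates from the post.


def delay_loss(delay_years: float, horizon_years: float) -> float:
    """Fraction of total value lost if value accrues uniformly over a fixed
    horizon and expansion starts `delay_years` late (the end date is unchanged)."""
    return min(delay_years / horizon_years, 1.0)


def lock_in_loss(earth_bound_value: float, interstellar_value: float) -> float:
    """Fraction of total value lost if civilisation never leaves Earth and
    goes extinct once Earth becomes uninhabitable."""
    return 1.0 - earth_bound_value / interstellar_value


if __name__ == "__main__":
    HORIZON_YEARS = 1e10          # assumed span over which interstellar value accrues
    DELAY_YEARS = 1e7             # the 10-million-year delay mentioned above
    EARTH_TO_STARS_RATIO = 1e-10  # assumed value of an Earth-bound future relative to an interstellar one

    print(f"10-million-year delay: {delay_loss(DELAY_YEARS, HORIZON_YEARS):.3%} of value lost")
    print(f"Earth lock-in:         {lock_in_loss(EARTH_TO_STARS_RATIO, 1.0):.8%} of value lost")
```

Under these assumptions the delay costs about a tenth of a percent of the total while lock-in costs almost all of it; the ordering only flips if the Earth-bound share becomes large or the horizon shrinks to something comparable to the delay.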

This doesn’t hold for scenarios in which a totalitarian government rules over the universe until its heat death, but a) Ord’s ‘as they eventually must’ suggests he doesn’t consider that a plausible outcome, and b) inasmuch as it is a plausible outcome, it would be subject to the ‘needn’t relate to existence’ criticism above.

Interpreting ‘unrecoverable’ probabilistically turns everything into a premature extinction risk

There seems little a priori reason to draw a categorical distinction between ‘unrecoverable’ and ‘recoverable’ states:

- Events that have widely differing extinction probabilities conditional on their occurrence might have widely differing probabilities of occurrence and/or widely differing tractability - so longtermists still need to do EV estimates to prioritise among them.

- It’s extremely hard to estimate even our current premature extinction probability - on some assumptions, we’re already in a state where it’s high enough to give low expected value even given the possibility of astronomical value. So on any probabilistic definition of an ‘unrecoverable’ state we might already be in one. Other people think a single serious setback would make success very unlikely.

- For categorical purposes it might not even be that valuable to estimate that probability - it’s hard to imagine a real-world resource-depletion or totalitarian scenario so locked in that a strict expected value maximiser would judge the probability of success low enough to give up on astronomical value.

This section isn’t meant to be a reductio ad absurdum - in the next couple of posts I suggest a model on which even setbacks as ‘minor’ as the 2008 financial crisis carry some amount of premature extinction riskiness.
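Because the relevant quantity is continuous, prioritisation needs an expected-value product rather than a recoverable/unrecoverable label. The following Python sketch ranks three hypothetical event classes; the `Event` class, its field names, and every probability are invented for illustration, and 'tractability' is simplified to the fraction of an event's probability removed per unit of resources.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A hypothetical class of event; every number attached to one is made up."""
    name: str
    p_occur: float          # probability the event occurs this century
    p_extinct_given: float  # P(premature extinction | event occurs)
    tractability: float     # fraction of p_occur removed per unit of resources

    def expected_extinction_risk(self) -> float:
        return self.p_occur * self.p_extinct_given

    def marginal_value(self) -> float:
        """Premature-extinction probability removed per unit of resources."""
        return self.p_occur * self.tractability * self.p_extinct_given


def rank(events, key):
    """Event names sorted from highest to lowest by `key`."""
    return [e.name for e in sorted(events, key=key, reverse=True)]


EVENTS = [
    Event("near-permanent lock-in", p_occur=0.001, p_extinct_given=0.9, tractability=0.01),
    Event("major setback", p_occur=0.1, p_extinct_given=0.05, tractability=0.02),
    Event("minor setback", p_occur=0.5, p_extinct_given=0.001, tractability=0.05),
]

if __name__ == "__main__":
    print("By P(extinction | event):", rank(EVENTS, key=lambda e: e.p_extinct_given))
    print("By marginal value:       ", rank(EVENTS, key=Event.marginal_value))
```

Ranked by conditional extinction probability alone, the near-permanent lock-in comes first; once probability of occurrence and tractability are multiplied in, it comes last.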

Global catastrophe

Aird’s essay, referencing Bostrom & Ćirković, considers a catastrophe causing 10,000 deaths or $10,000,000,000 of damage to be insufficient to qualify as ‘global’ and considers ‘a catastrophe that caused 10 million fatalities or 10 trillion dollars worth of economic loss’ to be sufficient - a definition shared by the UN. Aird thus presents the concept as an overlapping Venn diagram:

But these distinctions, as Bostrom & Ćirković observe, are fairly loose and not that practically relevant - and the concept of ‘recovery’ (see orange area) is importantly underspecified.

A global catastrophe isn’t necessarily global…

Covid has caused about 6 million confirmed deaths (probably over 20 million in practice) and over 10 trillion dollars’ worth of economic loss, which meets the definition above, yet I’m unsure whether in practice longtermists typically treat it as a global catastrophe.

Any absolute-value definition also misses something about relative magnitude. According to the World Bank, global GDP is now about 2.5 times its value 20 years ago, so 10 trillion dollars’ worth of economic loss around 2000 would have constituted 2.5 times the proportional harm that the same loss does today. And if the economy continues growing at a comparable rate, global GDP will be roughly 100 times its current size a century from now, so such a loss would barely register.
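The arithmetic can be checked with a short Python sketch. It assumes constant exponential growth at the rate implied by the World Bank ratio quoted above (2.5 over 20 years, roughly 4.7% a year), and it ignores the distinction between nominal and real dollars, so the same basis has to be used for both GDP and the loss. The function names are hypothetical.

```python
GDP_RATIO_20Y = 2.5  # global GDP now relative to 20 years ago, per the World Bank figure above


def annual_growth_rate(ratio: float = GDP_RATIO_20Y, years: float = 20.0) -> float:
    """Constant annual growth rate implied by a GDP ratio over `years`."""
    return ratio ** (1.0 / years) - 1.0


def relative_harm(years_from_now: float, ratio: float = GDP_RATIO_20Y, years: float = 20.0) -> float:
    """Share of world GDP that a fixed dollar loss represents at a given time,
    relative to its share today, assuming growth continues at the same rate.
    Negative values of `years_from_now` look into the past."""
    gdp_multiple = ratio ** (years_from_now / years)
    return 1.0 / gdp_multiple


if __name__ == "__main__":
    print(f"Implied annual growth:  {annual_growth_rate():.1%}")
    print(f"Same loss 20 years ago: {relative_harm(-20):.2f}x today's proportional harm")
    print(f"Same loss a century on: {relative_harm(100):.4f}x today's proportional harm")
```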

It’s also vague about time: humanity has had multiple disasters that might have met the above definition, but most of them were spread over many years or decades.

… and focusing on it as a category encourages us to make premature assumptions

The longtermist focus on global catastrophic risks appears to be a Schelling point, perhaps a form of maxipok - the idea that we should maximise the probability of an ‘ok outcome’. But though Bostrom originally presented maxipok as ‘at best … a rule of thumb, a prima facie suggestion, rather than a principle of absolute validity’, it has come to resemble the latter, without, to my knowledge, any rigorous defence of why it should now be so.

To estimate the counterfactual value of some event or class of events on the long-term future, we need to determine two things separately: the magnitude of such events in some event-specific unit (here, fatalities or $ cost), and our credence in the long-term value of the event’s various effects. Focusing on ‘catastrophes’ implies that we believe these two questions are strongly correlated - that we can be much more confident in the outcome of higher-magnitude events. In the limit (extinction) this seems like a robust belief (though perhaps not a settled question), but it’s less clear that it holds for lesser magnitudes. There might be good arguments that the likelihood of long-term disvalue from an event that caused 10,000,000 deaths is higher than that from one that caused 10,000, but the focus on global catastrophes bakes that belief into longtermist discussion before any argument has established such a strong correlation.
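To illustrate why the strength of that correlation matters, the Python sketch below computes what share of expected long-term disvalue comes from events at or above the 10-million-death threshold, under two assumed curves for P(long-term disvalue | magnitude). The event frequencies, both curves, and the function names are invented for illustration, and treating disvalue as a yes/no outcome per event is a further simplification.

```python
# Hypothetical expected number of events per century, keyed by deaths caused.
FREQUENCY = {1e4: 10.0, 1e5: 3.0, 1e6: 1.0, 1e7: 0.3, 1e8: 0.1}

GLOBAL_THRESHOLD = 1e7  # 10 million deaths, the 'global catastrophe' threshold cited above


def steep(deaths: float) -> float:
    """Assumed P(long-term disvalue | event), rising in proportion to magnitude."""
    return min(1.0, deaths / 1e9)


def shallow(deaths: float) -> float:
    """Assumed P(long-term disvalue | event), rising only with the square root of magnitude."""
    return min(1.0, 1e-3 * (deaths / 1e4) ** 0.5)


def share_above(threshold, frequency, p_disvalue):
    """Share of expected long-term disvalue coming from events at or above `threshold`."""
    contributions = {m: f * p_disvalue(m) for m, f in frequency.items()}
    total = sum(contributions.values())
    return sum(c for m, c in contributions.items() if m >= threshold) / total


if __name__ == "__main__":
    for model in (steep, shallow):
        share = share_above(GLOBAL_THRESHOLD, FREQUENCY, model)
        print(f"{model.__name__}: {share:.0%} of expected disvalue from 'global catastrophes'")
```

With these made-up inputs the steep curve puts roughly 90% of the expected disvalue above the threshold, while the shallower curve puts only about 40% there. Which curve is closer to reality is exactly the question the category presupposes.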

‘Recovery’ from catastrophe is a vague goal…

The concept of ‘recovery’ from a collapse is widely referred to in existential-risk-related discussion (see note 2.22 in last link) - but it’s used to mean anything from ‘recovering industry’ through something like ‘getting to technology equivalent to the modern day’s’[2], up to ‘when human civilization recovers a space travel program that has the potential to colonize space’.

For any of these interpretations, the differential technological progress of a reboot would also make it hard to identify a technological state ‘equivalent’ to the modern world.[3]

… and not the one we actually care about

As longtermists, we need to look at the endgame, which, per Bostrom’s original discussion, is approximately ‘settling the Virgo Supercluster’.[4] Given a catastrophe, the important question is not ‘how likely is it we could reinvent the iPhone?’, but ‘how likely is it that civilisation would reach the stars?’ This question, in addition to sounding cooler, captures two important concerns that, as far as I’ve seen, have only been discussed in highly abstract terms in the collapse and resilience literature:

- the difference in difficulty for future civilisations of a) getting back to industrialisation, or wherever ‘recovery’ would leave us, and of b) getting from there to becoming robustly interstellar

- the possibility that those future civilisations might regress again, and that this could happen many times
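Both concerns can be expressed as a toy absorbing Markov chain: each successive civilisation either becomes robustly interstellar, goes extinct, or regresses and hands over to the next. The Python sketch below is only an illustration of that structure, not the model proposed later in this sequence; the function name and all probabilities are hypothetical. With constant per-cycle odds s of success and e of extinction, the eventual probability tends to s / (s + e).

```python
def p_reach_stars(cycles):
    """Probability that some civilisation eventually becomes robustly interstellar.

    `cycles` is a sequence of (p_success, p_extinction) pairs, one per successive
    civilisation; the remaining probability in each cycle is regression, after
    which the next cycle begins.
    """
    p_reached_cycle = 1.0  # probability that this cycle starts at all
    total = 0.0
    for p_success, p_extinction in cycles:
        if p_success < 0 or p_extinction < 0 or p_success + p_extinction > 1:
            raise ValueError("each cycle needs valid probabilities")
        total += p_reached_cycle * p_success
        p_reached_cycle *= 1.0 - p_success - p_extinction  # regressed; try again
    # Probability still left in p_reached_cycle belongs to histories unresolved
    # after the last modelled cycle; this sketch counts them as failure.
    return total


if __name__ == "__main__":
    constant = [(0.2, 0.1)] * 200                      # same odds every cycle
    declining = [(0.2, 0.1), (0.1, 0.1), (0.05, 0.1)]  # each reboot is harder
    print(f"First cycle only: {p_reach_stars(constant[:1]):.3f}")
    print(f"Constant odds:    {p_reach_stars(constant):.3f}")  # approaches s / (s + e)
    print(f"Declining odds:   {p_reach_stars(declining):.3f}")
```

With the made-up numbers, counting only the first civilisation gives 0.2, allowing many retries at constant odds gives about 0.67, and letting each reboot get harder gives 0.298 over three cycles.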

Suffering catastrophe

Aird defines this as ‘an event or process involving suffering on an astronomical scale’.

The definition of a suffering catastrophe depends on your moral values

For example, a universe full of happy people who occasionally allow themselves a moment of melancholy for their ancestors would qualify under a sufficiently negative-leaning population ethic. Perhaps less controversially, a generally great universe with some serious long-term suffering would qualify under a less stringently negative ethic. So it often seems more helpful to talk about specific scenarios (such as systemic oppression of animals). And when we do talk about suffering catastrophes, or by extension S-risks, we should make explicit which population ethic we are assuming.
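As a crude illustration of that dependence, the Python sketch below models a family of population ethics by a single weight on suffering relative to happiness, and treats 'qualifies as a catastrophe' as 'aggregate value turns negative'. Both simplifications, the function names, and the per-person numbers are assumptions for illustration; real negative-leaning views are generally not this simple.

```python
def weighted_value(happiness: float, suffering: float, suffering_weight: float) -> float:
    """Aggregate value under a crude family of population ethics that count each
    unit of suffering `suffering_weight` times as heavily as a unit of happiness."""
    return happiness - suffering_weight * suffering


def break_even_weight(happiness: float, suffering: float) -> float:
    """Suffering weight at which the scenario's aggregate value drops to zero."""
    return float("inf") if suffering == 0 else happiness / suffering


if __name__ == "__main__":
    # Hypothetical per-person totals for a 'happy universe with occasional melancholy'.
    HAPPINESS, SUFFERING = 100.0, 0.01
    for weight in (1, 100, 1e6):
        print(f"weight {weight:g}: value {weighted_value(HAPPINESS, SUFFERING, weight):g}")
    print(f"verdict flips at weight {break_even_weight(HAPPINESS, SUFFERING):g}")
```

The same scenario is strongly positive at weight 1 and strongly negative at weight 1e6; the verdict flips at a weight of about 10,000, which is why the ethic in play needs stating.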

A mini-manifesto for expected-value terminology

Inspired by the discussion above, I think terminology for a subject amenable to expected value analysis benefits from having as many as possible of the following qualities:

- Having well defined formal relationships between the key concepts

- Using categorical distinctions only to reflect relatively discrete states. That is, between things that seem ‘qualitatively different’, such as plants and animals, rather than between things whose main difference is quantitative, such as mountains and hills

- Using language which is as close to intuitive/natural use as possible

- Using precise language which doesn’t evoke concepts that aren’t explicitly intended in the definition

That said, there will usually be a tradeoff between the last two.

In the next post, I’ll give the terms that I intend to use for discussion around ‘catastrophes’ in the rest of the sequence, which adhere as closely as possible to these principles.

[1] The Precipice, p. 225

[2] For an example of it meaning ‘recovering industry’, see Figure 3 in Long-Term Trajectories of Human Civilization. For an example of it meaning ‘getting to the modern day’, see the text immediately preceding and referring to that figure, which describes ‘recovering back towards the state of the current civilization’.

For another example of recovery being framed in terms of the industrial revolution, see What We Owe the Future, which doesn’t define recovery explicitly, but whose section entitled ‘Would We Recover from Extreme Catastrophes?’ proceeds chronologically only as far as the first industrial revolution (the final mention of time in the section is ‘Once Britain industrialised, other European countries and Western offshoots like the United States quickly followed suit; it took less than two hundred years for most of the rest of the world to do the same. This suggests that the path to rapid industrialisation is generally attainable for agricultural societies once the knowledge is there’).

In Luisa Rodriguez’s post specifically on the path to recovery, she seems to switch from ‘recovery of current levels of technology’ in her summary to ‘recovering industry’ in the discussion of the specifics.

[3] In The Knowledge, Lewis Dartnell evocatively describes how ‘A rebooting civilization might therefore conceivably resemble a steampunk mishmash of incongruous technologies, with traditional-looking four-sail windmills or waterwheels harnessing the natural forces not to grind grain into flour or drive trip-hammers, but to generate electricity to feed into local power grids.’

[4] More specifically, settling it with a relatively benign culture. I tend toward optimism in assuming that if we settle it, it will be a pretty good net outcome, but, per a theme running throughout this post, the average welfare per person is a distinct question.

Thank you for the post!

I have thought a lot about one particularly biased piece of terminology in longtermism: "humanity" / "future people". Why is it not "all sentient beings"? (I am guessing one big reason is strategic rather than conceptual.)

It is not a bias, at least not as the term is used by leading longtermists. Toby Ord explains it clearly in The Precipice (pp. 38–39):

I understand that Ord, and MacAskill too, have given similar explanations, each of them multiple times. But I disagree that the terminology is not biased - it still leads a lot of readers/listeners to focus on the future of humans if they haven't seen/heard these caveats, and maybe even if they have.

I don't think the fact that, among organisms, only humans can help other sentient beings justifies almost always using language like "future of humanity", "future people", etc. Take the sentence "future people matter morally just as much as people alive today". Whether it should be said with "future people" or "future sentient beings" shouldn't have anything to do with whether humans will be the only beings who can help other sentient beings. It just looks like a strategic move to reduce the weirdness of longtermism, or to avoid fighting two philosophical battles (which are probably sound reasons, but I also worry that this practice locks in humancentric/speciesist values). So yes, until AGI arrives only humans can help other sentient beings, but the future that matters should still be a "future of sentient beings".

And I am not convinced that the terminology hasn't served speciesism/humancentrism in the community. As a matter of fact, some prominent longtermists, when evaluating the value of the future, have focused on how many future humans there could be and what could happen to them. Holden Karnofsky and some others took it further and discussed digital people. MacAskill wrote about the number of nonhuman animals in the past and present in WWOTF, but didn't discuss how many of them there will be and what might happen to them.

Fair enough.

In this context, I think there are actually two separate ways in which terminology can inadvertently bias our thinking:

There's a footnote in the next post to the effect that 'people' shouldn't be taken too literally. I think that's the short answer in many cases - that it's just easier to say/write 'people'. There's also a tradition in philosophy of using 'personhood' more generally than to refer to homo sapiens. It's maybe a confusing tradition, since I think much of the time people also use the word in its more commonly understood way, so the two can get muddled.

For what it's worth, my impression is that few philosophical longtermists would exclude nonhuman animals from moral consideration, though they might disagree around how much we should value the welfare of particularly alien-seeming artificial intelligences.

I think "easier to say/write" is not a good enough reason (certainly much weaker than the concern of fighting two philosophical battles, or weirding people away) to always say/write "people"/"humanity".

My understanding is that when it was proposed to use humans/people/humanity instead of men/man/mankind to refer to humans generally, there was some pushback. I didn't check the full details of the pushback, but I can imagine some people saying man/mankind is just easier to say/write because the words are shorter and were more commonly used at the time. And I am pretty sure that "mankind" not being gender-neutral is what eventually led feminists, writers, and even etymologists to support using "humanity" instead.

You mentioned that "the two [meanings] can get muddled". For me, that's a reason to use "sentient beings" instead of "people". This was actually the reason some etymologists gave when they supported using "humanity" in place of "mankind": in their time, the word "man/men" had come to mean both "humans" and "male humans", making it possible, if not likely, for anything relating to the whole of humanity to seem to have nothing to do with women.

And it seems to me that, just as we needed to ask whether "mankind" fails to explicitly include women as stakeholders given the now most common meaning of "man", we need to ask whether "people" is a good umbrella term for all sentient beings. It clearly is not.

I am glad that you mentioned the word "person". I think the same problems still exist with this word, insofar as people think "person" can only be used of humans (which arguably most people do), but they are less severe. For instance, some animal advocates are trying to get some nonhuman animals granted legal personhood (and some environmentalists have sought legal personhood for natural entities, some successfully). My current take is that "person" is better, but still not ideal, as it is quite clear that most people now can only think of humans when they see/hear "person".

I agree that "few philosophical longtermists would exclude nonhuman animals from moral consideration". But I take "few" literally, because I do think there is at least one who would exclude nonhuman animals. Eliezer Yudkowsky (though some might doubt how much of a philosopher/longtermist he is) holds the view that pigs cannot feel pain (and by choosing pigs he is basically saying no nonhuman animals can feel pain). It also seems to me that some "practical longtermists" I have come across omit or heavily discount nonhuman animals in their longtermist pictures. For instance, Holden Karnofsky said in a 2017 article on Radical Empathy that his "own reflections and reasoning about philosophy of mind have, so far, seemed to indicate against the idea that e.g. chickens merit moral concern. And my intuitions value humans astronomically more." (But he accepts that he could be wrong, and that there are smart people who think he is wrong about this, so he is willing to have OP's "neartermist" part help nonhuman animals.) Still, it seems to me that the claim is mostly right, because most longtermists are EAs or LessWrongers or both. But I expect some non-EA/LessWrong philosophers to become longtermists in the future (and presumably this is what the advocates of longtermism want, even those who only care about humans), and I also expect some of them not to care about animals.

Also, excluding nonhumans from longtermism philosophically is different from excluding nonhumans from the longtermist project. The fact that there isn't yet a single project supported by longtermist funders working on animal welfare under the longtermist worldview makes the philosophical inclusion rather cold comfort, if not depressing. (I mean, not even a single one, even though one could easily be justified by worldview/intervention diversification. And I can assure you that it is not for lack of proposals.)

P.S. I sometimes have to say "animals" instead of "nonhuman animals" in my writing so as not to freak people out or make them think I am an extremist. But this clearly suffers from the same problem I am complaining about.

Wow, this and the next post are exceptionally clear, major kudos

Thank you :)

When I saw "implicit bias" in the post's title, I was expecting a very different post. I was expecting a definition along the lines of:

I don't know if others will have a similar misunderstanding of what you meant by "implicit bias". But if so, to avoid confusion, I'd suggest you use a different term like "implicit assumption" instead.

Thanks! I've changed it to 'biasing assumptions', since everything has implicit assumptions :)

Thanks for this post!

I strongly agree with this:

But I feel like it'd be more confusing at this point to start using "existential risk" to mean "extinction risk" given the body of literature that's gone in for the former?

I certainly don't think we should keep using the old terms with different meanings. I suggest using some new, cleaner terms that are more conducive to probabilistic thinking. In practice I'm sure people will still talk about existential risk for the reason you give, but perhaps less so, or perhaps specifically when talking about less probabilistic concepts such as population ethics discussions.

Thank you so much, this is especially helpful for a newbie like me who was a little confused about these things and now thinks that the confusion is mainly to do with terminology.