This post is part of the Community Events Retrospective sequence.

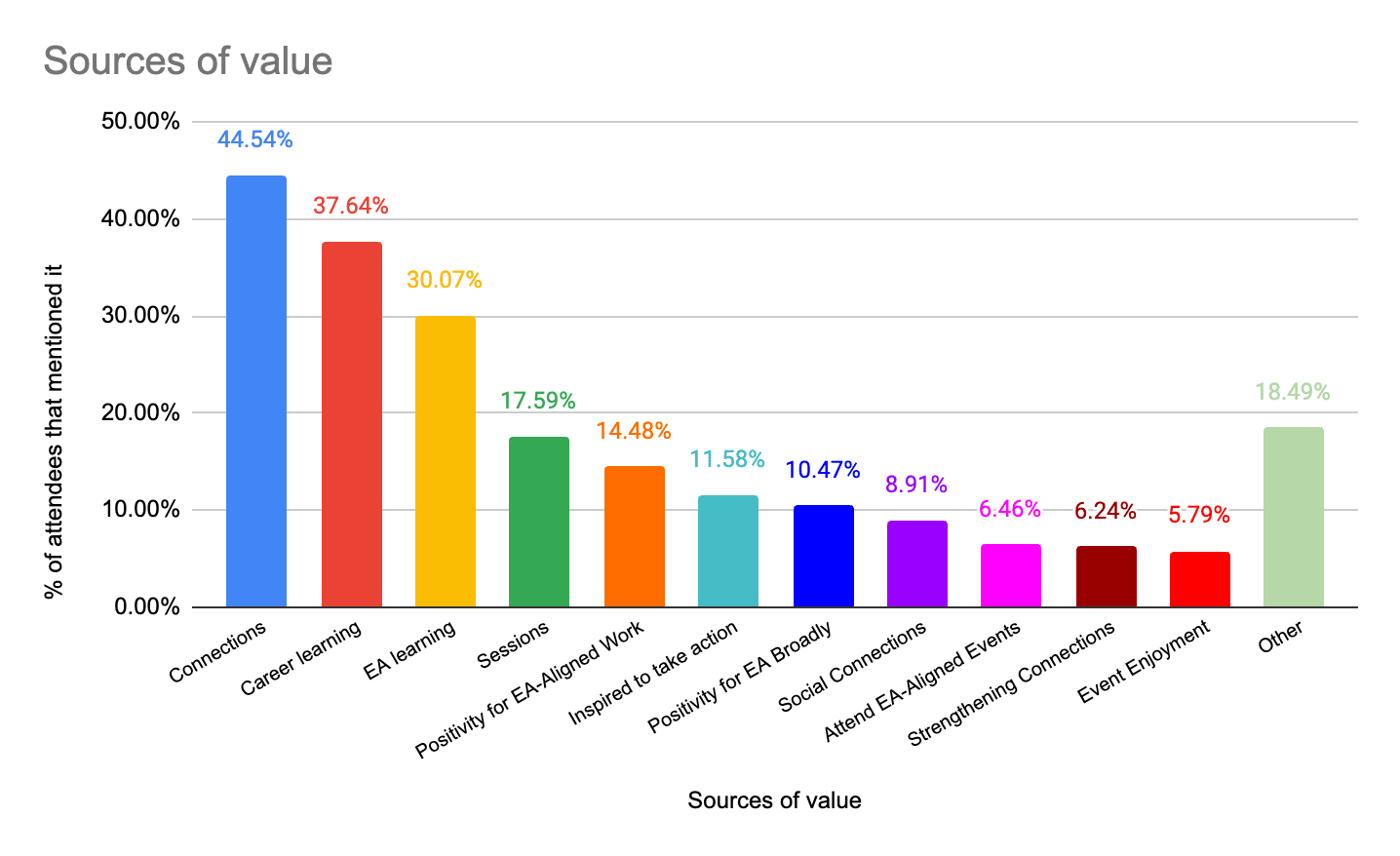

Earlier this year, I surveyed ~500 people who had attended an EAGx event or an event funded by the Community Events Programme. The key sources of value that attendees report getting are:

- New connections in their network;

- Learning about new career options; and

- Learning about EA cause areas.

These findings are quite rough, and we plan to get more clarity on sources of value from our events in future surveys.

Results

In the survey, I asked attendees about routes to valuable outcomes from our events,[1] and then coded attendee responses into the following baskets:[2]

- Connections

- Learning about new career options

- Learning more about EA cause areas or taking them more seriously

- Increased motivation or positivity for EA broadly

- Increased motivation or positivity for EA-aligned work

- Finding specific sessions interesting or valuable

- More likely to attend other EA-aligned events/opportunities

- Inspired or motivated to take a specific action

- Strengthening existing connections

- Making friends or social connections

- Event enjoyment (just having a really positive experience)

These are the results:

A key limitation of this analysis is that we explicitly asked attendees to report how connections were valuable to them, so the “Connections” count is likely artificially high. Learning about career options and EA cause areas (including via sessions) came through as a frequent source of value for our attendees. I had expected more attendees to report general positivity about the event, but this appeared far less frequently than learning or connections.

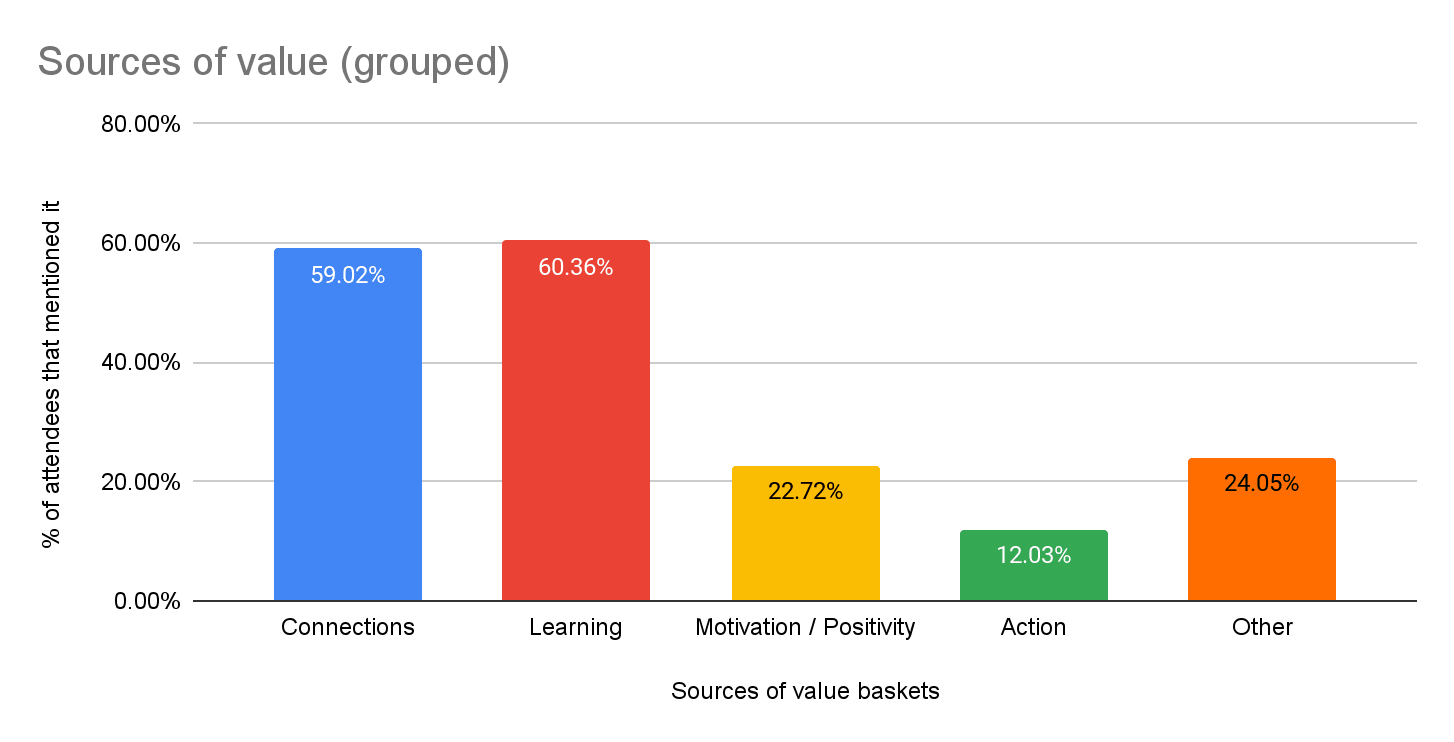

Some of these options have significant overlap. To get a slightly clearer picture of how “learning” in general might compare to “connections” in general, I grouped options together into the following baskets:

- Connections (“Connections”, “Strengthening existing connections” and “Making friends or social connections”)

- Learning (“Learning about new career options”, “Finding specific sessions interesting or valuable” and “Learning more about EA cause areas or taking them more seriously”)

- Motivation / Positivity (“Increased motivation or positivity for EA broadly” and “Increased motivation or positivity for EA-aligned work”)

- Action (“More likely to attend other EA-aligned events/opportunities” and “Inspired or motivated to take a specific action”)

- Other (“Event enjoyment” and “Other”).

These are the results using the grouped baskets:

This grouping favours the “Learning” basket, which combines several frequently cited categories that were previously counted separately. Even so, it’s striking that Learning outperforms Connections, given that the survey explicitly prompted attendees to report on connections.

This updates me towards thinking that learning (about career options or ideas) is a large source of value from EA community-building events. Like connections, it’s a source of value whose downstream outcomes will be difficult to track: an attendee might form a deeper understanding of a key EA cause area, take action later on, and yet never report the event as a pivotal moment in their journey.

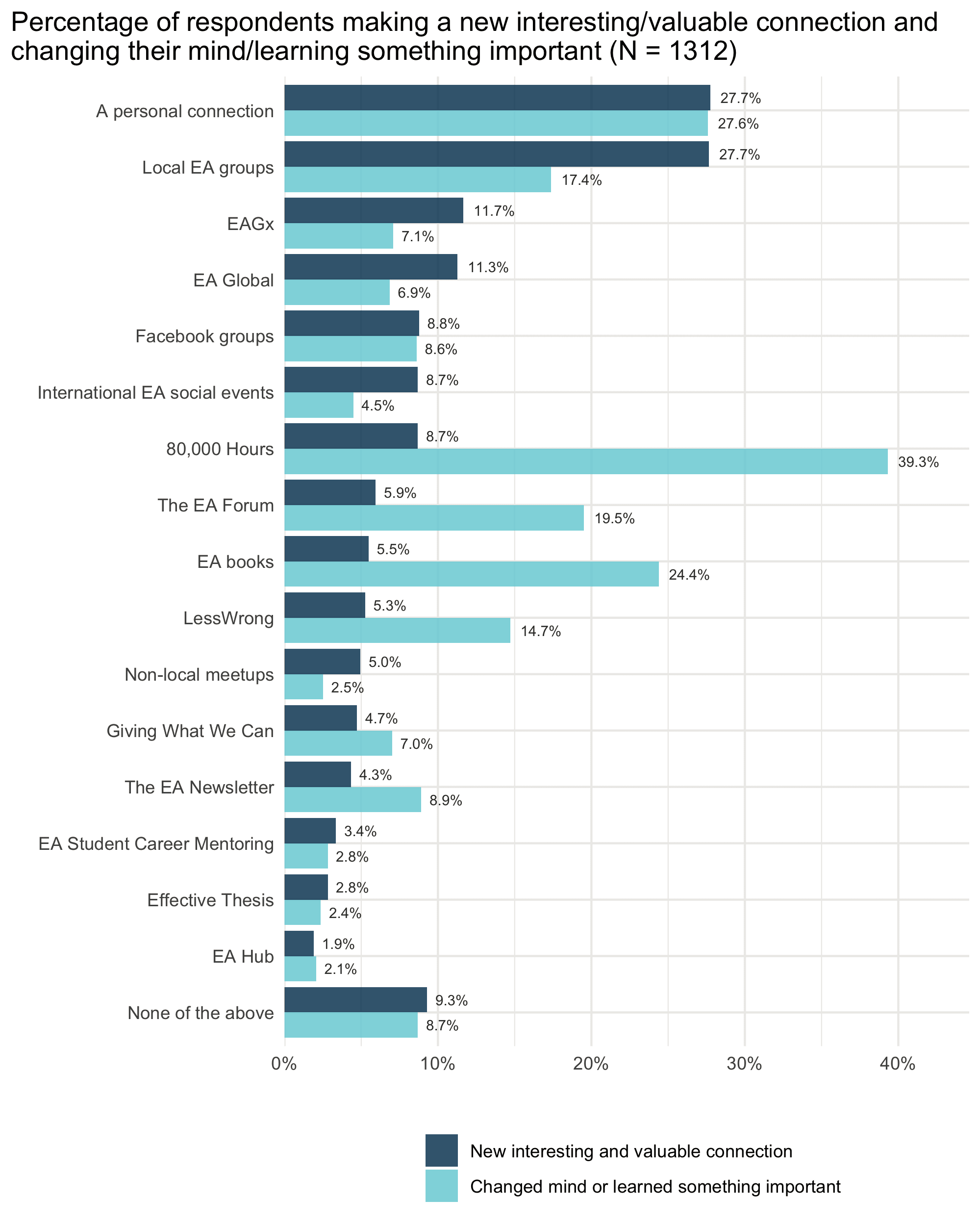

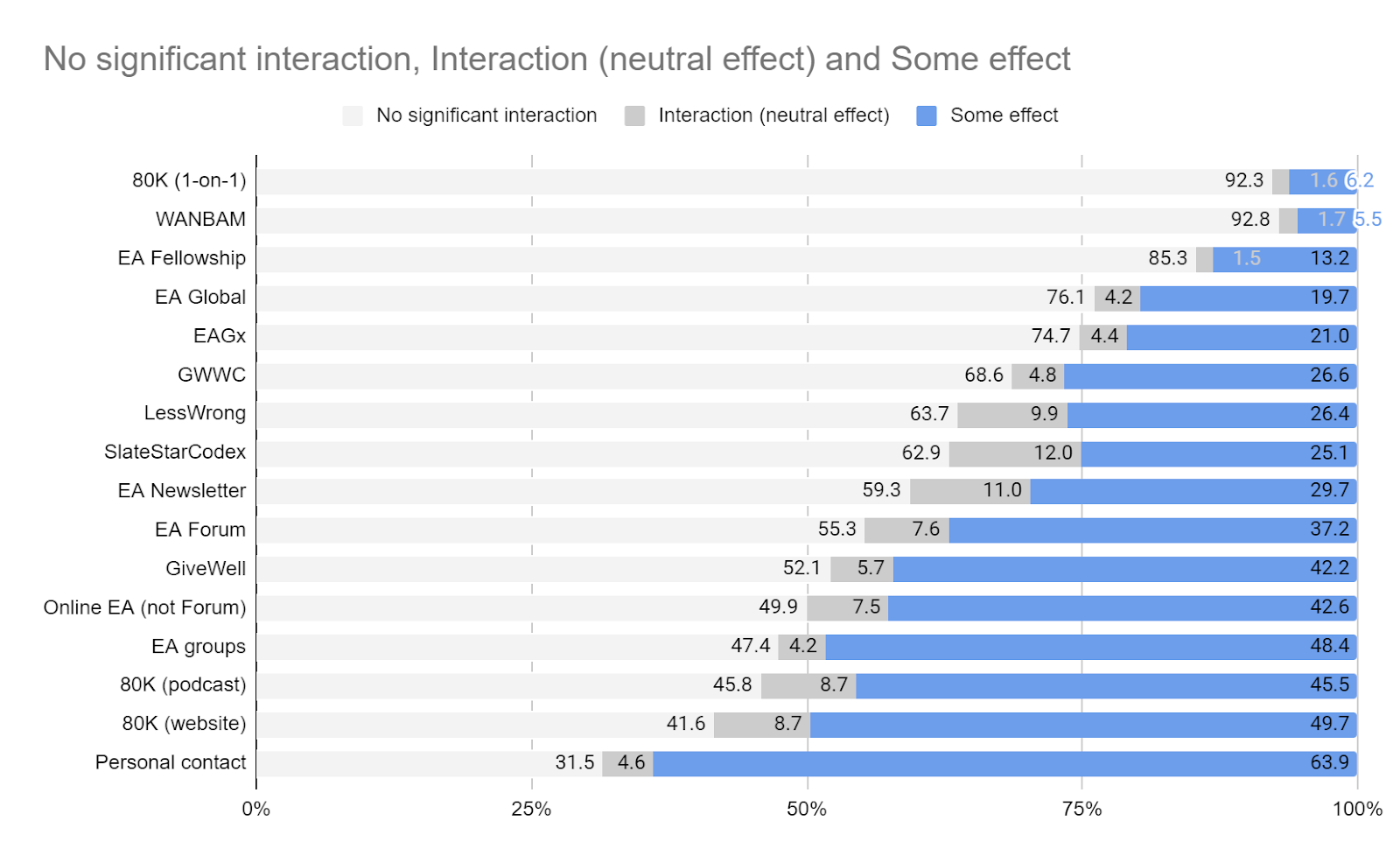

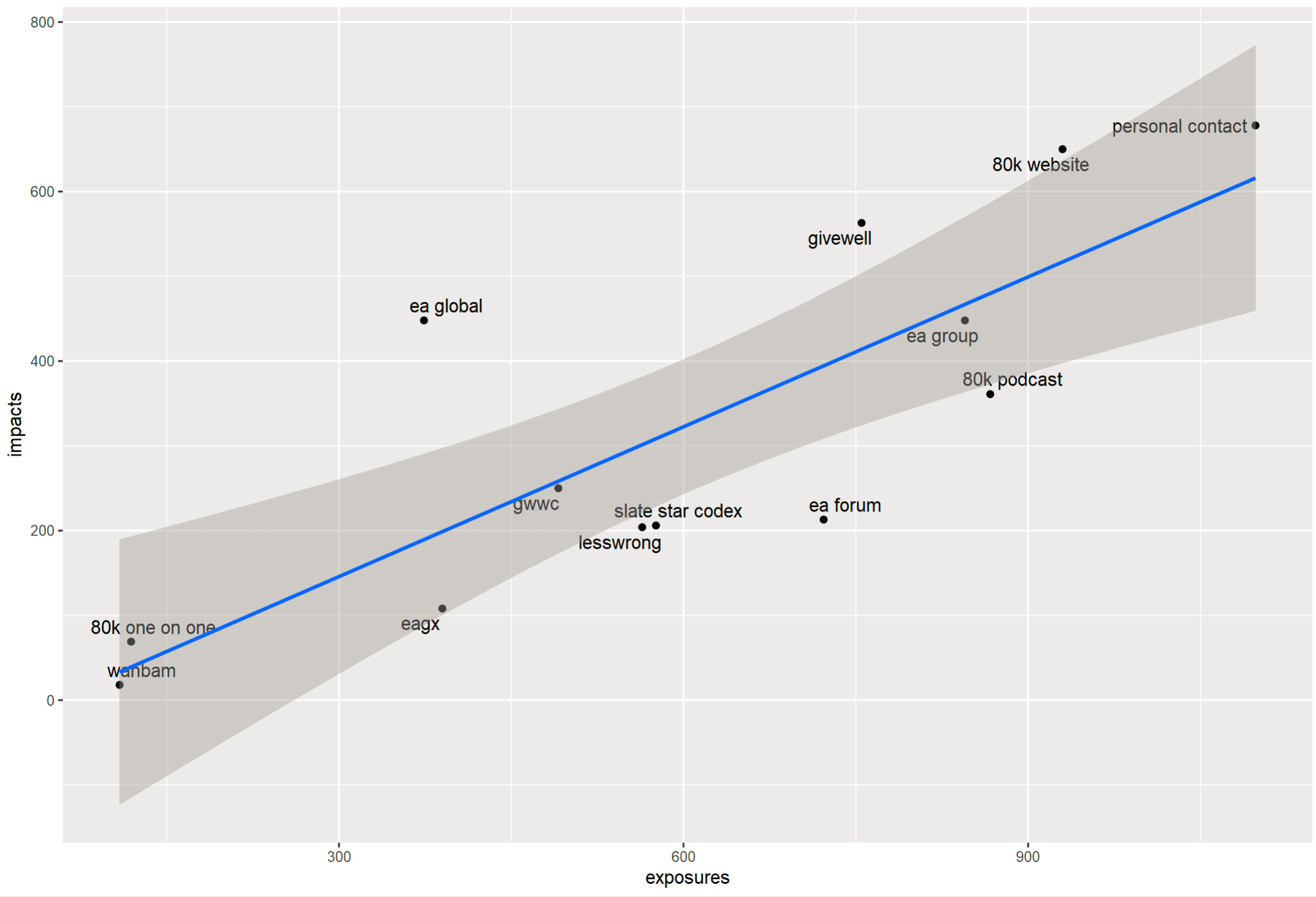

That said, providing opportunities for learning might not be the comparative advantage of events. Information about EA-aligned career opportunities and ideas can be found online (e.g. via the 80,000 Hours website, the 80,000 Hours podcast[3], the EA Forum or the many EA newsletters), whereas in-person events are one of the few ways EA community members can meet each other in person and build their network. This weakly suggests that connections might still be the source of value event organisers should focus on.

My thanks to Callum Calvert, Jona Glade, Michel Justen, Sophie Thomson, Oscar Howie, Ben West, Eli Nathan, Ivan Burduk and Amy Labenz for comments and feedback.

- ^

Specifically, we asked how many new connections attendee made, “How were these new connections valuable to you, specifically?” and “What other sources of value did you get from attending the event, which aren’t captured by connections?”.

- ^

My thanks to our virtual assistant, George Go, who actually coded all of the answers with my input and feedback.

- ^

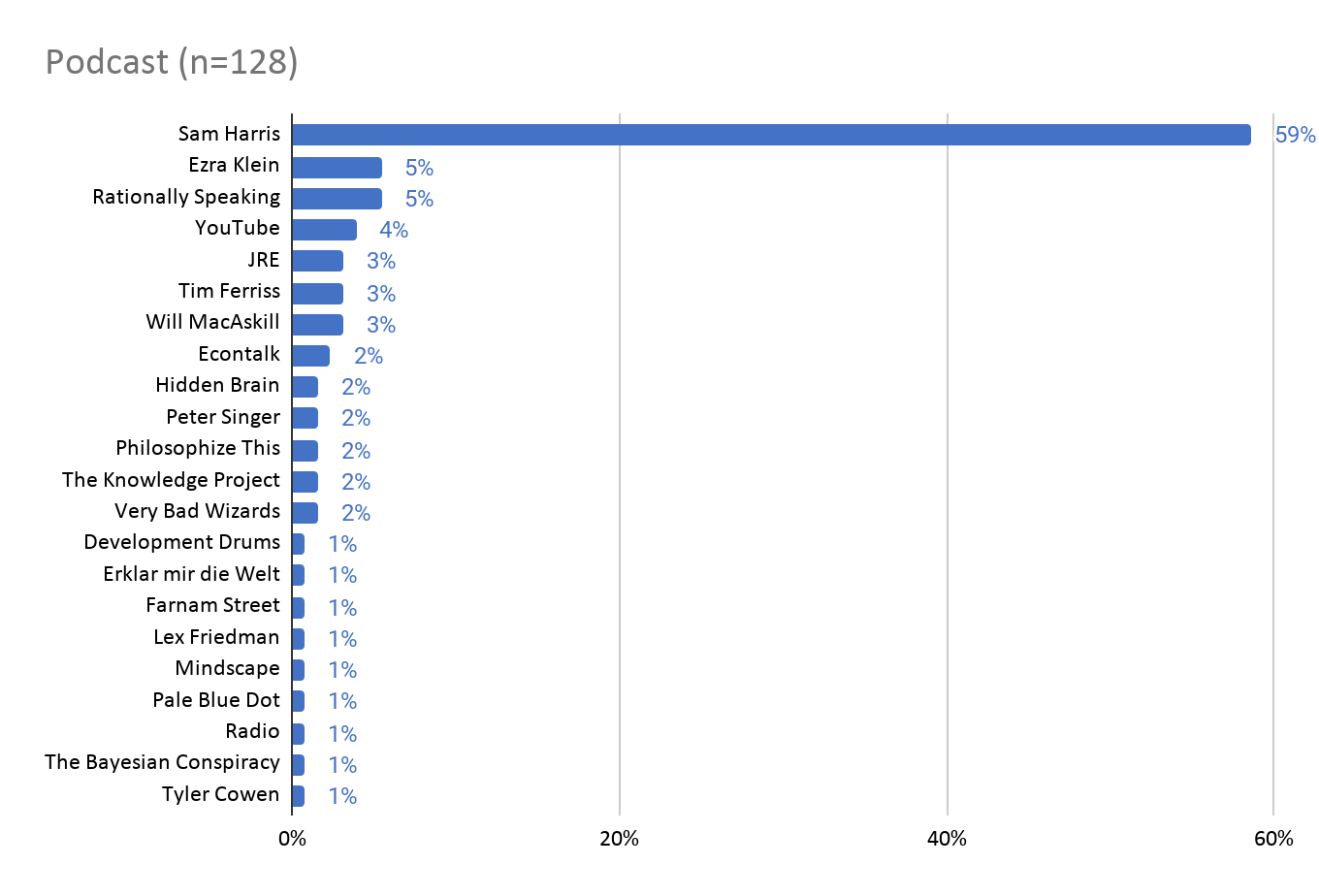

Conflict of interest: my fiancé, Luisa Rodriguez, is a co-host of this podcast.

Some important qualifiers:

We're very excited to accept people with experience in EA cause areas to EA Global and EAGx events, and usually weigh this quite a lot when considering an application. A common type of application that we accept is someone who's working in an EA cause area and is curious to learn more about the community.

It's true that we ask about engagement with EA, but that isn't the only thing we consider, far from it.