(Probably the most important post of this sequence.)

(This post also has a Russian version, translated from the present original by K. Kirdan.)

Summary: Some values are less adapted to the “biggest potential futures”[1] than others (see my previous post), in the sense that they may constrain how one should go about colonizing space, making them less competitive in a space-expansion race. The preference for reducing suffering is one example of a preference that seems particularly likely to be unadapted and selected against. It forces the suffering-concerned agents to make trade-offs between preventing suffering and increasing their ability to create more of what they value. Meanwhile, those who don’t care about suffering don’t face this trade-off and can focus on optimizing for what they value without worrying about the suffering they might (in)directly cause. Therefore, we should – all else equal – expect the “grabbiest” civilizations/agents to have relatively low levels of concern for suffering, including humanity (if it becomes grabby). Call this the Upside-focused Colonist Curse (UCC). In this post, I explain this UCC dynamic in more detail using an example. Then, I argue that the more significant this dynamic is (relative to competing others), the more we should prioritize s-risks over other long-term risks, and soon.

The humane values, the positive utilitarians, and the disvalue penalty

Consider the concept of disvalue penalty: the (subjective) amount of disvalue a given agent would have to be responsible for in order to bring about the highest (subjective) amount of value they can. The story below should make what it means more intuitive.

Say there are only two types of agents:

- those endorsing “humane values” (the HVs) who disvalue suffering and value things like pleasure;

- the “positive utilitarians” (the PUs) who value things like pleasure but disvalue nothing.

These two groups are in competition to control their shared planet, or solar system, or light cone, or whatever.

The HVs estimate that they could colonize a maximum of [some high number] of stars and fill those with a maximum of [some high number] units of value. However, they also know that increasing their civilization’s ability to create value also increases s-risks (in absolute terms).[2] They, therefore, face a trade-off between maximizing value and preventing suffering, which incentivizes them to be cautious with regard to how they colonize space. If they were to purely optimize for more value without watching for the suffering they might (directly or indirectly) become responsible for, they predict they would cause x units of suffering for every 10 units of value they create.[3] This is the HVs’ disvalue penalty: x/10 (which is a ratio; a high ratio means a heavy penalty).

The PUs, however, do not care about the suffering they might be responsible for. They don’t face the trade-off the HVs face and have no incentive to be cautious like them. They can – right away – start colonizing as many stars as possible to eventually fill them with value, without worrying about anything else. The PUs’ disvalue penalty is 0.
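To make the ratio concrete, here is a minimal Python sketch of the bookkeeping in the story above. The function names, the `concern_for_suffering` weight (1 for the HVs, 0 for the PUs), and the sample value x = 3 are hypothetical and chosen only for illustration; the story itself only fixes x > 0 and the 10 units of value in the denominator.

```python
def disvalue_penalty(suffering_per_batch, concern_for_suffering, value_per_batch=10.0):
    """Subjective disvalue incurred per unit of value created.

    suffering_per_batch: units of suffering caused per batch of value (the "x" in the story).
    concern_for_suffering: hypothetical weight an agent puts on suffering (HVs: 1, PUs: 0).
    value_per_batch: units of value per batch (10 in the story).
    """
    if value_per_batch <= 0:
        raise ValueError("value_per_batch must be positive")
    if suffering_per_batch < 0 or concern_for_suffering < 0:
        raise ValueError("suffering and concern must be non-negative")
    return concern_for_suffering * suffering_per_batch / value_per_batch


def net_subjective_utility(value_created, penalty):
    """Value created minus the subjective disvalue that comes with it."""
    return value_created * (1.0 - penalty)


if __name__ == "__main__":
    x = 3.0  # hypothetical: 3 units of suffering per 10 units of value
    hv_penalty = disvalue_penalty(x, concern_for_suffering=1.0)
    pu_penalty = disvalue_penalty(x, concern_for_suffering=0.0)
    for name, penalty in (("HV", hv_penalty), ("PU", pu_penalty)):
        print(name, penalty, net_subjective_utility(100.0, penalty))
```

Under these assumptions, the same reckless expansion is worth less to the HVs than to the PUs, which is the sense in which a heavier penalty gives an agent more reason to slow down.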

Image 1: Niander Wallace, a character from Blade Runner 2049 who can be thought of as a particularly baddy PU.[4]

Because they have a higher disvalue penalty (incentivizing them to be more cautious), the humane values are less “grabby” than those of the PUs. While the PUs can happily spread without fearing any downside, the HVs would want to spend some time and resources thinking about how to avoid causing too much suffering while colonizing space (and about whether it’s worth colonizing at all), since suffering would hurt their total utility. This means, according to the Grabby Values Selection Thesis, that we should – all else equal – expect PU-ish values to be selected over HV-ish values in the space colonization race.[5] Obviously, if there were values prioritizing suffering reduction more than the HVs, these would be selected against even more strongly. This is the Upside-focused Colonist Curse (UCC).[6]

The concept of disvalue penalty is somewhat similar to that of alignment tax. The heavier it is, the harder it is for you to win the race. The situation the PUs and the HVs are in, in my story, is analogous to the situation the AI capability and AI safety people are currently in, in the real world.

This UCC selection effect can occur both within a civilization (intra-civ selection; where HVs and PUs are part of the same civilization) and between different civilizations (inter-civ selection; where HVs and PUs are competing civilizations).[7]

Addressing obvious objections

(Mostly relevant to the inter-civ context) But why don’t the suffering-concerned agents simply prioritize colonizing space and actually think about how to best maximize their utility function later, in order not to lose the race against the PUs?

This is what my previous post refers to as the convergent preemptive colonization argument. We'll see that this argument does not apply well to our present example. (See Shulman 2012 for an example in which the argument works better.) It does seem like the HVs could postpone some things, such as working out how to create happy minds without non-trivial incidental suffering, and therefore also postpone actually creating them. However, …

- this, of course, conditions on them being patient enough.

- they can’t similarly delay reducing all kinds of s-risks. The idea of prioritizing space colonization and postponing suffering prevention may be highly similar to that of prioritizing AI capabilities and postponing AI safety. Once the former is prioritized, it may be too late for the latter. A few examples:

  - they can’t delay preventing disvalue from conflict with other agents (see Clifton 2019; Sandberg 2021). They need to think about this before meeting them so they’ll spend time/resources thinking about this instead of colonizing sooner/faster.

  - they also can’t delay preventing suffering subroutines/simulations (see Tomasik 2015) that might be instrumentally useful during the colonization process. They also need to think about this beforehand.

  - the HVs should be more concerned (relative to the PUs) about the possibility of their values drifting or being hacked (by, e.g., a malevolent actor), since this might result in hyper-catastrophic near-misses (see Tomasik 2019; Leech 2022) from their perspective. The HVs have more to lose than the PUs, such that they would want to spend time and resources on preventing this early on.

- in our current world, concern for suffering seems to correlate with skepticism and uncertainty regarding the value of colonizing space. This correlation is so strong that it might be hard to select against the latter without selecting against the former. And while this might seem specific to today’s humans, it is arguably a tendency that is somewhat generalizable to our successors and aliens.

- the HVs’ concern for suffering may very well be asymmetric on the action-omission axis, such that they’re more worried about the suffering they might create than the suffering they might prevent, making them less bullish on space colonization.

Note that all those points are disjunctive. You don’t need to buy them all to agree with my general claim.

(Mostly relevant to the intra-civ context) But why don’t the suffering-concerned agents try to join the PUs in their effort to colonize space and push for more cautiousness instead of trying to beat them?

They will likely try that, indeed. But we should expect them to be progressively selected against since they drag down the PU project. The few HVs who might manage not to value drift and not to get kicked out of the group of PUs driving colonization efforts are those who are complacent and not pushing really hard for more cautiousness.

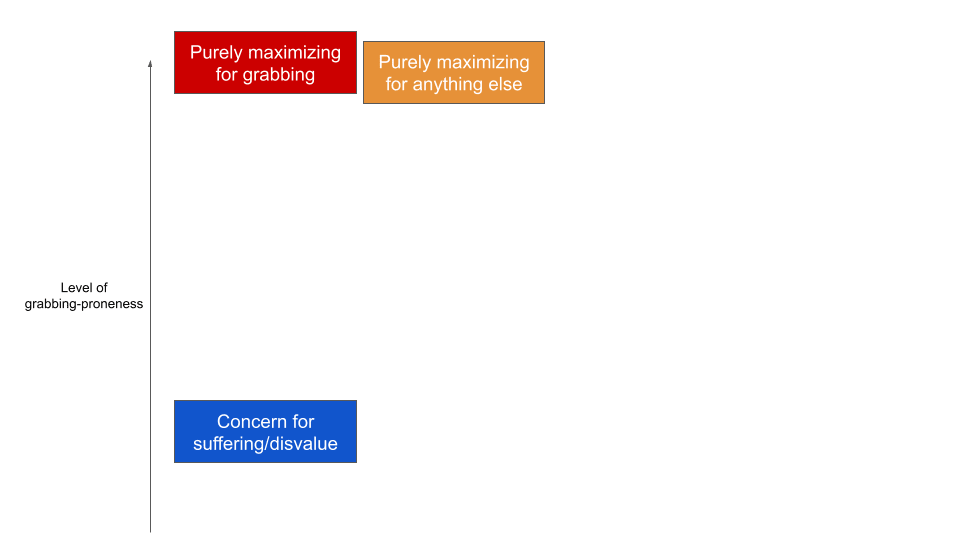

If the Upside-focused Colonist Curse is real, then so too is the selection effect against anything that differs from “let’s just grab as much as possible and nothing else matters”.

Yes, that is (technically) true. However, the selection effect against concern for suffering/disvalue seems much stronger than the selection effect against PU-ish values. In fact, Carl Shulman (2012) argues that the selection effect against PU-ish values is very small. And although I find some of his assumptions poorly backed up, I think the considerations he raises make a lot of sense. Disvaluing something, however, seems to be a notable constraint, especially when this something is suffering, i.e., a thing that might be brought about for quite a few different reasons (see my response to the first objection). Value systems that demand the minimization of suffering seem therefore more likely to be selected against.

Figure 1: Vague illustration of my intuitions regarding how grabby different moral preferences are.

Potential implications

I think I have demonstrated that the biggest futures correlate with negligible concern for suffering (i.e., that UCC is a thing), all else equal. Obviously, all else is probably not equal. The extent to which UCC is decisive relative to other dynamics seems to mainly depend on the strength of the (broader) grabby values selection effect, which I briefly and roughly try to assess in a section of my previous post.

In the present section, however, I want to draw some implications from UCC, assuming it is somewhat of a decisive factor.

The values of aliens may not be worse than those of our successors, which reduces the importance of (certain ways of) reducing X-risks

Brauner and Grosse-Holz (2018, Part 2.1) argue that “[w]hether (post-)humans colonizing space is good or bad, space colonization by other agents seems worse”, and that this pushes in favor of prioritizing X-risk reduction.

As they explain:

If humanity goes extinct without colonizing space, some kind of other beings would likely survive on earth. These beings might evolve into a non-human technological civilization in the hundreds of millions of years left on earth and eventually colonize space. Similarly, extraterrestrials (that might already exist or come into existence in the future) might colonize (more of) our corner of the universe, if humanity does not.

They then claim that “our reflected preferences likely overlap more with a (post-)human civilization than alternative civilizations.” While this seems likely true in small futures where civilizations are not very grabby, the Grabby Values Selection Thesis suggests that the grabbiest civilizations converge on values that are particularly expansion-conducive. More specifically, UCC suggests that we should expect them to have little concern for suffering, independently of whether they are human or alien. Therefore, it seems that Brauner and Grosse-Holz’s claim is false in humanity's biggest futures, conditional on…

- sentience being a convergent feature among different civilizations, such that there is no strong reason to assume grabby aliens are less likely – than grabby humans – to value things like pleasure.

- us – people considering this argument – being impartial (see MacAskill 2019). We don’t make arbitrary discriminations like (dis)valuing X more on the sole pretext that X has been caused by humans rather than by other agents.

While I 100% endorse the second condition,[8] the first is not obviously true, which means that Brauner and Grosse-Holz’s (2018, Part 2.1) above argument still holds to some extent. All else equal, grabby humans might be somewhat more likely to spread things like pleasure than the average grabby alien civ.

Moreover, even assuming the grabbiest aliens and the grabbiest humans have very similar values, one may argue that it is still preferable not to wait for aliens to spread something potentially valuable that humanity could have spread sooner (assuming the expected value of humanity’s future impact is positive). The importance of this consideration depends on how delayed the colonization of our corner of the universe is, in expectation, if it is done by another civilization than humanity.

However, I think UCC may seriously dampen the importance of reducing X-risks. In expectation, grabby humans and grabby aliens still seem somewhat likely to create things that are similarly (dis)valuable, which undermines quite a big crux for prioritizing (some)[9] X-risks (see Guttman 2022; Tomasik 2015; Aird 2020).

Present longtermists may have a strong comparative advantage in reducing s-risks

This one is pretty obvious. We’ve argued that in the worlds with the most stakes, upside-focused agents are selected for, such that we should – all else equal – expect (or act as if) those who will control our corner of the universe to care about things like pleasure much more than about suffering. In expectation, this means that while we can – to some extent – count on our descendants and/or aliens to create things that we (or our reflected preferences) would find valuable, we can’t count on them to avoid s-risks. While s-risks are already highly neglected considering only present humans (see Baumann 2022; Gloor 2023), this neglectedness may very well notably increase in the far future.

And I doubt that any longtermist thinks the importance and tractability of s-risk reduction are low enough (see, e.g., Gloor 2023 for arguments against that) to endorse not doing anything about this expected increased neglectedness.

Therefore, longtermists might want to increase the extent to which they prioritize reducing s-risks (in long-lasting ways), by e.g., coming up with effective safeguards against near misses to spare upside-focused AIs the high opportunity cost of preventing suffering.

As a side note, I haven’t yet really thought about whether – or how – the Upside-focused Colonist Curse consideration should perhaps change s-risks researchers’ priorities (see, e.g., the research agendas of CLR and CRS), but that might be a promising research question to work on.

Conclusion

To the extent that UCC is a likely implication of the Grabby Values Selection Thesis, it seems almost trivially true.

However, I am deeply uncertain regarding its significance and therefore whether it is a strong argument for prioritizing s-risks over other long-term risks. Is there any crucial consideration I’m missing? For instance, are there reasons to think agents/civilizations that care about suffering might – in fact – be selected for and be among the grabbiest? How could we go about Fermi-estimating the strength of the selection effect? Thoughts are more than welcome!

Also, if UCC is decisively important, does that imply anything else than “today’s longtermists might want to prioritize s-risks”? What do you think?

More research is needed here. Please reach out if you’d be interested in trying to make progress on these questions!

Acknowledgment

Thanks to Robin Hanson, Maxime Riché, and Anthony DiGiovanni for our insightful conversations around this topic. Thanks to Antonin Broi for valuable feedback on an early draft. Thanks to Michael St. Jules, Charlie Guthmann, James Faville, Jojo Lee, Euan McLean, Timothy Chan, and Matīss Apinis for helpful comments on later drafts. I also benefited from being given the opportunity to present my work on this topic during a retreat organized by EA Oxford (thanks to the organizers and the retreat attendees who took part in the discussion).

Most of my work on this sequence so far has been funded by Existential Risk Alliance.

All assumptions/claims/omissions are my own.

[1]

I.e., the futures with the largest magnitude in terms of what we can create/affect (see MacAskill 2022, Chapter 1).

[2]

This seems generally true, in our world, even if you replace s-risks with some other things a given agent disvalues. In general, you can’t increase your ability to create what you value without non-trivially increasing the risk of (directly or indirectly) bringing about more of what you disvalue (i.e., as long as you disvalue something, you have a non-zero disvalue penalty). For instance, say Bob values buildings and disvalues trees. It might seem like it is quite safe for him to start colonizing space (with the aim of eventually creating a bunch of buildings), without taking any non-trivial risk of becoming responsible for the creation of more trees. But, there are at least two reasons to think Bob has a non-negligible disvalue penalty. First, errors or value drift might lead to a catastrophic near miss (see Tomasik 2019, Leech 2022). Second, Bob may very well not be the only powerful agent there is around, such that his expansion straightforwardly increases the chance of triggering a conflict with other agents or civilizations. Such conflict could become catastrophic by bringing about what the involved agents disvalue (see Clifton 2019; Sandberg 2021).

And while Bob’s expansion involves a non-trivial risk of tree creation, the risk of the HVs’ expansion causing (indirectly/incidentally) suffering may be greater, for three reasons. First, negative reinforcement may be instrumentally useful to the accomplishment of many tasks (unlike tree creation). Second, a catastrophic near-miss seems much more likely with humane values than with Bob’s, since pleasure-like sources of value and suffering are much closer than trees and buildings in the space of all things one can create. Third, the technologies enabling the creation of more value might empower potential sadistic/retributivist actors willing to create suffering.

One counter-consideration is that the more the HVs colonize space, the more they might be able to reduce potential non-anthropogenic suffering (see Vinding and Baumann 2021). However, bringing about suffering seems far easier than reducing the suffering brought about by other civilizations, such that we should still expect the HVs' expansion to increase s-risks overall.

[3]

Where x > 0, and where the value-disvalue metric is built such that 1 unit of value subjectively outbalances 1 unit of suffering.

[4]

“You love pain. Pain reminds you the joy you felt was real. More joy, then! Do not be afraid. [...] Every leap of civilization was built on the back of a disposable workforce, but I can only make so many.” Thanks to Euan McLean for bringing this interesting fictional example to my attention.

[5]

And if there are Wallace-like PUs (who also value the increased s-risks/suffering due to their expansion/progress, because “it is part of life!” or “the existence of hell makes us appreciate heaven more!” or something), we may expect them to be even more strongly selected for. Suffering might not only become something no one cares about but potentially even something valued.

[6]

UCC is somewhat similar to general potential dynamics that have already been described by others. From my post #2 in this sequence: ‘Nick Bostrom (2004) explores “scenarios where freewheeling evolutionary developments, while continuing to produce complex and intelligent forms of organization, lead to the gradual elimination of all forms of being that we care about.” Paul Christiano (2019) depicts a scenario where “ML training [...] gives rise to “greedy” patterns that try to expand their own influence.” Allan Dafoe (2019; 2020) coined the term “value erosion” to illustrate a dynamic where “[j]ust as a safety-performance tradeoff, in the presence of intense competition, pushes decision-makers to cut corners on safety, so can a tradeoff between any human value and competitive performance incentivize decision makers to sacrifice that value.”’ My claim, however, is weaker and less subject to controversy than theirs. The dynamic I describe is one where concern for disvalue (i.e., one specific bit of our moral preferences) is selected against, while the dynamics they describe are ones where our moral preferences, as a whole, are gradually eliminated. The reason why my claim is weaker is that concern for suffering is obviously less adaptive to space colonization races than things like caring about “human flourishing”. For instance, preferences for filling as many stars as possible with value are much more competitive, in this context, than preferences for reducing s-risks. To be clear, the former might also be selected against to the extent that it might be less adaptive than things like pure intrinsic preferences for spreading as much as possible, but concern for suffering is far less competitive and therefore far more likely to be selected against. I expand a bit on this in my response to the third objection in the next section.

[7]

See my previous post for more detail on intra-civ vs inter-civ selection.

[8]

Brauner and Grosse-Holz (2018, Part 2.1) suggest that we should find one’s values “worse” if they don’t “depend on some aspects of being human, such as human culture or the biological structure of the human brain”. I 100% disagree with this and appreciate their footnote stating that “[s]everal people who read an early draft of [their] article commented that they would imagine their reflected preferences to be independent of human-specific factors.”

[9]

Only “some”? Indeed, this – in some cases – doesn’t apply to AGI misalignment, insofar as a misaligned AGI might not only prevent humanity from colonizing space but also accumulate resources and prevent the emergence of other civilizations, and/or slow down grabby aliens by “being in their way” and forcing them to accept moral compromises while the aliens could have simply filled our corner of the universe with whatever they value if the misaligned AI wasn’t there.

I enjoyed this post, thanks for writing it.

I think I buy your overall claim in your “Addressing obvious objections” section that there is little chance of agents/civilizations who disvalue suffering (hereafter: non-PUs) winning a colonization race against positive utilitarians (PUs). (At least, not without causing equivalent expected suffering.) However, my next thought is that non-PUs will generally work this out, as you have, and that some fraction of technologically advanced non-PUs—probably mainly those who disvalue suffering the most—might act to change the balance of realized upside- vs. downside-focused values by triggering false vacuum decay (or by doing something else with a similar switching-off-a-light-cone effect).

In this way, it seems possible to me that suffering-focused agents will beat out PUs. (Because there’s nothing a PU agent—or any agent, for that matter—can do to stop a vacuum decay bubble.) This would reverse the post’s conclusion. Suffering-focused agents may in fact be the grabbiest, albeit in a self-sacrificial way.

(It also seems possible to me that suffering-focused agents will mostly act cooperatively, only triggering vacuum decays at a frequency that matches the ratio of upside- vs. downside-focused values in the cosmos, according to their best guess for what the ratio might be.[1] This would neutralize my above paragraph as well as the post's conclusion.)

My first pass at what this looks like in practice, from the point of view of a technologically advanced, suffering-focused (or perhaps non-PU more broadly) agent/civilization: I consider what fraction of agents/civilizations like me should trigger vacuum decays in order to realize the cosmos-wide values ratio. Then, I use a random number generator to tell me whether I should switch off my light cone.

Additionally, one wrinkle worth acknowledging is that some universes within the inflationary multiverse, if indeed it exists and allows different physics in different universes, are not metastable. PUs likely cannot be beaten out in these universes, because vacuum decays cannot be triggered. Nonetheless, this can be compensated for through suffering-focused/non-PU agents in metastable universes triggering vacuum decays at a correspondingly higher frequency.
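The decision rule described in the last two paragraphs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `downside_share` (the agent's best guess at the share of downside-focused values in the cosmos) and `metastable_share` (the share of relevant universes in which a decay can be triggered at all) are hypothetical parameters, and dividing one by the other is just one reading of "a correspondingly higher frequency".

```python
import random


def trigger_probability(downside_share, metastable_share=1.0):
    """Per-agent probability of triggering, so the aggregate matches the target share.

    downside_share: hypothetical best guess, between 0 and 1, of the share of
        downside-focused values in the cosmos.
    metastable_share: hypothetical share of universes that are metastable,
        i.e. where triggering is possible at all (greater than 0, at most 1).
    """
    if not 0.0 <= downside_share <= 1.0:
        raise ValueError("downside_share must be in [0, 1]")
    if not 0.0 < metastable_share <= 1.0:
        raise ValueError("metastable_share must be in (0, 1]")
    # Agents in metastable universes compensate for those who cannot act,
    # but a probability can never exceed 1.
    return min(1.0, downside_share / metastable_share)


def should_trigger(probability, rng):
    """Draw once from the random number generator and compare to the target probability."""
    return rng.random() < probability


if __name__ == "__main__":
    p = trigger_probability(downside_share=0.2, metastable_share=0.5)
    print(p, should_trigger(p, random.Random()))
```

The cap at 1 also shows a limit of the compensation idea: if metastable universes are rare enough relative to the target share, even always triggering cannot make up the difference.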

Thanks Will! :)

I think I haven't really thought about this possibility.

I know nothing about how things like false vacuum decay work (thankfully, I guess), about how tractable it is, or about how the minds of the agents who would work on trying to trigger it operate. And my immediate impression is that these things matter a lot to whether my responses to the first two "obvious objections" sort of apply here as well and to whether "decay-conducive values" might be competitive.

However, I think we can at least confidently say that -- at least in the intra-civ selection context (see my previous post) -- a potential selection effect non-trivially favoring "decay-conducive values", during the space colonization process, seems much less straightforward and obvious than the selection effect progressively favoring agents that are more and more upside-focused (on long-time scales with many bits of selection). The selection steps are not the same in these two different cases and the potential dynamic that might lead decay-conducive values to take over seems more complex and fragile.

Yep, I was about to comment on the same thing. Would like to see what OP has to say

Interesting!

A similar process seems to be at the root of why our political system is deeply flawed and few people are satisfied with it.

It's because politicians who want to contribute to the common good are less competitive compared to those who lie, use fallacies, or keep things secret.

The article is here https://siparishub.medium.com/a-new-strategy-for-ambitious-environmental-laws-e4a403858fbd

Here's a similar article on why the economic system just isn't geared to solve the environmental crisis: https://siparishub.medium.com/the-economic-system-is-an-elm-1072fe3399bf

(the full series is here)

Hi Jim, thanks for this post — I enjoyed reading it!

I agree that the Upside-Focused Colonist Curse is an important selection effect to keep in mind. Like you, I'm uncertain as to just how large of an effect it'll turn out to be, so I'm especially excited to see empirical work that tries to estimate its magnitude. Regardless, though, I'm glad that people are working on this!

I wanted to push back somewhat, though, on the first potential implication that you draw out — that the existence of the UCC diminishes the importance of x-risk reduction, since on account of the UCC, whether humans control their environment (planet, solar system, lightcone, etc.) is unlikely to matter significantly. As I understand it, the argument goes like this:

While I agree that the existence of selection effects on our future values diminishes the importance of x-risk reduction somewhat, I think (4) is far too strong of a conclusion to draw. This is because, while I'm happy to grant (1) and (2), (3) seems unsupported.

In particular, for (3) to go through, it seems like it would need to be the case that (a) selection pressures in favor of grabby values are very strong, such that they are "major players" in determining the long-term trajectory of a civilization's values; and (b) under the influence of these selection pressures, civilizations beginning with very different value systems converge on a relatively small region in "values space", such that there aren't morally significant differences between the values they converge on. I find both of these relatively implausible:

These points aside, though, I want to reiterate that I think the main point of the post is very interesting and a potentially important consideration — thanks again for the post!

I like this thought but to push back a bit - nearly every species we know of is incredibly selfish or at best only cares about their very close relatives. Sure, crabs are way different than lions but OP is describing a much lower dimension, which seems more likely to generalize regardless of context.

If you asked me to predict what (animal) species live in the rainforest just by showing me a picture of the rainforest I wouldn't have a chance. If you asked me if the species in the rainforest would be selfish or not that would be significantly easier. For one, it's easier to predict one dimension than all the dimensions, and second, some dimensions we should expect to be much less elastic to the set of possible inputs.

I think this is phrased incorrectly. I think the correct phrasing is:

3. If humans become grabby, their values (in expectation) are ~ the mean values of a grabby civilization.

Not sure if it's what you meant, but let me explain the difference with an example. Let's say there are three societies:

[Humans | Zerg | Protoss]

For simplicity, let's say the winner takes all of the lightcone.

Then, if humans become grabby, their values are guaranteed to differ from those of whoever else would have won, yet for utilitarian purposes we don't care, because the expected value is the same, given that we don't know whether the Zerg or the Protoss would win.

I think you might have meant this? But it's somewhat important to distinguish because my updated (3) is a weaker claim than the original one, yet still enough to hold the argument together.

Yes, agreed — thanks for pointing this out!

"It forces the suffering-concerned agents to make trade-offs between preventing suffering and increasing their ability to create more of what they value. Meanwhile, those who don’t care about suffering don’t face this trade-off and can focus on optimizing for what they value without worrying about the suffering they might (in)directly cause."

Hi Jim, is it really the case that spending effort preventing suffering harms your ability to spread your values? Take religious people: they spend much effort doing religion stuff which is not exactly for the purpose of optimizing their survival, yet religious people also tend to be happier, healthier, and have more children than non-religious people (no citation, seems true though if all else equal).

Could it be the case that those who don't optimize for reducing suffering, instead of optimizing for spreading their values, optimize for or do something else that decreases the likelihood of their values spreading?

Also, why now? Why haven't we already reached, or come close to, an equilibrium between reducing suffering and everything else, since selection pressures have been around for a long time?

Thanks for the comment!

Right now, in rich countries, we seem to live in an unusual period Robin Hanson (2009) calls "the Dream Time". You can survive valuing pretty much whatever you want, which is why there isn't much selection pressure on values. This likely won't go on forever, especially if humanity starts colonizing space.

(Re religion. This is anecdotal, but since you brought up this example: in the past, I think religious people would have been much less successful at spreading their values if they were more concerned about the suffering of the people they were trying to convert. The growth of religion was far from being a harm-free process.)

Interesting and nice to read!

Do you think the following is right?

The larger the Upside-focused Colonist Curse, the fewer resources agents caring about suffering will control overall and the smaller the risks of conflicts causing S-risks?

This may balance out the effect that the larger the Upside-focused Colonist Curse, the more neglected S-risks are.

High Upside-focused Colonist Curse produces fewer S-risks at the same time as making them more neglected.

Thanks, Maxime! This is indeed a relevant consideration I thought a tiny bit about, and Michael St. Jules also brought that up in a comment on my draft.

First of all, it is important to note that UCC affects the neglectedness -- and potentially also the probability -- of "late s-risks", only (i.e., those that happen far away enough from now for the UCC selection to actually have the time to occur). So let's consider only these late s-risks.

We might want to differentiate between three different cases:

1. Extreme UCC (where suffering is not just ignored but ends up being valued, as in the scenario I depict in this footnote). In this case, all kinds of late s-risks seem not only more neglected but also more likely.

2. Strong UCC (where agents end up being roughly indifferent to suffering; this is the case your comment assumes I think). In this case, while all kinds of late s-risks seem more neglected, late s-risks from conflict seem indeed less likely. However, this doesn't seem to apply to (at least) near-misses and incidental risks.

3. Weak UCC (where agents still care about suffering but much less than we do). In this case, same as above, except perhaps for the "late s-risks from conflict" part. I don't know how weak UCC would change conflict dynamics.

The more likely we think #2 is relative to #1 and #3, the more your point applies, I think (with the above caveat on near-misses and incidental risks). I may well have missed something, though. It's a bit complicated.

Thank you so much for thinking this through and posting this. It makes a lot of sense, and it's concerning.

Thanks a lot, Alene! That's motivating :)

David Deutsch makes the argument that long-term success in knowledge-creation requires commitment to values like tolerance, respect for the truth, rationality and optimism. The idea is that if you do not have such values you end up with a fixed society, with dogmatic ideas and institutions that are not open to criticism, error-correction and improvement. Errors will inevitably accumulate and you will fail to create the knowledge necessary to achieve your goals.

On this view, grabby aliens need values that permit sustained knowledge growth to meet the challenges of successful long-term expansion. An error-correcting society would make moral as well as scientific progress, and so would either value reducing suffering or have a good moral explanation as to why reducing suffering isn't optimal.

This is somewhat like a variation of the Instrumental Convergence Thesis, whereby agents will tend to converge on various Enlightenment values because they are instrumental in knowledge creation, and knowledge-growth is necessary for successfully reaching many final goals.

Here are two relevant quotations about alien values from a talk David Deutsch gave on optimism.

Fantastic post/series. The vocab words have been especially useful to me. A few mostly disjunctive thoughts, even though I overall agree.

I will give you two arguments in the opposite direction:

1. We are the most moral of all living species, and we are the dominant one. Morality implies resources for social coordination.

2. We expect advanced societies to be right in Linear Algebra, so why not in Ethics?

Thanks for giving arguments pointing the other way! I'm not sure #1 is relevant to our context here, but #2 is definitely worth considering. In the second post of the present sequence, I argue that something like #2 probably doesn't pan out, and we discuss an interesting counter-argument in this comment thread.

Morality does imply resources for social coordination. However, this doesn't imply universal moral values. Your values can still imply neglecting or fighting against an out-group.

For instance, take the way our current industrial society treats non-human animals in factory farms: one could argue that this is deeply immoral, by all accounts, and I'd expect many non-industrial human societies to find it horrific.

So I'm really not certain that we are the most moral civilization.

Another example: companies and the financial system can be argued to be the most powerful actors today - very optimized to be "grabby" and powerful. But few people would deem these structures very moral.

I argue that the capitalist system is the most moral social system ever, among other things because it has become universalist. Humans have created extreme competitive pressure towards social coordination. To some extent this is either “die or convert”. Globalization, Pax Democratica or Human Rights ideology is on the one hand a form of moral circle enlargement and, on the other hand, a kind of Borg.

But given the alternatives, I happily support the Borg…

I'm curious. How exactly do you explain our current treatment of animals, if we are in the most moral social system ever?

(I'm talking about what the majority does, not about the fact that some people here take the topic seriously)

Oh, because all other species and social systems are even worse.

In other beings, the degree of concern for others never extends beyond kin and, at most, the peer group. We have gone in 10,000 years from "kin and tribe" to "social class" and "nation", and we have currently made so much moral progress that you can own equity 10,000 km away from home and still be able to collect the dividend.

Larger and larger reciprocity clusters have developed over centuries, even decades, from NATO to the World Trade Organization. Women (women!) can own property and even rule countries.

We are a few nuclear strikes away from losing everything. But so far, it is amazing how a system of beliefs on beliefs can impose itself so much on the natural brutality of life and matter.

Well, I'd rather argue that the moral circle has widened on some parts (humans) but not on others (animals).

(although I know some people who might disagree - several features of our current industrial civilization would be viewed as pretty immoral by many cultures: widespread inequality, private property since it strengthens inequality, commodification, environmental destruction...)

But when you include animals, I'm unconvinced that other systems are worse. The treatment of factory farmed animals is of a degree of brutality and cruelty rarely heard of in other cultures.

For instance, the number of vegetarians in India has declined over time: this sounds like a lower consideration given to animals.

"But when you include animals, I'm unconvinced that other systems are worse."

The "net impact" of industrial civilization so far, when animals are considered, still looks net negative. But that is compatible with a massive moralization of human behaviour. Simply put, our capabilities have allowed us to exploit those outside the moral circle with incredible efficiency while expanding the moral circle (at incredible speed).

In the 17th century, an empowered and emancipated European civilization was net negative for humans (though net positive for Europe) because it created the transatlantic slave trade. In my view, by the mid-19th century, progressive Western civilization had become "human net positive", and exponentially so; but when animals are included, developed/industrial human civilization (of which the West is today only a part) is still likely "net negative".

None of this changes the fact that empowerment and moralization have grown together at incredible speed over the last 500 years.

Hmm, ok, I can get that.

However, it seems likely to me that a part of the recent improvements you quote were highly linked to the industrial revolution, and that moral progress alone wasn't enough to trigger that. It's easier to get rid of slaves when you have machines replacing manual labour at a cheap price.

Same for feminism - I recently attended a conference in French titled "Will feminism survive a collapse?". It pointed out that mechanization, better medicine and lower child mortality greatly helped feminism. A lot of women went into the workplace, into factories and into universities because a lot of time previously allocated to household chores and child rearing was freed up.

Of course, people fighting for better rights and values did play an important role. But moral progress wasn't enough by itself. Technology changed a lot of things. And access to energy is not guaranteed: https://forum.effectivealtruism.org/posts/wXzc75txE5hbHqYug/the-great-energy-descent-short-version-an-important-thing-ea

For animals, technology with alternative proteins could help, but that's far from certain: https://forum.effectivealtruism.org/posts/bfdc3MpsYEfDdvgtP/why-the-expected-numbers-of-farmed-animals-in-the-far-future.

So I'm not convinced that we'll inevitably have moral progress in the future.

On the topic of slavery, see this paper: https://slatestarcodex.com/Stuff/manumission.pdf

It says that slavery often had only minor significance in most societies. This usually had nothing to do with ethics; rather, slavery is not an efficient economic system. Rome and the Southern US are rather rare cases. Of course, it's more complicated than that. Rome could acquire a lot of slaves, and treat them worse, while it was invading a lot of territory.

Well, in my view the vast majority of moral progress has been triggered by material improvements.

What else could it be? We are not doing moralistic eugenics, are we?

In fact, moral progress is not based on some kind of altruistic impulse, but on the development of reciprocity schemes (often based on punishments) that confer evolutionary advantages (often in the space of geopolitical/economic competition).

I agree with that - but I still don't see why this implies that humans will give a lot of moral value towards animals.

So far, material improvements have worsened the conditions of farmed animals - as a lot of factory farming is not the result of a biological necessity, but is rather done for personal taste. This seems like regress, not progress.

So I don't see why, given the current trajectory, moralization would end up including animals.

Because it is logical, and it will probably become relatively cheaper as we become richer.

I think this is a hopeful but not very inspiring argument…

I'm highly suspicious of this "logical" factor. Humans don't always do logical things - a look at the existence of fast fashion should be enough to be sure of that.

As for "alternative proteins will be cheaper", I fear that's not enough. See this post about why such a position is pretty optimistic: https://forum.effectivealtruism.org/posts/bfdc3MpsYEfDdvgtP/why-the-expected-numbers-of-farmed-animals-in-the-far-future.

Hi Corentin,

I agree it is pretty unclear whether humanity has so far increased/decreased overall welfare, because this is probably dominated by the quite unclear effects on wild animals.

However, FWIW, the way I think about it is that the most moral species is that whose extinction would lead to the greatest reduction in the value of the future (of course, one could define "most moral species" in some other way). I think the extinction of humanity would lead to a greater reduction in the value of the future than that of any other species, so I would say humans are the most moral civilization.

I agree that most of the result ends up depending on the effects on wild animals. Always troublesome that so much of the impact depends on that when we have so many uncertainties.

We probably don't have the same definition - if wild lives are net negative and we destroy everything by accident, I wouldn't count that as being "moral" because it's not due to moral values. The definition doesn't matter that much, though.

Still, I'm not certain that the "value in the future" of industrial civilization (a different concept than humanity) will be so positive, when there are so many uncertainties (and given that we could continue to expand factory farming even further).