Epistemic status: …hang on a second.[1]

It’s common to see posts on the EA Forum (this platform) start with “Epistemic status: [something about uncertainty, or time spent on the post].”

This post tries to do three things:[2]

- Briefly explain what “Epistemic status” means

- Suggest that writers consider hyperlinking the phrase (e.g. to this explainer)

- Discuss why people use “epistemic status”

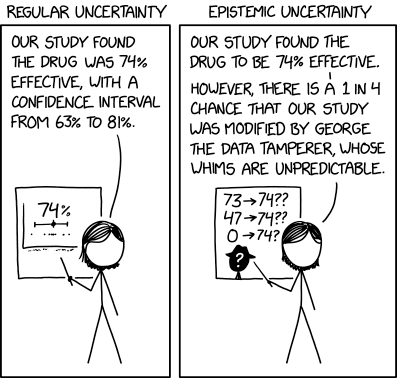

What does “epistemic status” mean?

According to Urban Dictionary:[3]

The epistemic status is a short disclaimer at the top of a post that explains how confident the author is in the contents of the post, how much reputation the author is willing to stake on it, what sorts of tests the thesis has passed.

It should give a reader a sense of how seriously they should take the post.

I think that’s a surprisingly good explanation. Commenters might be able to add to it, in which case I’ll add an elaboration.

A bunch of examples:

- Epistemic status: Pretty confident. But also, enthusiasm on the verge of partisanship

- Epistemic Status: I have worked for 1 year in a junior role at a large consulting company. Most security experts have much more knowledge regarding the culture and what matters in information security. My experiences are based on a sample size of five projects, each with different clients. It is therefore quite plausible that consulting in information security is very different from what I experienced. Feedback from a handful of other consultants supports my views.

- Epistemic status: personal observations, personal preferences extrapolated. Uses one small random sample and one hard data source, but all else is subjective.

- Epistemic status/effort: I spent only around 5 hours on the work test and around 3 hours later on editing/adapting it, though I happened to have also spent a bunch of time thinking about somewhat related matters previously

- Epistemic status: uncertain. I removed most weasel words for clarity, but that doesn't mean I'm very confident. Don’t take this too literally. I'd love to see a cluster analysis to see if there's actually a trend, this is a rough guess.

- Epistemic status (how much you should trust me): Engaging with the Forum is my job, and I ran this by a few people, who all agreed with the argument. One person was surprised that this was an issue. So I’m more confident than usual.

- Epistemic status: Writing off-the-cuff about issues I haven't thought about in a while - would welcome pushback and feedback

- Epistemic status: Divine revelation.

- Epistemic Status: In this post I’m mainly referring to university group community builders. It’s possible that a lot of what I say will still apply to city / country / other groups, but I’m less confident of this. In my problem section, I give some percentage estimates of how much organizers are marketing (defined later) and how much they should be marketing. This is based off of some rough estimates, which I’m not confident in. I’d love to see someone better estimate this or run a survey.

- Epistemic status: a rambling, sometimes sentimental, sometimes ranting, sometimes informative, sometimes aesthetic autobiographical reflection on ten years trying to do the most good.

- Note: epistemic confidence is lower here, as not much time was spent looking into these relative to other areas.

- Epistemic note: I am engaging in highly motivated reasoning and arguing for veg*n.

- Epistemic Status: I am uncertain about these uncertainties! Most of them are best guesses. I could also be wrong about the inconsistencies I've identified. A lot of these issues could easily be considered bike-shedding. [This post also includes: “Effort: This took about 40 hours to research and write, excluding time spent developing Squiggle.”]

Most of these express a lot of uncertainty. I’d be excited to see more posts that lean into their beliefs — if that’s the real position of the author.

Valuable information to list in an epistemic status

Some things I think are especially useful to include, when relevant:

- Biases you might have

- E.g. “I’m funded by the main organization discussed in this post…” or “I’m arguing that this should be a priority area, but it’s also what I specialized in, so there might be some suspicious convergence.”

- This can also include reasons the data you’re using might be biased (e.g. if you’re talking about events, but only have experience about certain types of events).

- The main reasons (the cruxes) you believe what you write, especially if it might not be obvious from the body of the post (e.g. if you reference data or information that’s not actually crucial to your personal belief in the conclusion)

- E.g. “I list some data in this post, but the strongest factor in me believing the conclusion I describe is my personal experience.” Or “The main reason I wrote this post is because of a conversation I had with a professional. Arguments 3-6 presented here are more like add-ons after I thought about it a little longer.”

- This is very related to Epistemic Legibility.

- Your qualifications

- E.g. “This is not my field of expertise, but I read [this book] about it,” or “I have a Ph.D. in a related field, and have thought about this for at least 80 hours.”

- The effort you put into this post and into making its claims very precise

- E.g. “I wrote this up in 30 minutes, might have made mistakes or misstated my actual opinions,” or “I spent 40 hours researching this subject and writing this report.”

- The number and type of people who gave feedback on the writing or project, and broadly what their feedback was

- E.g. “I ran a sketch of this argument past 2 friends who are also in my field, and they broadly agreed.” Or “These are consolidated views. X, Y, and Z gave me feedback on this, and I’ve incorporated it.”

If you end up having an epistemic status on a longer post with different claims, I think it’s also often very useful to have epistemic statuses or “how much I believe this and how much you should trust me” notes on the different sections, as in this post.

Consider hyperlinking the phrase

Epistemic status for this section: I think the harm I describe is real, but I’m not sure it’s actually very big. I’m pretty confident more people should just hyperlink the term, though.

I think the phrase “epistemic status” can be confusing to some readers, and some of what Michael writes in his post, 3 suggestions about jargon in EA, applies here. In particular, while I think adding epistemic statuses is often very useful (see below), newcomers might be disoriented by them, especially at the very top of a post. This seems especially relevant for posts that are aimed at a wider or more general audience.

One solution: simply hyperlink this post (or some other explanation) when you use “epistemic status.”

(As a reminder, you don’t need to have an epistemic status note.)

Why have an “epistemic status”? (And are there reasons to not have one?)

Adding a field like this can help readers broadly understand how seriously they should take what’s been written, highlight biases of the writer that readers might not be aware of (but should be), make the post more legible, and clarify the purpose of the post. I think this is especially important if the author is someone whose views might get accepted because they have some status or authority.

Epistemic statuses can also help us discuss things more collaboratively. If I add “Epistemic status: just figuring things out, really uncertain” at the top of my post, commenters might feel more welcome to point out the flaws in my argument, and might do so more generously than if I had just straightforwardly argued for something incorrect.

And, importantly (and relatedly), epistemic statuses can help us avoid information cascades, which are a way of collectively arriving at false beliefs when people defer to each other without understanding the true reasons why others might believe something. (Here’s a silly toy example: imagine three people, A, B, and C, trying to understand a given subject. C knows that A and B both hold belief X and, deferring, decides that X is probably right. A and B notice that C also thinks X, and become more confident in X. In reality, B only thinks X because A thinks X. So everyone is depending on A’s belief in X, which could be the result of only one bit of independent information.)
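For readers who find code easier to follow than prose, here is a rough Python sketch of the toy example above. It is only an illustration under my own assumptions: the `defers_to` mapping and the `independent_sources` helper are hypothetical names, and the sketch only traces who ultimately relies on whom. It does not model the feedback step where A and B grow more confident after seeing C agree.

```python
# Hypothetical model of the A/B/C toy example.
# Each person maps to the set of people they defer to.
# An empty set means the belief rests on that person's own evidence.
defers_to = {
    "A": set(),        # A formed belief X independently
    "B": {"A"},        # B only thinks X because A thinks X
    "C": {"A", "B"},   # C defers to both A and B
}


def independent_sources(person, defers_to):
    """Return the people whose own evidence ultimately backs this person's belief."""
    roots, stack, seen = set(), [person], set()
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already traced this person (also guards against cycles)
        seen.add(current)
        sources = defers_to[current]
        if sources:
            stack.extend(sources)  # keep following the chain of deference
        else:
            roots.add(current)     # nobody to defer to: an independent source
    return roots


# Union of independent sources across everyone who holds belief X.
all_roots = set().union(*(independent_sources(p, defers_to) for p in defers_to))

print(len(defers_to), "people hold X;", len(all_roots), "independent source(s)")
```

Three people appear to support X, but tracing the chain of deference leaves a single independent source, A. An epistemic status that says “I mostly believe this because A does” is what makes that chain visible to readers.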

I’m probably missing some reasons for using epistemic statuses in Forum posts. I’d welcome more suggestions in the comments!

Note: an epistemic status communicating uncertainty doesn’t mean that all readers will fully process the uncertainty

One caveat to all of the above is that an epistemic status doesn’t fully remove the danger of over-deferral, and might give false confidence that we’ve addressed over-deferral.

So if you’re very uncertain about what you’re claiming, or about specific claims you’re making, it’s worth stating that clearly (and repeatedly) in the body of the post.

As readers, we often less-than-critically accept the conclusions of a post (especially if it’s by someone who has some expertise or status), even if the post has a note at the top disclaiming: “epistemic status — these are just rough thoughts.” If misused, epistemic statuses might give people false confidence that they’ve caveated their writing enough. There’s a related discussion here.

(Note also that deferring isn’t always bad. I just think it’s important to know when and why we’re deferring.)

Further reading

- Reasoning transparency

- Epistemic Legibility

- Information Cascades (LessWrong) (Wikipedia)

- Use resilience, instead of imprecision, to communicate uncertainty

- Epistemic Hygiene (LessWrong)

- Potential downsides of using explicit probabilities

[1] For real though: Epistemic status: this is a post I think could be useful to some people, but I’m not confident I captured the right reasoning for the different things I argue for, and hope the comments will supplement anything I missed or got wrong. A couple of people looked at a draft, and their feedback led to minor edits, but I'm not sure they endorse everything here.

[2] This post is not an attempt to get more people to use epistemic statuses. I think they can be useful, but don’t think they’re always necessary or even always helpful.

[3] Thanks to Lorenzo for discovering this! Some hyperlinks removed.

Thanks for this, I applaud the effort to make forum posts more legible to ~~newcomers~~ everyone. I would go even further and eliminate the jargon completely (or preserve it just to link to this post):

OR