TL;DR

Exactly one year after receiving our seed funding upon completion of the Charity Entrepreneurship program, we (Miri and Evan) look back on our first year of operations, discuss our plans for the future, and launch our fundraising for our Year 2 budget.

Family Planning could be one of the most cost-effective public health interventions available. Reducing unintended pregnancies lowers maternal mortality, decreases rates of unsafe abortions, and reduces maternal morbidity. Increasing the interval between births lowers under-five mortality. Allowing women to control their reproductive health leads to improved education and a significant increase in their income. Many excellent organisations have laid out the case for Family Planning, most recently GiveWell.[1]

In many low- and middle-income countries, women who want to delay or prevent their next pregnancy often cannot access contraceptives because of poor supply chains and high costs. Access to Medicines Initiative (AMI) was incubated by Ambitious Impact’s Charity Entrepreneurship Incubation Program in 2024 with the goal of increasing the availability of contraceptives and other essential medicines.[2]

The Problem

Maternal mortality is a serious problem in Nigeria. Nearly 28.5% of all maternal deaths worldwide occur in Nigeria. This is driven by Nigeria’s staggeringly high maternal mortality rate of 1,047 deaths per 100,000 live births, the third highest in the world. To illustrate the magnitude, the U.K.'s rate is 8 deaths per 100,000 live births, roughly 130 times lower.

Many factors contribute to this, and one of them is unintended pregnancy: 29% of pregnancies in Nigeria are unintended. Six out of ten women of reproductive age in Nigeria have an unmet need for contraception, and meeting that need would likely prevent almost 11,000 maternal deaths per year.

Additionally, the Guttmacher Institute estimates that every dollar spent on contraceptive services beyond the current level would reduce the cost of pregnancy-related and newborn care by three do

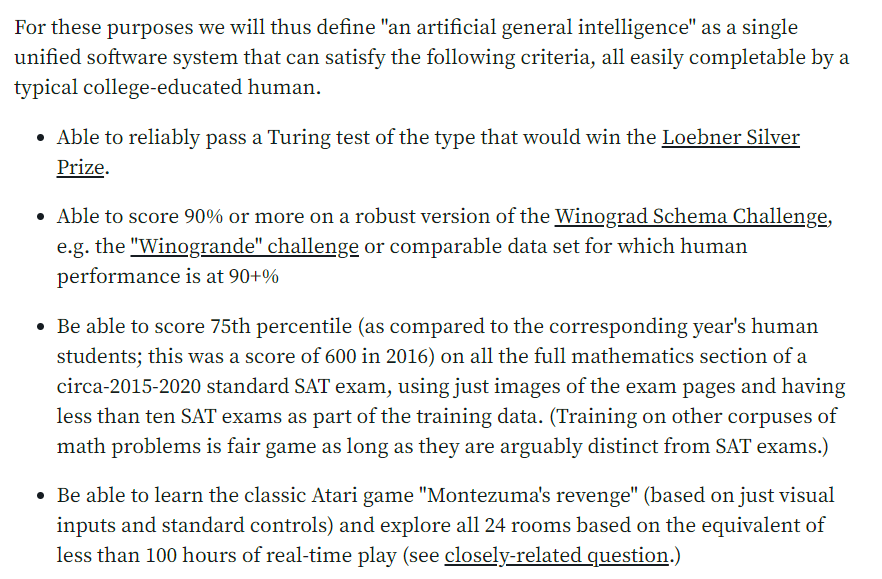

I note that in November 2020, the Metaculus community's prediction was that AGI would be arriving even sooner (2032, versus the current 2036 prediction). So if we're taking the Metaculus prediction seriously, we also want to understand why the forecasters on Metaculus have longer timelines now than they did a year and a half ago.

I note that 60 extra forecasters joined in forecasting over the last few days, representing about a 20% increase in the forecaster population for this question.

This makes me hypothesize that the recent drop in forecasted timeline comes from a flood of attention on this question, driven by hype from the papers, the associated panic on LW, and signal-boosting from SlateStarCodex. Perhaps the perspectives of those forecasters represent a thoughtful update in response to those publications. Or perhaps they represent panic and following the crowd. Since this is a long-term forecast, with no financial incentives, on a charged question with in-group signaling relevance, I frankly just don't know what to think.