Edgy and data-driven TED talk on how the older generations in America are undermining the youth. Worth a watch.

I'm proud to announce the 5-minute animated short on mental health I wrote back in 2020 is finally finished! I'd love you to watch it and let me know what you think (like, share…). It's currently "unlisted" as I wait to see how the production studio wants to release it publicly. But in the meantime I'm sharing it with my extended network.

I haven’t gone through this all the way, but I’ve loved Nicky Case’s previous explainers.

https://aisafety.dance/

The Onion: What To Know About The Collapse Of FTX

Q: What is the “effective altruism” philosophy Bankman-Fried practices?

A: A movement to allocate one’s money to where it can do the most benefit for oneself.

The Atlantic has a column called “Progress” by contributor Derek Thompson with the tag line: A special series focused on two big questions: How do you solve the world’s most important problems? And how do you inspire more people to believe that the most important problems can actually be solved?

Sounds a lot like EA to me.

Derek is holding virtual office hours on June 14

There will be an EAG Coaching meetup during EAGxVirtual.

Feel free to join if you are a coach, therapist, or anyone in a related personal development field!

Saturday, November 18

8 PM UTC / 3 PM Eastern / 12 PM Pacific / 7 AM Sydney (Sunday, November 19)

https://meet.google.com/eas-pyaa-gxk

Or dial: (US) +1 405-356-8141 PIN: 225 495 585#

More phone numbers:

https://tel.meet/eas-pyaa-gxk?pin=9017005535543

We started a #role-coaches-and-therapists channel in the EA Everywhere Slack.

There will also be a meeting for coaches and therapists to talk about organizing and coordinating at the EASE monthly meeting on January 24, 2024. More details in the Slack.

Here's my experience donating bone marrow in the USA. I recommend all EAs in the USA sign up for the registry at Be The Match. You can decide whether or not to do it if you are asked. The odds of getting asked are 1 in 430.

Stand-up comedian in San Francisco spars with ChatGPT AI developers in the audience

https://youtu.be/MJ3E-2tmC60

Seems like this is important, neglected, and possibly tractable. Is there anyone out there working on screening leaders for psychopathy?

Here's a framework I use for A or B decisions. There are 3 scenarios:

- One is clearly better than the other.

- They are both about the same.

- I'm not sure; more data is needed.

1 & 2 are easy. In the first case, choose the better one. In the second, choose the one that in your gut you like better (or use the "flip a coin" trick, and notice if you have any resistance to the "winner". That's a great reason to go with the "loser").

It's the third case that's hard. It requires more research or more analysis. But here's the thing: there are costs to doing this work. You have to decide if the opportunity cost to delve in is worth the investment to increase the odds of making the better choice.

My experience shows that we (especially people who lean heavily on logic and rationality, like myself 😁) tend to overweight "getting it right" at the expense of making a decision and moving on. Switching costs are often lower than you think, and failing fast is actually a great outcome. Unless you are sending a rover to Mars, where there is literally no opportunity to "fix it in post", I suggest you do a nominal amount of research and analysis, then make a decision and move on to other things in your life. Revisit as needed.
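For anyone who thinks better in code, here is a minimal Python sketch of the framework above. It is only an illustration: the `Option` fields, the `tolerance` threshold, and the way potential regret is capped by the switching cost are all assumptions I made up for the sketch, and the numbers are placeholders for your own rough estimates.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    estimated_value: float  # hypothetical: your best guess at how good the option is
    uncertainty: float      # hypothetical: rough +/- range around that guess


def decide(a: Option, b: Option, research_cost: float,
           switching_cost: float, tolerance: float = 0.5) -> str:
    """Return a suggestion for an A-or-B decision (illustrative only)."""
    gap = abs(a.estimated_value - b.estimated_value)
    noise = a.uncertainty + b.uncertainty
    better = a if a.estimated_value >= b.estimated_value else b

    # Scenario 1: one is clearly better (the gap exceeds the combined uncertainty).
    if gap > noise:
        return f"choose {better.name}"

    # Scenario 2: both are about the same (close together, little uncertainty).
    if noise <= tolerance:
        return "go with your gut"

    # Scenario 3: not sure. Assumption: the most you can lose by picking wrong
    # is capped by what it would cost to switch later.
    potential_regret = min(noise, switching_cost)
    if research_cost >= potential_regret:
        return f"choose {better.name} now, revisit as needed"
    return "research more"


print(decide(Option("A", 10, 4), Option("B", 9, 4),
             research_cost=3, switching_cost=2))
```

The point of the last branch is that low switching costs shrink the value of extra research, so deciding now and revisiting later often wins.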

[cross-posted from a comment I wrote in response to Why CEA Online doesn’t outsource more work to non-EA freelancers]

Volunteers of America (VOA) Futures Fund Community Health Incubator

Accelerate your innovative business solution for community health disparities.

Volunteers of America (VOA), one of the nation's largest and most experienced nonprofit housing, health, and human service organizations, launched this first-of-its-kind Incubator to accelerate social enterprises that improve quality, equity, and access to care for Medicaid and at-risk populations. Sponsored by the Humana Foundation, the VOA Community Health Incubator powered by SEED SPOT supports early-stage entrepreneurship that develops innovative products and services for equitable community health outcomes.

Leveraging VOA’s vast portfolio of assets – 16,000 employees, 400 communities, 22,000+ units of affordable housing, 15+ senior healthcare facilities, 1.5 million lives touched annually, hundreds of programs and service models – in this 12-week program founders will benefit from the expertise of and collaboration with the VOA network, and gain business training and tailored mentorship while tackling the most intractable community health disparities. This is a fully funded opportunity with non-dilutive grants and potential follow-on investment of up to $200,000.

APPLY BY APRIL 28

Update Jan 4: Fixed, thanks! 🙏🏼

@Centre for Effective Altruism, is there a reason this video—From Bednets to Mindsets: The Case for Mental Health in Effective Altruism | @Joy Bittner—is unlisted?

I had watched it not long after it aired from a direct link in Vida Plena's newsletter. I was trying to find it again, but because it's unlisted it doesn't show up in YouTube search results. I feel all EAG talks like this should be public to give as much exposure to the ideas as possible.

Thanks!

Thank you for flagging this!

We've now made this talk public. All EAGxVirtual 2023 talks were unlisted. I think (90% confidence) the team hadn't yet received confirmation from the speakers that they should post, and I'm just checking that with them. I've asked the team to post all the videos that they have received consent from the speaker to share.

I’m not affiliated with this, but I suspect it might be of interest to other folks

https://www.humanflourishing.org/

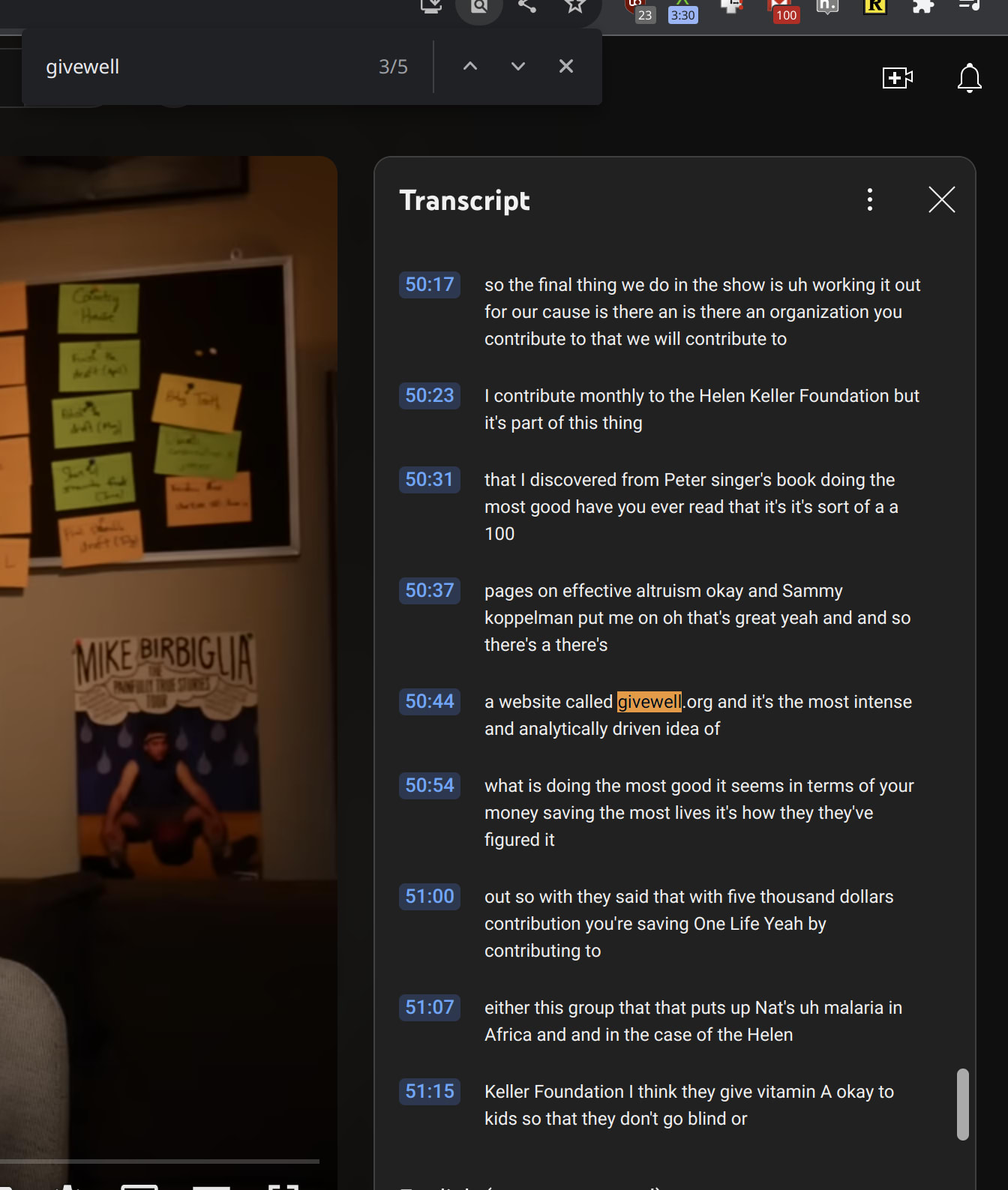

Comedian Gary Gulman recommends GiveWell on Mike Birbiglia’s “Working It Out” podcast

If you expand the description, you can click "Show transcript" and then search for givewell

Timestamp is 50:17

I’ve been working on a logical, science-based definition of the arbitrary race labels[1] we’ve assigned to humans. The most succinct definition I’ve come up with is evolutionary physiological acclimatization. Essentially, the bodies of the descendants of people living in an environment with specific climate attributes and trends will become more adapted to that environment. For example, the darker skin, larger noses, and bigger lips of people of African descent helped their ancestors survive in the intense sunlight and heat.[2] Ironically, we have migrated to parts of the world where our physiology is mismatched with the environment.

Race is fundamentally an artificial construct that helped people in positions of wealth and power protect their place. They chose visible superficial traits to make it easier to delineate the in-group (whom the law protects but does not bind) from the out-group (whom the law binds but does not protect).[3]

I believe it’s necessary to acknowledge what race was and what it actually is if we are to move to a world where we truly treat all people as equals.

Imposter syndrome is innately illogical. It presumes that everyone else either has poor judgment or sees the truth but is going along with the deception that you are capable of your current position. Poor judgment or “going along to get along” may be the case for any given individual, but when you add up all of the people in the group you interact with, it is statistically improbable, or it would require a Truman Show level of coordination to execute.

The antidote to imposter syndrome is trust. Trust in others to make fair and honest assessments of you and your capabilities. And trust in yourself and the objective successes you’ve achieved to reach your current place.

Ryuji Chua advocates for the suffering of fish

Loved Ryuji’s interview on The Daily Show. His nonjudgmental attitude towards those who still eat animals is a wonderful way to keep the conversation open and welcoming. A true embodiment of the "big tent" approach that benefits EA expansion.

I also watched his documentary How Conscious Can A Fish Be? It’s always hard for me to see animals suffering, but I also know I need to keep renewing that emotional connection to the cause so I don't drift towards apathy.

"This Request for Information (RFI) seeks input on how to best collect and integrate environmental health data into the All of Us Research Program dataset.

The All of Us Research Program seeks to accelerate health research and medical breakthroughs to enable individualized prevention, treatment, and care for all of us. To do this, the program will partner with one million or more participants nationwide and build one of the most diverse biomedical data resources of its kind. Researchers may leverage the All of Us platform for thousands of studies on a wide range of health conditions.

Diversity is one of the core values of the All of Us Research Program. The program aims to reflect the diversity of the United States and has a special focus on engaging communities that have been underrepresented in health research in the past. Participants are from different races, ethnicities, age groups, and regions of the country. They are also diverse in gender identity, sexual orientation, socioeconomic status, educational attainment, and health status. …"

Abigail Marsh’s 2016 TED talk on “Extraordinary Altruists”

It doesn’t look like anyone posted this TED talk on extraordinary altruists who donate a kidney to a stranger. The thing that stood out for me was the movement away from ego and into what could be called a non-dualistic perspective of humanity. I also detect a higher EQ: the ability to read and connect with others’ emotions, which requires them to be skillful at recognizing, connecting with, and regulating their own emotions.

What are your thoughts?

Devil’s Advocate: the only way to truly minimize suffering is to eliminate all life.

Of course, that eliminates all serene existence too. I don't think anyone compassionate would advocate for this modest proposal.

Even QALYs don't really work; they don't capture what proportion of a given life was spent in suffering vs. serenity. It seems to me we should be trying to maximize QALYs/DALYs, right?

[Apologies if this is a noob question; I looked around but couldn't find anything that satisfactorily addressed this.]

I'm a big fan of standup comedians. In many cases, they offer alternative viewpoints that challenge societal norms.

In that vein, Whitney Cummings has a Netflix special from July 2019 (Can I Touch It?) where the second half is about the growing market for more and more realistic sex dolls and touches on some AI safety issues. TBH, it lacks depth and nuance, but she's a comedian going for laughs, not meaningful discourse. But I post it here because I do appreciate seeing these issues come up in more mainstream culture.

Hypothesis: our planet’s ecosystem and the process of evolution necessarily have some inherent level (or range) of pain and suffering. Has anyone done an analysis of what this might be? Having this data from pre-civilization times would be most enlightening, as it would give an idea of what the system looks like without human meddling (whether intentional or not).

And yes, it will fluctuate. Earth’s previous mass extinction events would have involved a HUGE amount of suffering in a short period of time (like that first day after the Chicxulub impact). But what is the average baseline?

Good data visualization of record temperatures in USA cities. https://pudding.cool/2022/03/weather-map/

Mental health org in India that follows the paraprofessional model

https://reasonstobecheerful.world/maanasi-mental-health-care-women/

#mental-health-cause-area

Humans are cucinivores—the conjecture all vegans should know

The crux: humans are a new and unique type of eater: cucinivores. We evolved to eat cooked food.

- Humans as cucinivores: comparisons with other species (2015)

- The Whippet #152: Omnivores vs Cucinivores (2022) [this is a friendlier write-up of the first paper]

- Catching Fire: How Cooking Made Us Human (2009)

More people should know about this conjecture, and for anyone looking for a vegan-related research project, I believe the world would benefit from more research in this area (which I suspect would support the conjecture).

One common argument against veganism is that humans are carnivores. We aren’t. We are designed to eat cooked food.

A good friend turned me on to The Telepathy Tapes. It presents some pretty compelling evidence that people who are neurodivergent can more easily tap into an underlying universal Consciousness. I suspect Buddhists and other Enlightened folks who spend the time and effort quieting their minds and letting go of ego and dualism can as well. I'm curious what others in EA (self-identified rationalists, for example) make of this…
