Ben_West🔸

Bio

Non-EA interests include chess and TikTok (@benthamite). Formerly @ CEA, METR + a couple now-acquired startups.

How others can help me

Feedback always appreciated; feel free to email/DM me or use this link if you prefer to be anonymous.

Posts 91

Comments 1193

Topic contributions 6

To decompose your question into several sub-questions:

- Should you defer to price signals for cause prioritization?
  - My rough sense is that price signals are about as good as the cause prio of the 80th-percentile EA, where EAs are ranked by how much time they've spent thinking about cause prioritization.
  - (This is mostly because most EAs do not think about cause prio very much. I think you could outperform price signals by spending ~1 week thinking about it, for example.)
- Should you defer to price signals for choosing between organizations within a given cause?
  - This mostly seems decent to me. For example, CG struggled to find organizations better than GiveWell's top charities for near-termist, human-centric work.
  - Notable exceptions are work that people don't want to fund for non-effectiveness reasons, like politics or adversarial campaigning.
- Should you defer to price signals for choosing between roles within an organization?
  - Yes, I mostly trust organizations to price appropriately, although I also think you can just ask the hiring manager.

> the strength of this tail-wind that has driven much of AI progress since 2020 will halve

I feel confused about this point because I thought the argument you were making implies a non-constant "tailwind." E.g. for the next generation these factors will be 1/2 as important as before, then the one after that 1/4, and so on. Am I wrong?
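To make the distinction concrete, here is a minimal sketch comparing two readings of "will halve": a one-time halving versus halving again every generation. The starting strength of 1.0 and the number of generations are illustrative assumptions, not figures from the post.

```python
def tailwind_one_time_halving(generations: int) -> list[float]:
    """Tailwind strength per generation if it halves once and then stays constant."""
    if generations <= 0:
        return []
    return [1.0] + [0.5] * (generations - 1)


def tailwind_repeated_halving(generations: int) -> list[float]:
    """Tailwind strength per generation if it halves again each time: 1, 1/2, 1/4, ..."""
    return [0.5 ** g for g in range(generations)]


if __name__ == "__main__":
    n = 5  # hypothetical number of model generations
    once = tailwind_one_time_halving(n)
    repeated = tailwind_repeated_halving(n)
    print("one-time halving:", once, "cumulative:", sum(once))
    print("repeated halving:", repeated, "cumulative:", sum(repeated))
```

Under repeated halving the cumulative contribution is a geometric series bounded by 2, whereas under a one-time halving it keeps growing with every generation, which is why the two readings lead to very different forecasts.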

Interesting ideas! For Guardian Angels, you say "it would probably be at least a major software project" - maybe we are imagining different things, but I feel like I have this already.

e.g. I don't need a "heated-email guard plugin" which catches me in the middle of writing a heated email and redirects me because I don't write my own emails anyway. I would just ask an LLM to write the email and 1) it's unlikely that the LLM would say something heated and 2) for the kinds of mistakes that LLMs might make, it's easy enough to put something in the agents.md to ask it to check for these things before finalizing the draft.

(I think software engineering might be ahead of the curve here, where a bunch of tools have explicit guardian angels. E.g. when you tell the LLM "build feature X", what actually happens is that agent 1 writes the code, then agent 2 reviews it for bugs, agent 3 reviews it for security vulns, etc.)
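A minimal sketch of the writer-then-reviewers pattern described above. The agent functions are hypothetical stand-ins (simple string checks) for what would be LLM calls in a real tool; no particular tool's API is implied.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Review:
    reviewer: str
    issues: list[str] = field(default_factory=list)


def write_code(task: str) -> str:
    """Hypothetical 'agent 1': in a real tool this would be an LLM call."""
    return f"# implementation of {task}\npassword = 'hunter2'\n"


def review_bugs(code: str) -> Review:
    """Hypothetical 'agent 2': flags leftover TODOs as a stand-in for a bug review."""
    issues = ["unfinished TODO"] if "TODO" in code else []
    return Review("bug reviewer", issues)


def review_security(code: str) -> Review:
    """Hypothetical 'agent 3': flags hard-coded secrets as a stand-in for a security review."""
    issues = ["hard-coded credential"] if "password =" in code else []
    return Review("security reviewer", issues)


def run_pipeline(
    task: str, reviewers: list[Callable[[str], Review]]
) -> tuple[str, list[Review]]:
    """Write one draft, then pass it through each independent reviewer."""
    code = write_code(task)
    reviews = [review(code) for review in reviewers]
    return code, reviews


if __name__ == "__main__":
    _, reviews = run_pipeline("feature X", [review_bugs, review_security])
    for r in reviews:
        print(r.reviewer, "->", r.issues or "no issues")
```

One could extend this by feeding the reviewers' findings back to the writer for another pass; that loop is omitted here to keep the sketch short.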

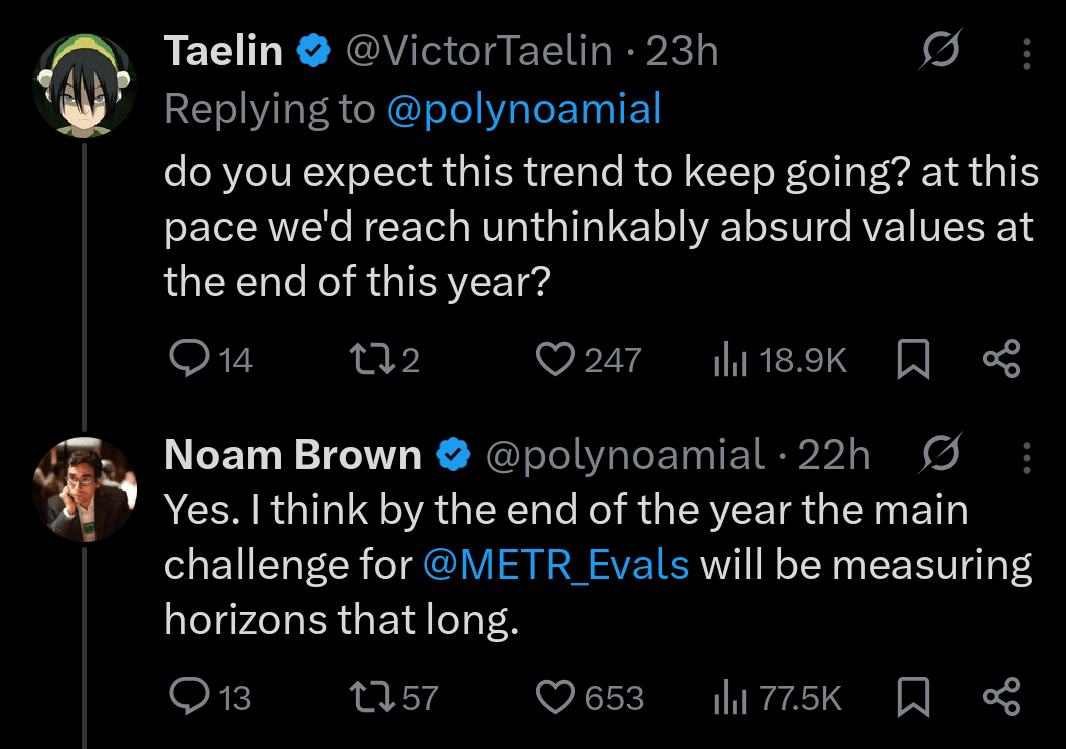

The AI Eval Singularity is Near

- AI capabilities seem to be doubling every 4-7 months

- Humanity's ability to measure capabilities is growing much more slowly

- This implies an "eval singularity": a point at which capabilities grow faster than our ability to measure them

- It seems like the singularity is ~here in cybersecurity, CBRN, and AI R&D (supporting quotes below)

- It's possible that this is temporary, but the people involved seem pretty worried
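The doubling-time claim implies fast compounding. A rough sketch, assuming smooth exponential growth: it converts a doubling time into an annual growth factor and into the time until a benchmark with a given amount of headroom is saturated. The 10x headroom figure is a hypothetical placeholder, not a property of any real benchmark.

```python
import math


def annual_growth_factor(doubling_months: float) -> float:
    """Multiplicative capability growth per 12 months, assuming steady exponential growth."""
    return 2 ** (12 / doubling_months)


def months_to_saturate(doubling_months: float, headroom: float) -> float:
    """Months until capability rises by `headroom`x, i.e. reaches a benchmark's ceiling."""
    return doubling_months * math.log2(headroom)


if __name__ == "__main__":
    for d in (4, 7):  # the 4-7 month doubling range above
        print(
            f"doubling every {d} months: "
            f"{annual_growth_factor(d):.1f}x per year, "
            f"a 10x-headroom benchmark saturates in ~{months_to_saturate(d, 10):.0f} months"
        )
```

At a 4-month doubling time this works out to 8x per year; at 7 months, roughly 3.3x. Either way, a benchmark built with only modest headroom would be saturated within about two years under these assumptions.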

Appendix - quotes on eval saturation

- "For AI R&D capabilities, we found that Claude Opus 4.6 has saturated most of our automated evaluations, meaning they no longer provide useful evidence for ruling out ASL-4 level autonomy. We report them for completeness, and we will likely discontinue them going forward. Our determination rests primarily on an internal survey of Anthropic staff, in which 0 of 16 participants believed the model could be made into a drop-in replacement for an entry-level researcher with scaffolding and tooling improvements within three months."

- "For ASL-4 evaluations [of CBRN], our automated benchmarks are now largely saturated and no longer provide meaningful signal for rule-out (though as stated above, this is not indicative of harm; it simply means we can no longer rule out certain capabilities that may be prerequisites to a model having ASL-4 capabilities)."

- It also saturated ~100% of the cyber evaluations

- "We are treating this model as High [for cybersecurity], even though we cannot be certain that it actually has these capabilities, because it meets the requirements of each of our canary thresholds and we therefore cannot rule out the possibility that it is in fact Cyber High."

Thanks for the article! I think if your definition of "long term" is "10 years," then EAAs actually do often think on this time horizon or longer, but maybe don't do so in the way you think is best. I think approximately all of the conversations about corporate commitments or government policy change that I have been involved in have operated on at least that timeline (sadly, this is how slowly these areas move).

For example, you can see this cost-effectiveness analysis (CEA) where @saulius projects the impacts of broiler campaigns out a cool 200 years, and links to estimates from ACE and AIM that use a constant discount rate.
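For a sense of how a constant discount rate behaves over a horizon this long, here is a minimal sketch that sums a constant annual impact over 200 years with exponential discounting. The impact of 1 unit/year and the 2% rate are hypothetical inputs, not the values used in @saulius's model or in the ACE/AIM estimates.

```python
def discounted_total(annual_impact: float, rate: float, years: int) -> float:
    """Present value of a constant yearly impact under a constant discount rate.

    Year t (starting at 0) is weighted by 1 / (1 + rate) ** t.
    """
    return sum(annual_impact / (1 + rate) ** t for t in range(years))


if __name__ == "__main__":
    horizon = 200  # years, matching the projection horizon mentioned above
    for rate in (0.0, 0.02):  # hypothetical discount rates
        print(f"rate={rate:.0%}: total={discounted_total(1.0, rate, horizon):.1f}")
```

With these placeholder inputs the undiscounted total is 200, while a 2% rate brings it to about 50, most of which comes from the first century; the choice of rate therefore matters at least as much as the length of the horizon.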

Thanks for doing this. This question is marked as required, but I think it should either be optional or have a "none" option: