
Summary:

- In a series of posts starting with this one, I'm going to argue that the 21st century could see our civilization develop technologies allowing rapid expansion throughout our currently-empty galaxy. And thus, that this century could determine the entire future of the galaxy for tens of billions of years, or more.

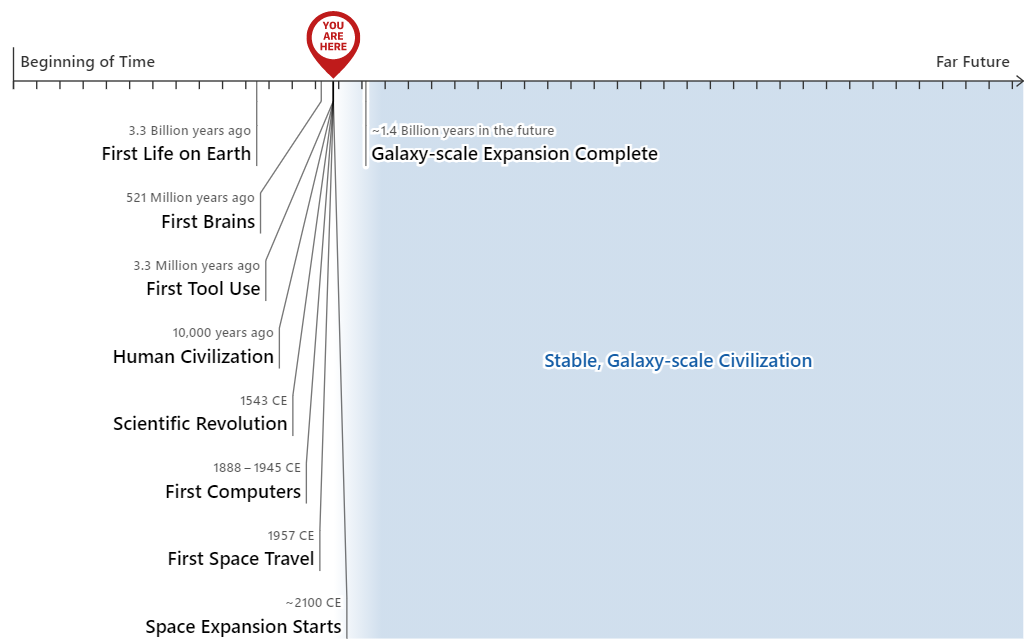

- This view seems "wild": we should be doing a double take at any view that we live in such a special time. I illustrate this with a timeline of the galaxy. (On a personal level, this "wildness" is probably the single biggest reason I was skeptical for many years of the arguments presented in this series. Such claims about the significance of the times we live in seem "wild" enough to be suspicious.)

- But I don't think it's really possible to hold a non-"wild" view on this topic. I discuss alternatives to my view: a "conservative" view that thinks the technologies I'm describing are possible, but will take much longer than I think, and a "skeptical" view that thinks galaxy-scale expansion will never happen. Each of these views seems "wild" in its own way.

- Ultimately, as hinted at by the Fermi paradox, it seems that our species is simply in a wild situation.

Before I continue, I should say that I don't think humanity (or some digital descendant of humanity) expanding throughout the galaxy would necessarily be a good thing - especially if this prevents other life forms from ever emerging. I think it's quite hard to have a confident view on whether this would be good or bad. I'd like to keep the focus on the idea that our situation is "wild." I am not advocating excitement or glee at the prospect of expanding throughout the galaxy. I am advocating seriousness about the enormous potential stakes.

My view

This is the first in a series of pieces about the hypothesis that we live in the most important century for humanity.

In this series, I'm going to argue that there's a good chance of a productivity explosion by 2100, which could quickly lead to what one might call a "technologically mature"[1] civilization. That would mean that:

- We'd be able to start sending spacecraft throughout the galaxy and beyond.

- These spacecraft could mine materials, build robots and computers, and construct very robust, long-lasting settlements on other planets, harnessing solar power from stars and supporting huge numbers of people (and/or our "digital descendants").

- See Eternity in Six Hours for a fascinating and short, though technical, discussion of what this might require.

- I'll also argue in a future piece that there is a chance of "value lock-in" here: whoever is running the process of space expansion might be able to determine what sorts of people are in charge of the settlements and what sorts of societal values they have, in a way that is stable for many billions of years.[2]

If that ends up happening, you might think of the story of our galaxy[3] like this. I've marked major milestones along the way from "no life" to "intelligent life that builds its own computers and travels through space."

Thanks to Ludwig Schubert for the visualization. Many dates are highly approximate and/or judgment-prone and/or just pulled from Wikipedia (sources here), but plausible changes wouldn't change the big picture. The ~1.4 billion years to complete space expansion is based on the distance to the outer edge of the Milky Way, divided by the speed of a fast existing human-made spaceship (details in spreadsheet just linked); IMO this is likely to be a massive overestimate of how long it takes to expand throughout the whole galaxy. See footnote for why I didn't use a logarithmic axis.[4]
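As a rough check on the ~1.4 billion year figure, here is a minimal sketch of the "distance divided by speed" calculation. The specific inputs are assumptions chosen for illustration, not values taken from the linked spreadsheet: a distance of about 80,000 light-years to the far edge of the Milky Way, and a speed of about 17 km/s, which is roughly how fast Voyager 1 is moving.

```python
# Rough check of the "distance / speed" estimate for galaxy-scale expansion.
# ASSUMPTIONS (not taken from the linked spreadsheet): the distance and speed
# used below are illustrative stand-ins.

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in km/s


def crossing_time_years(distance_light_years: float, speed_km_s: float) -> float:
    """Years needed to cover a distance at a constant speed.

    A light-year is the distance light covers in one year, so travelling at a
    fraction f of light speed takes 1/f years per light-year.
    """
    fraction_of_light_speed = speed_km_s / SPEED_OF_LIGHT_KM_S
    return distance_light_years / fraction_of_light_speed


ASSUMED_DISTANCE_LY = 80_000  # hypothetical distance to the far edge of the galaxy
ASSUMED_SPEED_KM_S = 17.0     # hypothetical speed, roughly Voyager 1's

years = crossing_time_years(ASSUMED_DISTANCE_LY, ASSUMED_SPEED_KM_S)
print(f"{years / 1e9:.2f} billion years")
```

Under these assumed inputs the result lands close to 1.4 billion years. Any faster craft shrinks the number proportionally, which is one way to see why the figure is likely an overestimate.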

??? That's crazy! According to me, there's a decent chance that we live at the very beginning of the tiny sliver of time during which the galaxy goes from nearly lifeless to largely populated. That out of a staggering number of persons who will ever exist, we're among the first. And that out of hundreds of billions of stars in our galaxy, ours will produce the beings that fill it.

I know what you're thinking: "The odds that we could live in such a significant time seem infinitesimal; the odds that Holden is having delusions of grandeur (on behalf of all of Earth, but still) seem far higher."[5]

But:

The "conservative" view

Let's say you agree with me about where humanity could eventually be headed - that we will eventually have the technology to create robust, stable settlements throughout our galaxy and beyond. But you think it will take far longer than I'm saying.

A key part of my view (which I'll write about more later) is that within this century, we could develop advanced enough AI to start a productivity explosion. Say you don't believe that.

- You think I'm underrating the fundamental limits of AI systems to date.

- You think we will need an enormous number of new scientific breakthroughs to build AIs that truly reason as effectively as humans.

- And even once we do, expanding throughout the galaxy will be a longer road still.

You don't think any of this is happening this century - you think, instead, that it will take something like 500 years. That's 5-10x the time that has passed since we started building computers. It's more time than has passed since Isaac Newton made the first credible attempt at laws of physics. It's about as much time as has passed since the very start of the Scientific Revolution.

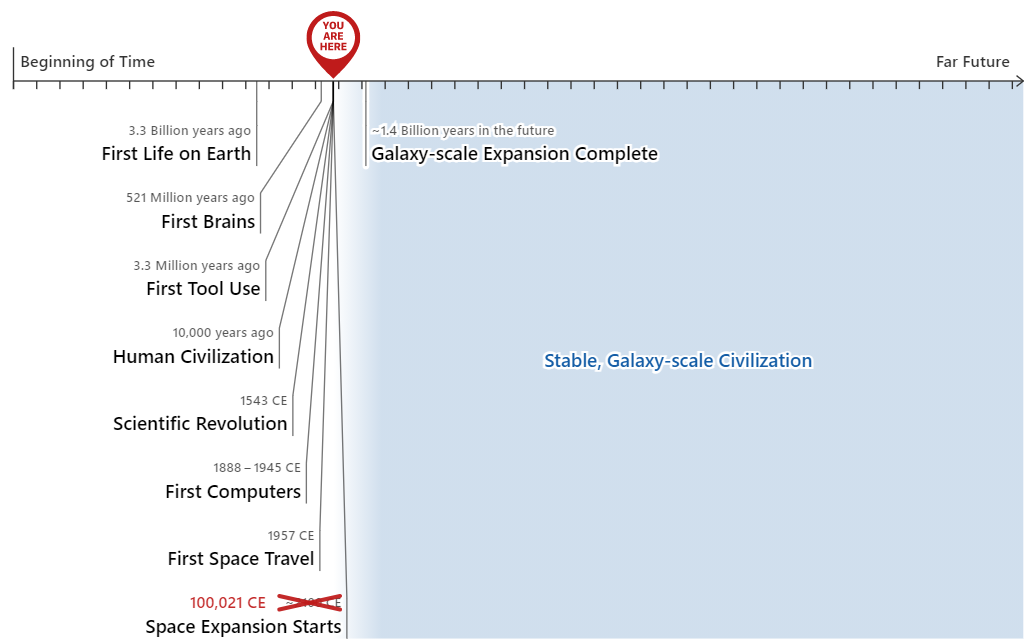

Actually, no, let's go even more conservative. You think our economic and scientific progress will stagnate. Today's civilizations will crumble, and many more civilizations will fall and rise. Sure, we'll eventually get the ability to expand throughout the galaxy. But it will take 100,000 years. That's 10x the amount of time that has passed since human civilization began in the Levant.

Here's your version of the timeline:

The difference between your timeline and mine isn't even a pixel, so it doesn't show up on the chart. In the scheme of things, this "conservative" view and my view are the same.
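To make the "not even a pixel" claim concrete, here is a small sketch that converts a delay in years into a width on a linear timeline. The chart span and pixel width are assumptions for illustration only, not measurements of the actual image: the span is taken as ~13.77 billion years of past plus ~1.4 billion years of future expansion, and the chart is assumed to be 2,000 pixels wide.

```python
# How wide is a delay of N years on a linear timeline of the galaxy?
# ASSUMPTIONS: the span and chart width below are illustrative, not measured
# from the chart in this post.


def years_to_pixels(duration_years: float,
                    timeline_span_years: float,
                    chart_width_px: float) -> float:
    """Width in pixels that a duration occupies on a linear time axis."""
    return duration_years / timeline_span_years * chart_width_px


ASSUMED_SPAN_YEARS = 13.77e9 + 1.4e9  # hypothetical: past so far plus future expansion
ASSUMED_CHART_WIDTH_PX = 2_000        # hypothetical chart width

for label, delay_years in [("500-year delay", 500),
                           ("100,000-year delay", 100_000)]:
    width = years_to_pixels(delay_years, ASSUMED_SPAN_YEARS, ASSUMED_CHART_WIDTH_PX)
    print(f"{label}: {width:.6f} px")
```

With these assumed numbers, even the 100,000-year delay covers only a small fraction of a single pixel, so the two timelines would render identically.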

It's true that the "conservative" view doesn't have the same urgency for our generation in particular. But it still places us among a tiny proportion of people in an incredibly significant time period. And it still raises questions of whether the things we do to make the world better - even if they only have a tiny flow-through to the world 100,000 years from now - could be amplified to a galactic-historical-outlier degree.

The "skeptical" view

The "skeptical view" would essentially be that humanity (or some descendant of humanity, including a digital one) will never spread throughout the galaxy. There are many reasons it might not:

- Maybe something about space travel - and/or setting up mining robots, solar panels, etc. on other planets - is effectively impossible such that even another 100,000 years of human civilization won't reach that point.[6]

- Or perhaps for some reason, it will be technologically feasible, but it won't happen (because nobody wants to do it, because those who don't want it to happen block those who do, etc.).

- Maybe it's possible to expand throughout the galaxy, but not possible to maintain a presence on many planets for billions of years, for some reason.

- Maybe humanity is destined to destroy itself before it reaches this stage.

- But note that if the way we destroy ourselves is via misaligned AI,[7] it would be possible for AI to build its own technology and spread throughout the galaxy, which still seems in line with the spirit of the above sections. In fact, it highlights that how we handle AI this century could have ramifications for many billions of years. So humanity would have to go extinct in some way that leaves no other intelligent life (or intelligent machines) behind.

- Maybe an extraterrestrial species will spread throughout the galaxy before we do (or around the same time).

- However, note that this doesn't seem to have happened in ~13.77 billion years so far since the universe began, and according to the above sections, there's only about 1.5 billion years left for it to happen before we spread throughout the galaxy.

- Maybe some extraterrestrial species already effectively has spread throughout our galaxy, and for some reason we just don't see them. Maybe they are hiding their presence deliberately, for one reason or another, while being ready to stop us from spreading too far.

- This would imply that they are choosing not to mine energy from any of the stars we can see, at least not in a way that we could see it. That would, in turn, imply that they're abstaining from mining a very large amount of energy that they could use to do whatever it is they want to do,[8] including defend themselves against species like ours.

- Maybe this is all a dream. Or a simulation.

- Maybe something else I'm not thinking of.

That's a fair number of possibilities, though many seem quite "wild" in their own way. Collectively, I'd say they add up to more than 50% probability ... but I would feel very weird claiming they're collectively overwhelmingly likely.

Ultimately, it's very hard for me to see a case against thinking something like this is at least reasonably likely: "We will eventually create robust, stable settlements throughout our galaxy and beyond." It seems like saying "no way" to that statement would itself require "wild" confidence in something about the limits of technology, and/or long-run choices people will make, and/or the inevitability of human extinction, and/or something about aliens or simulations.

I imagine this claim will be intuitive to many readers, but not all. Defending it in depth is not on my agenda at the moment, but I'll rethink that if I get enough demand.

Why all possible views are wild: the Fermi paradox

I'm claiming that it would be "wild" to think we're basically assured of never spreading throughout the galaxy, but also that it's "wild" to think that we have a decent chance of spreading throughout the galaxy.

In other words, I'm calling every possible belief on this topic "wild." That's because I think we're in a wild situation.

Here are some alternative situations we could have found ourselves in, that I wouldn't consider so wild:

- We could live in a mostly-populated galaxy, whether by our species or by a number of extraterrestrial species. We would be in some densely populated region of space, surrounded by populated planets. Perhaps we would read up on the history of our civilization. We would know (from history and from a lack of empty stars) that we weren't unusually early life-forms with unusual opportunities ahead.

- We could live in a world where the kind of technologies I've been discussing didn't seem like they'd ever be possible. We wouldn't have any hope of doing space travel, or successfully studying our own brains or building our own computers. Perhaps we could somehow detect life on other planets, but if we did, we'd see them having an equal lack of that sort of technology.

But space expansion seems feasible, and our galaxy is empty. These two things seem in tension. A similar tension - the question of why we see no signs of extraterrestrials, despite the galaxy having so many possible stars they could emerge from - is often discussed under the heading of the Fermi Paradox.

Wikipedia has a list of possible resolutions of the Fermi paradox. Many correspond to the skeptical view possibilities I list above. Some seem less relevant to this piece. (For example, there are various reasons extraterrestrials might be present but not detected. But I think any world in which extraterrestrials don't prevent our species from galaxy-scale expansion ends up "wild," even if the extraterrestrials are there.)

My current sense is that the best analysis of the Fermi Paradox available today favors the explanation that intelligent life is extremely rare: something about the appearance of life in the first place, or the evolution of brains, is so unlikely that it hasn't happened in many (or any) other parts of the galaxy.[9]

That would imply that the hardest, most unlikely steps on the road to galaxy-scale expansion are the steps our species has already taken. And that, in turn, implies that we live in a strange time: extremely early in the history of an extremely unusual star.

If we started finding signs of intelligent life elsewhere in the galaxy, I'd consider that a big update away from my current "wild" view. It would imply that whatever has stopped other species from galaxy-wide expansion will also stop us.

This pale blue dot could be an awfully big deal

Describing Earth as a tiny dot in a photo from space, Ann Druyan and Carl Sagan wrote:

The Earth is a very small stage in a vast cosmic arena. Think of the rivers of blood spilled by all those generals and emperors so that, in glory and triumph, they could become the momentary masters of a fraction of a dot ... Our posturings, our imagined self-importance, the delusion that we have some privileged position in the Universe, are challenged by this point of pale light ... It has been said that astronomy is a humbling and character-building experience. There is perhaps no better demonstration of the folly of human conceits than this distant image of our tiny world.

This is a somewhat common sentiment - that when you pull back and think of our lives in the context of billions of years and billions of stars, you see how insignificant all the things we care about today really are.

But here I'm making the opposite point.

It looks for all the world as though our "tiny dot" has a real shot at being the origin of a galaxy-scale civilization. It seems absurd, even delusional, to believe in this possibility. But given our observations, it seems equally strange to dismiss it.

And if that's right, the choices made in the next 100,000 years - or even this century - could determine whether that galaxy-scale civilization comes to exist, and what values it has, across billions of stars and billions of years to come.

So when I look up at the vast expanse of space, I don't think to myself, "Ah, in the end none of this matters." I think: "Well, some of what we do probably doesn't matter. But some of what we do might matter more than anything ever will again. ...It would be really good if we could keep our eye on the ball. ...[gulp]"

This work is licensed under a Creative Commons Attribution 4.0 International License.

[1] Or Kardashev Type III.

[2] If we are able to create mind uploads, or detailed computer simulations of people that are as conscious as we are, it could be possible to put them in virtual environments that automatically reset, or otherwise "correct" the environment, whenever the society would otherwise change in certain ways (for example, if a certain religion became dominant or lost dominance). This could give the designers of these "virtual environments" the ability to "lock in" particular religions, rulers, etc. I'll discuss this more in a future piece.

[3] I've focused on the "galaxy" somewhat arbitrarily. Spreading throughout all of the accessible universe would take a lot longer than spreading throughout the galaxy, and until we do it's still imaginable that some species from outside our galaxy will disrupt the "stable galaxy-scale civilization," but I think accounting for this correctly would add a fair amount of complexity without changing the big picture. I may address that in some future piece, though.

[4] A logarithmic version doesn't look any less weird, because the distances between the "middle" milestones are tiny compared to _both_ the stretches of time before and after these milestones. More fundamentally, I'm talking about how remarkable it is to be in the most important [small number] of years out of [big number] of years - that's best displayed using a linear axis. It's often the case that weird-looking charts look more reasonable with logarithmic axes, but in this case I think the chart looks weird because the situation is weird. Probably the least weird-looking version of this chart would have the x-axis be something like the logged distance from the year 2100, but that would be a heck of a premise for a chart - it would basically bake in my argument that this appears to be a very special time period.

[5] This is exactly the kind of thought that kept me skeptical for many years of the arguments I'll be laying out in the rest of this series about the potential impacts, and timing, of advanced technologies. Grappling directly with how "wild" our situation seems to ~undeniably be has been key for me.

[6] Spreading throughout the galaxy would certainly be harder if nothing like mind uploading (which I'll discuss in a future piece, and which is part of why I think future space settlements could have "value lock-in" as discussed above) can ever be done. I would find a view that "mind uploading is impossible" to be "wild" in its own way, because it implies that human brains are so special that there is simply no way, ever, to digitally replicate what they're doing. (Thanks to David Roodman for this point.)

[7] That is, advanced AI that pursues objectives of its own, which aren't compatible with human existence. I'll be writing more about this idea. Existing discussions of it include the books Superintelligence, Human Compatible, Life 3.0, and The Alignment Problem. The shortest, most accessible presentation I know of is The case for taking AI seriously as a threat to humanity (Vox article by Kelsey Piper). This report on existential risk from power-seeking AI, by Open Philanthropy's Joe Carlsmith, lays out in detail which premises one would have to believe in order to take this problem seriously.

[8] Thanks to Carl Shulman for this point.

[9] I'm not sure what publication this was in, but the claim seems to be supported here: https://arxiv.org/pdf/1806.02404.pdf.