Almost a year after the 2024 holiday season Twitter fundraiser, we managed to score a very exciting "Mystery EA Guest" to interview: Will MacAskill himself.

@MHR🔸 was the very talented interviewer and shrimptastic fashion icon

And of course huge thanks to Will for agreeing to do this

Summary, highlights, and transcript below video!

Summary and Highlights

(summary AI-generated)

Effective Altruism has changed significantly since its inception. With the arrival of "mega donors" and major institutional changes, does individual effective giving still matter in 2025?

Will MacAskill—co-founder of the Centre for Effective Altruism and Giving What We Can, and currently a senior research fellow at the Forethought Institute—says the answer is a resounding yes. In fact, he argues that despite the resources currently available, individuals are "systematically not ambitious enough" relative to the scale of the problems the world faces.

In this special interview for the 2025 EA Twitter Fundraiser, Will joins host Matt to discuss the evolution of the movement’s focus. They discuss why animal we...

SAN FRANCISCO, Sept 25 (Reuters) - ChatGPT-maker OpenAI is working on a plan to restructure its core business into a for-profit benefit corporation that will no longer be controlled by its non-profit board, people familiar with the matter told Reuters, in a move that will make the company more attractive to investors.

The case for a legal challenge seems hugely overdetermined to me:

Stop/delay/complicate the restructuring, and otherwise make life appropriately hard for Sam Altman

Settle for a large amount of money that can be used to do a huge amount of good

Signal that you can't just blatantly take advantage of OpenPhil/EV/EA as you please without appropriate challenge

I know OpenPhil has a pretty hands-off ethos and vibe; this shouldn't stop them from acting with integrity when hands-on legal action is clearly warranted

I understand that OpenAI's financial situation is not very good [edit: this may not be a high-quality source], and if they aren't able to convert to a for-profit, things will become even worse:

OpenAI has two years from the [current $6.6 billion funding round] deal’s close to convert to a for-profit company, or its funding will convert into debt at a 9% interest rate.

As an aside: how will OpenAI pay that interest in the event they can't convert to a for-profit business? Will they raise money to pay the interest rate? Will they get a loan?
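For a rough sense of scale, here is the arithmetic behind that question as a minimal Python sketch. It assumes simple annual interest at 9% on the full $6.6 billion. That is an assumption: the actual debt terms (compounding, principal, repayment schedule) aren't described here.

```python
# Rough scale of the 9% interest question.
# ASSUMPTION: simple (non-compounding) annual interest on the full $6.6B round;
# the real debt terms are not given in the source.
FUNDING_USD = 6.6e9
INTEREST_RATE = 0.09


def annual_interest(principal_usd: float, rate: float) -> float:
    """Interest owed per year under simple interest."""
    return principal_usd * rate


interest = annual_interest(FUNDING_USD, INTEREST_RATE)
print(f"~${interest / 1e6:,.0f} million per year")
```

Under those assumptions this comes to roughly $594 million a year, on top of the operating losses mentioned below.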

It's conceivable that OpenPhil suing OpenAI could buy us 10+ years of AI timeline, if the following dominoes fall:

OpenPhil sues, and OpenAI fails to convert to a for-profit.

As a result, OpenAI struggles to raise additional capital from investors.

Losing $4-5 billion a year with little additional funding in sight, OpenAI is forced to make some tough financial decisions. They turn off the free version of ChatGPT, stop training new models, and cut salaries for employees. They're able to eke out some profit, but not much profit, because their product is not highly differentiated from other AI off

I made a tool to play around with how alternatives to the 10% GWWC Pledge default norm might change:

How much individuals are "expected" to pay

The idea being that there are functions of income that people would prefer to the 10% pledge behind some relevant veil of ignorance, along the lines of "I don't want to commit 10% of my $30k salary, but I gladly commit 20% of my $200k salary"
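To make "functions of income" concrete, here is one hypothetical alternative written as marginal brackets, the same way a progressive income tax works. The schedule below is made up for illustration; it is not the tool's logic and not anything GWWC has proposed.

```python
import math

# HYPOTHETICAL schedule, for illustration only: pledge nothing on the first
# $40k of income, then 25% of every dollar above that.
HYPOTHETICAL_BRACKETS = [(40_000, 0.0), (math.inf, 0.25)]


def bracketed_pledge(income: float, brackets=HYPOTHETICAL_BRACKETS) -> float:
    """Amount pledged under marginal brackets.

    `brackets` is a list of (upper_bound, marginal_rate) pairs in ascending
    order; each rate applies only to the slice of income inside its bracket.
    """
    owed, lower = 0.0, 0.0
    for upper, rate in brackets:
        if income <= lower:
            break
        owed += (min(income, upper) - lower) * rate
        lower = upper
    return owed


def flat_pledge(income: float, rate: float = 0.10) -> float:
    """The default norm: a flat share of income."""
    return income * rate


for income in (30_000, 100_000, 200_000):
    print(income, flat_pledge(income), bracketed_pledge(income))
```

Under this made-up schedule the $30k earner pledges nothing while the $200k earner pledges $40k (20%), which matches the shape of the preference quoted above.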

Some folks pushed back a bit, citing the following:

The pledge isn't supposed to be revenue maximizing

A main function of the pledge is to "build the habit of giving", and complexity/higher expectations undermine this

Many pledgers give >10% - the pledge doesn't set an upper bound

I don't find these points very convincing...

Some points of my own

To quote myself: "I think it would be news to most GWWC pledgers that actually the main point is some indirect thing like building habits rather than getting money to effective orgs"

If the distribution of GWWC pledgers resembles the US distribution of household incomes and then cut implied donations in half to account for individual vs household discrepancy (obviou

Mostly for fun I vibecoded an API to easily parse EA Forum posts as markdown with full comment details based on post URL (I think helpful mostly for complex/nested comment sections where basic copy and paste doesn't work great)

I have tested it on about three posts and every possible disclaimer applies

Is there a good list of the highest leverage things a random US citizen (probably in a blue state) can do to cause Trump to either be removed from office or seriously constrained in some way? Anyone care to brainstorm?

Like the safe state/swing state vote swapping thing during the election was brilliant - what analogues are there for the current moment, if any?

This post (especially this section) explores this. There are also some ideas on this website. I've copied and pasted the ideas from that site below. I think it's written with a more international perspective, but likely has some overlap with actions which could be taken by Americans.

* Promoting free and fair elections, especially at the midterms

* Several NGOs are well established as working on this, e.g. Common Cause works to reduce needless barriers to voting and stop gerrymandering. Verified Voting advocates for secure voting systems, and the Brennan Center for Justice researches and advocates for relevant policies.

* Enabling bravery of key individuals.

* Example: Mike Pence was very brave in standing up to Trump and enabling a transition of power, and he has been vilified for this by Trump and his supporters.

* Today, members of Congress don’t always seem to stand up for what they believe in (eg not opposing controversial appointments such as RFK and Hegseth). Presumably they are concerned about threats made by Trump.

* Unclear exactly what this intervention looks like (provide financial support? Or something else?)

* Consumer power and investor power

* The boycott of Tesla is an obvious example of this, and Musk is clearly feeling the pain.

* Further work could identify and assess the extent to which other large corporates are kowtowing to the Trump administration, so that consumers can make informed choices.

* People who are members of pension schemes could write to the trustees asking them to divest from relevant corporates (Tesla being the obvious choice at this stage, this large scheme has already divested from Tesla). Furthermore, people could coordinate this activity.

* Support grassroots protests

* MoveOn, Democracy Forward, etc.

* The Bail Project

* Supporting free and balanced media

* We need media sources which are critical of government.

* Such media sources don’t seem naturally set up to accept moderate sized d

Chakravarthy Chunduri

tl;dr: Getting Trump removed from office is not high-leverage and can be actively dangerous, since that only means JD Vance becomes President, and he and the Republicans will be politically obligated to dig in their heels and implement a Trump agenda.

Instead, I think somehow getting millions of Americans to recognize Trump's gameplan (which I've summarized below), and getting them to panic-vote Democrats in the 2026 midterms is the highest leverage thing you can do.

Here's my $0.02, even though I don't know how to achieve a Dem victory.

I think Trump is doing all of this with an eye on the 2026 midterms. I'll try to publish a more detailed write-up later if I'm able to, but all these arguably inane policy decisions that even well-connected Trump supporters were blindsided by are things the Trump administration hopes will play well with voters in the midterm elections. If more Trumpists then get elected to the House, that empowers Trump even more, considering he's already installed his vassal Mike Johnson as the leader of the House of Representatives.

I think that's the overall game plan. That's why he's had no compunction in walking decisions back as soon as they've generated news headlines.

As plans go, it certainly is a cogent plan. It looks and feels like the Southern Strategy 2.0. Frankly I was expecting this sort of populist grandstanding from Bernie Sanders, Elizabeth Warren and the Democratic Socialists; and not this generation of Republicans.

It looks like Trump saw Bernie Sanders attempting Ralph Nader's political strategy and thought, "Huh. I could do that!" and so here we are today. That's why I personally dislike politicians who are not centrists. But I think there's a flaw in Trump's plan.

I'm not an American, but I'm guessing the reason previous presidents haven't done this kind of populism is because American voters (a) aren't that stupid, like, most American citizens have at least a high-school level education; and

David Mathers🔸

"Because once a country embraces Statism, it usually begins an irreversible process of turning into a "shithole country", as Trump himself eloquently put it. "

Ignoring tiny islands (some of them with dubious levels of independence from the US), the 10 nations with the largest %s of GDP as government revenue include Finland, France, Belgium and Austria, although, also, yes, Libya and Lesotho. In general, the top of the list for government revenue as % of GDP seems to be a mixture of small islands, petro states, and European welfare state democracies, not places that are particularly impoverished or authoritarian: https://en.wikipedia.org/wiki/List_of_countries_by_government_spending_as_percentage_of_GDP#List_of_countries_(2024)

Meanwhile, the countries with low levels of government revenue as a % of GDP that aren't currently having some kind of civil war are places like Bangladesh, Sri Lanka, Iran and (weirdly) Venezuela.

This isn't a perfect proxy for "statism" obviously, but I think it shows that things are more complicated than simplistic libertarian analysis would suggest. Big states (in purely monetary terms) seem to often be a consequence of success. Maybe they also hold back further success, of course, but countries don't seem to actively degenerate once they arrive (i.e. growth might slow, but they are not in permanent recession).

Chakravarthy Chunduri

You make good points. Obviously, every country is either definitionally or practically a nation-state. But IMHO the only conditions under which individual freedoms and economic freedoms for individuals survive in a country are when Statism is not embraced but is instead held at arm's length and treated with caution and hesitation.

My argument for voting against Trump and Trumpists in the 2026 midterms, for a Republican-leaning American citizen, is this:

The current situation is directly a result of both Republican and Democrat politicians explicitly trying to increase and abuse state power for their definition of "the greater good", which the other side disagrees with.

Up to an arbitrary point, this can be considered the ordinary functioning of democratic nation-states. Beyond the arbitrary point, the presence or absence of democracy is irrelevant, and the very nature of the social contract changes.

The fact that the arbitrary point is unknown or unpredictable is precisely the reason that Statism should not be embraced but instead be held at arm's length and treated with caution and hesitation!

With every dollar the government takes out of your pocket or restricts you from earning, and every sector or part of the economy or society the government feels the need to "direct" or "reshape" for the greater good, there is less freedom for the individual, and for private citizens as a whole.

If the Republican voters abdicate too much sovereignty to support Trumpist pet projects, even if the Dems ultimately defeat Trumpists, or even if Vance turns out to be a much better president, the social contract may or may not revert back to what it used to be. Which could really suck.

MaxRa

(Just quick random thoughts.)

The more that Trump is perceived as a liability for the party, the more likely they would go along with an impeachment after a scandal.

1. Reach out to Republicans in your state about your unhappiness about the recent behavior of the Trump administration.

2. Financially support investigative reporting on the Trump administration.

3. Go to protests?

4. Comment on Twitter? On Truth Social?

1. It's possibly underrated to write concise and common sense pushback in the Republican Twitter sphere?

After thinking about this post ("Utilitarians Should Accept that Some Suffering Cannot be “Offset”") some more, there's an additional, weaker claim I want to emphasize, which is: You should be very skeptical that it’s morally good to bring about worlds you wouldn’t personally want to experience all of

We can imagine a society of committed utilitarians all working to bring about a very large universe full of lots of happiness and, in an absolute sense, lots of extreme suffering. The catch is that these very utilitarians are the ones that are going to be experiencing this grand future - not future people or digital people or whomever.

Personally, they all desperately want to opt out of this - nobody wants to actually go through the extreme suffering regardless of what benefits await, and yet all work day in and day out to bring about this future anyway, condemning themselves to heaven and to hell.

Of course it’s not impossible that their stated morals are right and preferences are morally wrong, but my claim is that we should be very skeptical that the “skin in the game” answer is the wrong one.

And of course here I’m just supposing/assuming that these utilitarians have the prefere...

Media is often bought on a CPM basis (cost per thousand views). A display ad on LinkedIn, for example, might cost $30 CPM. So yeah, I think merch is probably underrated.

@MHR🔸, @Laura Duffy, @AbsurdlyMax and I have been raising money for the EA Animal Welfare Fund on Twitter and Bluesky, and today is the last day to donate!
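The CPM arithmetic is simple enough to sketch. The $30 figure is the post's example; the merch numbers in the usage line (a $20 item seen 2,000 times) are purely hypothetical, just to show how you would put merch on the same per-thousand-views scale.

```python
def ad_cost_usd(impressions: int, cpm_usd: float) -> float:
    """Cost of an ad buy priced per 1,000 impressions (CPM)."""
    return impressions / 1_000 * cpm_usd


def effective_cpm(total_cost_usd: float, impressions: int) -> float:
    """Cost per 1,000 views for anything, e.g. a piece of merch."""
    return total_cost_usd / impressions * 1_000


LINKEDIN_CPM_USD = 30  # the post's example figure, not a quoted rate card

print(ad_cost_usd(1, LINKEDIN_CPM_USD))       # cost of a single view
print(ad_cost_usd(10_000, LINKEDIN_CPM_USD))  # cost of 10,000 views
# HYPOTHETICAL merch: a $20 item that ends up being seen 2,000 times.
print(effective_cpm(20, 2_000))
```

At $30 CPM each view costs about 3 cents, so any merch that gets seen often enough per dollar spent beats that rate.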

If we raise $3k more today I will transform my room into an EA paradise complete with OWID charts across the walls, a literal bednet, a shrine, and more (and of course post all this online)! Consider donating if and only if you wouldn't use the money for a better purpose!

See some more fun discussion and such by following the replies and quote-tweets here:

Sharing https://earec.net, semantic search for the EA + rationality ecosystem. Not fully up to date, sadly (doesn't have the last month or so of content). The current version is basically a minimum viable product!

On the results page there is also an option to see EA Forum-only results, which allows you to sort by a weighted combination of karma and semantic similarity thanks to the API!
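The site's actual weighting isn't described here, so the following is only a hypothetical sketch of what "a weighted combination of karma and semantic similarity" can look like: karma is log-scaled (so one viral post doesn't swamp everything), normalized by the highest karma in the result set, and blended linearly with similarity. The `Result` type and the `weight` parameter are illustrative names, not the site's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Result:
    title: str
    karma: int
    similarity: float  # assumed already computed, e.g. cosine similarity in [0, 1]


def rank(results: list[Result], weight: float = 0.3) -> list[Result]:
    """Sort by weight * normalized log-karma + (1 - weight) * similarity."""
    # Normalize log-karma by the best post in this result set; fall back to 1.0
    # so an all-zero-karma result set doesn't divide by zero.
    max_log_karma = max(math.log1p(max(r.karma, 0)) for r in results) or 1.0

    def score(r: Result) -> float:
        karma_term = math.log1p(max(r.karma, 0)) / max_log_karma
        return weight * karma_term + (1 - weight) * r.similarity

    return sorted(results, key=score, reverse=True)


demo = [Result("High-karma post", 500, 0.70), Result("Close match", 5, 0.80)]
for w in (0.0, 0.3, 1.0):
    print(w, [r.title for r in rank(demo, weight=w)])
```

Setting `weight` to 0 reduces to pure semantic search; setting it to 1 ignores the query and sorts by karma.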

Final feature to note is that there's an option to have gpt-4o-mini "manually" read through the summary of each article on the current screen of results, which will give better evaluations of relevance to some query (e.g. "sources I can use for a project on X") than semantic similarity alone.

Still kinda janky - as I said, minimum viable product right now. Enjoy, and feedback is welcome!

How much do you trust other EA Forum users to be genuinely interested in making the world better using EA principles?

This is one thing I've updated down quite a bit over the last year.

It seems to me that relatively few self-identified EA donors mostly or entirely give to the organization/whatever that they would explicitly endorse as being the single best recipient of a marginal dollar (do others disagree?)

Of course the more important question is whether most EA-inspired dollars are given in such a way (rather than most donors). Unfortunately, I think the answer to this is "no" as well, seeing as OpenPhil continues to donate a majority of dollars to human global health and development[1] (I threw together a Claude artifact that lets you get a decent picture of how OpenPhil has funded cause areas over time and in aggregate)[2]

Edit: to clarify, it could be the case that others have object-level disagreements about what the best use of a marginal dollar is. Clearly this is sometimes the case, but it's not what I am getting at here. I am trying to get at the phenomenon where people implicitly say/reason "yes, EA principles imply th...

In your original post, you talk about explicit reasoning; in your later edit, you switch to implicit reasoning. It feels like this criticism can't be both. I also think the implicit reasoning critique just collapses into object-level disagreements, and the explicit critique just doesn't have much evidence.

The phenomenon you're looking at, for instance, is:

"I am trying to get at the phenomenon where people implicitly say/reason "yes, EA principles imply that the best thing to do would be to donate to X, but I am going to donate to Y instead."

And I think this might just be an ~empty set, compared to people having different object-level beliefs about what EA principles are or imply they should do, and also disagreeing with you on what the best thing to do would be.[1] I really don't think there are many people saying "the best thing to do is donate to X, but I will donate to Y". (References please if so - clarification in footnotes[2]) Even on OpenPhil, I think Dustin just genuinely believes worldview diversification is the best thing, so there's no contradiction there where he implies the best thing would be to do X but in practice does Y.

I think causing this to 'update downwa...

Thanks and I think your second footnote makes an excellent distinction that I failed to get across well in my post.

I do think it’s at least directionally an “EA principle” that “best” and “right” should go together, although of course there’s plenty of room for naive first-order calculation critiques, heuristics/intuitions/norms that might push against some less nuanced understanding of “best”.

I still think there’s a useful conceptual distinction to be made between these terms, but maybe those ancillary (for lack of a better word) considerations relevant to what one thinks is the “best” use of money blur the line enough to make it too difficult to distinguish these in practice.

Re: your last paragraph, I want to emphasize that my dispute is with the terms “using EA principles”. I have no doubt whatsoever about the first part, “genuinely interested in making the world better”

JWS 🔸

Thanks Aaron, I think your responses to me and Jason do clear things up. I still think the framing of it is a bit off though:

* I accept that you didn't intend your framing to be insulting to others, but using "updating down" about the "genuine interest" of others read as hurtful on my first read. As a (relative to EA) high contextualiser it's the thing that stood out for me, so I'm glad you endorse that the 'genuine interest' part isn't what you're focusing on, and you could probably reframe your critique without it.

* My current understanding of your position is that it is actually: "I've come to realise over the last year that many people in EA aren't directing their marginal dollars/resources to the efforts that I see as most cost-effective, since I also think those are the efforts that EA principles imply are the most effective."[1] To me, this claim is about the object-level disagreement on what EA principles imply.

* However, in your response to Jason you say “it’s possible I’m mistaken over the degree to which ‘direct resources to the place you think needs them most’ is a consensus-EA principle”, which switches back to people not being EA? Or not endorsing this view? But you've yet to provide any evidence that people aren't doing this, as opposed to just disagreeing about what those places are.[2]

[1]

Secondary interpretation is: "EA principles imply one should make a quantitative point estimate of the good of all your relevant moral actions, and then act on the leading option in a 'shut-up-and-calculate' way. I now believe many fewer actors in the EA space actually do this than I did last year"

[2]

For example, in Ariel's piece, Emily from OpenPhil implies that they have much lower moral weights on animal life than Rethink does, not that they don't endorse doing 'the most good' (I think this is separable from OP's commitment to worldview diversification).

Jason

It seems a bit harsh to treat other user-donors' disagreement with your views on concentrating funding on their top-choice org (or even cause area) as significant evidence against the proposition that they are "genuinely interested in making the world better using EA principles."

I think a world in which everyone did this would have some significant drawbacks. While I understand how that approach would make sense through an individual lens, and am open to the idea that people should concentrate their giving more, I'd submit that we are trying to do the most good collectively. For instance: org funding is already too concentrated on a too-small number of donors. If (say) each EA is donating to an average of 5 orgs, then a norm of giving 100% to a single org would decrease the number of donors by 80%. That would impose significant risks on orgs even if their total funding level was not changed.
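The 80% figure follows from counting donor-to-org relationships: if every donor goes from supporting 5 orgs to supporting 1, the total number of relationships falls by 4/5 regardless of how many donors or orgs there are. A minimal sketch with hypothetical community sizes:

```python
def avg_donors_per_org(num_donors: int, orgs_per_donor: int, num_orgs: int) -> float:
    """Average number of distinct donors per org, assuming each donor supports
    `orgs_per_donor` orgs and those links are spread over `num_orgs` orgs."""
    return num_donors * orgs_per_donor / num_orgs


# HYPOTHETICAL community size, for illustration only.
spread = avg_donors_per_org(num_donors=1_000, orgs_per_donor=5, num_orgs=100)
concentrated = avg_donors_per_org(num_donors=1_000, orgs_per_donor=1, num_orgs=100)
print(spread, concentrated, 1 - concentrated / spread)
```

Total dollars can stay fixed in both scenarios; what shrinks is how many independent funders each org can fall back on.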

It's also plausible that the number of first-place votes an org (or even a cause area) would get isn't a super-strong reflection of overall community sentiment. If a wide range of people identified Org X as in their top 10%, then that likely points to some collective wisdom about Org X's cost-effectiveness even if no one has them at number 1. Moreover, spreading the wealth can be seen as deferring to broader community views to some extent -- which could be beneficial insofar as one found little reason to believe that wealthier community members are better at deciding where donation dollars should go than the community's collective wisdom. Thus, there are reasons -- other than a lack of genuine interest in EA principles by donors -- that donors might reasonably choose to act in accordance with a practice of donation spreading.
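The gap between first-place votes and broad support is easy to see with a toy example: count how often each org is ranked first versus how often it appears anywhere in a donor's top k. The rankings below are hypothetical.

```python
from collections import Counter


def first_place_counts(rankings: list[list[str]]) -> Counter:
    """How many donors rank each org first (plurality-style count)."""
    return Counter(ranking[0] for ranking in rankings)


def top_k_counts(rankings: list[list[str]], k: int) -> Counter:
    """How many donors place each org anywhere in their top k."""
    return Counter(org for ranking in rankings for org in ranking[:k])


# HYPOTHETICAL donor rankings: nobody puts X first, everybody has it in their top 3.
rankings = [
    ["A", "X", "B"],
    ["B", "X", "C"],
    ["C", "X", "A"],
    ["A", "B", "X"],
]
print(first_place_counts(rankings)["X"], top_k_counts(rankings, k=3).most_common(1))
```

Here Org X gets zero first-place votes yet is the only org in every donor's top three, so a give-only-to-your-top-pick norm would leave it unfunded.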

Aaron Bergman

Thanks, it’s possible I’m mistaken over the degree to which “direct resources to the place you think needs them most” is a consensus-EA principle.

Also, I recognize that "genuinely interested in making the world better using EA principles” is implicitly value-laden, and to be clear I do wish it was more the case, but I also genuinely intend my claim to be an observation that might have pessimistic implications depending on other beliefs people may have rather than an insult or anything like it, if that makes any sense.

Love this quick take, and would appreciate more similar short fun/funny takes to lift the mood :D.

Aaron Bergman

Boy do I have a website for you (twitter.com)!

(I unironically like twitter for the lower stakes and less insanely high implicit standards)

On mobile now so can’t add image but https://x.com/aaronbergman18/status/1782164275731001368?s=46

Interesting lawsuit; thanks for sharing! A few hot (unresearched, and very tentative) takes, mostly on the Musk contract/fraud type claims rather than the unfair-competition type claims related to x.ai:

1. One of the overarching questions to consider when reading any lawsuit is that of remedy. For instance, the classic remedy for breach of contract is money damages . . . and the potential money damages here don't look that extensive relative to OpenAI's money burn.

2. Broader "equitable" remedies are sometimes available, but they are more discretionary and there may be some significant barriers to them here. Specifically, a court would need to consider the effects of any equitable relief on third parties who haven't done anything wrongful (like the bulk of OpenAI employees, or investors who weren't part of an alleged conspiracy, etc.), and consider whether Musk unreasonably delayed bringing this lawsuit (especially in light of those third-party interests). On a hot take, I am inclined to think these factors would weigh powerfully against certain types of equitable remedies.

1. Stated more colloquially, the adverse effects on third parties and the delay ("laches") would favor a conclusion that Musk will have to be content with money damages, even if they fall short of giving him full relief.

2. Third-party interests and delay may be less of a barrier to equitable relief against Altman himself.

3. Musk is an extremely sophisticated party capable of bargaining for what he wanted out of his grants (e.g., a board seat), and he's unlikely to get the same sort of solicitude on an implied contract theory that an ordinary individual might. For example, I think it was likely foreseeable in 2015 to January 2017 -- when he gave the bulk of the funds in question -- that pursuing AGI could be crazy expensive and might require more commercial relationships than your average non-profit would ever consider. So I'd be hesitant to infer much in the way of implied-contractual

This is not surprising to me given the different historical funding situations in the relevant cause areas, the sense that animal-welfare and global-health are not talent-constrained as much as funding-constrained, and the clearer presence of strong orgs in those areas with funding gaps.

For instance:

* there are 15 references to "upskill" (or variants) in the list of microgrants, and it's often hard to justify an upskilling grant in animal welfare given the funding gaps in good, shovel-ready animal-welfare projects.

* Likewise, 10 references to "study," 12 to "development," 87 to "research" (although this can have many meanings), 17 for variants of "fellow," etc.

* There are 21 references to "part-time," and relatively small, short blocks of time may align better with community building and small research projects than with (e.g.) running a corporate campaign
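Counts like these can be reproduced with a case-insensitive prefix match over the grant descriptions. This is a minimal sketch under two assumptions: that the counts are occurrences (not distinct grants), and that a "variant" means the stem followed by any word characters. The descriptions below are hypothetical; the real microgrant list isn't reproduced here.

```python
import re


def count_variants(descriptions: list[str], stem: str) -> int:
    """Count occurrences of `stem` followed by any word characters,
    case-insensitively (e.g. "upskill" also matches "upskilling")."""
    pattern = re.compile(r"\b" + re.escape(stem) + r"\w*", re.IGNORECASE)
    return sum(len(pattern.findall(text)) for text in descriptions)


# HYPOTHETICAL grant descriptions, for illustration only.
grants = [
    "Six months of upskilling in ML for alignment research",
    "Part-time research on compute governance",
    "Research fellowship stipend",
    "Funding to study for a policy fellowship",
]
for stem in ("upskill", "research", "fellow", "part-time", "study"):
    print(stem, count_variants(grants, stem))
```

A prefix match is crude: "study" won't catch "studies", and "research" can mean many things, which is the caveat the bullet above already notes.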

Charles Dillon 🔸

Seems pretty unsurprising - the animal welfare fund is mostly giving to orgs, while the others give to small groups or individuals for upskilling/outreach frequently.

Michael St Jules 🔸

I think the differences between the LTFF and AWF are largely explained by differences in salary expectations/standards between the cause areas. There are small groups and individuals getting money from the AWF, and they tend to get much less for similar duration projects. Salaries in effective animal advocacy are pretty consistently substantially lower than in AI safety (and software/ML, which AI safety employers and grantmakers might try to compete with somewhat), with some exceptions. This is true even for work in high-income countries like the US and the UK. And, of course, salary expectations are even lower in low- and middle-income countries, which are an area of focus of the AWF (within neglected regions). Plus, many AI safety folks are in the Bay Area specifically, which is pretty expensive (although animal advocates in London also aren't paid as much).

Aaron Bergman

Yeah but my (implicit, should have made explicit lol) question is “why this is the case?”

Like at a high level it’s not obvious that animal welfare as a cause/field should make less use of smaller projects than the others. I can imagine structural explanations (eg older field -> organizations are better developed) but they’d all be post hoc.

Charles Dillon 🔸

I think getting enough people interested in working on animal welfare has not usually been the bottleneck, relative to money to directly deploy on projects, which tend to be larger.

Aaron Bergman

This doesn't obviously point in the direction of relatively and absolutely fewer small grants, though. Like naively it would shrink and/or shift the distribution to the left - not reshape it.

Charles Dillon 🔸

I don't understand why you think this is the case. If you think of the "distribution of grants given" as a sum of multiple different distributions (e.g. upskilling, events, and funding programmes) of significantly varying importance across cause areas, then more or less dropping the first two would give your overall distribution a very different shape.
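The mixture point can be shown with a toy example: treat a fund's grants as the union of several programme-level components and see what happens to the share of small grants when two components drop out. All grant sizes below are hypothetical, not real fund data.

```python
# HYPOTHETICAL grant sizes (USD) per component; not real fund data.
components = {
    "upskilling": [5_000, 8_000, 12_000, 15_000],
    "events": [3_000, 10_000],
    "org_funding": [80_000, 150_000],
}


def small_grant_share(components: dict[str, list[int]], keep: set[str],
                      threshold: int = 20_000) -> float:
    """Share of grants below `threshold` in the mixture of the kept components."""
    grants = [size for name, sizes in components.items() if name in keep
              for size in sizes]
    return sum(size < threshold for size in grants) / len(grants)


all_programmes = small_grant_share(components, keep=set(components))
orgs_only = small_grant_share(components, keep={"org_funding"})
print(all_programmes, orgs_only)
```

Dropping the upskilling and events components doesn't shift the overall distribution left or shrink it; it removes the small-grant mode entirely, so the shape changes.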

Aaron Bergman

Yeah you're right, not sure what I missed on the first read

In Q1 we will launch a GPT builder revenue program. As a first step, US builders will be paid based on user engagement with their GPTs. We'll provide details on the criteria for payments as we get closer.

Friends everywhere: Call your senators and tell them to vote no on HR 22 (...)

Friends everywhere: If you’d like to receive personalized guidance on what opportunities are best suited to your skills or geographic area, we are excited to announce that our personalized recommendation form has been reopened! Fill it in here!

Bolding is mine to highlight the 80k-like opportunity. I'm abusing the block quote a bit by taking out most of the text, so check out the actual post if interested!

There's also a volunteering opportunities page advertising "A short list of high-impact election opportunities, continuously updated" which links to a notion page that's currently down.

I'm pretty happy with how this "Where should I donate, under my values?" Manifold market has been turning out. Of course all the usual caveats pertaining to basically-fake "prediction" markets apply, but given the selection effects of who spends manna on an esoteric market like this I put a non-trivial weight into the (live) outcomes.

I guess I'd encourage people with a bit more money to donate to do something similar (or I guess defer, if you think I'm right about ethics!), if just as one addition to your portfolio of donation-informing considerations.

Thanks! Let me write them as a loss function in python (ha)

For real though:

Some flavor of hedonic utilitarianism

I guess I should say I have moral uncertainty (which I endorse as a thing) but eh I'm pretty convinced

Longtermism as explicitly defined is true

Don't necessarily endorse the cluster of beliefs that tend to come along for the ride though

"Suffering focused total utilitarian" is the annoying phrase I made up for myself

I think many (most?) self-described total utilitarians give too little consideration/weight to suffering, and I don't think it really matters (if there's a fact of the matter) whether this is because of empirical or moral beliefs

Maybe my most substantive deviation from the default TU package is the following (defended here):

"Under a form of utilitarianism that places happiness and suffering on the same moral axis and allows that the former can be traded off against the latter, one might nevertheless conclude that some instantiations of suffering cannot be offset or justified by even an arbitrarily large amount of wellbeing."

Moral realism for basically all the reasons described by Rawlette on 80k but I don't think this really matters after conditioning on normati

According to Kevin Esvelt on the recent 80,000 Hours podcast (excellent btw, mostly on biosecurity), eliminating the New World screwworm could be an important farmed animal welfare (infects livestock), global health (infects humans), development (hurts economies), and science/innovation intervention, and most notably a quasi-longtermist wild animal suffering intervention.

Moreover, if you think there’s a non-trivial chance of human disempowerment, societal collapse, or human extinction in the next 10 years, this would be important to do ASAP because we may not be able to later.

From the episode:

Kevin Esvelt:

...

But from an animal wellbeing perspective, in addition to the human development, the typical lifetime of an insect species is several million years. So 10⁶ years times 10⁹ hosts per year means an expected 10¹⁵ mammals and birds devoured alive by flesh-eating maggots. For comparison, if we continue factory farming for another 100 years, that would be 10¹³ broiler hens and pigs. So unless it’s 100 times worse to be a factory-farmed broiler hen than it is to be devoured alive by flesh-eating maggots, then when you integrate over the future, it is more important for animal we
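The orders of magnitude in the quote multiply as follows. This only checks the transcript's arithmetic using its own rounded figures; it is not an independent estimate.

```python
# Orders of magnitude as quoted in the transcript above.
species_lifetime_years = 10**6          # "several million years"
hosts_per_year = 10**9                  # mammals and birds infested per year
factory_farmed_next_century = 10**13    # broiler hens and pigs over 100 more years

expected_hosts = species_lifetime_years * hosts_per_year
ratio = expected_hosts // factory_farmed_next_century
print(f"{expected_hosts:.0e} hosts; {ratio}x the factory-farming figure")
```

The factor of 100 is where the "unless it's 100 times worse" threshold in the quote comes from.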

I didn't have a good response to @DanielFilan, and I'm pretty inclined to defer to orgs like CEA to make decisions about how to use their own scarce resources.

At least for EA Global Boston 2024 (which ended yesterday), there was the option to pay a "cost covering" ticket fee (of what I'm told is $1000).[1]

All this is to say that I am now more confident (although still <80%) that marginal rejected applicants who are willing to pay their cost-covering fee would be good to admit.[2]

In part this stems from an only semi-legible background stance that, on the whole, less impressive-seeming people have more ~potential~ and more to offer than I think "elite EA" (which would include those running EAG admissions) tend to think. And this, in turn, has a lot to do with the endogeneity/path dependence of what I'd hastily summarize as "EA involvement."

That is, many (most?) people need a break-in point to move from something like "basically convinced that EA is good, interested in the ideas and consuming content, maybe donating 10%" to anything more ambitious.

For some, that comes in the form of going to an elite college with a vibrant EA group/communi...

[This comment is no longer endorsed by its author]

harfe

I am under the impression that EAGx can be such a break-in point, and has lower admission standards than EAG. In particular, there is EAGxVirtual (Applications are open!).

Has the rejected person you are thinking of applied to any EAGx conference?


NickLaing

I agree. One minor issue with your "low bar" is giving 10 percent. Giving this much is extremely uncommon to any cause, so for me it might be more of a "medium bar" ;)


Jason

Would this (generally) be a one-time deal? The idea that some people would benefit from a bolus of EA as a "break-in point" or "on-ramp" seems plausible, and willingness to pay a hefty admission fee / other expenses would certainly have a signaling value.[1] However, the argument probably gets weaker after the first marginal admission (unless the marginal applicant is a lot closer to the line on the second time around).

Maybe allowing only one marginal admission per person absent special circumstances would mitigate concerns about "diluting" the event.

1. ^

I recognize the downsides of a pay-extra-to-attend approach as far as perceived fairness, equity, accessibility to people from diverse backgrounds, and so on. That would be a tradeoff to consider.

Offer subject to arbitrarily stopping at some point (not sure exactly how many I'm willing to do)

Give me ChatGPT Deep Research queries and I'll run them. My asks are that:

You write out exactly what you want the prompt to be so I can just copy and paste something in

Feel free to request a specific model (I think the options are o1, o1-pro, o3-mini, and o3-mini-high) but be ok with me downgrading to o3-mini

Be cool with me very hastily answering the inevitable set of follow-up questions that always get asked (seems unavoidable for whatever reason). I might say something like "all details are specified above; please use your best judgement"

Note that the UI is atrocious. You're not using o1/o3-mini/o1-pro etc. It's all the same model, a variant of o3, and the model in the bar at the top is completely irrelevant once you click the deep research button. I am very confused why they did it like this https://openai.com/index/introducing-deep-research/

Random sorta gimmicky AI safety community building idea: tabling at universities but with a couple of laptops signed into Claude Pro with different accounts. Encourage students (and profs) to try giving it some hard question from e.g. a problem set and see how it performs. Ideally have a big monitor for onlookers to easily see.

Most college students are probably still using ChatGPT-3.5, if they use LLMs at all. There’s a big delta now between that and the frontier.

cool, but I don't think a year is right. I would have said 3 years.


Aaron Bergman

I think the proxy question is “after what period of time is it reasonable to assume that any work building or expanding on the post would have been published?” and my intuition here is about 1 year, but I would be interested in hearing others' thoughts


Larks

Hopefully people will be sparing in applying it to their own recent posts!


Aaron Bergman

Eh I'm not actually sure how bad this would be. Of course it could be overdone, but a post's author is its obvious best advocate, and a simple "I think this deserves more attention" vote doesn't seem necessarily illegitimate to me

This post is half object level, half experiment with “semicoherent audio monologue ramble → prose” AI (presumably GPT-3.5/4 based) program audiopen.ai.

In the interest of the latter objective, I’m including 3 mostly-redundant subsections:

A ’final’ mostly-AI written text, edited and slightly expanded just enough so that I endorse it in full (though recognize it’s not amazing or close to optimal)

The raw AI output

The raw transcript

1) Dubious asymmetry argument in WWOTF

In Chapter 9 of his book, What We Owe the Future, Will MacAskill argues that the future holds positive moral value under a total utilitarian perspective. He posits that people generally use resources to achieve what they want - either for themselves or for others - and thus good outcomes are easily explained as the natural consequence of agents deploying resources for their goals. Conversely, bad outcomes tend to be side effects of pursuing other goals. While malevolence and sociopathy do exist, they are empirically rare.

MacAskill argues that in a future with continued economic growth and no existential risk, we will likely direct more resources towards doing good things due to self-interest and increase...

I think there’s a case to be made for exploring the wide range of mediocre outcomes the world could become.

Recent history would indicate that things are getting better faster though. I think MacAskill’s bias towards a range of positive future outcomes is justified, but I think you agree too.

Maybe you could turn this into a call for more research into the causes of mediocre value lock-in. Like why have we had periods of growth and collapse, why do some regions regress, what tools can society use to protect against sinusoidal growth rates.

1) How often (in absolute and relative terms) a given forum topic appears with another given topic

2) Visualizing the popularity of various tags

An updated Forum scrape including the full text and attributes of 10k-ish posts as of Christmas, '22

See the data without full text in Google Sheets here

Post explaining version 1.0 from a few months back

From the data in no. 2, a few effortposts that never garnered an accordant amount of attention (qualitatively filtered from posts with (1) long read times, (2) modest positive karma, and (3) not a ton of comments).
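For item 1) above, counting how often one topic appears with another reduces to counting tag pairs per post. A minimal sketch, assuming each post has already been reduced to a set of tag names; the sample posts and tags are made up for illustration and are not from the actual scrape.

```python
from collections import Counter
from itertools import combinations


def tag_cooccurrence(posts):
    """Return (pair_counts, tag_counts) for a list of tag sets.

    pair_counts[(a, b)] is the number of posts tagged with both a and b;
    pairs are stored in sorted order so (a, b) and (b, a) are merged.
    """
    pair_counts = Counter()
    tag_counts = Counter()
    for tags in posts:
        unique = sorted(set(tags))
        tag_counts.update(unique)
        pair_counts.update(combinations(unique, 2))
    return pair_counts, tag_counts


def relative_cooccurrence(pair_counts, tag_counts, a, b):
    """Share of posts tagged `a` that are also tagged `b` (a conditional frequency)."""
    pair = tuple(sorted((a, b)))
    if tag_counts[a] == 0:
        return 0.0
    return pair_counts[pair] / tag_counts[a]


# Hypothetical example data.
posts = [
    {"AI safety", "Forecasting"},
    {"AI safety", "Community"},
    {"Animal welfare"},
    {"AI safety", "Forecasting", "Community"},
]
pairs, counts = tag_cooccurrence(posts)
print(pairs[("AI safety", "Forecasting")])                               # absolute
print(relative_cooccurrence(pairs, counts, "AI safety", "Forecasting"))  # relative
```

Note that the relative measure is asymmetric: the share of "AI safety" posts that are also tagged "Forecasting" differs from the reverse, which is usually what you want when one tag is much more common than the other.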

A (potential) issue with MacAskill's presentation of moral uncertainty

Not able to write a real post about this atm, though I think it deserves one.

MacAskill makes a similar point in WWOTF, but IMO the best and most decision-relevant quote comes from his second appearance on the 80k podcast:

There are possible views in which you should give more weight to suffering...I think we should take that into account too, but then what happens? You end up with kind of a mix between the two, supposing you were 50/50 between classical utilitarian view and just strict negative utilitarian view. Then I think on the natural way of making the comparison between the two views, you give suffering twice as much weight as you otherwise would.
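To spell out the arithmetic behind "twice as much weight": suppose (my assumption, not MacAskill's wording) that an outcome contains total happiness H and total suffering S, that the classical view values it at H - S and the strict negative view at -S, and that the two values are averaged on a common scale with 50/50 credence.

```latex
\begin{aligned}
V_{\text{classical}} &= H - S, \qquad V_{\text{negative}} = -S \\
\mathbb{E}[V] &= \tfrac{1}{2}\,(H - S) + \tfrac{1}{2}\,(-S) = \tfrac{1}{2}H - S = \tfrac{1}{2}\,(H - 2S)
\end{aligned}
```

Since the positive factor 1/2 doesn't change rankings, this ranks outcomes by H - 2S, i.e. suffering counts double. The averaging step, which assumes the two views' units are directly comparable, is where the comparison does its work.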

I don't think the second bolded sentence follows in any objective or natural manner from the first. Rather, this reasoning takes a distinctly total utilitarian meta-level perspective, summing the various signs of utility and then implicitly considering them under total utilitarianism.

Even granting that the moral arithmetic is appropriate and correct, it's not at all clear what to do once the 2:1 accounting is complete. MacAskill's suffering-focused ...

The recent 80k podcast on the contingency of abolition got me wondering what, if anything, the fact of slavery's abolition says about the ex ante probability of abolition - or more generally, what one observation of a binary random variable X says about p, where P(X = 1) = p.

Turns out there is an answer (!), and it's found starting in paragraph 3 of subsection 1 of section 3 of the Binomial distribution Wikipedia page:

The uniform prior case just generalizes to Laplace's Law of Succession, right?


Aaron Bergman

In terms of result, yeah it does, but I sorta half-intentionally left that out because I don't actually think LLS is true as it seems to often be stated.

Why the strikethrough: after writing the shortform, I get that e.g., "if we know nothing more about them" and "in the absence of additional information" mean "conditional on a uniform prior," but I didn't get that before. And Wikipedia's explanation of the rule,

seems both unconvincing as stated and, if assumed to be true, doesn't depend on that crucial assumption
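For the uniform-prior case discussed in this thread, the standard Beta-Binomial result is that a Beta(1, 1) (uniform) prior on p, updated on s successes in n trials, gives a Beta(s + 1, n - s + 1) posterior with mean (s + 1)/(n + 2), which is the rule of succession. A minimal sketch with exact fractions; whether the uniform prior is appropriate is exactly what the thread disputes, so the prior parameters are left adjustable.

```python
from fractions import Fraction


def beta_posterior(successes: int, trials: int, prior_a: int = 1, prior_b: int = 1):
    """Conjugate update of a Beta(prior_a, prior_b) prior on p after
    observing `successes` out of `trials` Bernoulli(p) draws."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return prior_a + successes, prior_b + trials - successes


def posterior_mean(a: int, b: int) -> Fraction:
    """Mean of a Beta(a, b) distribution."""
    return Fraction(a, a + b)


# One observation, X = 1, under the uniform prior Beta(1, 1).
a, b = beta_posterior(successes=1, trials=1)
print(a, b, posterior_mean(a, b))
```

With a single observed success the posterior is Beta(2, 1), whose mean is 2/3; a different prior (e.g. `prior_a=prior_b=0.5`, if you switch to `Fraction` inputs) gives a different answer from the same single observation.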

Hypothesis: from the perspective of currently living humans and those who will be born in the current <4% growth regime only (i.e. pre-AGI takeoff or I guess stagnation), donations currently earmarked for large-scale GHW, GiveWell-type interventions should instead be invested (maybe in tech/AI-correlated securities) with the intent of being deployed for the same general category of beneficiaries in <25 (maybe even <1) years.

The arguments are similar to those for old-school "patient philanthropy" except now in particular seems exceptionally uncerta...

I'm skeptical of this take. If you think sufficiently transformative + aligned AI is likely in the next <25 years, then from the perspective of currently living humans and those who will be born in the current <4% growth regime, surviving until transformative AI arrives would be a huge priority. Under that view, you should aim to deploy resources as fast as possible to lifesaving interventions rather than sitting on them.

Idea/suggestion: an "Evergreen" tag, for old (6 months? 1 year? 3 years?) posts (comments?), to indicate that they're still worth reading (to me, ideally for their intended value/arguments rather than as instructive historical/cultural artifacts)

I think we want someone to push them back into the discussion.

Or you know, have editable wiki versions of them.


quinn

yeah some posts are sufficiently 1. good/useful, and 2. generic/not overly invested in one particular author's voice or particularities that they make more sense as a wiki entry than a "blog"-adjacent post.

I tried making a shortform -> Twitter bot (ie tweet each new top level ~quick take~) and long story short it stopped working and wasn't great to begin with.

I feel like this is the kind of thing someone else might be able to do relatively easily. If so, I and I think much of EA Twitter would appreciate it very much! In case it's helpful for this, a quick takes RSS feed is at https://ea.greaterwrong.com/shortform?format=rss
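For anyone picking this up, the parsing half is small with Python's standard library. A rough sketch, assuming the feed is ordinary RSS 2.0 with `<item>`, `<title>`, and `<link>` elements (I have not checked the exact structure of the GreaterWrong feed); fetching the feed and posting to Twitter/X are deliberately left out, and the sample XML is made up.

```python
import xml.etree.ElementTree as ET

TWEET_LIMIT = 280


def parse_items(rss_xml: str):
    """Yield (title, link) pairs from an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        yield title, link


def format_tweet(title: str, link: str, limit: int = TWEET_LIMIT) -> str:
    """Title plus link, truncating the title so the whole string fits in `limit`
    characters. (Twitter/X counts links as a fixed length; this ignores that.)"""
    budget = limit - len(link) - 1  # one space between title and link
    if len(title) > budget:
        title = title[: max(budget - 1, 0)] + "…"
    return f"{title} {link}"


def new_items(items, seen_links):
    """Keep only items whose link has not been posted before."""
    return [(t, l) for t, l in items if l not in seen_links]


SAMPLE = """<rss version="2.0"><channel>
<item><title>Example quick take</title><link>https://example.org/a</link></item>
<item><title>Another one</title><link>https://example.org/b</link></item>
</channel></rss>"""

items = list(parse_items(SAMPLE))
for title, link in new_items(items, seen_links={"https://example.org/a"}):
    print(format_tweet(title, link))
```

Keeping a persistent set of already-posted links (rather than relying on timestamps) is the simplest way to avoid double-posting when a polling run fails partway.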

Note: this sounds like it was written by chatGPT because it basically was (from a recorded ramble)🤷

I believe the Forum could benefit from a Shorterform page, as the current Shortform forum, intended to be a more casual and relaxed alternative to main posts, still seems to maintain high standards. This is likely due to the impressive competence of contributors who often submit detailed and well-thought-out content. While some entries are just a few well-written sentences, others resemble blog posts in length and depth.

That^ is too big for Google Sheets, so here's the same thing just without a breakdown by country that you should be able to open easily if you want to take a look.

Basically the UN data generally used for tracking/analyzing the amount of fish and other marine life captured/farmed and killed only tracks the total weight captured for a given country-year-species (or group of species).

I had chatGPT-4 provide estimated lo...
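Since the UN data only records weight, turning a country-year-species row into a number of animals needs an assumed mean individual weight; presumably something like a low and a high estimate, which gives a range. A minimal sketch of that conversion; the country, species, tonnage, and weights below are placeholders, not the ChatGPT-4 estimates or the UN figures.

```python
from dataclasses import dataclass


@dataclass
class CatchRecord:
    country: str
    year: int
    species: str
    tonnes: float  # total live weight captured, as reported


def individuals_range(record: CatchRecord, low_weight_kg: float, high_weight_kg: float):
    """Return (low, high) estimates of the number of individuals.

    A heavier assumed mean weight implies fewer animals, so the high
    weight gives the low count and vice versa.
    """
    if not 0 < low_weight_kg <= high_weight_kg:
        raise ValueError("need 0 < low_weight_kg <= high_weight_kg")
    kg = record.tonnes * 1000
    return kg / high_weight_kg, kg / low_weight_kg


# Placeholder numbers for illustration only.
rec = CatchRecord(country="Examplestan", year=2020, species="Example fish", tonnes=500.0)
low, high = individuals_range(rec, low_weight_kg=0.05, high_weight_kg=0.2)
print(f"{low:,.0f} to {high:,.0f} individuals")
```

Because counts scale with the inverse of the assumed weight, small species with uncertain weights dominate the width of the range.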

WWOTF: what did the publisher cut? [answer: nothing]

Contextual note: this post is essentially a null result. It seemed inappropriate both as a top-level post and as an abandoned Google Doc, so I’ve decided to put out the key bits (i.e., everything below) as Shortform. Feel free to comment/message me if you think that was the wrong call!

Actual post

On his recent appearance on the 80,000 Hours Podcast, Will MacAskill noted that Doing Good Better was significantly influenced by the book’s publisher:[1]

There's a ton there, but one anecdote from yesterday: referred me to this $5 iOS desktop app which (among other more reasonable uses) made me this full quality, fully intra-linked >3600 page PDF of (almost) every file/site linked to by every file/site linked to from Tomasik's homepage (works best with old-timey simpler sites like that)

For 2.a the closest I found is https://forum.effectivealtruism.org/allPosts?sortedBy=topAdjusted&timeframe=yearly&filter=all; there you can see the inflation-adjusted top posts and shortforms by year.

For 1 it's probably best to post in the EA Forum feature suggestion thread

Note: inspired by the FTX+Bostrom fiascos and associated discourse. May (hopefully) develop into longform by explicitly connecting this taxonomy to those recent events (but my base rate of completing actual posts cautions humility)

Event as evidence

The default: normal old Bayesian evidence

The realm of "updates," "priors," and "credences"

Pseudo-definition: Induces [1] a change to or within a model (of whatever the model's user is trying to understand)

Corresponds to models that are (as is often assumed):

Are you factoring in that CEA pays a few hundred bucks per attendee? I'd have a high-ish bar to pay that much for someone to go to a conference myself. Altho I don't have a good sense of what the marginal attendee/rejectee looks like.

Ok so things that get posted in the Shortform tab also appear in your (my) shortform post, which can be edited to not have the title "___'s shortform" and also has a real post body that is empty by default, but you can just put stuff in.

There's also the usual "frontpage" checkbox, so I assume an individual's own shortform page can appear alongside normal posts(?).

New interview with Will MacAskill by @MHR🔸

Almost a year after the 2024 holiday season Twitter fundraiser, we managed to score a very exciting "Mystery EA Guest" to interview: Will MacAskill himself.

Summary, highlights, and transcript below video!

Summary and Highlights

(summary AI-generated)

Effective Altruism has changed significantly since its inception. With the arrival of "mega donors" and major institutional changes, does individual effective giving still matter in 2025?

Will MacAskill—co-founder of the Centre for Effective Altruism and Giving What We Can, and currently a senior research fellow at the Forethought Institute—says the answer is a resounding yes. In fact, he argues that despite the resources currently available, individuals are "systematically not ambitious enough" relative to the scale of the problems the world faces.

In this special interview for the 2025 EA Twitter Fundraiser, Will joins host Matt to discuss the evolution of the movement’s focus. They discuss why animal we...

From Reuters:

I sincerely hope OpenPhil (or Effective Ventures, or both - I don't know the minutiae here) sues over this. Read the reasoning for and details of the $30M grant here.

The case for a legal challenge seems hugely overdetermined to me:

I know OpenPhil has a pretty hands-off ethos and vibe; this shouldn't stop them from acting with integrity when hands-on legal action is clearly warranted

Good Ventures rather than Effective Ventures, no?

What's the legal case for a lawsuit?

There's a broader point here about the takeover of a non-profit organization by financial interests that I'd really like to see fought back against.

You could also add:

"Negotiate safety conditions as part of a settlement"

I understand that OpenAI's financial situation is not very good [edit: this may not be a high-quality source], and if they aren't able to convert to a for-profit, things will become even worse:

https://www.wheresyoured.at/oai-business/

It's conceivable that OpenPhil suing OpenAI could buy us 10+ years of AI timeline, if the following dominoes fall:

- OpenPhil sues, and OpenAI fails to convert to a for-profit.

- As a result, OpenAI struggles to raise additional capital from investors.

- Losing $4-5 billion a year with little additional funding in sight, OpenAI is forced to make some tough financial decisions. They turn off the free version of ChatGPT, stop training new models, and cut salaries for employees. They're able to eke out some profit, but not much profit, because their product is not highly differentiated from other AI off...

I made a tool to play around with how alternatives to the 10% GWWC Pledge default norm might change:

How much total donation revenue gets collected
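The core computation behind "how much total donation revenue gets collected" is presumably: apply a pledge schedule to each pledger's income and sum. A minimal sketch, assuming a hypothetical list of incomes and two example schedules (a flat 10% and a made-up progressive schedule applied like marginal tax brackets); none of these numbers come from the tool or from GWWC data.

```python
from typing import Callable, Iterable, List, Tuple

Schedule = Callable[[float], float]  # income -> pledged donation


def flat_rate(rate: float) -> Schedule:
    """Donate a fixed fraction of all income."""
    return lambda income: rate * income


def marginal_brackets(brackets: List[Tuple[float, float]]) -> Schedule:
    """Progressive schedule: each (threshold, rate) applies to income above the
    threshold up to the next threshold. Thresholds must be ascending."""
    def pledge(income: float) -> float:
        total = 0.0
        for i, (threshold, rate) in enumerate(brackets):
            upper = brackets[i + 1][0] if i + 1 < len(brackets) else float("inf")
            if income > threshold:
                total += rate * (min(income, upper) - threshold)
        return total
    return pledge


def total_revenue(incomes: Iterable[float], schedule: Schedule) -> float:
    return sum(schedule(x) for x in incomes)


# Hypothetical incomes and schedules.
incomes = [30_000, 60_000, 90_000, 250_000]
flat = flat_rate(0.10)
progressive = marginal_brackets([(0, 0.0), (50_000, 0.10), (100_000, 0.25)])
print(total_revenue(incomes, flat), total_revenue(incomes, progressive))
```

With a skewed income distribution, the totals are driven mostly by the top of the distribution, so the comparison between schedules is very sensitive to what distribution you assume for pledgers.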

There's some discussion at this Tweet of mine

Some folks pushed back a bit, citing the following:

I don't find these points very convincing...

Some points of my own

- To quote myself: "I think it would be news to most GWWC pledgers that actually the main point is some indirect thing like building habits rather than getting money to effective orgs"

- If the distribution of GWWC pledgers resembles the US distribution of household incomes and then cut implied donations in half to account for individual vs household discrepancy (obviou...

Wanted to bring this comment thread out to ask if there's a good list of AI safety papers/blog posts/urls anywhere for this?

(I think local digital storage in many locations probably makes more sense than paper but also why not both)

Mostly for fun I vibecoded an API to easily parse EA Forum posts as markdown with full comment details based on post URL (I think helpful mostly for complex/nested comment sections where basic copy and paste doesn't work great)

I have tested it on about three posts and every possible disclaimer applies
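The part that basic copy-and-paste gets wrong is the nesting, and rendering a comment tree to markdown is a short recursion. This is not the API described above; it is a sketch that assumes comments have already been fetched as a flat list of dicts with hypothetical `id`, `parent_id`, `author`, and `body` fields.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def build_children(comments: List[dict]) -> Dict[Optional[str], List[dict]]:
    """Group comments by parent id; top-level comments have parent_id None."""
    children = defaultdict(list)
    for c in comments:
        children[c.get("parent_id")].append(c)
    return children


def render(children, parent_id=None, depth=0) -> List[str]:
    """Depth-first render; each reply level adds one '>' blockquote marker."""
    lines = []
    prefix = "> " * depth
    for c in children.get(parent_id, []):
        lines.append(f"{prefix}**{c['author']}**")
        for body_line in c["body"].splitlines():
            lines.append(f"{prefix}{body_line}")
        lines.append(prefix.rstrip())  # blank (but still quoted) separator line
        lines.extend(render(children, c["id"], depth + 1))
    return lines


comments = [
    {"id": "1", "parent_id": None, "author": "alice", "body": "Top-level comment."},
    {"id": "2", "parent_id": "1", "author": "bob", "body": "A reply."},
    {"id": "3", "parent_id": "2", "author": "alice", "body": "A reply to the reply."},
]
print("\n".join(render(build_children(comments))))
```

Nested blockquotes keep the thread structure visible in any markdown renderer; indenting replies with spaces instead would risk being rendered as code blocks.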

Is there a good list of the highest leverage things a random US citizen (probably in a blue state) can do to cause Trump to either be removed from office or seriously constrained in some way? Anyone care to brainstorm?

Like the safe state/swing state vote swapping thing during the election was brilliant - what analogues are there for the current moment, if any?

After thinking about this post ("Utilitarians Should Accept that Some Suffering Cannot be “Offset”") some more, there's an additional, weaker claim I want to emphasize, which is: You should be very skeptical that it’s morally good to bring about worlds you wouldn’t personally want to experience all of

We can imagine a society of committed utilitarians all working to bring about a very large universe full of lots of happiness and, in an absolute sense, lots of extreme suffering. The catch is that these very utilitarians are the ones that are going to be experiencing this grand future - not future people or digital people or whomever.

Personally, they all desperately want to opt out of this - nobody wants to actually go through the extreme suffering regardless of what benefits await, and yet all work day in and day out to bring about this future anyway, condemning themselves to heaven and to hell.

Of course it’s not impossible that their stated morals are right and preferences are morally wrong, but my claim is that we should be very skeptical that the “skin in the game” answer is the wrong one.

And of course here I’m just supposing/assuming that these utilitarians have the prefere...

A couple takes from Twitter on the value of merch and signaling that I think are worth sharing here:

1)

2)

~30 second ask: Please help @80000_Hours figure out who to partner with by sharing your list of YouTube subscriptions via this survey

Unfortunately this only works well on desktop, so if you're on a phone, consider sending this to yourself for later. Thanks!

@MHR🔸 @Laura Duffy, @AbsurdlyMax and I have been raising money for the EA Animal Welfare Fund on Twitter and Bluesky, and today is the last day to donate!

If we raise $3k more today I will transform my room into an EA paradise complete with OWID charts across the walls, a literal bednet, a shrine, and more (and of course post all this online)! Consider donating if and only if you wouldn't use the money for a better purpose!

See some more fun discussion and such by following the replies and quote-tweets here:

Sharing https://earec.net, semantic search for the EA + rationality ecosystem. Not fully up to date, sadly (doesn't have the last month or so of content). The current version is basically a minimal viable product!

On the results page there is also an option to see EA Forum-only results, which allows you to sort by a weighted combination of karma and semantic similarity thanks to the API!
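A weighted combination of karma and semantic similarity can be as simple as normalizing both to a common scale and mixing them. A sketch of one such scheme; the log-scaling of karma, the min-max normalization, and the weight `alpha` are my assumptions for illustration, not necessarily what earec.net does.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def min_max(xs: List[float]) -> List[float]:
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0 for _ in xs]
    return [(x - lo) / (hi - lo) for x in xs]


def rank(query_vec, docs, alpha: float = 0.7):
    """Return docs sorted by alpha * similarity + (1 - alpha) * karma_score.

    Both components are min-max normalized over the candidate set; karma is
    log-scaled first (clamped at 0) so a few very high-karma posts don't dominate.
    """
    sims = min_max([cosine(query_vec, d["embedding"]) for d in docs])
    karma = min_max([math.log1p(max(d["karma"], 0)) for d in docs])
    scored = [(alpha * s + (1 - alpha) * k, d) for s, k, d in zip(sims, karma, docs)]
    return [d for _, d in sorted(scored, key=lambda t: t[0], reverse=True)]


# Hypothetical embeddings and karma values.
docs = [
    {"title": "A", "karma": 300, "embedding": [0.9, 0.1]},
    {"title": "B", "karma": 5, "embedding": [1.0, 0.0]},
    {"title": "C", "karma": 50, "embedding": [0.0, 1.0]},
]
print([d["title"] for d in rank([1.0, 0.0], docs)])
```

Setting `alpha=1.0` recovers pure semantic ranking, which is a useful sanity check when tuning the weight.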

Final feature to note is that there's an option to have gpt-4o-mini "manually" read through the summary of each article on the current screen of results, which will give better evaluations of relevance to some query (e.g. "sources I can use for a project on X") than semantic similarity alone.

Still kinda janky - as I said, minimal viable product right now. Enjoy and feedback is welcome!

Thanks to @Nathan Young for commissioning this!

There's a question on the forum user survey:

This is one thing I've updated down quite a bit over the last year.

It seems to me that relatively few self-identified EA donors mostly or entirely give to the organization/whatever that they would explicitly endorse as being the single best recipient of a marginal dollar (do others disagree?)

Of course the more important question is whether most EA-inspired dollars are given in such a way (rather than most donors). Unfortunately, I think the answer to this is "no" as well, seeing as OpenPhil continues to donate a majority of dollars to human global health and development[1] (I threw together a Claude artifact that lets you get a decent picture of how OpenPhil has funded cause areas over time and in aggregate)[2]
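Checking a claim like "a majority of dollars go to global health and development" against a grants export comes down to summing amounts by cause area (overall or per year) and dividing by the total. A sketch over hypothetical records; the field names and amounts are made up, and Open Philanthropy's actual grants database may use different focus-area labels.

```python
from collections import defaultdict


def shares_by_cause(grants, year=None):
    """Fraction of total dollars going to each cause area, optionally for one year."""
    totals = defaultdict(float)
    for g in grants:
        if year is None or g["year"] == year:
            totals[g["cause"]] += g["amount"]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {cause: amt / grand_total for cause, amt in totals.items()}


# Hypothetical grant records.
grants = [
    {"year": 2023, "cause": "Global health & development", "amount": 6_000_000},
    {"year": 2023, "cause": "Animal welfare", "amount": 1_000_000},
    {"year": 2023, "cause": "Potential risks from AI", "amount": 3_000_000},
    {"year": 2024, "cause": "Global health & development", "amount": 2_000_000},
    {"year": 2024, "cause": "Potential risks from AI", "amount": 2_000_000},
]
overall = shares_by_cause(grants)
print(max(overall, key=overall.get), round(overall["Global health & development"], 3))
```

The aggregate and per-year views can tell different stories, so it is worth checking both before saying where "most" dollars go.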

Edit: to clarify, it could be the case that others have object-level disagreements about what the best use of a marginal dollar is. Clearly this is sometimes the case, but it's not what I am getting at here. I am trying to get at the phenomenon where people implicitly say/reason "yes, EA principles imply th...

In your original post, you talk about explicit reasoning; in your later edit, you switch to implicit reasoning. It feels like this criticism can't be both. I also think the implicit reasoning critique just collapses into object-level disagreements, and the explicit critique just doesn't have much evidence.

The phenomenon you're looking at, for instance, is:

And I think this might just be an ~empty set, compared to people who have different object-level beliefs about what EA principles are or imply they should do, and who also disagree with you on what the best thing to do would be.[1] I really don't think there are many people saying "the best thing to do is donate to X, but I will donate to Y". (References please if so - clarification in footnotes[2]) Even on OpenPhil, I think Dustin just genuinely believes worldview diversification is the best thing, so there's no contradiction there where he implies the best thing would be to do X but in practice does Y.

I think causing this to 'update downwa...

Banger from @Linch

Re: a recent quick take in which I called on OpenPhil to sue OpenAI: a new document in Musk's lawsuit mentions this explicitly (page 91)

Interesting that the Animal Welfare Fund gives out so few small grants relative to the Infrastructure and Long Term Future funds (Global Health and Development has only given out 20 grants, all very large, so seems to be a more fundamentally different type of thing(?)). Data here.

A few stats:

Proportions under $threshold

Grants under $threshold

Summary stats (rounded)
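The three breakdowns listed above (proportions under a threshold, counts under a threshold, summary stats) can be computed per fund along these lines. A sketch with made-up grant amounts and thresholds; the real figures are in the linked data.

```python
import statistics
from typing import Dict, List


def threshold_stats(amounts: List[float], thresholds: List[float]) -> Dict[float, tuple]:
    """For each threshold, return (count of grants strictly under it, proportion under it)."""
    n = len(amounts)
    out = {}
    for t in thresholds:
        under = sum(1 for a in amounts if a < t)
        out[t] = (under, under / n if n else 0.0)
    return out


def summary(amounts: List[float]) -> Dict[str, float]:
    """Rounded summary statistics for one fund's grant amounts."""
    return {
        "n": len(amounts),
        "mean": round(statistics.mean(amounts)),
        "median": round(statistics.median(amounts)),
        "max": max(amounts),
    }


# Hypothetical grant amounts in USD, keyed by fund.
funds = {
    "Animal Welfare Fund": [20_000, 45_000, 80_000, 150_000],
    "Long-Term Future Fund": [3_000, 8_000, 12_000, 40_000, 90_000],
}
for fund, amounts in funds.items():
    print(fund, threshold_stats(amounts, [10_000, 50_000]), summary(amounts))
```

Reporting the median alongside the mean matters here, since a handful of very large grants can pull the mean far above what a typical grant looks like.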

I made a custom GPT that is just normal, fully functional ChatGPT-4, but I will donate any revenue this generates[1] to effective charities.

Presenting: Donation Printer

OpenAI is rolling out monetization for custom GPTs:

Was sent a resource in response to this quick take on effectively opposing Trump that at a glance seems promising enough to share on its own:

From A short to-do list by the Substack Make Trump Lose Again:

Bolding is mine to highlight the 80k-like opportunity. I'm abusing the block quote a bit by taking out most of the text, so check out the actual post if interested!

There's also a volunteering opportunities page advertising "A short list of high-impact election opportunities, continuously updated" which links to a Notion page that's currently down.

I'm pretty happy with how this "Where should I donate, under my values?" Manifold market has been turning out. Of course all the usual caveats pertaining to basically-fake "prediction" markets apply, but given the selection effects of who spends mana on an esoteric market like this, I put non-trivial weight on the (live) outcomes.

I guess I'd encourage people with a bit more money to donate to do something similar (or I guess defer, if you think I'm right about ethics!), if just as one addition to your portfolio of donation-informing considerations.

Thanks! Let me write them as a loss function in python (ha)

For real though:

- Some flavor of hedonic utilitarianism

- I guess I should say I have moral uncertainty (which I endorse as a thing) but eh I'm pretty convinced

- Longtermism as explicitly defined is true

- Don't necessarily endorse the cluster of beliefs that tend to come along for the ride though

- "Suffering focused total utilitarian" is the annoying phrase I made up for myself

- I think many (most?) self-described total utilitarians give too little consideration/weight to suffering, and I don't think it really matters (if there's a fact of the matter) whether this is because of empirical or moral beliefs

- Maybe my most substantive deviation from the default TU package is the following (defended here):

- "Under a form of utilitarianism that places happiness and suffering on the same moral axis and allows that the former can be traded off against the latter, one might nevertheless conclude that some instantiations of suffering cannot be offset or justified by even an arbitrarily large amount of wellbeing."

- Moral realism for basically all the reasons described by Rawlette on 80k but I don't think this really matters after conditioning on normati...

A little while ago I posted this quick take:

I didn't have a good response to @DanielFilan, and I'm pretty inclined to defer to orgs like CEA to make decisions about how to use their own scarce resources.

At least for EA Global Boston 2024 (which ended yesterday), there was the option to pay a "cost covering" ticket fee (of what I'm told is $1000).[1]

All this is to say that I am now more confident (although still <80%) that marginal rejected applicants who are willing to pay their cost-covering fee would be good to admit.[2]

In part this stems from an only semi-legible background stance that, on the whole, less impressive-seeming people have more ~potential~ and more to offer than I think "elite EA" (which would include those running EAG admissions) tend to think. And this, in turn, has a lot to do with the endogeneity/path dependence of what I'd hastily summarize as "EA involvement."

That is, many (most?) people need a break-in point to move from something like "basically convinced that EA is good, interested in the ideas and consuming content, maybe donating 10%" to anything more ambitious.

For some, that comes in the form of going to an elite college with a vibrant EA group/communi...


Random sorta gimmicky AI safety community building idea: tabling at universities but with a couple of laptops signed into Claude Pro with different accounts. Encourage students (and profs) to try giving it some hard question from e.g. a problem set and see how it performs. Ideally have a big monitor for onlookers to easily see.

Most college students are probably still using ChatGPT-3.5, if they use LLMs at all. There’s a big delta now between that and the frontier.

I have a vague fear that this doesn't do well on the 'try not to have the main net effect be AI hypebuilding' heuristic.

I went ahead and made an "Evergreen" tag as proposed in my quick take from a while back:


A few Forum meta things you might find useful or interesting:

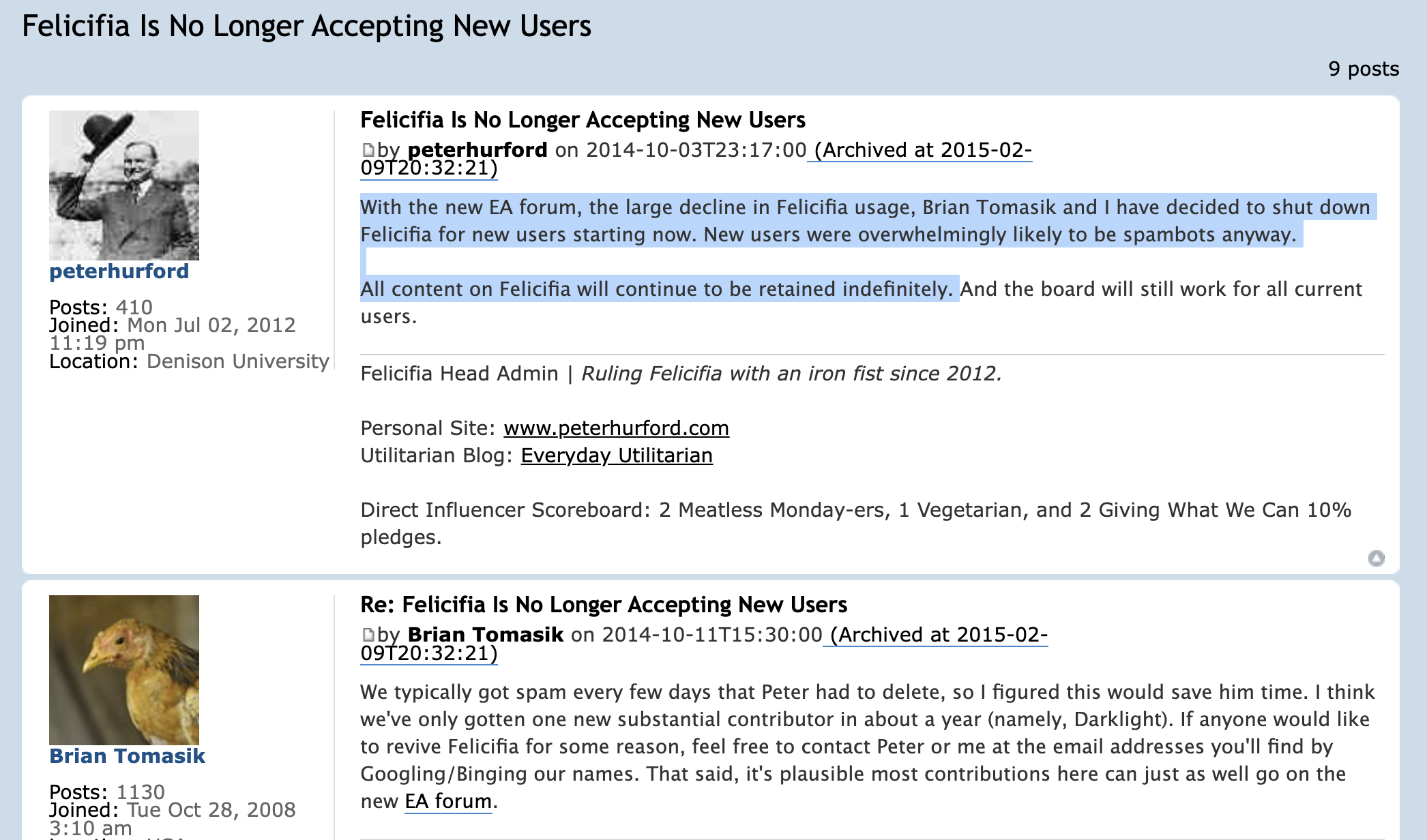

- Open Philanthropy: Our Approach to Recruiting a Strong Team

- Histories of Value Lock-in and Ideology Critique

- Why I think strong general AI is coming soon

- Anthropics and the Universal Distribution

- Range and Forecasting Accuracy

- A Pin and a Balloon: Anthropic Fragility Increases Chances of Runaway Global Warming

So the EA Forum has, like, an ancestor? Is this common knowledge? Lol

Felicifia: not functional anymore but still available to view. Learned about thanks to a Tweet from Jacy

From Felicifia Is No Longer Accepting New Users:

Update: threw together

Made a podcast feed with EAG talks. Now has both the recent Bay Area and London ones:

Full vids on the CEA YouTube page

A (potential) issue with MacAskill's presentation of moral uncertainty

Not able to write a real post about this atm, though I think it deserves one.

MacAskill makes a similar point in WWOTF, but IMO the best and most decision-relevant quote comes from his second appearance on the 80k podcast:

I don't think the second bolded sentence follows in any objective or natural manner from the first. Rather, this reasoning takes a distinctly total utilitarian meta-level perspective, summing the various signs of utility and then implicitly considering them under total utilitarianism.

Even granting that the mora arithmetic is appropriate and correct, it's not at all clear what to do once the 2:1 accounting is complete. MacAskill's suffering-focused ... (read more)

The recent 80k podcast on the contingency of abolition got me wondering what, if anything, the fact of slavery's abolition says about the ex ante probability of abolition, or, more generally, what one observation of a binary random variable X says about p, as in X ~ Bernoulli(p).

Turns out there is an answer (!), and it's found starting in paragraph 3 of subsection 1 of section 3 of the Binomial distribution Wikipedia page:
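One standard answer, assuming a uniform Beta(1, 1) prior on p (an assumption; the post doesn't specify a prior), is Laplace's rule of succession: after x successes in n trials the posterior is Beta(x + 1, n - x + 1), with mean (x + 1)/(n + 2). The sketch below (function names are hypothetical) computes that posterior for the single observation X = 1. It uses the identity between the Beta CDF with integer parameters and a binomial tail sum, so it needs only the standard library.

```python
from fractions import Fraction
from math import comb


def posterior_params(successes: int, trials: int, prior_a: int = 1, prior_b: int = 1) -> tuple[int, int]:
    """Beta posterior parameters for p after `successes` in `trials`.

    The default prior_a = prior_b = 1 is the uniform prior (an assumption).
    """
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return prior_a + successes, prior_b + trials - successes


def posterior_mean(successes: int, trials: int, prior_a: int = 1, prior_b: int = 1) -> Fraction:
    """Mean of Beta(a, b) is a / (a + b); with a uniform prior this is (x + 1) / (n + 2)."""
    a, b = posterior_params(successes, trials, prior_a, prior_b)
    return Fraction(a, a + b)


def beta_cdf_integer(t: Fraction, a: int, b: int) -> Fraction:
    """P(p <= t) for p ~ Beta(a, b) with positive integer a, b.

    Uses the identity I_t(a, b) = P(Binomial(a + b - 1, t) >= a).
    """
    n = a + b - 1
    return sum(comb(n, j) * t**j * (1 - t) ** (n - j) for j in range(a, n + 1))


# One observation, X = 1 (e.g. "abolition happened"), under the uniform prior.
a, b = posterior_params(successes=1, trials=1)
mean = posterior_mean(successes=1, trials=1)
prob_above_half = 1 - beta_cdf_integer(Fraction(1, 2), a, b)
print(f"posterior Beta({a}, {b}), mean {mean}, P(p > 1/2) = {prob_above_half}")
```

With one observed success, the posterior mean is 2/3 and the posterior probability that p > 1/2 is 3/4. A different prior would give different numbers, which is where most of the real disagreement would live.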

... (read more)

Hypothesis: from the perspective of currently living humans and those who will be born in the current <4% growth regime only (i.e., pre-AGI takeoff or, I guess, stagnation), donations currently earmarked for large-scale GHW, GiveWell-type interventions should instead be invested (maybe in tech/AI-correlated securities) with the intent of being deployed for the same general category of beneficiaries in <25 (maybe even <1) years.

The arguments are similar to those for old school "patient philanthropy" except now in particular seems exceptionally uncerta... (read more)

I'm skeptical of this take. If you think sufficiently transformative + aligned AI is likely in the next <25 years, then from the perspective of currently living humans and those who will be born in the current <4% growth regime, surviving until transformative AI arrives would be a huge priority. Under that view, you should aim to deploy resources as fast as possible to lifesaving interventions rather than sitting on them.

Idea/suggestion: an "Evergreen" tag for old (6 months? 1 year? 3 years?) posts (comments?), to indicate that they're still worth reading (to me, ideally for their intended value/arguments rather than as instructive historical/cultural artifacts).

As an example, I'd highlight Log Scales of Pleasure and Pain, which is just about 4 years old now.

I know I could just create a tag, and maybe I will, but want to hear reactions and maybe generate common knowledge.

LessWrong has a new feature/type of post called "Dialogues". I'm pretty excited to use it, and I hope that if it seems usable, reader-friendly, and generally good, the EA Forum will eventually adopt it as well.

I tried making a shortform -> Twitter bot (i.e., one that tweets each new top-level quick take), and, long story short, it stopped working and wasn't great to begin with.

I feel like this is the kind of thing someone else might be able to do relatively easily. If so, I and I think much of EA Twitter would appreciate it very much! In case it's helpful for this, a quick takes RSS feed is at https://ea.greaterwrong.com/shortform?format=rss
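For anyone picking this up, the core loop is small: fetch the feed, find items you haven't seen before, and turn each into a short post. The sketch below uses only the standard library and assumes the feed is plain RSS 2.0 with `<item>`, `<title>`, `<link>`, and (optionally) `<guid>` elements. All function names are hypothetical, and the actual posting step is left as a callback because it depends on whatever Twitter/X client you use.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Callable

FEED_URL = "https://ea.greaterwrong.com/shortform?format=rss"  # feed URL from the post
TWEET_LIMIT = 280  # conservative: counts the full URL length


def fetch_feed(url: str = FEED_URL) -> bytes:
    """Download the raw RSS XML (needs network access)."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read()


def parse_items(rss_xml) -> list[dict]:
    """Extract id/title/link from each RSS 2.0 <item>; accepts str or bytes."""
    root = ET.fromstring(rss_xml)
    items = []
    for item in root.iter("item"):
        link = (item.findtext("link") or "").strip()
        items.append({
            "id": (item.findtext("guid") or link).strip(),  # fall back to the link as an id
            "title": " ".join((item.findtext("title") or "").split()),  # collapse whitespace
            "link": link,
        })
    return items


def format_tweet(item: dict, limit: int = TWEET_LIMIT) -> str:
    """Title plus link, truncating the title with an ellipsis so the whole text fits."""
    link, title = item["link"], item["title"]
    room = limit - len(link) - 1  # minus one for the separating space
    if len(title) > room:
        title = title[: max(room - 1, 0)] + "\u2026"
    return f"{title} {link}".strip()


def poll_once(rss_xml, seen: set, post: Callable[[str], None]) -> list[str]:
    """Post every item whose id is not in `seen`; mutates `seen` so reruns skip them."""
    posted = []
    for item in parse_items(rss_xml):
        if not item["id"] or item["id"] in seen:
            continue
        text = format_tweet(item)
        post(text)  # hypothetical callback: wire this to your posting client
        seen.add(item["id"])
        posted.append(text)
    return posted
```

In use, you would call `poll_once(fetch_feed(), seen, post)` on a schedule (e.g. a cron job) and persist `seen` between runs. Keeping the network fetch and the posting callback outside the parsing logic makes the part most likely to break easy to test offline.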

Note: this sounds like it was written by ChatGPT because it basically was (from a recorded ramble) 🤷

I believe the Forum could benefit from a Shorterform page, as the current Shortform forum, intended to be a more casual and relaxed alternative to main posts, still seems to maintain high standards. This is likely due to the impressive competence of contributors who often submit detailed and well-thought-out content. While some entries are just a few well-written sentences, others resemble blog posts in length and depth.

As such, I find myself hesitant... (read more)

New fish data with estimated individuals killed per country/year/species (super unreliable, read below if you're gonna use!)

That^ is too big for Google Sheets, so here's the same thing without the breakdown by country, which you should be able to open easily if you want to take a look.

Basically the UN data generally used for tracking/analyzing the amount of fish and other marine life captured/farmed and killed only tracks the total weight captured for a given country-year-species (or group of species).

I had ChatGPT-4 provide estimated lo... (read more)
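The basic conversion behind estimates like these is simple: individuals are roughly reported weight divided by mean individual weight, so a range of plausible mean weights gives a range of counts. The sketch below assumes each record carries hypothetical low/high mean-weight estimates. These stand in for whatever estimates the dataset actually uses, since the description above is cut off. It also shows that dropping the country breakdown amounts to summing per (year, species). Field names and numbers are illustrative, not taken from the dataset.

```python
from collections import defaultdict
from dataclasses import dataclass

GRAMS_PER_TONNE = 1_000_000


@dataclass
class CatchRecord:
    country: str
    year: int
    species: str
    tonnes: float              # reported live weight for this country-year-species
    mean_weight_low_g: float   # hypothetical low estimate of mean individual weight (grams)
    mean_weight_high_g: float  # hypothetical high estimate of mean individual weight (grams)


def estimated_individuals(rec: CatchRecord) -> tuple[float, float]:
    """(low, high) individual count = weight / mean individual weight.

    The low count uses the high mean weight, since heavier fish means fewer of them.
    """
    if not 0 < rec.mean_weight_low_g <= rec.mean_weight_high_g:
        raise ValueError("need 0 < low weight <= high weight")
    grams = rec.tonnes * GRAMS_PER_TONNE
    return grams / rec.mean_weight_high_g, grams / rec.mean_weight_low_g


def totals_without_country(records) -> dict:
    """Collapse the country dimension: sum low/high counts per (year, species)."""
    totals = defaultdict(lambda: [0.0, 0.0])
    for rec in records:
        low, high = estimated_individuals(rec)
        totals[(rec.year, rec.species)][0] += low
        totals[(rec.year, rec.species)][1] += high
    return {key: (lo, hi) for key, (lo, hi) in totals.items()}


# Hypothetical example: 10 tonnes of a species whose individuals weigh 50-200 g on average.
example = CatchRecord("Country X", 2020, "Species A", 10.0, 50.0, 200.0)
print(estimated_individuals(example))
```

The width of the resulting range comes entirely from the mean-weight estimates, which is why counts built this way deserve the "super unreliable" warning above.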

WWOTF: what did the publisher cut? [answer: nothing]

Contextual note: this post is essentially a null result. It seemed inappropriate both as a top-level post and as an abandoned Google Doc, so I’ve decided to put out the key bits (i.e., everything below) as Shortform. Feel free to comment/message me if you think that was the wrong call!

Actual post

In his recent appearance on the 80,000 Hours Podcast, Will MacAskill noted that Doing Good Better was significantly influenced by the book's publisher:[1]

... (read more)

A resource that might be useful: https://tinyapps.org/

There's a ton there, but one anecdote from yesterday: it referred me to this $5 iOS desktop app, which (among other, more reasonable uses) made me this full-quality, fully intra-linked PDF of more than 3,600 pages containing (almost) every file/site linked to by every file/site linked to from Tomasik's homepage (it works best with old-timey, simpler sites like that).
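Collecting "everything linked to by everything linked to from a homepage" is a breadth-first crawl capped at two link hops. The sketch below is not how that app works (its internals aren't described here). It only gathers the URLs, using the standard library's `HTMLParser`, and takes a hypothetical injectable `fetch` function (returning HTML or `None`) so the logic can be tested without a network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute, fragment-free URLs from <a href="..."> tags."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def crawl(start_url: str, fetch, max_depth: int = 2) -> list[str]:
    """Return URLs within `max_depth` link hops of `start_url`, in discovery order.

    `fetch(url)` is a hypothetical function returning page HTML, or None on failure.
    Pages at the depth limit are listed but not fetched.
    """
    seen = {start_url}
    order = [start_url]
    queue = deque([(start_url, 0)])
    while queue:
        url, depth = queue.popleft()
        if depth >= max_depth:
            continue
        html = fetch(url)
        if html is None:
            continue
        parser = LinkExtractor(url)
        parser.feed(html)
        parser.close()
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                order.append(link)
                queue.append((link, depth + 1))
    return order
```

Turning the collected pages into a single linked PDF is a separate step this sketch doesn't attempt. Simple static sites are also the easy case for link extraction like this, since `HTMLParser` never runs JavaScript.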

New Thing

Last week I complained about not being able to see all the top shortform posts in one list. Thanks to Lorenzo for pointing me to the next best option:

It wasn't too hard to put together a text doc with (at least some of each of) all 1470ish shortform posts, which you can view or download here.

- Pros: (practically) infinite scroll of insight porn

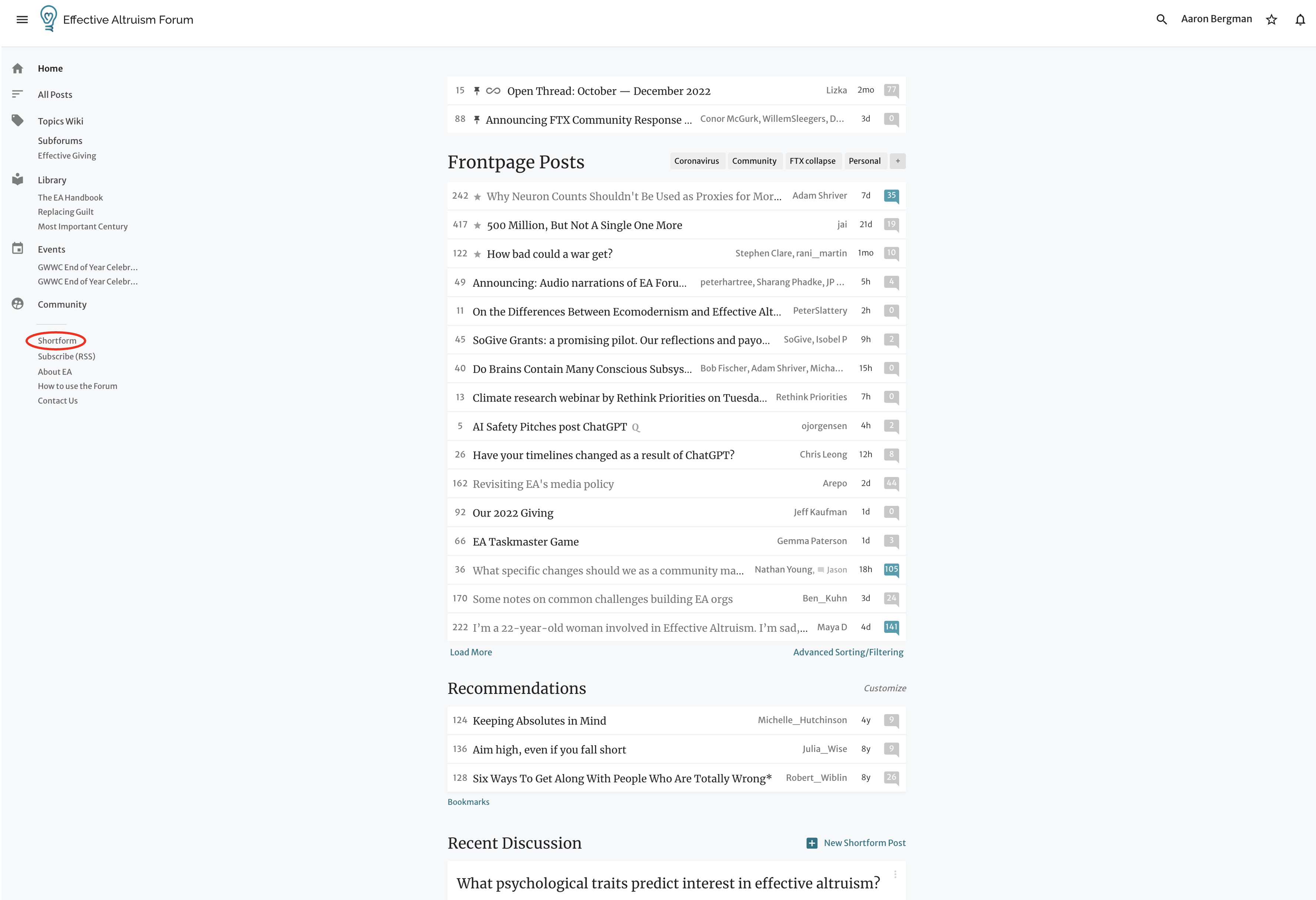

... (read more)

Infinitely easier said than done, of course, but here are some Shortform feedback/requests:

- The link to get here from the main page is awfully small and inconspicuous (1 of 145 individual links on the page according to a Chrome extension)

- I can imagine it being near/stylistically like:

    - "All Posts" (top of sidebar)

    - "Recommendations" in the center

    - "Frontpage Posts", but off to the side of the main section, or maybe as a replacement for it that you can easily toggle back and forth from

- Would be cool to be able to sort and aggregate like with the main posts (nothing to filter by, afaik)

... (read more)

Events as evidence vs. spotlights

Note: inspired by the FTX+Bostrom fiascos and associated discourse. May (hopefully) develop into longform by explicitly connecting this taxonomy to those recent events (but my base rate of completing actual posts cautions humility)

Event as evidence

- The default: normal old Bayesian evidence

- The realm of "updates," "priors," and "credences"

- Pseudo-definition: Induces [1] a change to or within a model (of whatever the model's user is trying to understand)

- Corresponds to models that are (as is often assumed):

    - Well-de

... (read more)

EAG(x)s should have a lower acceptance bar. I find it very hard to believe that accepting the marginal rejectee would be bad on net.

Are you factoring in that CEA pays a few hundred bucks per attendee? I'd have a high-ish bar to pay that much for someone to go to a conference myself. Although I don't have a good sense of what the marginal attendee/rejectee looks like.

Ok, so things that get posted in the Shortform tab also appear in your (my) shortform post, which can be edited to not have the title "___'s shortform" and also has a real post body that is empty by default, but you can just put stuff in.

There's also the usual "frontpage" checkbox, so I assume an individual's own shortform page can appear alongside normal posts(?).

The link is: [Draft] Used to be called "Aaron Bergman's shortform" (or smth)

I assume only I can see this but gonna log out and check

Effective Altruism Georgetown will be interviewing Rob Wiblin for our inaugural podcast episode this Friday! What should we ask him?