From Reuters:

SAN FRANCISCO, Sept 25 (Reuters) - ChatGPT-maker OpenAI is working on a plan to restructure its core business into a for-profit benefit corporation that will no longer be controlled by its non-profit board, people familiar with the matter told Reuters, in a move that will make the company more attractive to investors.

I sincerely hope OpenPhil (or Effective Ventures, or both - I don't know the minutiae here) sues over this. Read the reasoning for and details of the $30M grant here.

The case for a legal challenge seems hugely overdetermined to me:

- Stop/delay/complicate the restructuring, and otherwise make life appropriately hard for Sam Altman

- Settle for a large amount of money that can be used to do a huge amount of good

- Signal that you can't just blatantly take advantage of OpenPhil/EV/EA as you please without appropriate challenge

I know OpenPhil has a pretty hands-off ethos and vibe; this shouldn't stop them from acting with integrity when hands-on legal action is clearly warranted.

I understand that OpenAI's financial situation is not very good [edit: this may not be a high-quality source], and if they aren't able to convert to a for-profit, things will become even worse:

OpenAI has two years from the [current $6.6 billion funding round] deal’s close to convert to a for-profit company, or its funding will convert into debt at a 9% interest rate.

As an aside: how will OpenAI pay that interest in the event they can't convert to a for-profit business? Will they raise money to pay the interest? Will they get a loan?

https://www.wheresyoured.at/oai-business/

It's conceivable that OpenPhil suing OpenAI could buy us 10+ years of AI timeline, if the following dominoes fall:

- OpenPhil sues, and OpenAI fails to convert to a for-profit.

- As a result, OpenAI struggles to raise additional capital from investors.

- Losing $4-5 billion a year with little additional funding in sight, OpenAI is forced to make some tough financial decisions. They turn off the free version of ChatGPT, stop training new models, and cut salaries for employees. They're able to eke out some profit, but not much profit, because their product is not highly differentiated from other AI off

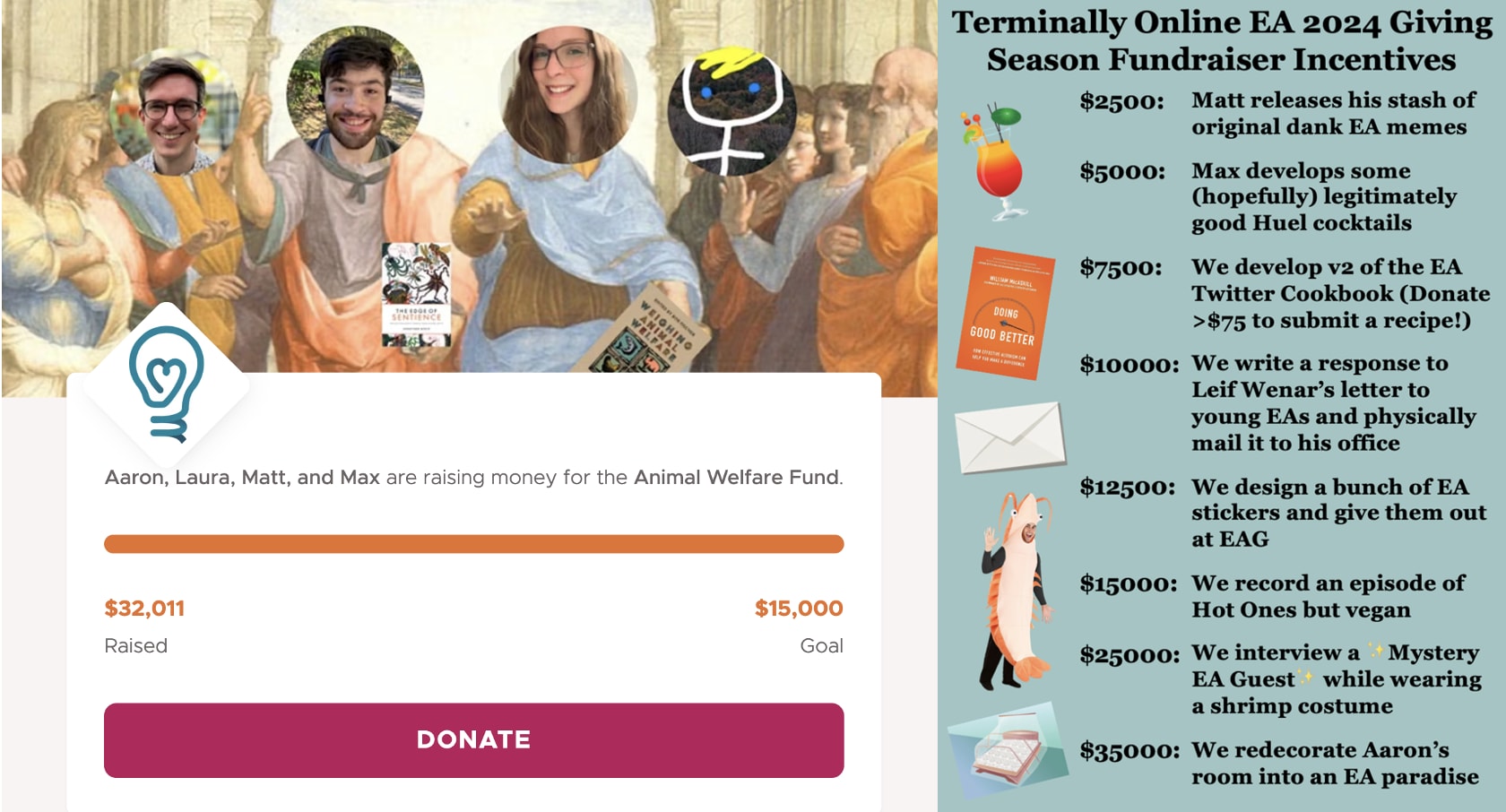

@MHR🔸 @Laura Duffy, @AbsurdlyMax and I have been raising money for the EA Animal Welfare Fund on Twitter and Bluesky, and today is the last day to donate!

If we raise $3k more today I will transform my room into an EA paradise complete with OWID charts across the walls, a literal bednet, a shrine, and more (and of course post all this online)! Consider donating if and only if you wouldn't use the money for a better purpose!

See some more fun discussion and such by following the replies and quote-tweets here:

Re: a recent quick take in which I called on OpenPhil to sue OpenAI: a new document in Musk's lawsuit mentions this explicitly (page 91)

There's a question on the forum user survey:

How much do you trust other EA Forum users to be genuinely interested in making the world better using EA principles?

This is one thing I've updated down quite a bit over the last year.

It seems to me that relatively few self-identified EA donors mostly or entirely give to the organization/whatever that they would explicitly endorse as being the single best recipient of a marginal dollar (do others disagree?)

Of course the more important question is whether most EA-inspired dollars are given in such a way (rather than most donors). Unfortunately, I think the answer to this is "no" as well, seeing as OpenPhil continues to donate a majority of dollars to human global health and development[1] (I threw together a Claude artifact that lets you get a decent picture of how OpenPhil has funded cause areas over time and in aggregate)[2]

Edit: to clarify, it could be the case that others have object-level disagreements about what the best use of a marginal dollar is. Clearly this is sometimes the case, but it's not what I am getting at here. I am trying to get at the phenomenon where people implicitly say/reason "yes, EA principles imply th... (read more)

In your original post, you talk about explicit reasoning; in your later edit, you switch to implicit reasoning. Feels like this criticism can't be both. I also think the implicit reasoning critique just collapses into object-level disagreements, and the explicit critique just doesn't have much evidence.

The phenomenon you're looking at, for instance, is:

"I am trying to get at the phenomenon where people implicitly say/reason "yes, EA principles imply that the best thing to do would be to donate to X, but I am going to donate to Y instead."

And I think this might just be an ~empty set, compared to people having different object-level beliefs about what EA principles are or imply they should do, and also disagreeing with you on what the best thing to do would be.[1] I really don't think there are many people saying "the best thing to do is donate to X, but I will donate to Y". (References please if so - clarification in footnotes[2]) Even on OpenPhil, I think Dustin just genuinely believes worldview diversification is the best thing, so there's no contradiction there where he implies the best thing would be to do X but in practice does Y.

I think causing this to 'update downwa... (read more)

A little while ago I posted this quick take:

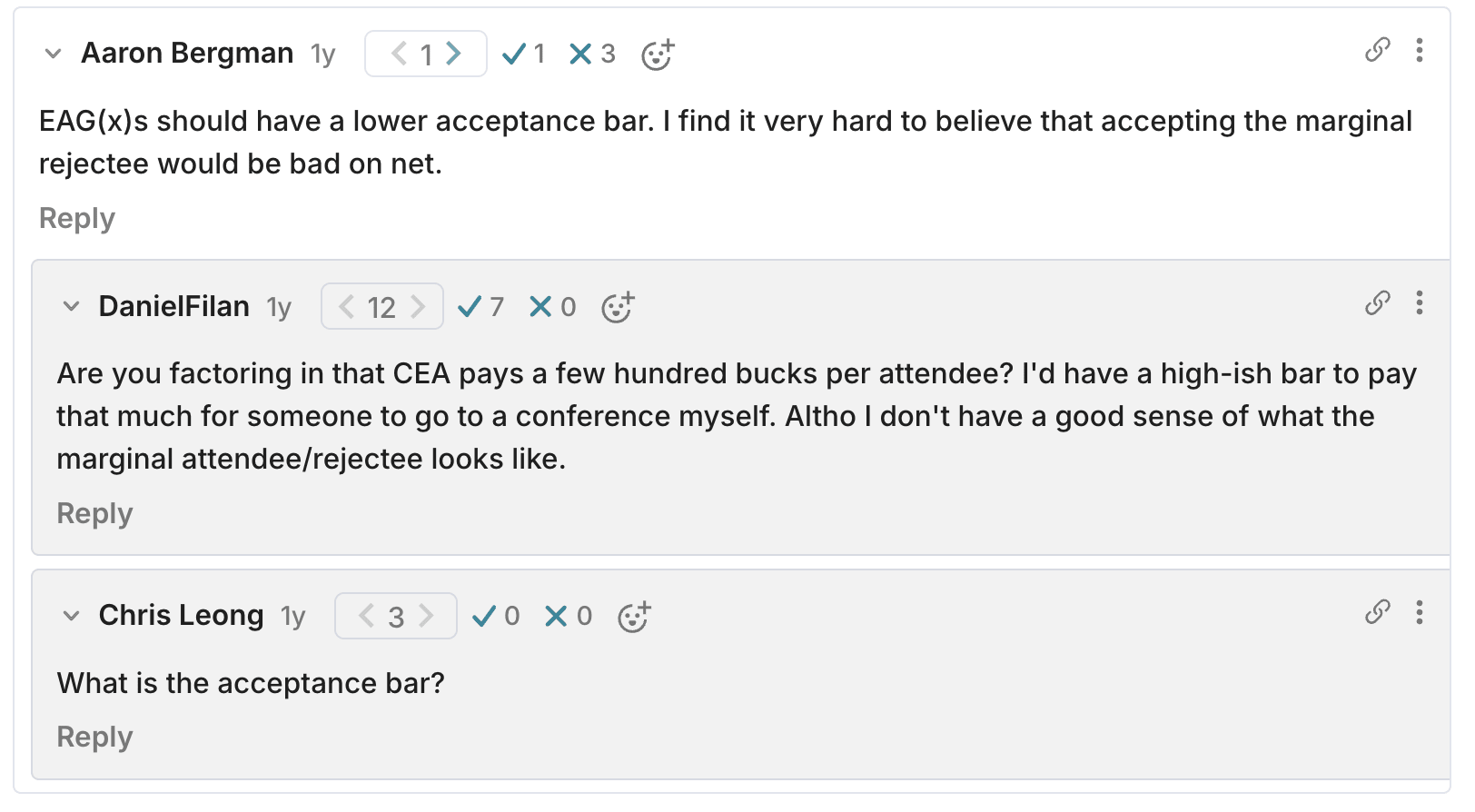

I didn't have a good response to @DanielFilan, and I'm pretty inclined to defer to orgs like CEA to make decisions about how to use their own scarce resources.

At least for EA Global Boston 2024 (which ended yesterday), there was the option to pay a "cost covering" ticket fee (of what I'm told is $1000).[1]

All this is to say that I am now more confident (although still <80%) that marginal rejected applicants who are willing to pay their cost-covering fee would be good to admit.[2]

In part this stems from an only semi-legible background stance that, on the whole, less impressive-seeming people have more ~potential~ and more to offer than I think "elite EA" (which would include those running EAG admissions) tends to think. And this, in turn, has a lot to do with the endogeneity/path dependence of what I'd hastily summarize as "EA involvement."

That is, many (most?) people need a break-in point to move from something like "basically convinced that EA is good, interested in the ideas and consuming content, maybe donating 10%" to anything more ambitious.

For some, that comes in the form of going to an elite college with a vibrant EA group/communi... (read more)

Interesting that the Animal Welfare Fund gives out so few small grants relative to the Infrastructure and Long Term Future funds (Global Health and Development has only given out 20 grants, all very large, so seems to be a more fundamentally different type of thing(?)). Data here.

A few stats:

- The 25th percentile AWF grant was $24,250, compared to $5,802 for Infrastructure and $7,700 for LTFF (and median looks basically the same).

- AWF has made just nine grants of less than $10k, compared to 163 (Infrastructure) and 132 (LTFF).

Proportions under $threshold

| fund | prop_under_1k | prop_under_2500 | prop_under_5k | prop_under_10k |
|---|---|---|---|---|
| Animal Welfare Fund | 0.000 | 0.004 | 0.012 | 0.036 |
| EA Infrastructure Fund | 0.020 | 0.086 | 0.194 | 0.359 |
| Global Health and Development Fund | 0.000 | 0.000 | 0.000 | 0.000 |
| Long-Term Future Fund | 0.007 | 0.068 | 0.163 | 0.308 |

Grants under $threshold

| fund | n | under_2500 | under_5k | under_10k | under_25k | under_50k |
|---|---|---|---|---|---|---|
| Animal Welfare Fund | 250 | 1 | 3 | 9 | 243 | 248 |
| EA Infrastructure Fund | 454 | 39 | 88 | 163 | 440 | 453 |
| Global Health and Development Fund | 20 | 0 | 0 | 0 | 5 | 7 |
| Long-Term Future Fund | 429 | 29 | 70 | 132 | 419 | 429 |
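As a sanity check, the under-$2,500, under-$5k, and under-$10k proportions can be re-derived from the counts in the table above. This is only a minimal sketch: the dictionary layout and the `proportions` helper are illustrative names of mine, not the code that produced the tables, and the numbers are copied by hand from the counts table.

```python
# Counts copied by hand from the "Grants under $threshold" table.
grant_counts = {
    "Animal Welfare Fund": {"n": 250, "under_2500": 1, "under_5k": 3, "under_10k": 9},
    "EA Infrastructure Fund": {"n": 454, "under_2500": 39, "under_5k": 88, "under_10k": 163},
    "Global Health and Development Fund": {"n": 20, "under_2500": 0, "under_5k": 0, "under_10k": 0},
    "Long-Term Future Fund": {"n": 429, "under_2500": 29, "under_5k": 70, "under_10k": 132},
}


def proportions(counts):
    """Share of a fund's grants under each threshold, rounded to 3 decimal places."""
    n = counts["n"]
    return {key: round(value / n, 3) for key, value in counts.items() if key != "n"}


for fund, counts in grant_counts.items():
    print(fund, proportions(counts))
```

The rounded shares line up with the proportions table for all four funds.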

Summary stats (rounded)

| fund | n | median | mean | q1 | q3 | total |
|---|---|---|---|---|---|---|
| Animal Welfare Fund | 250 | $50,000 | $62,188 | $24,250 | $76,000 | $15,546,957 |
| EA Infrastructure Fund | 454 | $15,319 | $41,331 | $5,802 | $45,000 | $18,764,097 |

I made a custom GPT that is just normal, fully functional ChatGPT-4, but I will donate any revenue this generates[1] to effective charities.

Presenting: Donation Printer

[1] OpenAI is rolling out monetization for custom GPTs:

Builders can earn based on GPT usage

In Q1 we will launch a GPT builder revenue program. As a first step, US builders will be paid based on user engagement with their GPTs. We'll provide details on the criteria for payments as we get closer.

According to Kevin Esvelt on the recent 80,000 Hours podcast (excellent btw, mostly on biosecurity), eliminating the New World screwworm could be an important farmed animal welfare (infects livestock), global health (infects humans), development (hurts economies), science/innovation intervention, and most notably quasi-longtermist wild animal suffering intervention.

Moreover, if you think there’s a non-trivial chance of human disempowerment, societal collapse, or human extinction in the next 10 years, this would be important to do ASAP because we may not be able to later.

From the episode:

Kevin Esvelt:

...

But from an animal wellbeing perspective, in addition to the human development, the typical lifetime of an insect species is several million years. So 10^6 years times 10^9 hosts per year means an expected 10^15 mammals and birds devoured alive by flesh-eating maggots. For comparison, if we continue factory farming for another 100 years, that would be 10^13 broiler hens and pigs. So unless it’s 100 times worse to be a factory-farmed broiler hen than it is to be devoured alive by flesh-eating maggots, then when you integrate over the future, it is more important for animal we
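A quick check of the arithmetic in the quote, using only the order-of-magnitude figures Esvelt gives (10^6 years of species lifetime, 10^9 hosts per year, 10^13 factory-farmed broiler hens and pigs over 100 years). The variable names are mine, and this is just a sketch of the comparison, not an independent estimate.

```python
# Order-of-magnitude inputs as stated in the quote.
species_lifetime_years = 10**6
hosts_per_year = 10**9
factory_farmed_over_100_years = 10**13

# Expected number of animals eaten alive over the species' lifetime.
screwworm_victims = species_lifetime_years * hosts_per_year

# How many times worse factory farming would have to be, per animal,
# for the two totals to matter equally.
break_even_ratio = screwworm_victims // factory_farmed_over_100_years

print(screwworm_victims == 10**15, break_even_ratio)
```

The break-even ratio is where the "100 times worse" in the quote comes from.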

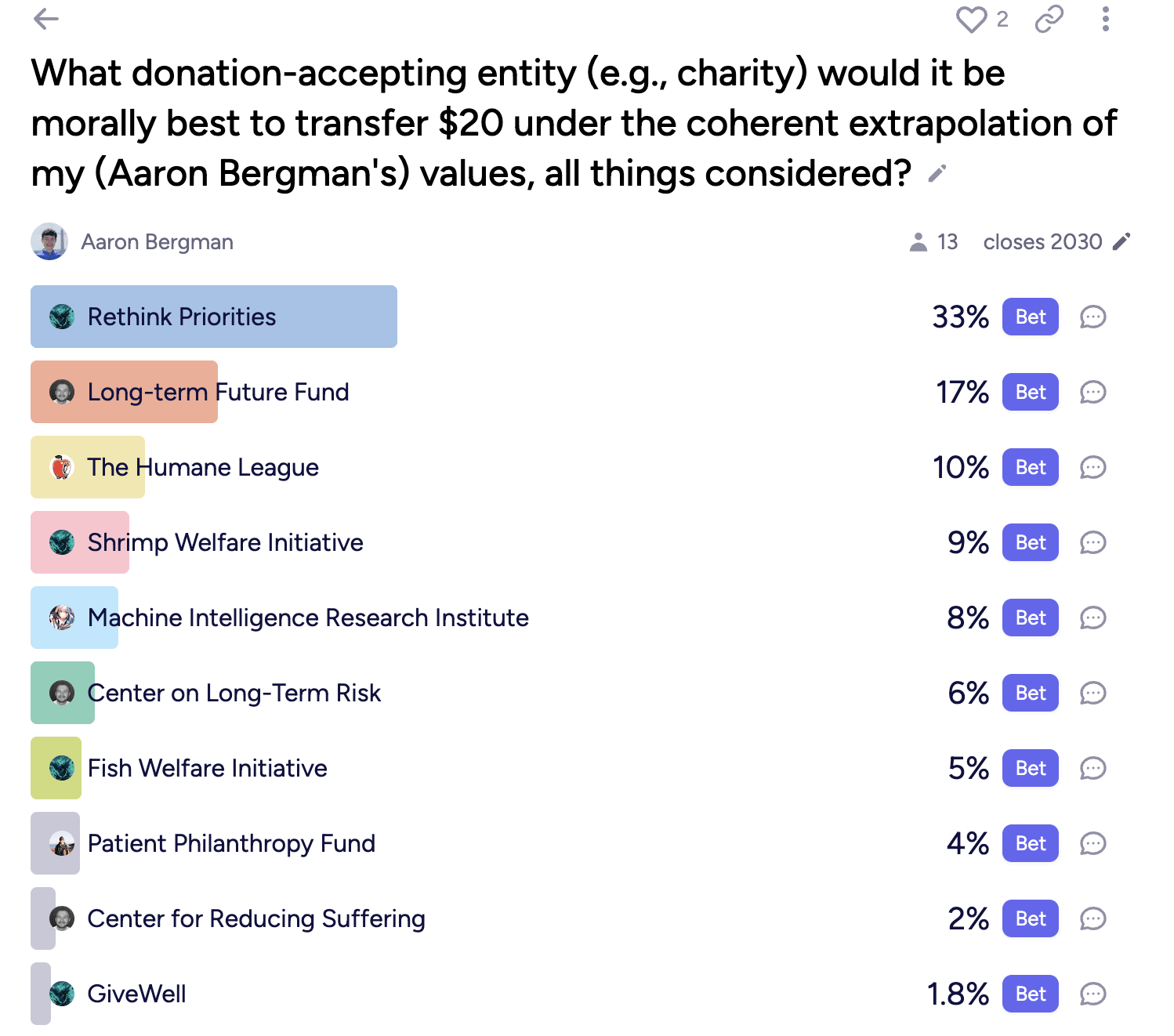

I'm pretty happy with how this "Where should I donate, under my values?" Manifold market has been turning out. Of course all the usual caveats pertaining to basically-fake "prediction" markets apply, but given the selection effects of who spends mana on an esoteric market like this I put non-trivial weight on the (live) outcomes.

I guess I'd encourage people with a bit more money to donate to do something similar (or I guess defer, if you think I'm right about ethics!), if just as one addition to your portfolio of donation-informing considerations.

Thanks! Let me write them as a loss function in python (ha)

For real though:

- Some flavor of hedonic utilitarianism

- I guess I should say I have moral uncertainty (which I endorse as a thing) but eh I'm pretty convinced

- Longtermism as explicitly defined is true

- Don't necessarily endorse the cluster of beliefs that tend to come along for the ride though

- "Suffering focused total utilitarian" is the annoying phrase I made up for myself

- I think many (most?) self-described total utilitarians give too little consideration/weight to suffering, and I don't think it really matters (if there's a fact of the matter) whether this is because of empirical or moral beliefs

- Maybe my most substantive deviation from the default TU package is the following (defended here):

- "Under a form of utilitarianism that places happiness and suffering on the same moral axis and allows that the former can be traded off against the latter, one might nevertheless conclude that some instantiations of suffering cannot be offset or justified by even an arbitrarily large amount of wellbeing."

- Moral realism for basically all the reasons described by Rawlette on 80k but I don't think this really matters after conditioning on normati

Random sorta gimmicky AI safety community building idea: tabling at universities but with a couple of laptops signed into Claude Pro with different accounts. Encourage students (and profs) to try giving it some hard question from eg a problem set and see how it performs. Ideally have a big monitor for onlookers to easily see.

Most college students are probably still using ChatGPT-3.5, if they use LLMs at all. There’s a big delta now between that and the frontier.

I went ahead and made an "Evergreen" tag as proposed in my quick take from a while back:

Meant to highlight that a relatively old post (perhaps 1 year or older?) still provides object level value to read i.e., above and beyond:

- Its value as a cultural or historical artifact

- The value of more recent work it influenced or inspired

This post is half object level, half experiment with “semicoherent audio monologue ramble → prose” AI (presumably GPT-3.5/4 based) program audiopen.ai.

In the interest of the latter objective, I’m including 3 mostly-redundant subsections:

- A ’final’ mostly-AI written text, edited and slightly expanded just enough so that I endorse it in full (though recognize it’s not amazing or close to optimal)

- The raw AI output

- The raw transcript

1) Dubious asymmetry argument in WWOTF

In Chapter 9 of his book, What We Owe the Future, Will MacAskill argues that the future holds positive moral value under a total utilitarian perspective. He posits that people generally use resources to achieve what they want - either for themselves or for others - and thus good outcomes are easily explained as the natural consequence of agents deploying resources for their goals. Conversely, bad outcomes tend to be side effects of pursuing other goals. While malevolence and sociopathy do exist, they are empirically rare.

MacAskill argues that in a future with continued economic growth and no existential risk, we will likely direct more resources towards doing good things due to self-interest and increase... (read more)

A few Forum meta things you might find useful or interesting:

- Two super basic interactive data viz apps

- 1) How often (in absolute and relative terms) a given forum topic appears with another given topic

- 2) Visualizing the popularity of various tags

- An updated Forum scrape including the full text and attributes of 10k-ish posts as of Christmas, '22

- From the data in no. 2, a few effortposts that never garnered an accordant amount of attention (qualitatively filtered from posts with (1) long read times (2) modest positive karma (3) not a ton of comments).

- Column labels should be (left to right):

- Title/link

- Author(s)

- Date posted

- Karma (as of a week ago)

- Comments (as of a week ago)


| Title/link | Author(s) | Date posted | Karma | Comments |
|---|---|---|---|---|
| Open Philanthropy: Our Approach to Recruiting a Strong Team | pmk | 10/23/2021 | 11 | 0 |
| Histories of Value Lock-in and Ideology Critique | clem | 9/2/2022 | 11 | 1 |
| Why I think strong general AI is coming soon | porby | 9/28/2022 | 13 | 1 |
| Anthropics and the Universal Distribution | Joe_Carlsmith | 11/28/2021 | 18 | 0 |
| Range and Forecasting Accuracy | niplav | 5/27/2022 | 12 | 2 |
| A Pin and a Balloon: Anthropic Fragility Increases Chances of Runaway Global Warming | tu | | | |

Made a podcast feed with EAG talks. Now has both the recent Bay Area and London ones:

Full vids on the CEA YouTube page

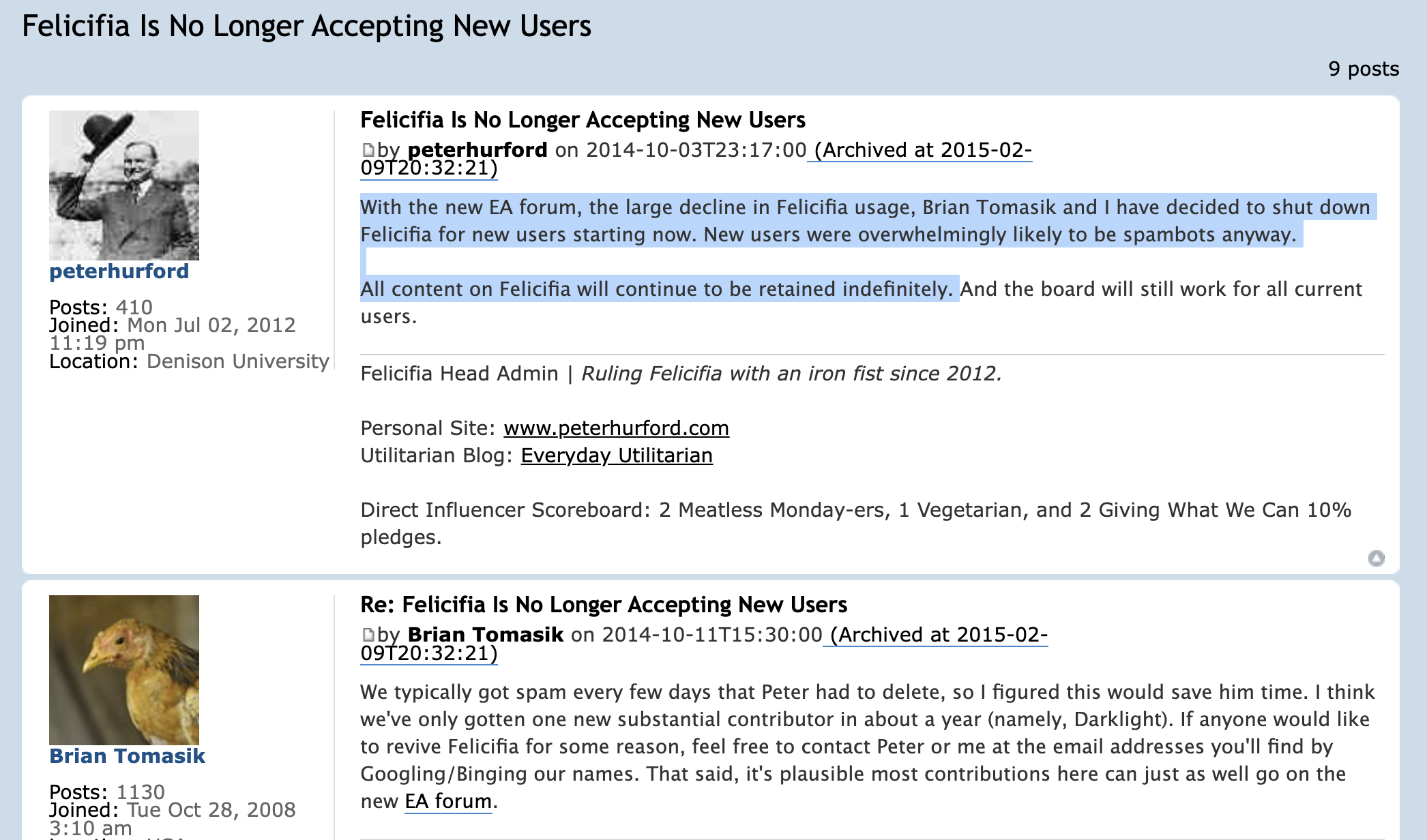

So the EA Forum has, like, an ancestor? Is this common knowledge? Lol

Felicifia: not functional anymore but still available to view. Learned about it thanks to a tweet from Jacy

From Felicifia Is No Longer Accepting New Users:

Update: threw together

- some data with authors, post title names, date, and number of replies (and messed one section up so some rows are missing links)

- A rather long PDF with the posts and replies together (for quick keyword searching), with decent but not great formatting

A (potential) issue with MacAskill's presentation of moral uncertainty

Not able to write a real post about this atm, though I think it deserves one.

MacAskill makes a similar point in WWOTF, but IMO the best and most decision-relevant quote comes from his second appearance on the 80k podcast:

There are possible views in which you should give more weight to suffering...I think we should take that into account too, but then what happens? You end up with kind of a mix between the two, supposing you were 50/50 between classical utilitarian view and just strict negative utilitarian view. Then I think on the natural way of making the comparison between the two views, you give suffering twice as much weight as you otherwise would.

I don't think the second bolded sentence follows in any objective or natural manner from the first. Rather, this reasoning takes a distinctly total utilitarian meta-level perspective, summing the various signs of utility and then implicitly considering them under total utilitarianism.

Even granting that the moral arithmetic is appropriate and correct, it's not at all clear what to do once the 2:1 accounting is complete. MacAskill's suffering-focused ... (read more)
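For concreteness, here is one way to reconstruct the 2:1 figure from the quote. It is only a sketch of one reading, not necessarily MacAskill's exact procedure: it assumes each view assigns a weight to a unit of happiness and a unit of suffering, that the suffering weight is normalized to 1 under both views (exactly the kind of cross-view comparison being questioned here), and that the weights are then averaged by credence. All names are illustrative.

```python
from fractions import Fraction

# Assumed per-view weights on one unit of happiness and one unit of suffering.
views = {
    "classical": {"happiness": Fraction(1), "suffering": Fraction(1)},
    "strict_negative": {"happiness": Fraction(0), "suffering": Fraction(1)},
}

# The 50/50 credence split from the quote.
credences = {"classical": Fraction(1, 2), "strict_negative": Fraction(1, 2)}


def expected_weights(credences, views):
    """Credence-weighted average of each view's happiness and suffering weights."""
    total = {"happiness": Fraction(0), "suffering": Fraction(0)}
    for name, credence in credences.items():
        for kind, weight in views[name].items():
            total[kind] += credence * weight
    return total


weights = expected_weights(credences, views)
ratio = weights["suffering"] / weights["happiness"]
print(weights, ratio)
```

Under these assumptions suffering ends up with twice the weight of happiness. Changing how the two views are normalized against each other changes the ratio, which is part of why the figure doesn't seem to follow in any objective way.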

Hypothesis: from the perspective of currently living humans and those who will be born in the current <4% growth regime only (i.e. pre-AGI takeoff or I guess stagnation) donations currently earmarked for large scale GHW, Givewell-type interventions should be invested (maybe in tech/AI correlated securities) instead with the intent of being deployed for the same general category of beneficiaries in <25 (maybe even <1) years.

The arguments are similar to those for old school "patient philanthropy" except now in particular seems exceptionally uncerta... (read more)

I'm skeptical of this take. If you think sufficiently transformative + aligned AI is likely in the next <25 years, then from the perspective of currently living humans and those who will be born in the current <4% growth regime, surviving until transformative AI arrives would be a huge priority. Under that view, you should aim to deploy resources as fast as possible to lifesaving interventions rather than sitting on them.

LessWrong has a new feature/type of post called "Dialogues". I'm pretty excited to use it, and hope that if it seems usable, reader friendly, and generally good the EA Forum will eventually adopt it as well.

The recent 80k podcast on the contingency of abolition got me wondering what, if anything, the fact of slavery's abolition says about the ex ante probability of abolition - or more generally, what one observation of a binary random variable X says about p as in

Turns out there is an answer (!), and it's found starting in paragraph 3 of subsection 1 of section 3 of the Binomial distribution Wikipedia page:

A closed form Bayes estimator for p also exists when using the Beta distribution as a conjugate prior distribution. When using a genera
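To make that concrete: with a Beta(α, β) prior and x successes in n trials, the posterior is Beta(α + x, β + n − x), so the posterior mean is (x + α) / (n + α + β). The sketch below applies this to a single observed success (n = 1, x = 1). The choice of prior is an assumption on my part, and the function name is just illustrative.

```python
from fractions import Fraction


def posterior_mean(successes, trials, alpha=1, beta=1):
    """Posterior mean of p under a Beta(alpha, beta) prior and binomial data."""
    return Fraction(successes + alpha, trials + alpha + beta)


# One observation, and it was a "success" (e.g. abolition happened).
uniform_prior = posterior_mean(1, 1)                                     # Beta(1, 1)
jeffreys_prior = posterior_mean(1, 1, Fraction(1, 2), Fraction(1, 2))    # Beta(1/2, 1/2)

print(uniform_prior, jeffreys_prior)
```

So a single observation moves a uniform prior from 1/2 to 2/3, and the answer stays sensitive to which prior you started with.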

Idea/suggestion: an "Evergreen" tag, for old (6 months? 1 year? 3 years?) posts (comments?), to indicate that they're still worth reading (to me, ideally for their intended value/arguments rather than as instructive historical/cultural artifacts)

As an example, I'd highlight Log Scales of Pleasure and Pain, which is just about 4 years old now.

I know I could just create a tag, and maybe I will, but want to hear reactions and maybe generate common knowledge.

I tried making a shortform -> Twitter bot (ie tweet each new top level ~quick take~) and long story short it stopped working and wasn't great to begin with.

I feel like this is the kind of thing someone else might be able to do relatively easily. If so, I and I think much of EA Twitter would appreciate it very much! In case it's helpful for this, a quick takes RSS feed is at https://ea.greaterwrong.com/shortform?format=rss
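In case it helps whoever picks this up, here is a minimal sketch of the feed-reading half of such a bot, using only the Python standard library. It assumes the feed is ordinary RSS 2.0 with `<item>`, `<title>`, and `<link>` elements (I haven't verified the exact shape of the GreaterWrong feed), runs on a made-up sample feed rather than the live URL, and leaves fetching and the actual tweeting out entirely. `new_items` and `SAMPLE_FEED` are hypothetical names.

```python
import xml.etree.ElementTree as ET


def new_items(rss_xml, seen_links):
    """Return (title, link) pairs for feed items whose link is not in seen_links."""
    root = ET.fromstring(rss_xml)
    items = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        link = item.findtext("link", default="")
        if link and link not in seen_links:
            items.append((title, link))
    return items


# Made-up sample standing in for the real feed contents.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel>
<title>Sample quick takes</title>
<item><title>Take A</title><link>https://example.org/a</link></item>
<item><title>Take B</title><link>https://example.org/b</link></item>
</channel></rss>"""

fresh = new_items(SAMPLE_FEED, seen_links={"https://example.org/a"})
print(fresh)
```

A real bot would persist `seen_links` between runs and hand each fresh item to whatever posting client it uses.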

Note: this sounds like it was written by ChatGPT because it basically was (from a recorded ramble)🤷

I believe the Forum could benefit from a Shorterform page, as the current Shortform forum, intended to be a more casual and relaxed alternative to main posts, still seems to maintain high standards. This is likely due to the impressive competence of contributors who often submit detailed and well-thought-out content. While some entries are just a few well-written sentences, others resemble blog posts in length and depth.

As such, I find myself hesitant... (read more)

New fish data with estimated individuals killed per country/year/species (super unreliable, read below if you're gonna use!)

That^ is too big for Google Sheets, so here's the same thing just without a breakdown by country that you should be able to open easily if you want to take a look.

Basically the UN data generally used for tracking/analyzing the amount of fish and other marine life captured/farmed and killed only tracks the total weight captured for a given country-year-species (or group of species).

I had ChatGPT-4 provide estimated lo... (read more)

WWOTF: what did the publisher cut? [answer: nothing]

Contextual note: this post is essentially a null result. It seemed inappropriate both as a top-level post and as an abandoned Google Doc, so I’ve decided to put out the key bits (i.e., everything below) as Shortform. Feel free to comment/message me if you think that was the wrong call!

Actual post

On his recent appearance on the 80,000 Hours Podcast, Will MacAskill noted that Doing Good Better was significantly influenced by the book’s publisher:[1]

Rob Wiblin: ...But in 2014 you wrote

A resource that might be useful: https://tinyapps.org/

There's a ton there, but one anecdote from yesterday: it referred me to this $5 iOS desktop app which (among other more reasonable uses) made me this full quality, fully intra-linked >3600 page PDF of (almost) every file/site linked to by every file/site linked to from Tomasik's homepage (works best with old-timey simpler sites like that)

New Thing

Last week I complained about not being able to see all the top shortform posts in one list. Thanks to Lorenzo for pointing me to the next best option:

...the closest I found is https://forum.effectivealtruism.org/allPosts?sortedBy=topAdjusted&timeframe=yearly&filter=all, you can see the inflation-adjusted top posts and shortforms by year.

It wasn't too hard to put together a text doc with (at least some of each of) all 1470ish shortform posts, which you can view or download here.

- Pros: (practically) infinite scroll of insight porn

Infinitely easier said than done, of course, but some Shortform feedback/requests

- The link to get here from the main page is awfully small and inconspicuous (1 of 145 individual links on the page according to a Chrome extension)

- I can imagine it being near/stylistically like:

- "All Posts" (top of sidebar)

- "Recommendations" in the center

- "Frontpage Posts", but to the main section's side or maybe as a replacement for it you can easily toggle back and forth from


- Would be cool to be able to sort and aggregate like with the main posts (nothing to filter by afaik

Events as evidence vs. spotlights

Note: inspired by the FTX+Bostrom fiascos and associated discourse. May (hopefully) develop into longform by explicitly connecting this taxonomy to those recent events (but my base rate of completing actual posts cautions humility)

Event as evidence

- The default: normal old Bayesian evidence

- The realm of "updates," "priors," and "credences"

- Pseudo-definition: Induces [1] a change to or within a model (of whatever the model's user is trying to understand)

- Corresponds to models that are (as is often assumed):

- Well-de

EAG(x)s should have a lower acceptance bar. I find it very hard to believe that accepting the marginal rejectee would be bad on net.

Are you factoring in that CEA pays a few hundred bucks per attendee? I'd have a high-ish bar to pay that much for someone to go to a conference myself. Altho I don't have a good sense of what the marginal attendee/rejectee looks like.

Ok so things that get posted in the Shortform tab also appear in your (my) shortform post, which can be edited to not have the title "___'s shortform" and also has a real post body that is empty by default but you can just put stuff in.

There's also the usual "frontpage" checkbox, so I assume an individual's own shortform page can appear alongside normal posts(?).

The link is: [Draft] Used to be called "Aaron Bergman's shortform" (or smth)

I assume only I can see this but gonna log out and check

Effective Altruism Georgetown will be interviewing Rob Wiblin for our inaugural podcast episode this Friday! What should we ask him?

Haha you’re welcome!