(This is a section from a previous post that combined two ideas into one. I thought it would be good to separate out the second idea and explore it more.)

It may be better to see EA as coordination rather than a marketing funnel with the end goal of working for an 'EA organisation'.

There is still a funnel where people hear about EA and learn more, but they then use the frameworks, questions and EA network to work out what their values are and what that means for cause[1] and career selection.

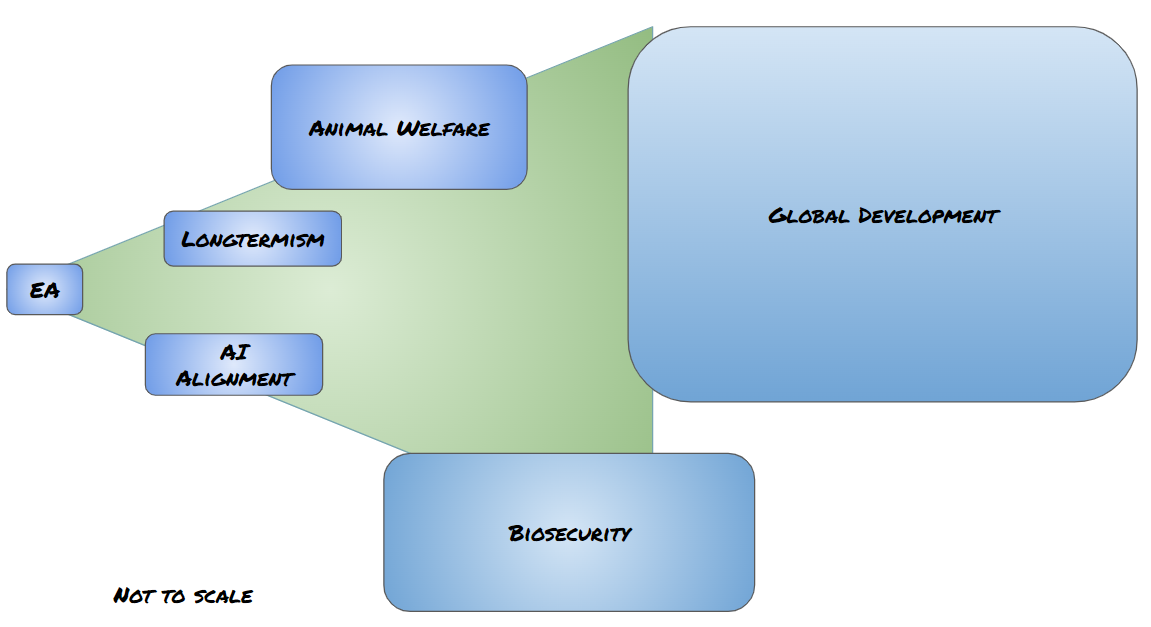

The left side of the diagram is similar to the original funnel model from CEA, with people engaging more with EA. Rather than seeing that as the endpoint, people can then be connected to individuals and organisations in the fields they have a good fit for.

Focusing on the right side of the diagram, I've tried to represent some fields that people often consider after looking into EA. The sizes of the boxes aim to represent the different sizes of the fields[2] and how much overlap[3] they have with EA.

What could be seen as EA is the meta research, movement building and crosscutting support, whereas organisations working on a specific cause area are in a separate field rather than part of EA (which isn't a bad thing).

It's possible that by focusing on EA as a whole rather than specific causes, we are holding back the growth of these fields. It would be surprising if the best strategies for each field were the same as the best strategy for EA.

What would visualising EA in this way mean for movement building?

- More movement building at the field-specific level

- Support for cause areas to have their own version of the Centre for Effective Altruism and equivalent meta organisations

- Less emphasis on leading people down a chain of reasoning (for example, effective altruism --> longtermism --> existential risks --> biosecurity), where they may drop off at any point, and more emphasis on connecting people directly to a field

- More research on how to find, incubate and grow causes

    - This could lead to more meta organisations (the Centre for Effective Centres)

One example would be in designing an EA conference: the attendees would mainly be people who are undecided as to which cause/career to go into, people that can help them decide, key EA stakeholders from each field, and people in nascent fields. Compare this with a conference where many attendees had already decided which cause area to focus on; they would probably get more value from a conference tailored to diving into higher level questions, where everyone had a deeper shared level of understanding.

One key issue is that there are organisations for specific causes, but they tend to focus on research first and comms or lobbying second, with community building third or fourth in their priorities. The organisation might arrange a conference every few years or run some fellowships, but movement building generally isn't their top goal. When something is a third priority, it often doesn't get done well, or doesn't happen at all. This is in comparison to CEA, which I think has helped grow EA by having movement building be the top priority.

There are some projects in these spaces and I've attempted to list a few of them here, but there are still quite a few gaps, and the organisations that do exist are generally small and don't face much competition.

Field Building Gaps

- Global Development

    - Giving money - GiveWell, The Life You Can Save

    - Career - Probably Good

    - Coordination[4] - I can't think of anything here, although it looks like something Open Philanthropy would fund

- Longtermism

    - Giving money - Not much for individuals, but foundations are attempting to work out what to fund, and there is the Long Term Future Fund

    - Career - 80,000 Hours

    - Coordination - There doesn't seem to be any one organisation doing this, although there are a variety of projects like this and there is a newsletter

- AI Alignment

    - Giving money - There is a yearly post by Larks. Given that it doesn't seem hard to fund good projects in this space, this probably isn't much of an issue

    - Career - AI Safety Support for technical research and 80,000 Hours for technical and policy

    - Coordination - The Future of Life Institute has organised some small conferences, but their remit is wider than just AI alignment

- Animal Welfare

    - Giving money - Animal Charity Evaluators

    - Career - Animal Advocacy Careers

    - Coordination - Not much, but there is a new project aiming to cover this gap

- Alternative Proteins

    - Good Food Institute seem to help coordinate money, careers and the field as a whole

- Biosecurity

    - I couldn't think of an organisation for any of the three categories, although there are informal networks and a new hub being set up in Boston

- Existential Risk

    - Career - 80,000 Hours

    - Coordination - CSER, FHI, GCR and FLI all do parts of this, but they tend to focus on research rather than having movement building be a top priority

- Suffering Risks

    - Coordination - Center on Long-Term Risk, although they focus mainly on research

- Environmentalism

    - Giving money - Giving Green, Founders Pledge

    - Career - Work On Climate has a very active Slack community but is only tangentially related to EA

    - Coordination - Effective Environmentalism, but it's only volunteer run

There are lots of other causes that could be added here and they often have much less field building infrastructure.

With fields that are already large, there are usually organisations that do some of this work, and it may not help to reinvent the wheel. It is still worth considering, though, whether there is value in coordinating the people interested in EA within a larger cause. One example is that there are thousands of global development conferences, but none for EA & global development. I think there would be value in having that organised, allowing people in EA to tackle the most important questions in the field, and allowing people in global development to get a strong intro to EA if it is their first event.

If anyone is interested in tackling one of these gaps, I'd love to chat about it and see if there is a way I can help; just send me a message.

[1] I use field and cause interchangeably throughout.

[2] If this were done to scale, then the amount of money/people/organisations in global development would probably be hundreds or thousands of times bigger than in the other fields.

Also, lots of interventions help in multiple fields, for example alt proteins impacting climate change, land use, etc. I haven't attempted to take that into account.

[3] This is a rough guess, represented by how much of the green funnel overlaps with the different fields.

[4] I mean an organisation that does some of the following: conferences and other events, online discussion spaces, supporting subgroups and organisers, outreach, community health, connecting members of the network with each other.

I found the concrete implications of this more cause-oriented model of EA really useful, thanks!

I also agree, at least based on my own perception of the current cultural shift (away from GHD and farmed animal welfare, and towards longtermist approaches), that the most marginally impactful meta-EA opportunities might increasingly be in field-building.

When I was at EAG London this year, I noticed that there was a fair amount of energy and excitement about AI-safety-specific field building. I'm fairly keen on this, since a lot has to go right in order for AI safety to go well, and I think this is more likely when there are people specifically trying to develop a community that can achieve these goals, rather than having to compromise between satisfying the needs of the different cause areas.

One thought I had: if there is an EA conference dedicated to a specific cause area, it might also be worthwhile having some booths related to other EA cause areas in order to address concerns about insularity.

I think this is a good idea. I feel there might be enough EA-adjacent interest in Progress Studies for this to be a field. I think Tom Westgarth was interested here too, and in London you have a small progress cluster.