Intro

In this post I’m looking at how much focus we should place on the wider network of people interested in effective altruism versus highly engaged members.

The Centre for Effective Altruism has a funnel model describing their focus on contributors and core members, as well as on people moving down the funnel. I think group organisers have often interpreted this as the idea that engagement is key, although that is treated as an open question in CEA’s three-factor model of community building. As a result, people often link engagement to impact: they assume that those who are more involved in the community, go to lots of events or work at EA related organisations are having more impact, and so they put on events and tailor content towards those activities.

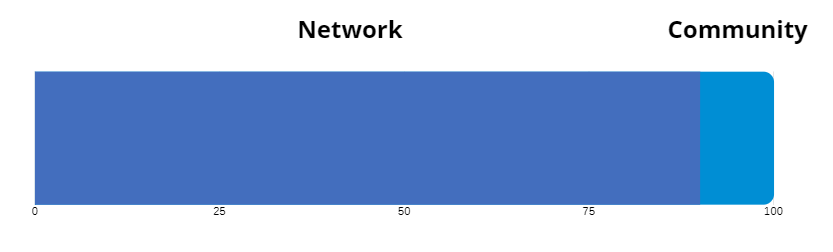

It might be that impact is better represented by the diagram below, which has the percentage of people along the bottom and is meant to show a range of increasing involvement rather than a binary in or out. Although the percentages aren’t accurate, I think the rough picture is true: the vast majority of people who know about effective altruism aren’t involved in the community. The larger network of people who are interested in EA includes people in a wider variety of careers, with potentially busy lives involving work, family and other communities, and potentially even people high up in government, academia and business. They may have 0 or 1 connections to people who are also interested in EA, and look for EA related advice when making donations or thinking about career changes once every few years. I’ve made the diagram assuming equal average impact whether someone is in the ‘community’ or ‘network’, but even if you doubled or tripled the average amount of impact you think someone in the community has, there would still be more overall impact in the network.
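As a rough sketch of the arithmetic behind that last claim, the snippet below compares total impact for the two groups. The 10%/90% split is the loose assumption used in this post rather than a measured figure, and the function names are purely illustrative.

```python
# Rough shares from the post (loosely held assumptions, not measurements):
# about 10% of people in the 'community' and about 90% in the 'network'.
COMMUNITY_PCT = 10
NETWORK_PCT = 90


def total_impact(pct_of_people: float, avg_impact: float) -> float:
    """Total impact of a group = share of people x average impact per person."""
    return pct_of_people * avg_impact


def break_even_multiplier(community_pct: float, network_pct: float) -> float:
    """Multiplier on the community's average impact at which both totals are equal."""
    return network_pct / community_pct


# Network average impact is fixed at 1; the community's is scaled by a multiplier.
for multiplier in (1, 2, 3):
    community = total_impact(COMMUNITY_PCT, multiplier)
    network = total_impact(NETWORK_PCT, 1)
    print(f"{multiplier}x: community total = {community}, network total = {network}")

print("break-even multiplier:", break_even_multiplier(COMMUNITY_PCT, NETWORK_PCT))
```

Under these assumptions, the community's average impact per person would need to be more than 9 times the network's before the community's total exceeded the network's.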

Rather than trying to get this 90% to attend lots of events or get involved in a tangential ‘make work’ project, it may be more worthwhile to provide them with value that they are looking for, whether that’s donation advice, career ideas or connections to people in similar fields.

Anecdotally, I have had quite a few meetings with less engaged members of the wider EA network in London. These are people who may not have been to an event or read the forum, but who have 10-20 years of experience and have gone on to work in higher impact organisations or to connect with relevant people involved in EA so that they can help each other.

I think the advice to get involved in the EA community still makes sense, but we should focus on helping people in the wider network get 1-3 extra connections rather than on making a more tight-knit community.

What does this mean for movement building?

If people agree with this analysis, what would it mean for wider EA movement building and for individuals?

- Less focus on groups based around their location

- More focus on groups based around a shared career, cause or interest area

- For example in careers that could be lawyers, info security, consulting

- For cause areas that could be animal welfare, beneficial AI, new causes

- For interest areas, some current examples are EA for Christians, Project 4 Awesome and the Giving What We Can community

- When people want to get more involved in movement building, there are more resources for them to consider a global career or cause network/community as a possible option

- Less focus on EA aligned organisations for career options (much more discussion here, here and here)

- More support on helping new cause areas become their own fields

- More reference to advice that isn’t EA specific; there doesn’t need to be an EA version of each self improvement book that already exists (although sometimes bespoke advice is useful)

Effective Altruism as Coordination

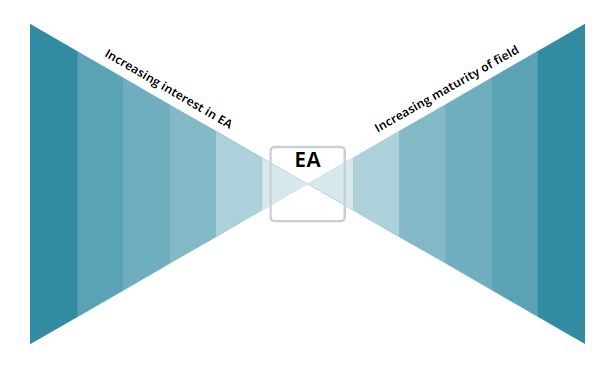

It may be better to see EA as coordination rather than a marketing funnel with the end goal of working for an EA organisation. What is seen as EA may be meta research and movement building whereas organisations working on a specific cause area are in a separate field rather than part of EA.

There is still a funnel where people hear about EA and learn more but they use the frameworks, questions and EA network as a sounding board to see what their values are and what that means for cause selection and also what that means for careers and donations.

The left side of the diagram below is similar to the original funnel model from CEA, with people gaining more interest and knowledge of EA. Rather than seeing that as the endpoint, people can then be connected to individuals and organisations in the causes and careers they have a good fit for.

Building up networks based on careers and causes can build better feedback loops for knowing what career advice works and what roles are potentially impactful in a wider variety of areas. It also allows people to have a higher fidelity introduction to EA if they see relevant conversations from others in the same field or knowledgeable about their cause interests.

EA could also be seen as an incubator for cause areas, with more research into finding new causes to support, testing them and supporting their growth until each becomes a separate field with its own organisations, conferences, forums, newsletters, podcasts etc.

If anyone does want to start a group for people in a particular cause or career I’d be happy to chat or put them in touch with others doing similar things as it’s something that I think is particularly neglected within EA compared to local group support.

(Thank you for writing this; my comment is related to Denkenberger's.) Here is a consideration against the creation of groups around cause areas, if they are open to younger people (not only senior professionals) who are also new to EA. (The argument does not hold if those groups are only for people who are very familiar with EA thinking; of course, among other things, those groups could also make work and coordination more effective.)

It might be that this leads to a misallocation of people and resources in EA as the cost of switching focus or careers increases with this network.

If those cause groups had existed two years ago, I would have joined either the "Global Poverty group" or the "Climate Change group" (for sure not the "Animal Welfare group", for instance), or with some probability a general EA group. Most of my EA friends and acquaintances would have focused on the same cause area (maybe I would be more skilled and knowledgeable about it now, which is important). But the likelihood that I would have changed my cause area because other causes are more important to work on would have been smaller. This could be because I would have been less likely to come across good arguments for other causes, as not many people around me would have had an incentive to point me towards those resources. Switching the focus of my work would also be costly in a selfish sense, as I would no longer see all the acquaintances and friends from the monthly meetups/skypes or career workshops of my old cause area.

I think that many people in EA become convinced over time to focus on the longterm. If we reasonably believe that these are rational decisions, then changing cause areas and ways of working on the most pressing problems (direct work, lobby work, community building, ETG) several times during one's life is one of the most important things when trying to maximize impact, and should be as cheap as possible for individuals and hence encouraged. That means that the cost of providing information about other cause areas and the private costs of switching should be reduced. I think that this might be difficult with potential cause area groups (especially in smaller cities with fewer EAs in general).

(Maybe this is similar to the fact that many Uni groups try not to give concrete career advice before students have engaged in cause prioritization discussion. Otherwise, people bind themselves too early to cause areas which seem intuitively attractive, or which fit the perceived identity of the person or some underlying beliefs they hold and have never questioned ("AI seems important, I have watched SciFi movies", "I am altruistic so I will help to reduce poverty", "Capitalism causes poverty, hence I won't do earning to give").)

I think when creating most groups/sub-communities it's important that there is a filter to make sure people have an understanding of EA, otherwise it can become an average group for that cause area rather than a space for people who have an interest in EA and that specific cause, and are looking for EA related conversations.

I think most people who have an interest in EA also hold uncertainty about their moral values, the tractability of various interventions and which causes are most important. It can be easy sometimes to pigeonhole people with particular causes depending on where they work or donate but I don't meet many people who only care about one cause, and the EA survey had similar results.

If people are able to come across well reasoned arguments for interventions within a cause area they care about, I think it's more likely that they'll stick around. As most of the core EA material (newsletters, forum, FB) makes reference to multiple causes, it will be hard to avoid these ideas, especially if they are also in groups for their career/interests/location.

I think the bigger risk is losing people who instantly bounce from EA when it doesn't even attempt to answer their questions rather than the risk of people not getting exposed to other ideas. If EA doesn't have cause groups then there's probably a higher chance of someone just going to another movement that does allow conversation in that area.

This quote from an 80,000 Hours interview with Kelsey Piper phrases it much better.

"Maybe pretty early on, it just became obvious that there wasn’t a lot of value in preaching to people on a topic that they weren’t necessarily there for, and that I had a lot of thoughts on the conversations people were already having. Then I think one thing you can do to share any reasoning system, but it works particularly well for effective altruism is just to apply it consistently, in a principled way, to problems that people care about. Then, they’ll see whether your tools look like useful tools. If they do, then they’ll be interested in learning more about that. I think my ideal effective altruist movement, and obviously this trade off against lots of other things and I don’t know that we can be doing more of it on the margin. My ideal effective altruist movement had insightful nuanced, productive, takes on lots and lots of other things so that people could be like, “Oh, I see how effective altruists have tools for answering questions. “I want the people who have tools for answering questions to teach me about those tools. I want to know what they think the most important questions are. I want to sort of learn about their approach."

This feels very important, and the concepts of the EA Network, EA as coordination and EA as an incubator should be standard even if this will not completely transform EA. Thanks for writing it so clearly.

I mainly want to suggest that this relates strongly to the discussion in the recent 80k podcast about sub-communities in EA, and mostly to the conversation between Rob and Arden at the end.

This part of the discussion really rang true to me, and I want to hear more serious discussion on this topic. To many people outside the community it's not at all clear what AI research, animal welfare, and global poverty have in common. Whatever corner of the movement they encounter first will guide their perception of EA; this obviously affects their likelihood of participation and the chances of their giving to an effective cause.

We all mostly recognize that EA is a question and not an answer, but the question that ties these topics together itself requires substantial context and explanation for the uninitiated (people who are relatively unused to thinking in a certain way). In addition, entertaining counterintuitive notions is a central part of lots of EA discourse, but many people simply do not accept counterintuitive conclusions as a matter of habit and worldview.

The way the movement is structured now, I fear that large swaths of the population are basically excluded by these obstacles. I think we have a tendency to write these people off. But in the "network" sense, many of these people probably have a lot to contribute in the way of skills, money, and ideas. There's a lot of value—real value of the kind we like to quantify when we think about big cause areas—lost in failing to include them.

I recognize that EA movement building is an accepted cause area. But I'd like to see our conception of that cause area broaden by a lot: even the EA label is enough to turn people off, and strategies for communication of the EA message to the wider world have severely lagged the professionalization of discourse within the "community."

People in EA regularly talk about the most effective community members having 100x or 1000x the impact of a typical EA-adjacent person, with impact following a power law distribution. For example, 80k attempts to measure "impact-adjusted significant plan changes" as a result of their work, where a "1" is a median GWWC pledger (which is already more commitment than a lot of EA-adjacent people, who are curious students or giving-1% or something, so maybe 0.1). 80k claims credit for dozens of "rated 10" plan changes per year, a handful of "rated 100" per year, and at least one "rated 1000" (see p15 of their 2018 annual report here).

I'm personally skeptical of some of the assumptions about future expected impact 80k rely on when making these estimates, and some of their "plan changes" are presumably by people who would fall under "network" and not "community" in your taxonomy. (Indeed on my own career coaching call with them they said they thought their coaching was most likely to be helpful to people new to the EA community, though they think it can provide some value to people more familiar with EA ideas as well.) But it seems very strange for you to anchor on a 1-3x community vs network impact multiplier, without engaging with core EA orgs' belief that 100x-10000x differences between EA-adjacent people are plausible.

The 1-3x and 10/90 percent figures are loosely held assumptions. I think it may be more accurate to assume there are power law distributions both for people who would consider themselves in the community and for those who are in the wider network. If both groups have a similar distribution, then the network probably has an order of magnitude more people who have 100x-1000x impact. For example, some junior members of the civil service are quite involved in EA, but there are also senior civil servants and lots of junior civil servants who are interested in EA but don't attend meetups.

I'm not sure that it is a core EA org belief that the difference is down to whether someone is heavily engaged in the community or not. Lots of examples they use of people who have had a much larger impact come from before EA was a movement.

It seems unlikely that the distribution of 100x-1000x impact people is *exactly* the same between your "network" and "community" groups, and if it's even a little bit biased towards one or the other the groups would wind up very far from having equal average impact per person. I agree it's not obvious which way such a bias would go. (I do expect the community helps its members have higher impact compared to their personal counterfactuals, but perhaps e.g. people are more likely to join the community if they are disappointed with their current impact levels? Alternatively, maybe everybody else is swamped by the question of which group you put Moskovitz in?) However assuming the multiplier is close to 1 rather than much higher or lower seems unwarranted, and this seems to be a key question on which the rest of your conclusions more or less depend.
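To make the sensitivity concrete, here is a minimal sketch with a two-point impact distribution: most people at 1x and a small tail at 1000x. The tail fractions are purely hypothetical numbers chosen for illustration; they are not estimates of either group.

```python
def average_impact(tail_fraction: float, tail_impact: float = 1000.0,
                   base_impact: float = 1.0) -> float:
    """Mean impact per person when a small fraction sits in a high-impact tail."""
    return (1 - tail_fraction) * base_impact + tail_fraction * tail_impact


# Hypothetical tail fractions (assumptions for illustration only):
# 1.0% of one group versus 0.5% of the other at 1000x impact.
group_a = average_impact(0.010)
group_b = average_impact(0.005)

print(f"group A average: {group_a:.3f}")
print(f"group B average: {group_b:.3f}")
print(f"ratio of averages: {group_a / group_b:.2f}")
```

Because the tail dominates the mean, a difference of half a percentage point in who falls in the tail nearly doubles the average, which is why the direction of any bias matters so much.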

Just a quick clarification that 80k plan changes aim to measure 80k's counterfactual impact, rather than the expected lifetime impact of the people involved. A large part of the spread is due to how much 80k influenced them vs. their counterfactual.

Thanks for writing this; it is important to think about. However, I have very different impressions of the ratio of impact of people in the EA community versus the EA network. Comparisons have been made before about the impact of an EA and, e.g., the average developed country person. Take a relatively simple example of charity for the present generation: if we say the impact of a typical developed country charity is one, and of a typical less developed country charity is 100, and 1% of charitable giving goes to less developed countries, the average would have an impact per dollar of 1.99 (false precision for clarity). However, if the EA donates to the most effective less developed country charity, that could have a ratio of 1000 (perhaps saving a life for $5000 vs $5 million), or about 500 times as effective as the average US money going to charity (in the average, very little would go to GiveWell recommended charities). Then there could be a factor of several more in terms of the percentage of income given to charity (though it appears not yet), so I think the poverty EA being three orders of magnitude more effective than the mean developed country giver is reasonable. If you switch to the longterm future, then an extremely tiny amount of conventional money goes to high impact ways of improving the long-term future, so the ratio of the EA to mean would be much higher.
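The arithmetic in this paragraph can be sketched as follows. The impact values (1, 100, 1000) and the 1% share are the illustrative figures from the comment itself, and the function name is made up for this example.

```python
def mean_impact_per_dollar(share_to_ldc: float, impact_dc: float = 1.0,
                           impact_ldc: float = 100.0) -> float:
    """Weighted average impact per dollar across developed-country (dc) and
    less-developed-country (ldc) charities."""
    return (1 - share_to_ldc) * impact_dc + share_to_ldc * impact_ldc


# 99% of giving at impact 1, plus 1% of giving at impact 100.
average_giver = mean_impact_per_dollar(0.01)

# Most effective charity: a life saved for $5,000 rather than $5 million.
top_charity = 5_000_000 / 5_000

print(f"average giving: {average_giver:.2f} per dollar")
print(f"top charity: {top_charity:.0f} per dollar")
print(f"ratio: {top_charity / average_giver:.1f}")
```

The ratio comes out a little above 500, matching the "about 500 times" figure in the comment before any adjustment for the share of income donated.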

I'm not sure how to quantify the impact of the EA network. It very well could be significantly higher impact than the mean developed country person, because the EA community members will generally have chosen high-impact fields and therefore have connections with EA network people in those fields. However, many people's connections would not be in their field, and the field as a whole might not be very effective. Also, the EA community tends to be especially capable. So depending on the cause area, I would be surprised if the typical EA community member is less than one order of magnitude more effective than the typical EA network member. So then the impact of the EA community would be larger than the EA network (which I believe you are saying is 9x as many people and I think this is the right order of magnitude). Then there is the question of how much influence the EA community could have on the EA network. I would guess it would be significantly lower than the influence the EA community could have on itself. I still think it is useful to think about what influence we could have on the EA network because it is relatively neglected, but I don't think it should be as large a change in priorities as you are suggesting. If we could bring more professionals into the EA community through your suggested groups, I think that could be high impact.

I'm not sure that an EA community member is 'especially capable' compared to a capable person who attends fewer events or is less engaged with online content. The wider network may have quite a few people who have absorbed 5+ years of online material to do with EA but rarely interacted, and those people will have used the same advice to choose donations and careers as more engaged members.

I also think the network has higher variance. You may get people who are not doing much altruistically, but there will also be more people in business with 20+ years of experience, leading academics in their field and people higher up in government who want to do good with their careers.

Side point

Whilst I'm aware that you move on from this point, I'm not sure it's useful to have as a comparison when the post is about people who are aware of EA and of having impact within their career rather than everyone in a developed country. It may be that it's also hard to parse your text without paragraphs and removing that first point would have helped.

Thanks for the feedback; I have now broken it into two paragraphs. It's not clear to me whether to use the reference class of the average person in a developed country or the reference class of the EA community. I was not envisioning someone who has read 5+ years of EA content and is making career and donation decisions as the "EA network." In that case I would agree that the EA community would be a better reference class for the EA network. I was envisioning the EA network more as people who have heard about EA through an EA community contact, and might've had a one hour conversation. How would you define the EA community and how large do you think it is?

I think the community is composed of people who either attend multiple EA events each year or contribute to online discussion, and some proportion of people who work at an EA related organisation, so maybe between 500-2000 people.

There are quite a few people who might attend an EAG or read content but don't get involved and wouldn't consider themselves part of the community. I might be biased as part of my work at EA London has included having lots of conversations with people who often have a great understanding of EA but have never been to an event.

This is very helpful to understand where you are coming from. Local groups have 2124 regular attendees (more than an event every 2 months or more than 25% of events, which appears to be more selective than your criterion, and not all groups would have filled out the survey). Then there are ~18,000 main EA Facebook group members (and there would be some non-overlaps in other EA-themed Facebook groups), but many of them would not actually be contributing to online discussions. Of course there would be overlap with the active local group members, but there could be people in neither of these groups who are still in the community. Giving What We Can members are now up to 4,400, and I would count them as being part of the EA community (though some of those have gone silent). 843 out of the 2576 people who took the 2018 EA survey had taken the GWWC pledge (33%). Not all of the EA survey takers identified as EA, and not all would meet your criterion for being in the community, but if this were representative, that would indicate about 13,000 EAs. Still, in 2017, there were about 23,000 donors to GiveWell. And there would be many other EA-inspired donations and a lot of people making career decisions based on EA who are not engaged directly with the community. So that would be evidence that the number of people making EA-informed donations and career decisions is a lot bigger than the community, as you say. The 80k newsletter has >200,000 subscribers; do you have a different term for that level of engagement? I would love to hear others’ perspectives as well.
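The "about 13,000" figure appears to come from scaling the GWWC membership by the pledge rate in the survey. A minimal sketch of that extrapolation, assuming (as the comment does) that survey takers are representative:

```python
# Figures quoted in the comment above.
survey_respondents = 2576   # people who took the 2018 EA survey
survey_pledgers = 843       # of whom had taken the GWWC pledge
gwwc_members = 4400         # total Giving What We Can members

# Share of survey takers who are pledgers.
pledge_rate = survey_pledgers / survey_respondents

# If the same share holds across all EAs, then members / rate estimates the total.
estimated_eas = gwwc_members / pledge_rate

print(f"pledge rate: {pledge_rate:.1%}")
print(f"estimated number of EAs: {estimated_eas:,.0f}")
```

The estimate is only as good as the representativeness assumption; if pledgers were more likely than others to take the survey, the true number would be higher.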

This post introduces the idea of structuring the EA community by cause area/career proximity as opposed to geographical closeness. Persons in each interest-/expertise-based network are involved with EA to a different extent (CEA’s traditional funnel model) and the few most involved amend EA thinking, which is subsequently shared with the network.

While this post offers an important notion of organizing the EA community by networks, it does not specify the possible interactions among networks and their impact on global good or mention sharing messages with key stakeholders in various networks in a manner that minimizes reputational loss risk and improves prospects for further EA-related dialogues. Further, the piece mentions feedback loops in the context of inner-to-outer career advice sharing, which can be a subset of overall knowledge sharing.

Thus, while I can recommend this writing as a discussion starter to relatively junior community organizers who may otherwise be hesitant to encourage their group members[1] to engage with the community beyond their local group, this thinking should not be taken as a guide for the organization of EA networks, because it does not pay attention to strategic network development.

[1] Members who understand EA and are willing to share their expertise with others in the broader EA community, so that the engagement of these persons outside of their local group would be overall beneficial.