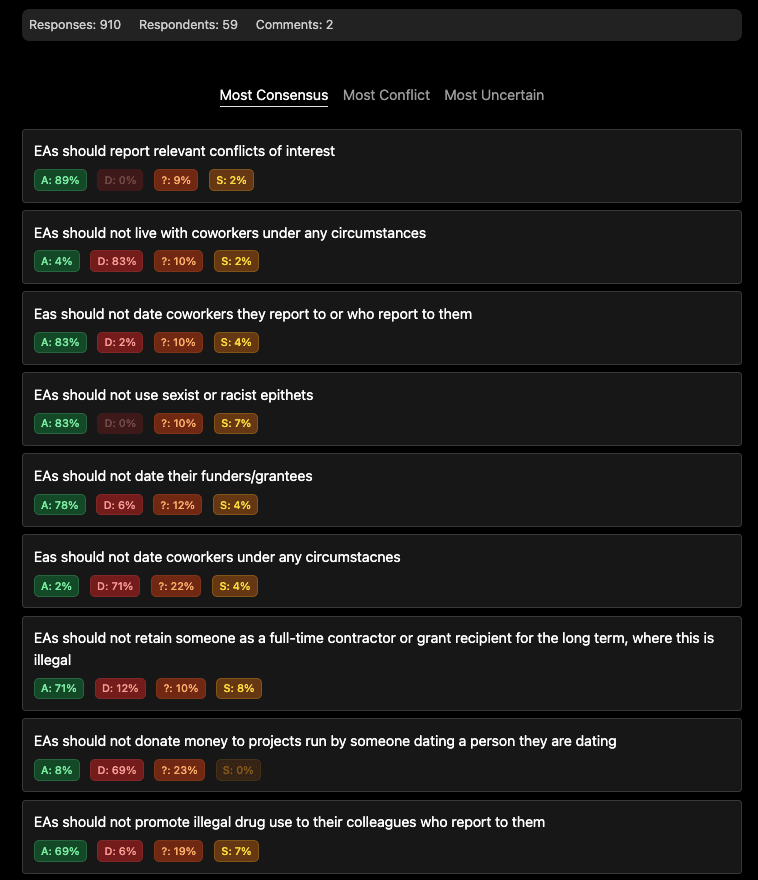

A week ago @Rockwell wrote this list of things she thought EAs shouldn't do. I am glad this discussion is happening. I ran a poll (results here), and here is my attempt at a list more people can agree with.

We can do better, so someone feel free to write version 3.

The list

- These are not norms for what it is to be a good EA, but rather boundaries around things that would damage trust. When someone does these things, we widely agree it is a bad sign

- EAs should report relevant conflicts of interest

- EAs should not date coworkers they report to or who report to them

- EAs should not use sexist or racist epithets

- EAs should not date their funders/grantees

- EAs should not retain someone as a full-time contractor or grant recipient for the long term, where this is illegal

- EAs should not promote illegal drug use to their colleagues who report to them

Commentary

Beyond racism, crime, and conflicts of interest, the clear theme is "take employment power relations seriously".

Some people might want other things on this list, but I don't think there is widespread enough agreement to push those things as norms. Some examples:

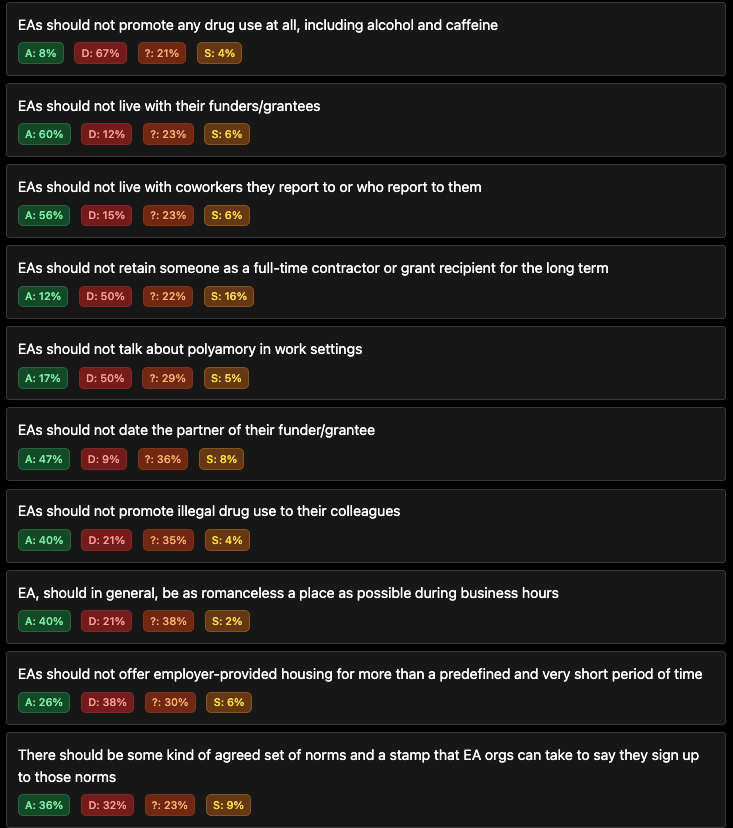

- Illegal drugs - "EAs should not promote illegal drug use to their colleagues" - 41% agreed, 20% disagreed, 35% said "it's complicated", 4% skipped

- Romance during business hours - "EA, should in general, be as romanceless a place as possible during business hours" - 40% agreed, 21% disagreed, 36% said "it's complicated", 2% skipped

- Housing - "EAs should not offer employer-provided housing for more than a predefined and very short period of time" - 27% agreed, 37% disagreed, 31% said "it's complicated", 6% skipped

I know not everyone loves my use of polls or my vibes as a person. But consensus is a really useful tool for moving forward. Sure, we can push aside those who disagree, but things that 70%+ of people agree on tend to move forward much more quickly and painlessly. And it builds trust that we don't steamroll opposition.

So I suggest that rather than a big list of things that some parts of the community think are obvious and others think are awful, we try to get a short list of things that most people think are pretty good/fine/obvious.

Once we have a "checkpoint" that is widely agreed, we can tackle some thornier questions.

Full poll results

For context on my own vote: I’d give the same answer for talking about monogamy.

People should clearly be able to say “my partner(s) and I are celebrating my birthday tonight”, “it’s my anniversary!”, and “look at this cute picture of my metamour’s dog!”, and then answer questions if a colleague asks, “what’s a metamour?” Just like all colleagues should be able to talk about their families at work.

People should be aware that it’s risky to spend work time nerding out about dating, romantic issues, sex, hitting on people, etc. People should also be aware that mono people in the Bay have often reported feeling pressured or judged for not being poly. But just as with any relationship type, discussing romance at work is very likely to make someone feel uncomfortable, and junior people often won’t feel they can say so.