The EA Hub will be retired on the 6th of October 2024.

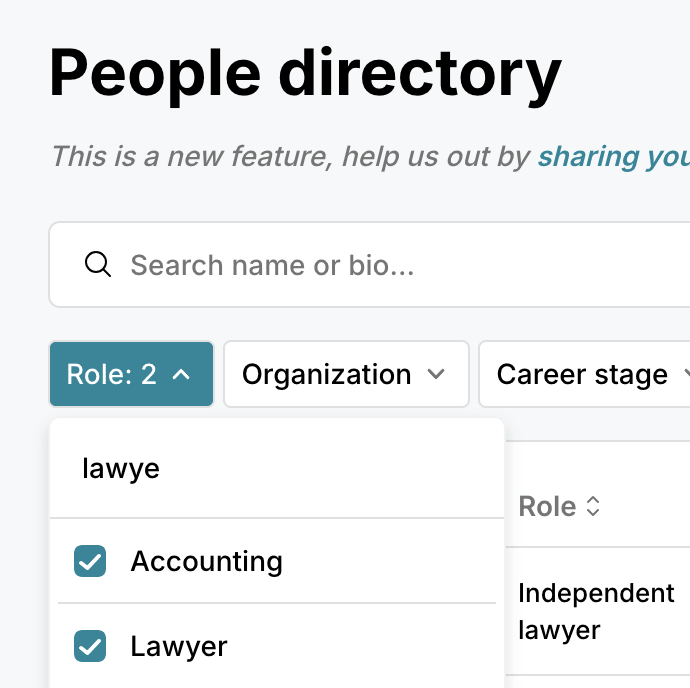

In 2022 we announced that we would stop further feature development and maintain the EA Hub until it was ready to be replaced by a new platform. In July this year, the EA Forum launched its People Directory, which offers a searchable directory of people involved in the EA movement, similar to what the Hub provided in the past.

We believe the Forum is now better positioned to fulfil the EA Hub’s mission of connecting people in the EA community online. The Forum team is much better resourced, and users have many reasons to visit the Forum (e.g. for posts and events), which is reflected in its higher traffic and larger user base. The Hub’s core team has also moved on to other projects.

EA Hub users will be informed of this decision via email. All data will be deleted after the website has been shut down.

We recommend that you use the EA Forum’s People Directory to continue to connect with other people in the effective altruism community. The feature is still in beta mode and the Forum team would very much appreciate feedback about it here.

We would like to thank the many people who volunteered their time in working on the EA Hub.

Receiving the news that the Hub was shutting down is the reason I finally came here and looked around properly.

Glad I did.