On 17 February 2024, the mean length of the main text of the write-ups of Open Philanthropy’s largest grants in each of its 30 focus areas was only 2.50 paragraphs, whereas the mean grant amount was 14.2 M 2022-$[1]. For 23 of the 30 largest grants, the main text was just 1 paragraph. The calculations and information about the grants are in this Sheet.

Should the main text of the write-ups of Open Philanthropy’s large grants (e.g. at least 1 M$) be longer than 1 paragraph? I think greater reasoning transparency would be good, so I would like it if Open Philanthropy had longer write-ups.

In terms of other grantmakers aligned with effective altruism[2]:

- Charity Entrepreneurship (CE) produces an in-depth report for each organisation it incubates (see CE’s research).

- Effective Altruism Funds has write-ups of 1 sentence for the vast majority of the grants of its 4 funds.

- Founders Pledge has write-ups of 1 sentence for the vast majority of the grants of its 4 funds.

- Future of Life Institute’s grants have write-ups roughly as long as Open Philanthropy’s.

- Longview Philanthropy’s grants have write-ups roughly as long as Open Philanthropy’s.

- Manifund's grants have write-ups (comments) of a few paragraphs.

- Survival and Flourishing Fund has write-ups of a few words for the vast majority of its grants.

I encourage all of the above except for CE to have longer write-ups. I focussed on Open Philanthropy in this post given it accounts for the vast majority of the grants aligned with effective altruism.

Some context:

- Holden Karnofsky posted about how Open Philanthropy was thinking about openness and information sharing in 2016.

- There was a discussion in early 2023 about whether Open Philanthropy should share a ranking of grants it produced then.

[1]

Open Philanthropy has 17 broad focus areas, 9 under global health and wellbeing, 4 under global catastrophic risks (GCRs), and 4 under other areas. However, its grants are associated with 30 areas.

I define the main text as everything other than headings, excluding paragraphs of the following types:

- “Grant investigator: [name]”.

- “This page was reviewed but not written by the grant investigator. [Organisation] staff also reviewed this page prior to publication”.

- “This follows our [dates with links to previous grants to the organisation] support, and falls within our focus area of [area]”.

- “The grant amount was updated in [date(s)]”.

- “See [organisation's] page on this grant for more details”.

- “This grant is part of our Regranting Challenge. See the Regranting Challenge website for more details on this grant”.

- “This is a discretionary grant”.

I count lists of bullets as 1 paragraph.
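The counting rule above can be sketched in code. This is only an illustration of the definition, not the method used in the Sheet: the function name, the `(kind, text)` input format, and the regular expressions approximating the excluded paragraph types are all assumptions, and the example write-up is made up.

```python
import re

# Hypothetical patterns approximating the excluded paragraph types listed above.
# The exact wording on real grant pages may differ.
EXCLUDED_PATTERNS = [
    r"^Grant investigator:",
    r"^This page was reviewed but not written by the grant investigator",
    r"^This follows our .* support",
    r"^The grant amount was updated in",
    r"^See .* page on this grant for more details",
    r"^This grant is part of our Regranting Challenge",
    r"^This is a discretionary grant",
]


def count_main_text_paragraphs(blocks):
    """Count main-text paragraphs in a write-up.

    `blocks` is an assumed input format: a list of (kind, text) pairs,
    where kind is "heading", "paragraph", or "bullet".
    """
    count = 0
    in_list = False
    for kind, text in blocks:
        if kind == "bullet":
            # A run of consecutive bullets counts as 1 paragraph.
            if not in_list:
                count += 1
                in_list = True
            continue
        in_list = False
        if kind == "heading":
            continue  # headings are not main text
        if any(re.match(p, text.strip()) for p in EXCLUDED_PATTERNS):
            continue  # boilerplate paragraph types are excluded
        count += 1
    return count


# Made-up write-up, for illustration only.
example = [
    ("heading", "Grant"),
    ("paragraph", "A grant to support the organisation's general operations."),
    ("bullet", "First planned activity."),
    ("bullet", "Second planned activity."),
    ("paragraph", "This follows our earlier support, and falls within our focus area of X."),
    ("paragraph", "Grant investigator: Jane Doe"),
]
print(count_main_text_paragraphs(example))  # 2
```

Here the descriptive paragraph and the bullet list each count once, while the heading and the two boilerplate paragraphs are ignored.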

[2]

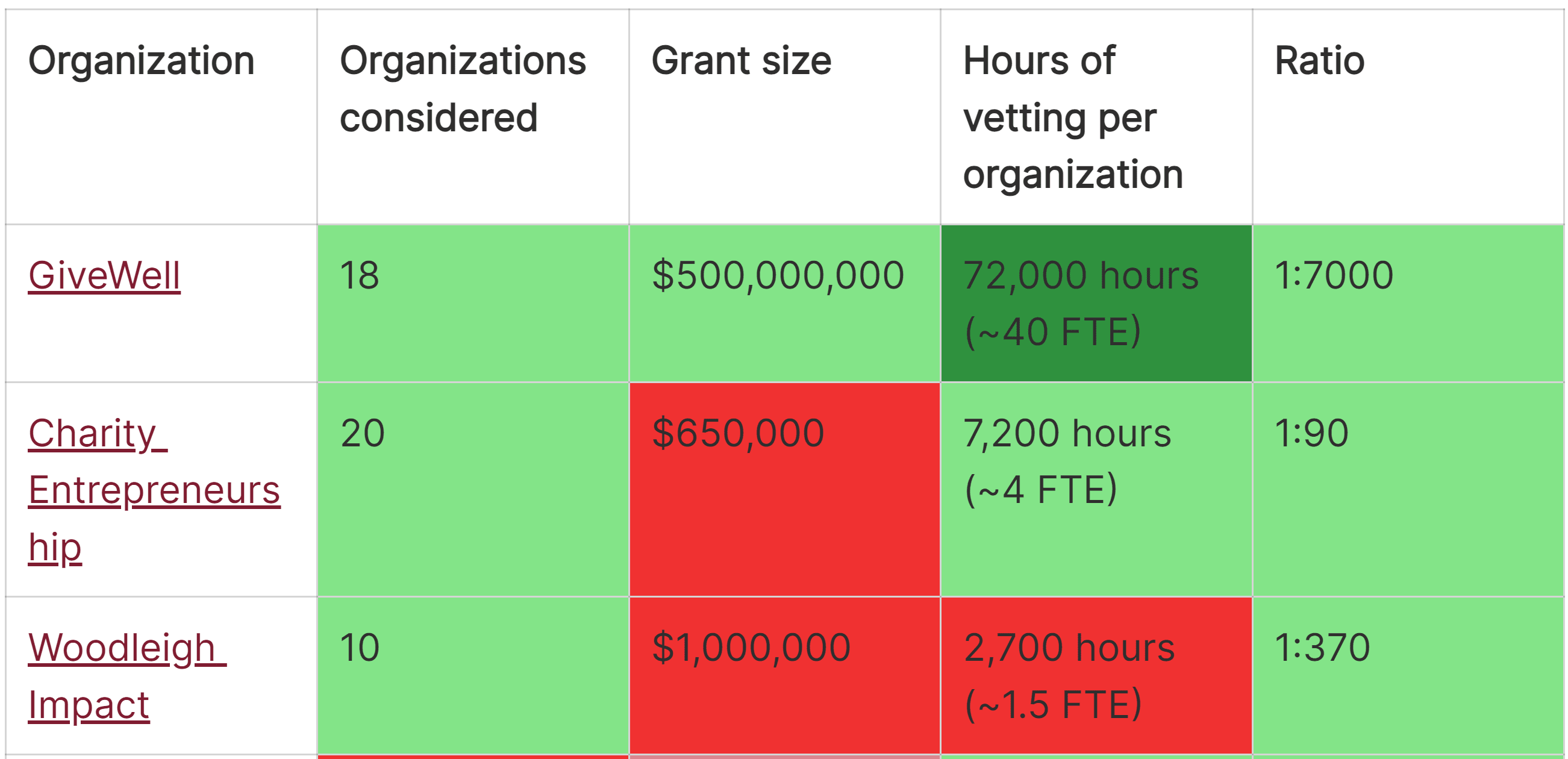

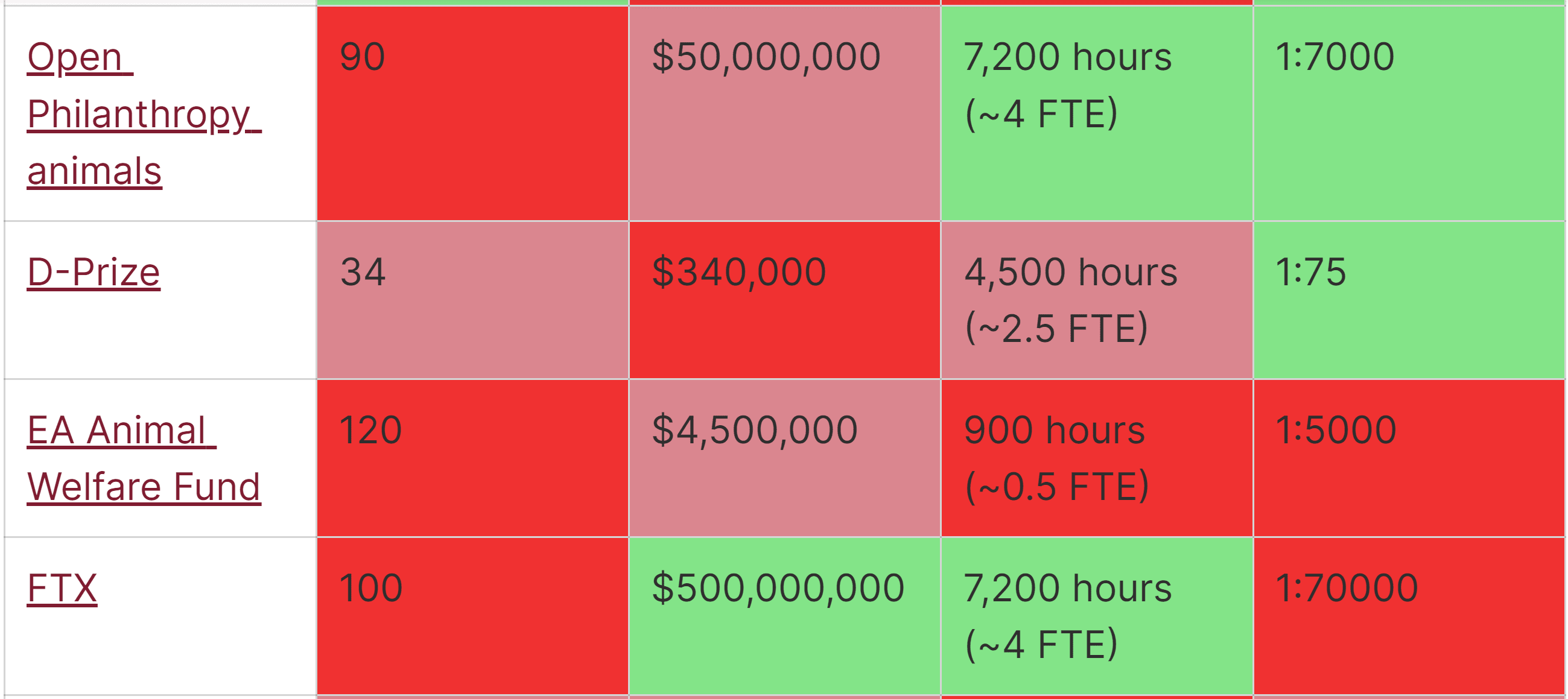

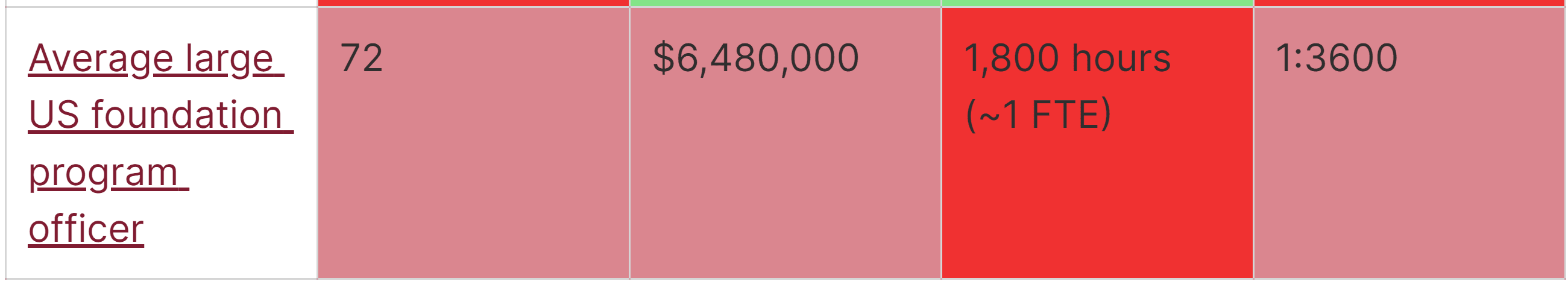

The grantmakers are ordered alphabetically.

There's a lot of room between publishing more than ~1 paragraph and "publishing their internal analyses." I didn't read Vasco as suggesting publication of the full analyses.

Assertion 4 -- "The costs for Open Phil to reduce the error rate of analyses, would not be worth the benefits" -- seems to be doing a lot of work in your model here. But it seems to be based on assumptions about the nature and magnitude of errors that would be detected. If a number of errors were material (in the sense that correcting them would have changed the grant/no grant decision, or would have seriously changed the funding level), I don't think it would take many errors for assertion 4 to be incorrect.

Moreover, if an error were found in, say, a five-paragraph summary of a grant rationale, the odds of that error being material / important would seem higher than for the average error found in a 30-page writeup. Presumably the facts and conclusions that made it into the short writeup would be ~the more important ones.