Epistemic status: 30% (plus or minus 50%). Further details at the bottom.

In the 2019 EA Cause Prioritisation survey, Global Poverty remains the most popular single cause across the sample as a whole. But after further engagement with EA, around 42% of respondents changed their cause area, and of those, a majority (54%) moved towards the Long Term Future/Catastrophic and Existential Risk Reduction.

Many people find that donating helps them stay engaged (and donating continues to be a great thing to do), but there has also been much discussion of other ways people can contribute positively.

In thinking about the long-run future, one area of research has been improving humanity's resilience to disasters. A 2014 paper looked at global refuges, and more recently ALLFED, among others, has studied ways to feed humanity in disaster scenarios.

Much work has been done, and much more is needed, to reduce risks directly, for example through pandemic preparedness, improved nuclear treaties, and better-functioning international institutions.

But we believe there are still opportunities to increase resilience in disaster scenarios. Wouldn't it be great if there were a way to directly link the simplicity of donations with effective methods for the recovery of civilisation?

Canning what we give

In The Knowledge (p. 40), Lewis Dartnell estimates how long a supermarket would be able to feed a single person:

So if you were a survivor with an entire supermarket to yourself, how long could you subsist on its contents? Your best strategy would be to consume perishable goods for the first few weeks, and then turn to dried pasta and rice... A single average-sized supermarket should be able to sustain you for around 55 years - 63 if you eat the canned cat and dog food as well.

But for a whole population, there would be far fewer resources to go around per person.

The UK Department for Environment, Food and Rural Affairs (DEFRA) estimated in 2010 that there was a national stock reserve of 11.8 days of 'ambient slow-moving groceries'. (ibid., p. 40)

It seems clear that there aren't enough canned goods.

Our proposal

We propose that:

- We try both to expand the range of things that are canned and to find ways to bury the cans deep in the earth (ideally beyond the reach of the mole people)

- Donors to Giving What We Can (GWWC) instead consider Canning What We Give (CWWG)

- Donors put valuable items that they would want in a disaster scenario into cans, e.g. fruit salad, Worcester sauce, marmalade

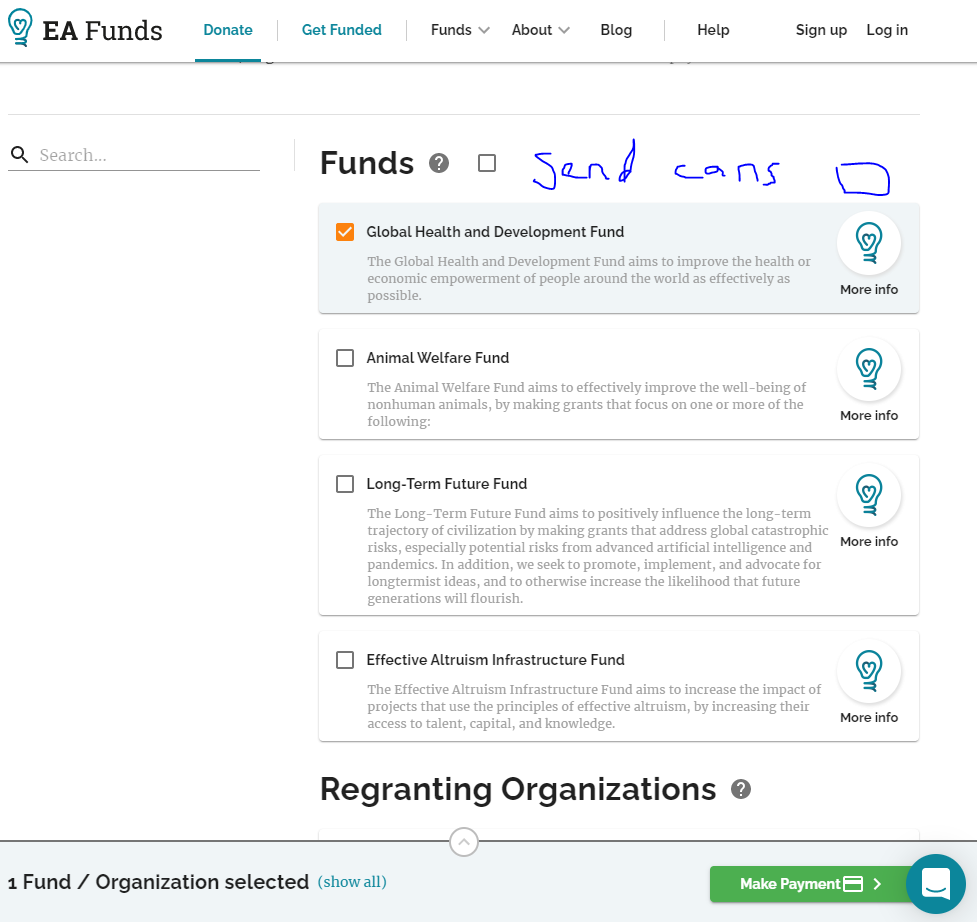

- EA funds provides a donation infrastructure to support sending cans

A mock-up of the CWWG dashboard

Risks

We are concerned that:

- Cluelessness could lead to uncertain outcomes

- The production of too many cans could make things too shiny and there could be a shortage of sunglasses

- There might not be enough can openers

- Focusing industrial production on making cans could lead to a global arms race to make more cans

Further information

Partial credit for this goes to Harri Besceli; we came up with the idea together.

This was a joke. Happy April Fools' Day.

"Yes We Can"