There was a recent discussion on Twitter about whether global development had been deprioritised within EA. This struck a chord with some people (*edit*: despite the claim in the Twitter thread being false). So:

What is the priority of global poverty within EA, compared to where it ought to be?

I am going to post some data and some theories. I'd like people in the comments to try to falsify them, and then we'd know the answer.

- Some people seem to think that global development is lower priority than it should be within EA. Is this view actually widespread?

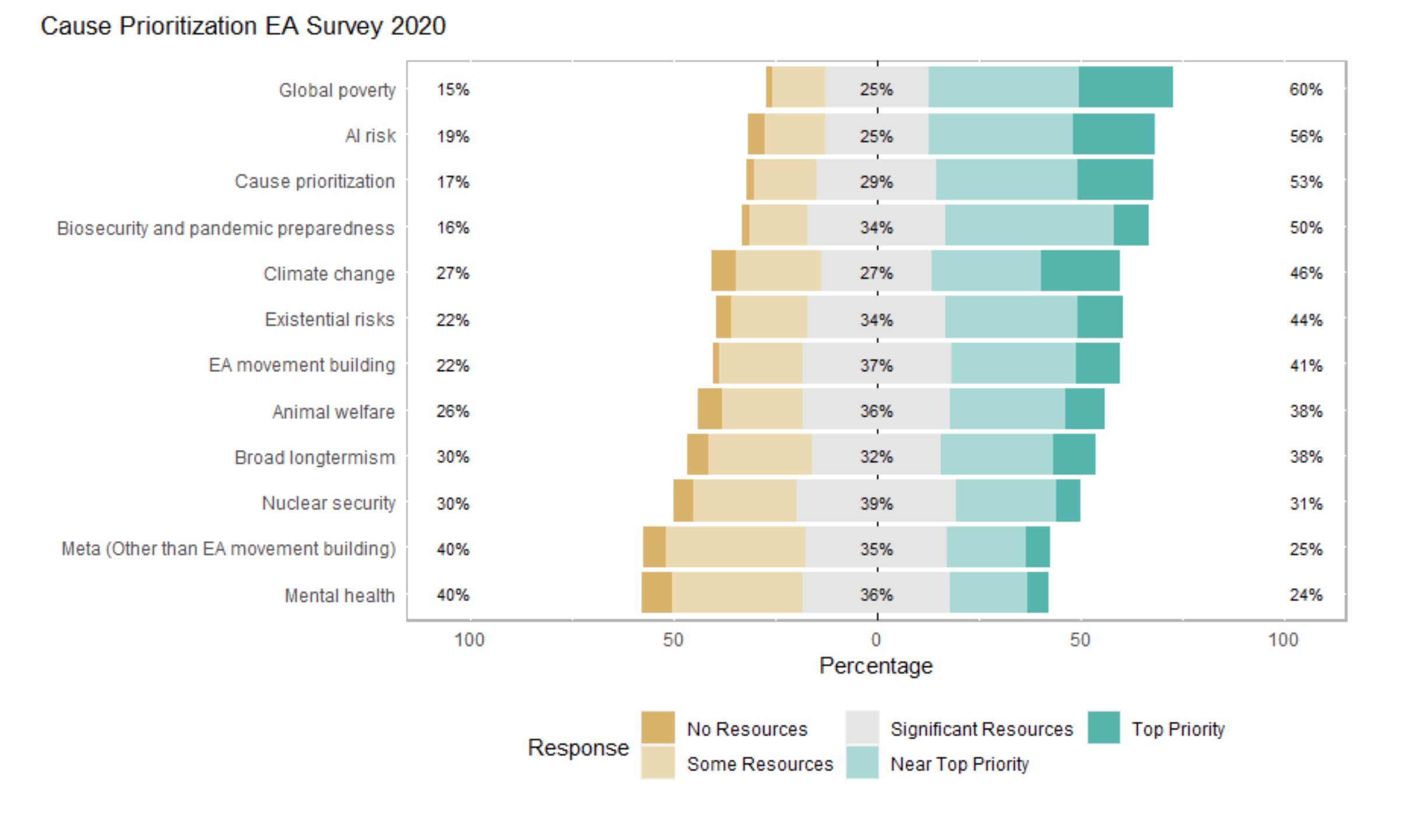

- Global poverty was held in very high esteem in 2020. Without further evidence we should assume it still is. In the 2020 survey, no cause area had a higher average rating (I'm eyeballing this graph) or a higher % of near top + top priority ratings. In 2020, global development was considered the highest priority by EAs in general.

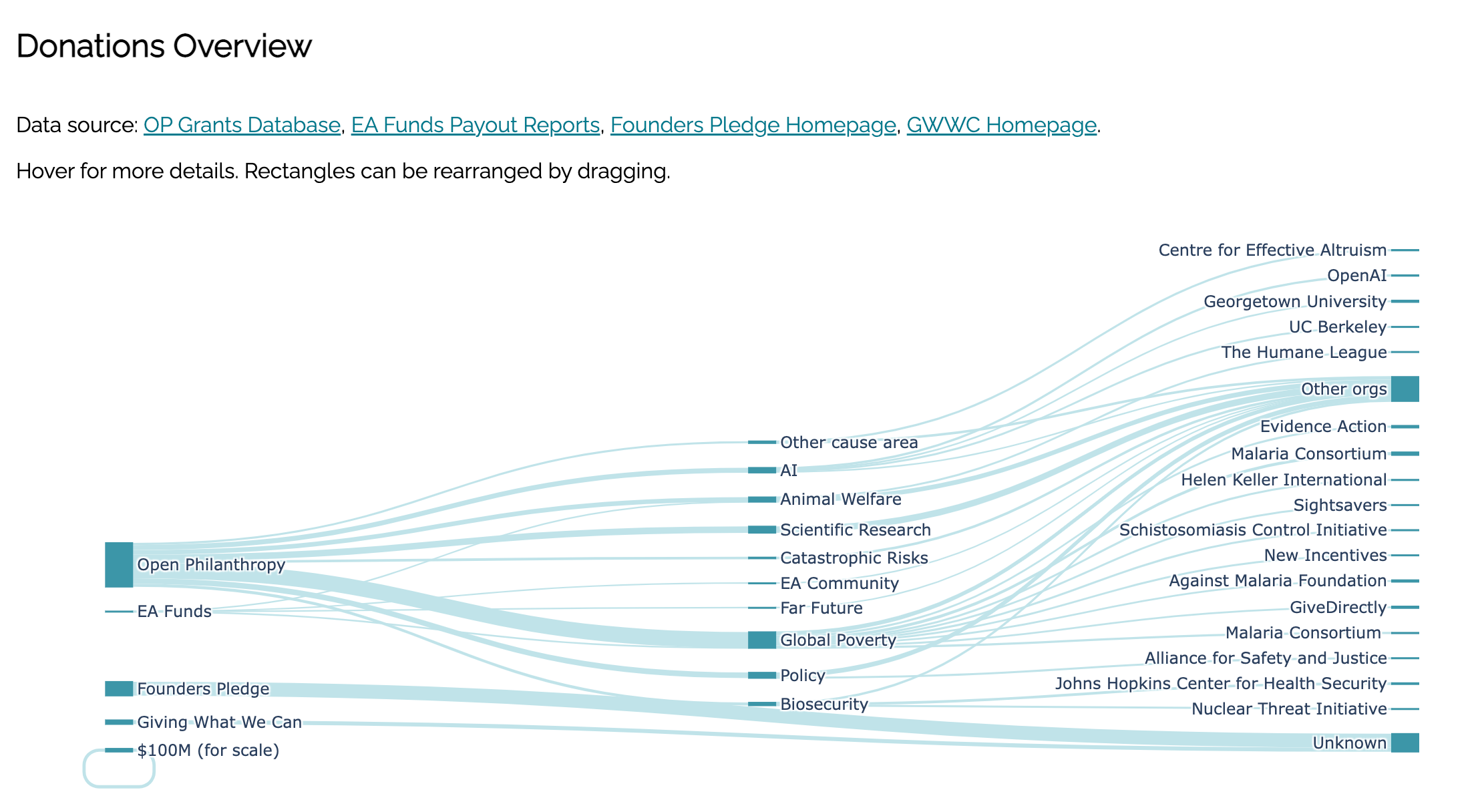

- According to https://www.effectivealtruismdata.com/, global poverty receives more money from Open Phil and GWWC than any other cause area.

- The FTX future fund lists economic growth as one of its areas of interest (https://ftxfuturefund.org/area-of-interest/)

- Theory: Elite EA conversation discusses global poverty less than AI or animal welfare. What is the share of cause areas among forum posts, 80k episodes or EA tweets? I'm sure some of this information is trivial for one of you to find. Is this theory wrong?

- Theory: Global poverty work has ossified around GiveWell and its top charities. Jeff Mason and Yudkowsky both made variations of this point. Yudkowsky's reasoning was that risk-takers hadn't been in global poverty research anyway; it attracted a more conservative kind of person. I don't know how to operationalise a test of this, but maybe one of you can.

- Personally, I think that many people find global poverty uniquely compelling. It's unarguably good. You can test it. It has quick feedback loops (compared to many other cause areas). I think it's good to be in a coalition with the most effective part of an altruistic space that resonates with so many people. I like global poverty as a key concern (even though it's not my key concern) because I like good coalition partners. And longtermist and global development EAs seem to me to be natural allies.

- I can also believe that if we care about the lives of people currently alive in the developing world and have AI timelines of less than 20 years, we shouldn't focus on global development. I'm not an expert here and this view makes me uncomfortable, but conditional on short AI timelines, I can't find fault with it. In terms of QALYs, there may be more risk to the global poor from AI than from malnourishment. If this is the case, EA would move away from being divided by cause areas towards a primary divide of "AI soon" vs "AI later" (though deontologists might argue it's still better to improve people's lives now than to save them from something that kills all of us). Feel free to suggest flaws in this argument.

- I'm going to seed a few replies in the comments. I know some of you hate it when I do this, but please bear with me.

What do you think? What are the facts about this?

Endnote: I put 50% on this discussion not working, to be resolved by me in two weeks. I think that people don't want to work together to build this sort of vague discussion on the Forum. We'll see.

Please note that the Twitter thread linked in the first paragraph starts with a highly inaccurate factual claim. In reality, at EAGxBoston this year there were five talks on global health, six on animal welfare, and four talks and one panel on AI (alignment plus policy). Methodology: I collected these numbers by filtering the official conference app agenda by topic and event type.

I think it's unfortunate that the original tweet got a lot of retweets / quote-tweets and Jeff hasn't made a correction. (There is a reply saying "I should add, friend is not 100% sure about the number of talks by subject at EAGx Boston," but that's not an actual correction, and it was posted as a separate comment so it's buried under the "show more replies" button.)

This is not an argument for or against Jeff's broader point, just an attempt to combat the spread of specific false claims.

Here's a corrective: https://twitter.com/JeffJMason/status/1511663114701484035?t=MoQZV653AZ_K1f2-WVJl7g&s=19

Unfortunately I can't do anything about where it shows up. Elon needs to get working on that edit button.

Jeff should not delete the tweet

Jeff should delete the tweet

Sorry, I've made an edit to this effect. An oversight on my part.

This is exactly why I wish I could allow others to edit my posts - then you could edit it too!

This post wanted data, and I’m looking forward to that … but here is another anecdotal perspective.

I was introduced to EA several years ago via The Life You Can Save. I learned a lot about effective, evidence-based giving, and “GiveWell approved” global health orgs. I felt that EA shared the same values as the traditional “do good” community, just even more obsessed with evidence-based, rigorous measurement. I changed my donation strategy accordingly and didn’t pay much more attention to the EA community for a few years.

But in 2020, I checked back in to EA and attended an online conference. I was honestly quite surprised that very little of the conversation was about how to measurably help the world’s poor. Everyone I talked to was now focusing on things like AI Safety and Wild Animal Welfare. Even folks that I met for 1:1s, whose bio included global health work, often told me that they were “now switching to AI, since that is the way to have real impact.” Even more surprising was the fact that the most popular arguments weren’t based on measurable evidence, like GiveWell, but based on philosophical arguments and thought experiments. The “weirdness” of the philosophical arguments was a way to signal EA-ness; lack of empirical grounding wasn’t a dealbreaker anymore.

Ultimately I misjudged what the “core” principles of EA were. Rationalism and logic were a bigger deal than empiricism. But in my opinion, the old EA was defined by citing mainstream RCT studies to defend an intervention that was currently saving X lives. The current EA is defined by citing esoteric debates between Paul Christiano and Eliezer, which themselves cite EA-produced papers… all to decide which AI Safety org is actually going to save the world in 15 years. I’m hoping for a rebalance towards the old EA, at least until malaria is actually eradicated!

The original EA materials (at least the ones that I first encountered in 2015 when I was getting into EA) promoted evidence-based charity, that is, making donations to causes with very solid evidence. But the formal definition of EA is equally or more consistent with hits-based charity: making donations with limited or equivocal evidence but large upside, with the expectation that you will eventually hit the jackpot.

I think the failure to separate and explain the difference between these things leads to a lot of understandable confusion and anger.

I find it a bit hard to know what to do with this. It seems fair to me that the overall tone has changed. Do you think the tone within global poverty work has? I.e., if someone wants to do RCTs, can't they still?

Upvote this if you think that global poverty is under prioritised within EA.

Great post! I don't have a fully formed view of the consequences of EA's increasing focus on longtermism, but I do think it is important that we notice and discuss these trends.

I actually spent some of last Saturday categorising all EA Forum posts by their cause area[1], and am planning on spending next Saturday making a few graphs of any trends I can spot on the Forum.

The reason I wanted to do this is exactly that I had an inkling that global poverty posts were getting comparatively less engagement than they used to, and was wondering whether this was actually the case or just my imagination.

I just put the code I've written so far on GitHub in case anyone else wants to contribute or do something similar: https://github.com/MperorM/ea-forum-analysis

Superb work from you! You should get in touch with the person who runs this and put it on https://www.effectivealtruismdata.com/

Upvote this if you think that global poverty is appropriately or over prioritised within EA.

Is there anywhere I can upvote something like "global poverty is likely appropriately prioritised within EA so far, but I guess longtermism & Animal Welfare have been receiving proportionally more attention and resources in the last couple of years than they used to, probably because that's where some salient EAs think there are juicier arguments and low-hanging fruits to grab, and now we even read more about disputes between randomistas and systemistas than about how to compare AMF vs. GD impact these days"?

Feel free to write that.

On the other hand, they explicitly justify it with longtermist considerations (I'm pretty OK with that).

All EA funders who give away more than $10 million should produce data on their funding by cause area which can be published on sites like https://www.effectivealtruismdata.com/

edited to take Chris Leong's feedback into account.

I wouldn't say all funders, but maybe all funders over a certain size.

This post would be better if one could write wiki posts, i.e. if anyone could edit my post but it could still receive karma.

I think the global poverty thing is great for PR, and it's very important to have a highly visible discourse on it in outer EA outposts like the subreddit, because otherwise people see us talking about AI and think we're a cult. There's a serious problem on Reddit with many young people getting taken in by an intelligent, fashionable and dangerous anti-rationalsphere group that grows at 300% per year. It's very important that EA makes humanitarian noises on Reddit rather than just doing our usual thing of helping people, to handle the antagonistic group. I used to frequent the sub trying to handle them and I think we got a reputation increase, but right now my mental health can't cope with that kind of emotional labour and the required self-restraint and empathy for influential insane BPD people who will soon grow up to become journalists. There's already at least one journalist who dedicates his career to attacking rationalsphere x-risk work and gets published in intellectually fashionable outlets like salon.com; there's a very real danger imho that we'll have a plague of these bastards soon and we'll be considered the new far right, as this guy is already successfully painting us. We need to make more good PR noises and take a countering-violent-extremism approach to our adversaries, to prevent them from turning young intelligent people against us.

I think there is serious neglect of PR in EA. EAs seem to be PR-blind. Those who see the threat are often tempted to argue with nutters and make it worse. People forget that teens grow up to be adults with academic posts and journalism careers. I personally understand that mundane humanitarianism is relatively unimportant compared to x-risk, but we still need to look after the reputation side of things, so I suggest that retaining some humanitarian stuff on the highly visible outside of the movement, and talking about it constantly, is necessary for survival. I sometimes feel that the anti-superficial-bullshit-altruism sentiment in our glorious movement works as a kind of masochism where we basically fail to get credit for anything and invite irrational but intelligent and socially skilled people to attack us. We need a highly socially skilled and emotionally resilient counter-force to spout humanitarianism and take care of people's feelings.

To respond to one specific point here: I don't think EA exists to save young people from being taken in by anti-rationalism. If it achieves that, great, but I don't think that's our stated goal and I'm not sure it should be. I guess I worry a bit when PR concerns become ends in themselves rather than instrumental goals.

But maybe I'm wrong? I like helping people become more rational, but do you think it's underrated by EA currently?

I'm pretty clearly longtermist by most reasonable definitions of that word and think there are much better reasons to care about GHW than PR; for example, the fact that future people matter doesn't stop me thinking that people dying of malaria is bad. I think this is also generally true of my longtermist friends.

I have thought this about PR for some time. Do you know anyone in EA who is skilled at PR?

I think global poverty and health is important because…

there is a case that it is the most effective cause from a utilitarian standpoint. This is particularly the case if you have a population ethics other than the total view, or you are suffering-focused (or you think it is as likely as not that the future will be net bad).

I have not seen any strong case that someone with, say, a person-affecting view can clearly have a greater impact by working to prevent extinction risk.

(Animal welfare considerations and the meat eaters dilemma may counteract this. But they also counteract the case for preventing extinction.)

I think the person-affecting view isn't very common in EA? I'm not 100% sure on this, but I believe Derek Parfit started with the person-affecting view and later shifted away from it.

One can also have a population ethic that is neither total nor person-affecting. I can have a value function that is concave in the number of people existing going forward and some measure of how happy they are. I can also heavily weight suffering even if I have a total view.

As to your claim, I'm not sure what the data is. My impression is that maybe about half the EA people who have thought about it have a view other than total utilitarianism, and a larger share have some doubts about it, some moral uncertainty.

I also think that the fact that 'pronatalism' is not a big EA cause suggests people aren't fully on board with the total view?

But I have a vague sense that people are working on some survey/opinion research on this? It might be an interesting question to poll EAs on in some way.

And of course, the share of people (even EAs) who believe something doesn't determine its rightness.

The below statement is anecdotal; I think it's hard to have a fact-based argument without clear/more up-to-date survey data.

The EA movement includes an increasing number of extreme long-termists (i.e., we should care about the trillions of humans who come after us rather than the 7 billion alive now). If AI development happens even in the next 200 years (and not 20) then we would still want to prioritize that work, per a long-termist framework.

I also find the above logic unsettling; there's a long philosophical argument to be had regarding what to prioritize and when, and even if we should prioritize broad topic areas like these.

A general critique of utilitarianism is that without bounds, it results in some extreme recommendations.

There are also weighing arguments -- "we can't have a long term without a short term" and more concretely "people whose lives are saved now, discounted to the present, have significant power because they can help us steer the most important century in a positive direction."

Potentially relevant post here: https://forum.effectivealtruism.org/posts/GFkzLx7uKSK8zaBE3/we-need-more-nuance-regarding-funding-gaps.

The post's author claims that there is lots of funding for big global poverty orgs but less for smaller, newer, innovative orgs, whereas farmed animal welfare and AI have more funding available for small new projects and individuals.

This could mean that the total amount of funding available is not a complete measure of how prioritised an area is.

I believe longtermism is prioritised, that it should be prioritised, and in some ways I would even prioritise it more [1]; however, I also think that there are limits. For example, if there had really been just two sessions on Global Health and Development, then I would feel that things had gone too far, but Ben Kuhn has explained that this is factually incorrect.

I don't think the conclusion that long-termism is being increasingly prioritised should surprise anyone, given the publication of Toby Ord's The Precipice and Will MacAskill's upcoming What We Owe The Future. They founded the movement, so it shouldn't be surprising that their updating has significantly shifted the direction of the movement. I expect Holden's Most Important Century has been influential as well, as he has a lot of credibility among global poverty people from having co-founded GiveWell.

Anecdotally, I can tell you that it seems that people who are more involved in EA tend to have shifted more towards longtermism than those less involved or those who are new to EA[2]. I think Australia has also shifted less towards longtermism, which is unsurprising since it's more at the periphery and so ideas take time to propagate.

If you want evidence, you can look at 80,000 Hours' top career paths, and observe that the FTX Future Fund[3] focuses on long-termism, with the FTX Foundation making smaller contributions to global health/climate change[4]. Also, notice how Open Philanthropy has launched a new $10 million grantmaking program: supporting the effective altruism community around Global Health and Wellbeing. While this marks a substantial increase in the amount of money dedicated to growing this arm of the community, note that the reason they are starting this arm is that "the existing program evaluates grants through the lens of longtermism". Further, while Open Philanthropy appointing a Co-CEO focused on near-termism represented an increase in the prominence of near-termism, it should be remembered that this took place because Holden was increasingly focusing on long-termism.

I think we should be open about these changes and I should be clear I support all of these choices. Long-termism has been increasing in prominence in EA and it feels like this should obviously be reflected in terms of how the movement operates. At the same time, the movement seems to have been taking sensible actions to ensure that the ball isn't being dropped on short-termism. Obviously, there's more that could be done here and I'd be keen to hear any ideas that people have.

I'll acknowledge that these actions may seem surprising to some in the base, as they are slightly ahead of where the base is at, but as far as I can tell, the base seems to be mostly coming along[5]. I also acknowledge that some of these changes may be disconcerting to some people, but I believe EA would have lost its soul if we were unwilling to update because we didn't want our members to feel sad.

I want to finish by saying that many people are probably unaware of how much of a shift has taken place recently. You might wonder how this is possible, considering how many of the links above effectively state it outright, but I suppose many EAs are very busy and don't have time to read all of this.

[1] I know this is controversial.

[2] Many might not even know of long-termism yet.

[3] Now the biggest EA funder.

[4] The Future Fund is looking to spend $100 million - $1 billion this year, while the FTX Foundation has earmarked about $20 million for charity.

[5] In a functional movement, the leadership leads. It should obviously pay close attention to the views of the base and try to figure out if they might actually be correct, but the leadership should also be willing to diverge from their views, especially when they can bring the base along with them.

Can you elaborate on your endnote that "this discussion won't work"? What would success look like?

I didn't think it would get more than 30 karma or that people would supply answers to 2 or more of the questions.

So here's a funny twist. I personally have been longtermist since independently coming to the conclusion that it was the correct way to conceptualize ethics, around 30 years ago. I realized that I cared about future people about as much as about current people far away. After some thought, I settled on global health/poverty/rule-of-law as one of my major cause areas because I believe that bringing current people out of bad situations is good not only for them but for the future people who will descend from them or be neighbors of their descendants, etc. Also, society as a whole sees these people suffering, thinks and talks about them, and adjusts its ethical decision-making accordingly. I think that the common knowledge that we are part of a worldwide society which allows children to starve or suffer from cheaply curable diseases negatively influences our perception of how good our society COULD be. I think my other important cause areas, like existential risk mitigation and planning for sustainable exponential growth, are also important, but... suppose we succeed at these two and fail at the first. I don't want a galaxy-spanning civilization which allows a substantial portion of its subjects to suffer hugely from preventable problems the way we currently allow our fellow humans to suffer. That wouldn't be worse than no galaxy-spanning civilization, but it would be a lot less good than one which takes reasonable care of its members.