This is the first post in a planned three-part series.

Overview of the Series

Conflict between Great Power states (countries with global interests and the military strength to defend them against their rivals) has shaped the course of history. The Napoleonic Wars redrew the borders of Europe. The League of Nations, created in the aftermath of World War I, foreshadowed the structure of today's international order. World War II allowed the United States and the Soviet Union to emerge as dueling superpowers. The resulting Cold War sparked ideological conflicts around the world, accelerated the development of technologies like rocketry and computers, and produced stockpiles of thousands of nuclear weapons. And, of course, each of these conflicts involved fighting around the world that caused hundreds of thousands or millions of casualties.

Over the last year I’ve been thinking about the role Great Power conflict will play in the 21st century. From a longtermist perspective, I've been particularly focused on its role as an existential risk factor: something that affects multiple other existential risks. This is the first post in a planned three-part series summarizing my current views. The series tackles three broad questions:

Post 1: By what mechanisms does tension between Great Powers affect total existential risk?

Post 2: How likely are various scenarios, including all-out war, this century?

Post 3: What, if anything, can we do to make good outcomes more likely and bad outcomes less likely?

Working through these questions, I updated my views a lot. My most important updates were:

- My estimate of the likelihood of a World War-type conflict between Great Powers increased. My current best guess is that, between now and 2100, we face a ~35% chance of a serious, direct conflict between Great Powers, a ~10% chance of a war causing more deaths than World War II, and a 0.1% to 1% chance of an extinction-level war. I previously put more stock in the argument that humanity had experienced a sudden, dramatic, and lasting decline in the likelihood of conflict after World War II.

- My estimate of how bad such a war would be if it occurred increased significantly. While I find it intuitively hard to imagine how a war could grow to be worse than World War II, the long-tailed distribution of deaths per war makes it difficult to rule out extreme outcomes.

- I think there’s about a 1% to 2% chance of an existential disaster due to Great Power war before 2100. I’ve generated this number by disaggregating several specific pathways.[1]

- I think the range of possible outcomes is broader than I previously believed. I updated away from thinking that conflicts are overdetermined, at least on sufficiently long timescales. It seems to me that the geopolitical possibilities for the 21st century range from devastating hot war to renewed cooperation between Great Powers. Where we end up landing on this spectrum will be influenced by policy choices made by leaders of Great Power states, and it is possible to influence these policies.

There were also some other important things about which I learned a lot, but didn’t change my views as much:

- I’m still uncertain about how the average annual probability of Great Power conflict changed after World War II (if at all). Because the timing and size of wars are very noisy, the “Long Peace” that has prevailed since 1945 is consistent with two main models:

  - A model in which there is a consistent risk of war, but large wars are rare.

  - A model in which the probability of war fell significantly after World War II due to globalization, the spread of liberal norms, democratization, nuclear weapons, or some other factor.

  I’ve updated more towards the first model, but still put at least 30% probability on each.

- I'm not yet sure about what we should do about all this. I’ve found a few approaches and interventions that seem interesting, but it's really difficult to say how effective funding them would be in expectation. Still, the risk is high enough and the interventions promising enough that I think it’s worth spending more time working in this area.
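To see why a long stretch without Great Power war is weak evidence on its own, here is a minimal sketch of the first model. It assumes a constant, independent annual probability of a Great Power war; the probability values are hypothetical, chosen only for illustration, and the sketch ignores war size entirely.

```python
# Hypothetical "constant risk" model: every year carries the same independent
# probability p of a Great Power war. How often would we still observe a
# peace as long as the one since 1945?

def prob_no_war(annual_p: float, years: int) -> float:
    """Probability of zero wars across `years` independent years."""
    return (1.0 - annual_p) ** years

YEARS_OF_PEACE = 2022 - 1945  # 77 years, up to the time of writing

for p in (0.005, 0.01, 0.02):  # assumed annual war probabilities
    print(f"p = {p:.1%} per year -> P(no war in {YEARS_OF_PEACE} years) = "
          f"{prob_no_war(p, YEARS_OF_PEACE):.2f}")
```

Even at an assumed 1% annual risk, a 77-year peace would happen a bit under half the time (0.99^77 is about 0.46), which is why the Long Peace alone can't cleanly separate the two models.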

I did most of this work while I was at Founders Pledge. You can read the full report I published and the funding opportunities FP currently recommends here. These posts will be more useful for most Forum readers than the FP report, which is super long, written for a more general audience, and a bit out of date at this point (I finished it last August).

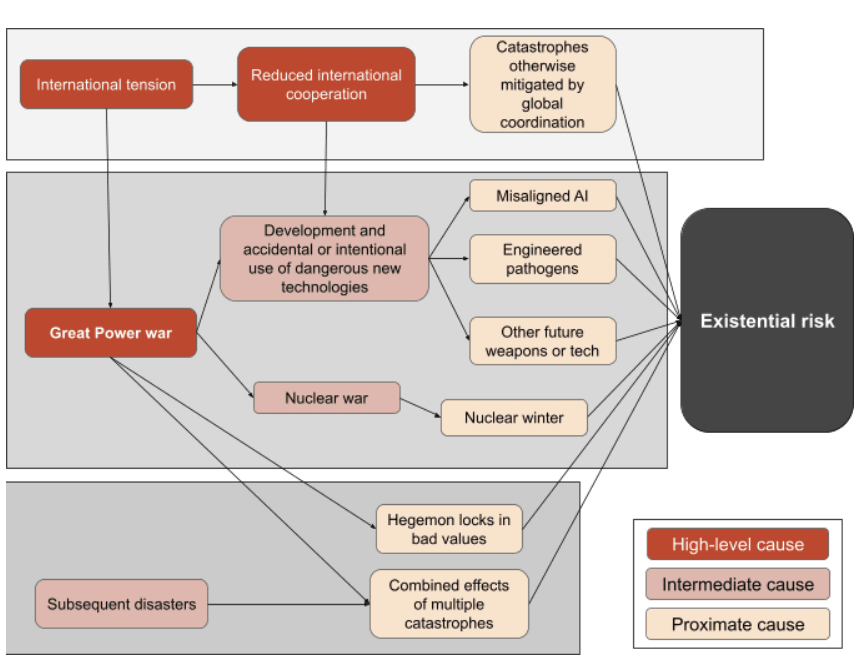

In the rest of this post, I consider various ways that Great Power interactions affect existential risk. I’ve built a model that traces specific causal pathways from Great Power tensions to existential catastrophes, sorted into three broad categories. I show how the model can be used to help make prioritization decisions across causes and interventions and discuss both its implications and shortcomings.

Epistemic status

I don’t have an academic background in international relations or conflict studies. I decided to look into these issues because I think they’re both important and understudied within longtermism and EA. To get up to speed I’ve worked through several book-length literature reviews and spoken to about 20 experts. You can see a full list of interviewees in the Acknowledgements section of the FP report. I am by no means an expert. I expect my views to evolve as I and the EA community learn more about this cause.

I’ve done my best to demonstrate reasoning transparency. I usually cite full-text quotes from sources where I rely on them to support important claims. I quantify my uncertainty and predictions wherever possible. Sometimes transparency has conflicted with my desire to be concise.

Acknowledgements

Please see the Acknowledgements section of the FP report for a full list of the various experts and reviewers who helped with this work. Thank you to Founders Pledge for supporting the production of that report. For additional help putting together these Forum posts, thanks to John Halstead, Max Daniel, and Matt Lerner.

I. What is Great Power conflict?

Great Powers, sometimes defined as economically powerful countries with global interests and the military strength to defend them, warrant longtermist attention for three reasons:

- They are more likely than average to fight each other.[2]

- When they do fight, they can unleash enormous amounts of destructive power.

- Great Power competition in areas like technological innovation and alliance-building can affect global risks even in the absence of direct conflict.

There is no universally accepted list of Great Powers. I focus on four countries: the US, China, India, and Russia. I think each is relevant here for slightly different reasons.

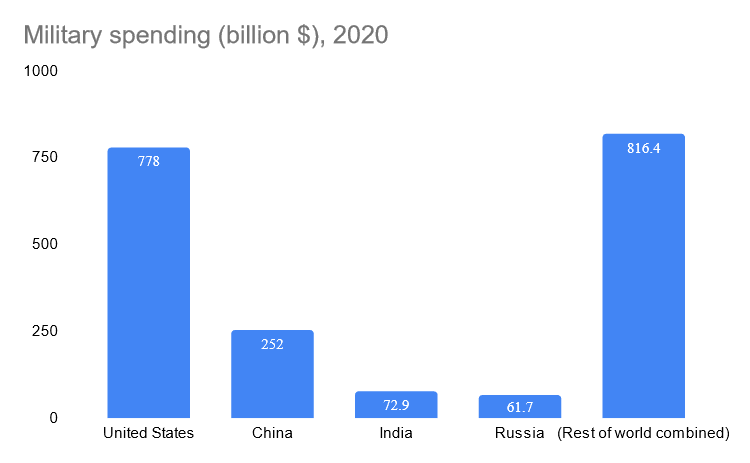

Obviously the United States should be included. By nearly any metric, the US is the world’s most powerful country. The US military's annual budget is $780 billion, nearly 39 percent of total global military spending.[3] America maintains over 700 military bases in over 80 countries to project force around the world.[4]

As the world’s next most powerful country, accounting for about 13 percent of global military spending, China should also clearly be included. While China’s spending and R&D efforts still seem to trail those of the US,[5] its modernizing economy and fast growth rate mean that the American lead is projected to narrow in the future. China is also interested in influencing events beyond its borders. Chinese foreign investments, both private and public, have risen dramatically. The Chinese military recently established its first overseas military base (in Djibouti).

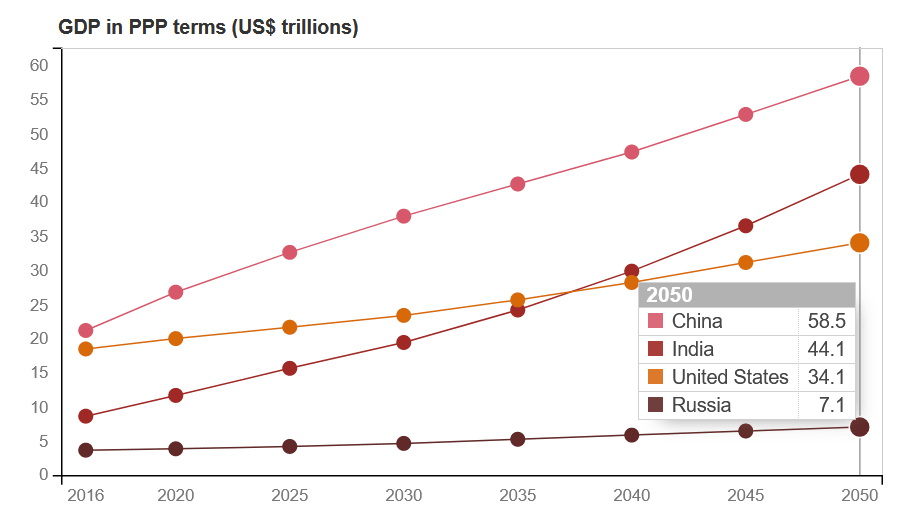

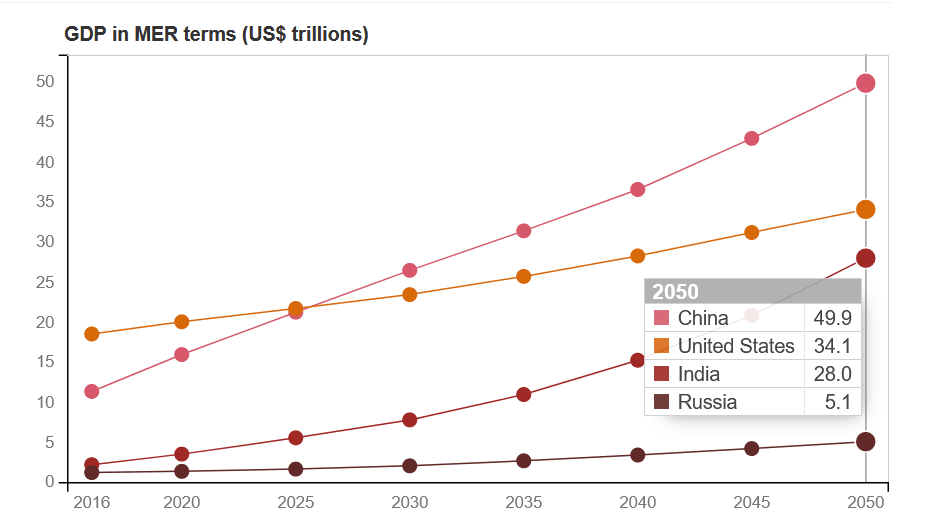

I also think that if current trends hold, India should claim Great Power status at some point this century. Since the 1980s, India has usually grown its economy by at least 4% per year.[6] Should this continue, India will be the world’s second biggest economy by 2100. A report from PwC suggests India’s economy could surpass the US’s in PPP terms by 2040, and be on track to catch up even at market exchange rates soon after 2050.

The PwC report is one scenario, not a projection of economic growth in expectation. The assumed growth rates could easily be wrong. China and India may grow slower than projected, or the US may grow more quickly.

Another possibility is that the economy of the world, or a subset of countries, could enter a new growth mode before 2050. Breakthrough technologies could accelerate economic growth, as they have done in the past. For example, if transformative AI technologies are developed before 2050 and widely deployed throughout the economy, economic growth could speed up dramatically. On the other hand, a catastrophic pandemic or political collapse could slow growth in one or more of these countries. Still, I think the prediction that China will overtake the US while India slowly closes the gap is plausible. I’d put something like 60% of my credence in a scenario where each of these three countries grows at an average rate between 1% and 8% annually between now and 2100.
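The width of that 1% to 8% band is easier to appreciate once growth compounds. Here is a minimal sketch, assuming constant annual growth from 2022 to 2100; the relative economy sizes and growth rates in the catch-up example are hypothetical, not taken from the PwC report.

```python
import math

def growth_multiple(annual_rate: float, years: int) -> float:
    """Factor by which an economy grows at a constant annual rate."""
    return (1.0 + annual_rate) ** years

def catch_up_years(size_ratio: float, fast_rate: float, slow_rate: float) -> float:
    """Years for an economy `size_ratio` times the size of a rival to draw
    level with it, growing at `fast_rate` while the rival grows at `slow_rate`."""
    if size_ratio >= 1.0:
        return 0.0
    if fast_rate <= slow_rate:
        return math.inf  # the gap never closes
    return math.log(1.0 / size_ratio) / math.log((1.0 + fast_rate) / (1.0 + slow_rate))

YEARS = 2100 - 2022
for rate in (0.01, 0.04, 0.08):
    print(f"{rate:.0%} per year for {YEARS} years -> x{growth_multiple(rate, YEARS):,.1f}")

# Hypothetical: an economy one quarter the size of its rival, growing at 5%
# per year while the rival grows at 2%.
print(f"catch-up after ~{catch_up_years(0.25, 0.05, 0.02):.0f} years")
```

Over 78 years, 1% growth roughly doubles an economy, 4% multiplies it about 21 times, and 8% about 400 times, so even this band spans very different worlds.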

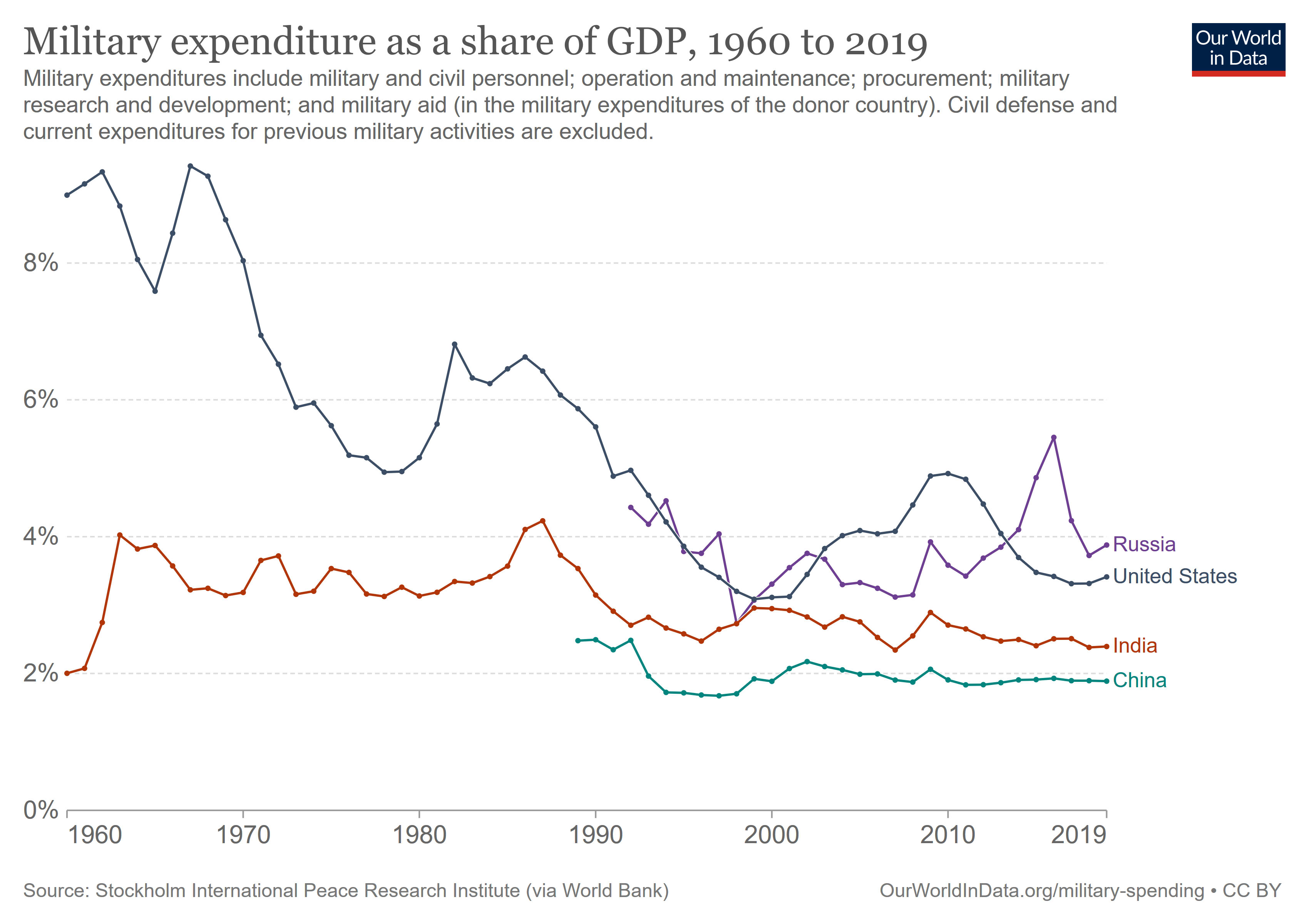

Finally, I think we should also consider Russia. This is definitely arguable. Russia’s economy is relatively small and predicted to fall farther behind the other Great Powers. While Russia is also investing in advanced military technologies,[7] it can bring to bear only a fraction of the resources of larger economies like China and the US. Russia’s military budget comprises just 3.1 percent of total global spending, less than a tenth the size of America’s.[8]

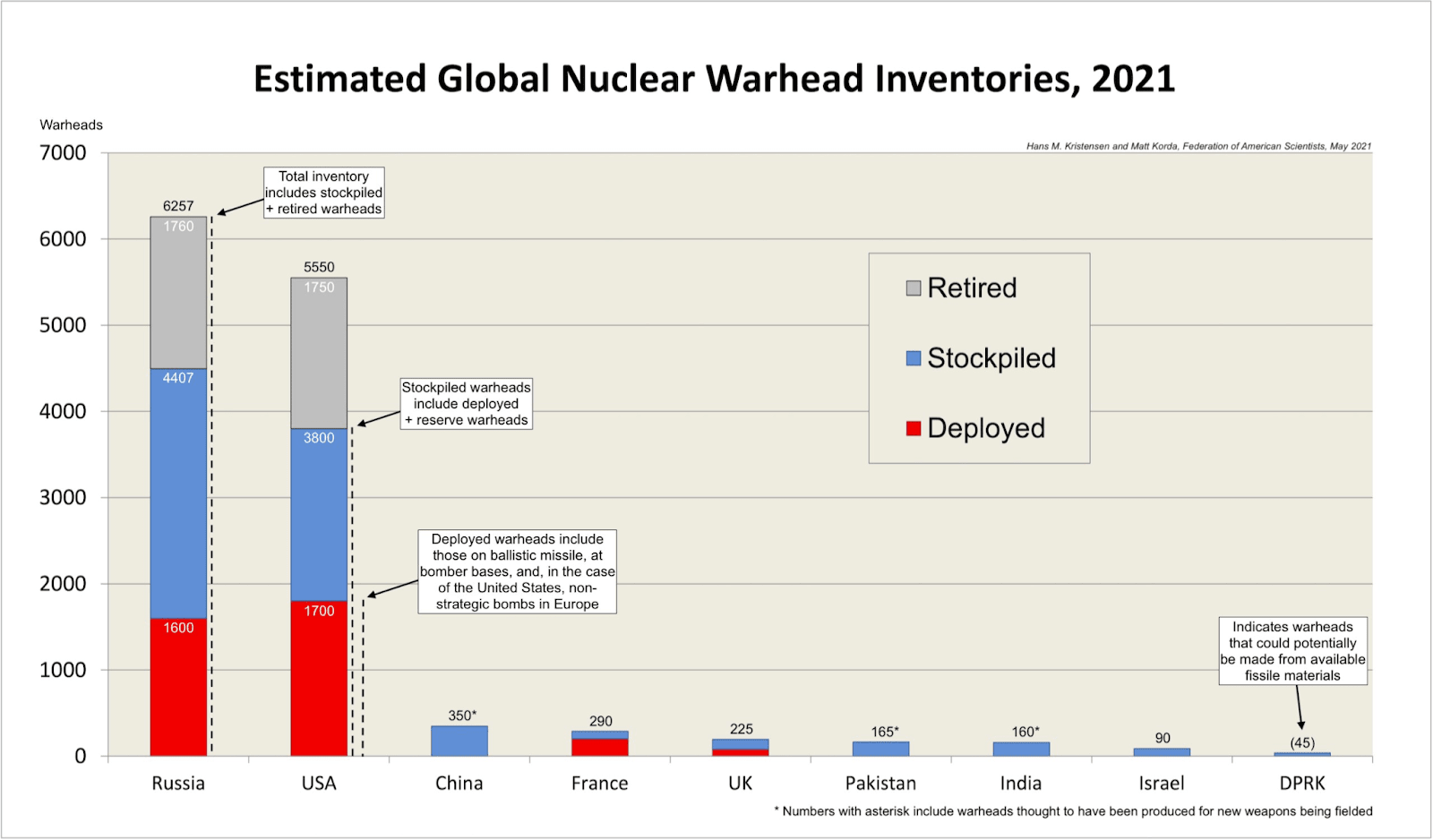

However, Russia still has a big nuclear arsenal. In addition to their destructive potential, nuclear weapons dramatically alter the geopolitical strategies a state can adopt.[9] Russia has also projected force beyond its borders on multiple occasions, both through conventional military action and through emerging technologies like cyber attacks. Overlooking Russia’s role in modern Great Power competition may lead us to miss a considerable source of risk.

The clearest form of competition between these four countries is military. Together, they account for about half of global military spending. It's true that, in recent decades, Great Powers have devoted a smaller proportion of their economies to military matters.[10] But because their economies have grown, a smaller share does not mean that spending has fallen in real terms. In fact, in real terms, American military spending is high by historical standards.[11] China and India have grown their military budgets almost every year since 1988.[12] Russia is the exception; its spending has not recovered since the fall of the USSR.

But these countries also compete outside the military sphere as they jockey for influence over trade terms, emerging technologies, international institutions, global talent flows, and alliances with smaller countries.

II. Great Power conflict as an existential risk

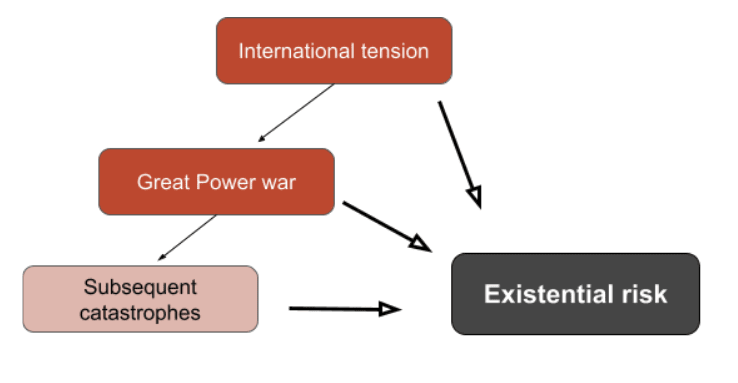

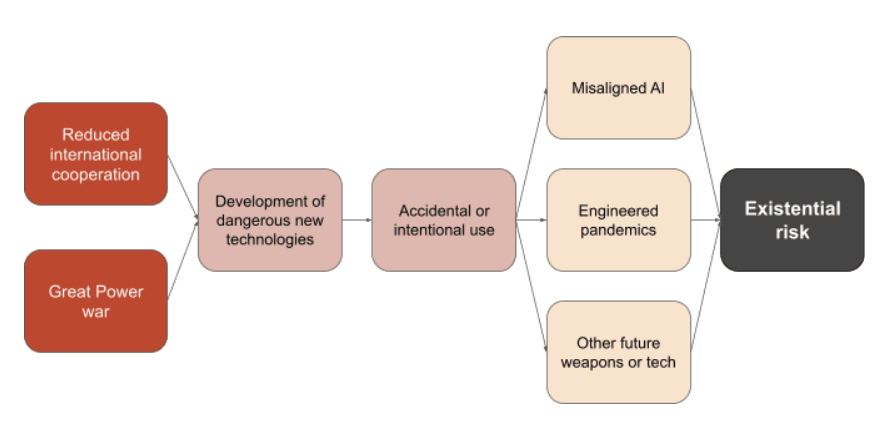

I think there are three kinds of pathways through which this competition could affect the long-term future:

- Great Power tension affects other risks. Rising international tensions could hamper cooperation against other risks or stoke competitive dynamics that increase the danger posed by other major risks.

- A Great Power war could directly cause a catastrophe. Everyone could be killed, or civilization could be damaged so severely that it never recovers.

- Great Power war could combine with another risk to cause a catastrophe. Human civilization could survive the war but be left in a weakened state, more vulnerable to subsequent disasters.

Each category can be divided into more specific pathways. By assigning probabilities to each step in a pathway, we can make estimates of their likelihoods. You can view a Guesstimate model I’ve made based on these pathways here. In this post, I’m going to discuss how each pathway could lead to an existential catastrophe and roughly how likely this seems.
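The arithmetic behind each pathway is a chain of conditional probabilities: the pathway's probability is the product of each step's probability given the steps before it. Guesstimate propagates uncertainty by Monte Carlo sampling, and the sketch below mimics that idea. The step names and ranges are hypothetical placeholders, and sampling uniformly within each range is my simplifying assumption, not necessarily how the linked model is configured.

```python
import random
import statistics

# Hypothetical pathway: each step is (name, low, high) for the probability of
# that step, conditional on all earlier steps having happened.
PATHWAY = [
    ("war occurs", 0.2, 0.5),
    ("weapon used, given war", 0.1, 0.4),
    ("catastrophe, given use", 0.01, 0.05),
]

def sample_pathway(steps, rng: random.Random) -> float:
    """Draw one value per step (uniformly, by assumption) and multiply them."""
    prob = 1.0
    for _name, low, high in steps:
        prob *= rng.uniform(low, high)
    return prob

def simulate(steps, n: int = 50_000, seed: int = 0) -> dict:
    """Monte Carlo summary of the pathway probability."""
    rng = random.Random(seed)
    draws = sorted(sample_pathway(steps, rng) for _ in range(n))
    return {
        "mean": statistics.fmean(draws),
        "p5": draws[int(0.05 * n)],
        "p95": draws[int(0.95 * n)],
    }

print(simulate(PATHWAY))
```

Because the steps multiply, uncertainty compounds: the product ranges from 0.0002 to 0.005 at the extremes, a 25-fold spread, even though no single step's range is wider than 5-fold.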

This is not the first longtermist analysis of Great Power conflict. Brian Tse and Dani Nedal have both given valuable EAG talks and Toby Ord highlighted Great Power conflict as a major risk factor in The Precipice. My framework is similar to Brian’s and Dani’s, but more detailed and quantitative.

I’ve decided to focus on specific mechanisms because it’s important to show clearly how Great Power conflict drives existential risk. Past wars have been terrible events, killing millions of people. But none has threatened to extinguish humanity or cause a civilizational collapse. In fact, post-war recoveries seem surprisingly quick. For example, the effect of the World Wars is not noticeable in a graph of total world population. This means that for a war to pose an existential threat, either the scale of the fighting, the strategies employed, or the types of weapons used would have to be dramatically different than what we've seen in all previous wars.

I also want to emphasize that I don’t think the probabilities I’ve assigned in the Guesstimate model are very robust. I expect some of them will change by an order of magnitude as more research is carried out in this area. The model is meant to capture the gist of my intuitions and facilitate discussion, not make confident predictions. That said, the level of risk varies so widely among different pathways that most estimates could change by an order of magnitude or more without affecting my key conclusions.

Now let's discuss each category in turn.

III. Category 1: International tension

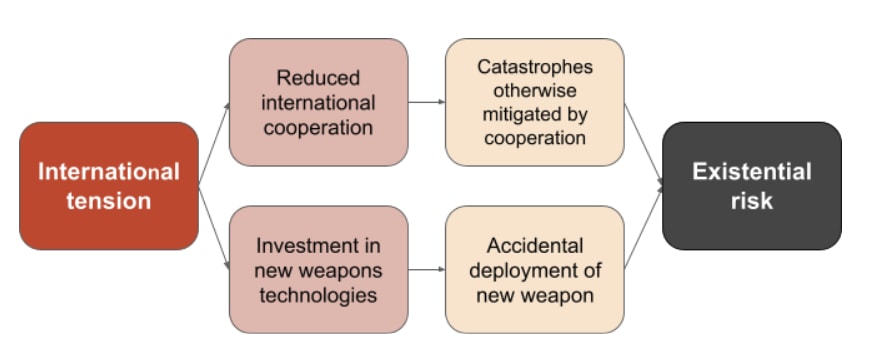

First, I want to show how tensions between Great Powers can affect existential risk even if they don’t lead to direct conflict.

The history of nuclear risk demonstrates this effect. Nuclear weapons are arguably the greatest threat to the long-term future that humanity has created so far. While they were invented during World War II, their proliferation and the invention of new delivery mechanisms such as submarines and intercontinental ballistic missiles occurred after the war, amid mutual fear of conflict between the US and Soviet Union.

By international tension I mean something like “a shared worry about an imminent conflict [which] itself may contribute to starting a war”.[13][14] There are at least two specific dynamics worth worrying about if international tension is high in the 21st century.

The first is that hostilities will probably make it harder for countries to coordinate to mitigate other risks. As a recent example, progress towards a strong international treaty governing lethal autonomous weapons was reportedly stymied by the US and Russia. We might expect the effect on risks like future pandemics, governance of new technologies, or climate change to be similarly negative. However, I’m very uncertain about the size of this effect. In some ways a more divided world may be even more resilient to certain risks if it helps avoid correlated policy failures. The Covid-19 pandemic could have been even worse, for example, if every country had adopted policies similar to those of a country like the US.

The second dynamic is that international tension could speed up the development of dangerous technologies like military AI, new weapons of mass destruction, and other weapons that are hard to foresee in 2022. Military competition is particularly intense, paranoid, and aggressive. The more likely decision-makers think a war is, the more likely they are to make large and risky investments in military technologies to try to gain an advantage over their rivals.[15] Once these weapons are developed, they could be deployed unintentionally, as nearly occurred with nuclear weapons on several occasions during the Cold War.

Again, though, I’m uncertain about the size of the effect. I also think there is some upside. It seems like states will also be more likely to develop defensive technologies if international tension is high. These defenses could help mitigate accidents as well as purposeful attacks. So if the risk these defenses avert outweighs the added risk from the offensive weapons developed alongside them, a more tense world could be a safer one.

For these reasons, I think Great Power relations affect existential risk even if they do not lead to direct conflict. More international tension will increase existential risk to the extent that (1) international tension makes it harder for countries to cooperate and (2) more cooperation reduces risk. The more likely Great Powers think a war is, the more they will increase their military budgets and invest in developing new weapon technologies. But international tension could also decrease risk. For example, it could increase policy diversity among countries, which could boost resilience. It could also speed up the development of defensive technologies. I’m very uncertain about the net effect of these dynamics. I think it would be really helpful to see more research in this area.

I have not included this pathway in my Guesstimate model. In contrast to the other risk pathways, I’m currently too uncertain about the dynamics at play here for a model to be useful.

IV. Category 2: Great Power war

But what if tensions do lead to war?

World War II killed about 75 million people, or 3% of the world’s population. An extinction-level war would have to be roughly 30 times more severe to wipe out humanity.[17] This is an enormous increase. But war deaths appear to follow a power law distribution;[18] this implies that the chance of such a war is low, yet non-negligible.[19] Huge nuclear arsenals, or extremely lethal future weapons, could provide the technical capacity to exterminate humanity.
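Here is why a power law keeps extreme wars in play: if the probability of a war killing at least x people falls off as x to the power of minus alpha, then the chance that a war at least as deadly as World War II is also 30 times deadlier is 30 to the power of minus alpha, no matter how large World War II itself was. A minimal sketch; the tail exponents below are illustrative assumptions, not fitted values from the sources cited here.

```python
# Under a power law tail, P(deaths >= x) is proportional to x**(-alpha).
# The chance that a war at least as deadly as WWII is also at least
# `multiple` times deadlier is then multiple**(-alpha), independent of x.

def tail_ratio(multiple: float, alpha: float) -> float:
    """P(deaths >= multiple * x) / P(deaths >= x) for a power law tail."""
    return multiple ** (-alpha)

EXTINCTION_MULTIPLE = 30  # from the text: roughly 30x WWII's 3% death toll

for alpha in (0.5, 1.0, 1.5):  # assumed tail exponents, for illustration only
    ratio = tail_ratio(EXTINCTION_MULTIPLE, alpha)
    print(f"alpha = {alpha}: given a war at least as deadly as WWII, "
          f"P(at least {EXTINCTION_MULTIPLE}x deadlier) = {ratio:.1%}")
```

Real death tolls are capped by world population, so a power law can only be an approximation near the extinction end of the distribution.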

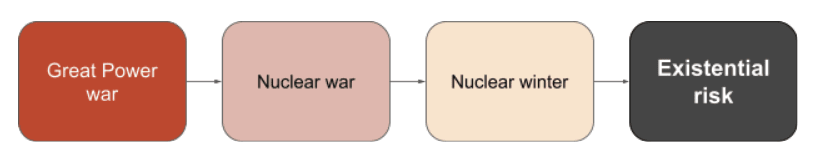

I discuss two pathways by which direct conflict between Great Powers could drive x-risk. First, I briefly discuss the most destructive weapons we already possess, nuclear weapons. Second, I discuss the possibility that future weapons of huge destructive potential will be invented.

Nuclear weapons are really dangerous

There are still more than 8,000 nuclear warheads on Earth, and China’s arsenal will probably grow. The degree of long-term risk posed by nuclear war is controversial and has been discussed in more depth elsewhere. There are several steps in the causal chain here.

First, nuclear weapons must be used in the war. This is not a given. Nuclear-armed powers have, since WWII, been very reluctant to use nuclear weapons. And if the war occurs later in this century, nuclear weapons may have been superseded or made useless by new technologies.

Second, if weapons are used, they must cause a nuclear winter. Luisa Rodriguez has estimated that the chance of a US-Russia nuclear exchange triggering a severe nuclear winter is about 11%. However, she also notes that while this estimate takes the foundational nuclear winter research at face value, that research is controversial. I agree with her that, given this, we should lower the probability of a large nuclear exchange triggering a severe nuclear winter, perhaps to something like 5%.

While the risk seems low, there's lots of uncertainty at each stage of the causal chain. I also don’t want to dismiss the idea that the world in which a widescale nuclear war has occurred is very different than a world that avoids such a war in many important but hard to predict ways. I’d be excited to see more research on this question.

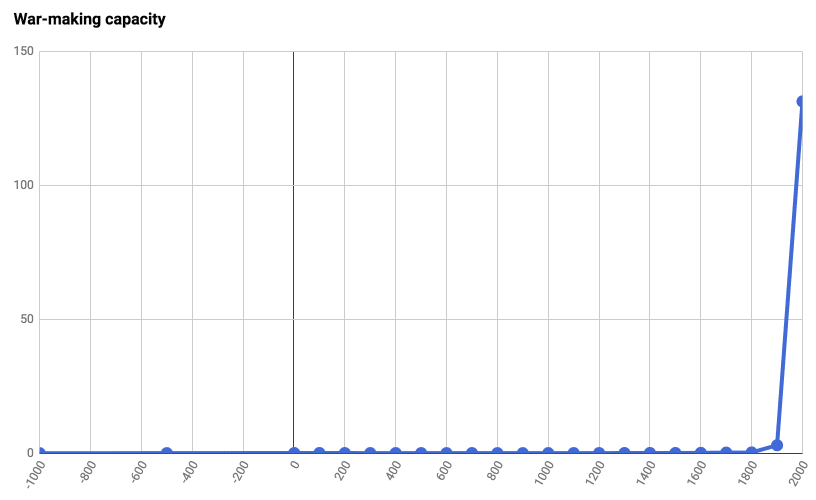

Will future weapons be even worse?

The invention of nuclear weapons caused a discontinuous jump in humanity’s potential to cause destruction. One way of visualizing the effect of technological change on war is to consider humanity’s total “war-making capacity”. This has been defined by historian Ian Morris as “the number of fighters [the world’s armies] can field, modified by the range and force of their weapons, the mass and speed with which they can deploy them, their defensive power, and their logistical capabilities”.[20] War-making capacity increases when more units of existing weapons are manufactured, but its growth is dominated by the invention of new, more destructive technologies.

Morris thinks that, at least in Western nations, war-making capacity increased by a factor of 50 between the years 1900 and 2000. I should note that his estimate is “no more than a guesstimate”, and not generated by a transparent quantitative analysis.[21] Still, even if this multiplier is off by a factor of 5, war-making capacity would have grown by an order of magnitude over the course of the 20th century. Luke Muehlhauser’s graph of Morris’ estimates shows what the discontinuity looks like:

What will the trend in war-making capacity be in the 21st century? I think there are two possibilities:

- The huge 20th century increase in war-making capacity could turn out to be an anomaly. In this case, we might expect war-making capacity to continue to increase, but at a per-century pace somewhere between its 20th century increase and increases in previous centuries.

- The 20th century jump in capacity could indicate a shift to a new growth mode for war-making capacity. If this is true, we should expect future increases to be as large as or larger than the 20th century increase. This would require the invention of weapons at least an order of magnitude more destructive than thermonuclear warheads.

Possibility (2) highlights another pathway from Great Power conflict to existential risk. High international tensions could accelerate the development of such weapons, and cause their (accidental or intentional) use.
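For a sense of scale, Morris's per-century multiplier can be converted into the constant annual growth rate that would compound to it. A minimal sketch using his factor of 50 and the "off by a factor of 5" case; treating growth as smooth compounding is my simplification, since the point of the graph is that the real change was discontinuous.

```python
# Convert a per-century multiplier into the constant annual growth rate that
# would compound to it over 100 years (a smoothing simplification).

def implied_annual_rate(multiplier: float, years: int = 100) -> float:
    """Constant annual rate that compounds to `multiplier` over `years`."""
    return multiplier ** (1.0 / years) - 1.0

SCENARIOS = {
    "Morris's estimate (x50)": 50.0,
    "off by a factor of 5 (x10)": 50.0 / 5,
}

for label, multiplier in SCENARIOS.items():
    print(f"{label}: about {implied_annual_rate(multiplier):.1%} per year")
```

Read the other way, repeating the 20th-century factor of 50 in the 21st century would leave war-making capacity in 2100 about 2,500 times its 1900 level.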

I find it difficult to imagine what such weapons would be. But perhaps I am like a researcher in the 1930s, looking back in horror at the brutality of mustard gas and machine guns, unable to forecast the mushroom clouds just 10 years away.

Great Powers are certainly working to develop new military technology. The US, China and Russia together invest about $100 billion in defense research and development each year.[22] That’s between 10 and 13 times the size of the annual budget for the National Science Foundation, which distributes most of the non-health-related public research funding in the US.

Some researchers have speculated about which future technologies could be transformative. James Wither, a security studies researcher, lists “robots, directed-energy weapons, genetically engineered clones, and nanotechnology” as examples of technologies that could “fundamentally alter the character of war”.[23] A 2020 Congressional Research Service report on emerging military technologies focused on artificial intelligence, lethal autonomous weapons, hypersonic weapons, directed energy weapons, biotechnology, and quantum technology.[24] Another list of weapons with high military potential, from a report from the Center for the Study of Weapons of Mass Destruction, comprised weapons utilizing high-powered microwaves or other forms of directed energy, hypersonic kinetic energy, ultra-high explosives and incendiary materials, antimatter, and geophysical manipulation.[25]

Nor are new inventions the only way to increase war-making capacity. Future technological changes could also make it easier to develop already-existing weapons of mass destruction[26] or make such weapons more destructive.[27]

I don’t currently have clear views about which of these technologies longtermists should focus on. I’m not aware of many existing analyses. A notable exception is Kyle Bogosian’s report on lethal autonomous weapons (aka AI weapons).[28] Dedicated reports like this one for other potential new superweapons could be highly valuable in directing future research and advocacy efforts.

The proportion of existential risk from Great Power conflict that is accounted for by AI development is a key question in this area. I don’t think military AI development can currently be called an arms race. Arms races are characterized by rapid, simultaneous increases in military spending and production by rival nations. But military AI programs are currently a small proportion of military budgets, and Great Power military budgets themselves are at relatively low, stable levels (<10% of GDP in every Great Power nation).[29]

This could, of course, change if the expected value of AI investments became larger, or if rising international tensions stimulated a significant increase in military spending. Judging from historical levels of spending, military budgets could increase significantly. At the height of WWII, about 75 percent of the German and Soviet economies were devoted to supporting the war effort.[30]

Intentional deployment of such destructive weapons would seem unlikely given that, by definition, we are discussing events that harm everybody alive, not just enemy combatants. But accidents happen. The effects of these weapons could prove different on the battlefield than in test environments. Alternatively, militaries in a high-stress combat environment, or that feel they are at risk of losing a war, could choose to deploy untested weapons despite some known degree of risk.[31]

In the Guesstimate model, I split this pathway into two chains of conditional probabilities. One chain estimates the probability of a technological disaster assuming a Great Power war doesn’t occur this century. The other calculates the same probability for the worlds in which a Great Power war does occur. Each chain has three steps: transformative military technologies are developed, they are deployed, and their deployment causes an existential catastrophe.

I’m pretty uncertain about each of these probabilities. My intuitions are that:

- Transformative military technologies are likely to be developed. Multiple powerful new technologies were invented in the 20th century. Great Powers continue to fund military R&D. I think the probability we see new weapons invented is >50%.

- The deployment of such technologies is unlikely, but not impossible, if a war does not occur. A reasonable estimate could be that the probability is between 5% and 30%.

- If a war occurs, deployment is likely but not certain. It depends on which technologies are invented, when they are invented, and when the war breaks out. My main estimate is between 30% and 70%.

- If the technologies are deployed, I think an existential catastrophe is very unlikely but not impossible. It again depends on the timing and type of technologies. My uncertainty range here is between 0.1% and 15% if no war occurs, and 0.5% to 25% if a war occurs. It’s slightly higher in the latter case because these technologies are more likely to be deployed at wide scale in the event of a war.
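Multiplying these ranges through shows how wide the resulting estimates are. A minimal sketch: it takes the ranges above at face value, caps the ">50%" invention probability at 100% (my assumption), multiplies the lower bounds, midpoints, and upper bounds, and then weights the two chains by the probability of a war using the law of total probability. The 35% war probability is borrowed from the "serious, direct conflict" estimate at the top of this post purely as a stand-in; the linked Guesstimate model may define its inputs differently, so these numbers are not meant to reproduce its output.

```python
from math import prod

# (low, high) ranges from the bullets above; "invented" (">50%") is capped at 1.0.
NO_WAR_CHAIN = {
    "invented": (0.5, 1.0),
    "deployed, no war": (0.05, 0.30),
    "catastrophe | deployed": (0.001, 0.15),
}
WAR_CHAIN = {
    "invented": (0.5, 1.0),
    "deployed, war": (0.30, 0.70),
    "catastrophe | deployed": (0.005, 0.25),
}

def chain_bounds(chain: dict) -> tuple:
    """(low, midpoint, high) products for a chain of conditional steps."""
    lows = [lo for lo, _ in chain.values()]
    mids = [(lo + hi) / 2 for lo, hi in chain.values()]
    highs = [hi for _, hi in chain.values()]
    return prod(lows), prod(mids), prod(highs)

def total_risk(p_war: float, risk_if_war: float, risk_if_no_war: float) -> float:
    """Law of total probability over the war and no-war branches."""
    return p_war * risk_if_war + (1 - p_war) * risk_if_no_war

P_WAR = 0.35  # stand-in only, see the lead-in

no_war, war = chain_bounds(NO_WAR_CHAIN), chain_bounds(WAR_CHAIN)
for i, label in enumerate(("low", "mid", "high")):
    print(f"{label}: no war {no_war[i]:.3%}, war {war[i]:.3%}, "
          f"combined {total_risk(P_WAR, war[i], no_war[i]):.3%}")
```

For independent steps with uniform uncertainty, the product of midpoints equals the mean of the product, but the low-to-high spread (several orders of magnitude here) is the more informative output.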

I’ve included slightly more detail on these probability estimates in the Guesstimate model. The numbers I use here are the most important in the model because the total risk is almost perfectly correlated with the amount of risk I estimate future weapons pose.

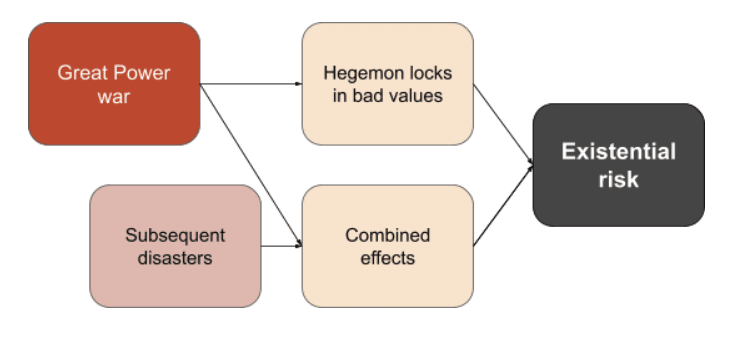

V. Category 3: Post-war events

Finally, if a Great Power war occurred it could increase total existential risk even if it didn’t directly lead to human extinction. Great Power wars have often caused radical reshufflings of the dominant international order, accelerating the rise and fall of global powers, alliances, and international institutions. Imagine how different the 19th century would have been if Napoleon had won in Russia or at Waterloo, or imagine the 20th century if the outcome of either World War had been different. I think it’s plausible that the post-war world could have negative long-term effects if it facilitated the long-term lock-in of subpar values, or if it was more vulnerable to subsequent global catastrophes.

What if the bad guys won the war?

One specific risk some people have worried about is that a total victory for a Great Power in a future war could enable a long-term value lock-in. Historically, the winners of major wars have been able to strongly influence the post-war world order. The permanent members of the UN Security Council, for example, are five of the victorious Allied countries. The effects of decisions made following a war are often very significant and enduring.

From a longtermist point of view, the worry is that advancements in surveillance and enforcement technologies could allow those effects to last in perpetuity. Improved surveillance technology could allow future leaders to quickly identify and put down internal dissent, while advances in genetic engineering could allow them to engineer a docile population or greatly extend the lifespan of their leaders, reducing the instability introduced by succession problems. Or future countries could be led by AIs or other digital beings who do not die.

In this scenario, even if humanity were to survive a war, the lock-in of one country’s governance system and values could preclude us from achieving our potential, constituting an existential catastrophe. Some people have specifically worried about a totalitarian lock-in.[32] The centralization of power in a totalitarian system arguably makes a complete lock-in more feasible. But a lock-in of any state’s values could be an existential disaster if one thinks that those values are far from optimal.

Overall I think this scenario is very unlikely, on the order of 1 in 10,000 even assuming a war occurs. The improbability comes from my assigning a 1% chance to a global totalitarian takeover following a war, multiplied by a 1% chance that such a lock-in is ironclad enough to qualify as an existential catastrophe. (It would require no variation and no hope for future variation in values, anywhere in the world.)
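Written out, the 1 in 10,000 figure is a product of two conditional probabilities rather than a sum ("takeover" and "ironclad" are just shorthand labels for the two steps above):

```latex
P(\text{lock-in} \mid \text{war})
  = P(\text{takeover} \mid \text{war}) \times P(\text{ironclad} \mid \text{takeover})
  = 0.01 \times 0.01 = 10^{-4}
```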

Despite its improbability, though, the existence of this pathway may raise difficult questions about the sign of interventions in this space. Imagine the situation of the world before WWII. If the rise of fascism was inevitable, then increasing UK and US military spending to increase the chance of defeating Hitler seems like a good thing in the long term, even if it also increased the chance WWII happened.

As a more general example, assume that leaders in one Great Power care relatively more about the long-term future. They may decide to unilaterally reduce their military budgets to escape a conflict spiral and lower international tensions. In expectation, this would reduce existential risk along the first three pathways. However, it might also raise the probability that, if a war occurs anyway, a bad actor wins and is able to lock itself in as a global hegemon.

In my current model, the baseline probability of a lock-in is so low that an intervention’s effect on it will be swamped by its effects on the likelihood of a technological disaster. But if the pathway to lock-in later comes to seem more probable, or if a given intervention has a negligible impact on other pathways, it seems like it will be very important to consider its effect on who wins a Great Power war, should one occur.

What if we get unlucky and a second disaster hits soon after?

Finally, we might also worry that humanity’s resilience would be generally diminished in the aftermath of a destructive global war. But I think it’s unlikely that a war would be large enough to leave humanity extremely vulnerable to follow-up disasters, yet not large enough to kill everyone. Luisa Rodriguez’s analysis suggests that even if 99.99% of humanity were wiped out, leaving just 800,000 survivors, the probability of human extinction rises above 1 in 5000 only when they are clustered in about 80 groups and each group has at least a 90% chance of dying out, or they are clustered in about 800 groups and each group has a 99% chance of dying out.[33]
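The intuition behind those thresholds: if surviving groups die out independently (a simplifying assumption for this sketch; real post-war groups could face correlated shocks), extinction requires every group to fail, so its probability is the per-group failure probability raised to the number of groups. The 85% case below is a hypothetical comparison point, not from the cited analysis.

```python
THRESHOLD = 1 / 5000

def extinction_probability(per_group_failure: float, n_groups: int) -> float:
    """P(every group dies out), assuming groups fail independently."""
    return per_group_failure ** n_groups

for p_fail, n in ((0.90, 80), (0.99, 800), (0.85, 80)):
    p_ext = extinction_probability(p_fail, n)
    side = "above" if p_ext > THRESHOLD else "below"
    print(f"{n} groups, {p_fail:.0%} failure each: "
          f"P(extinction) = {p_ext:.1e} ({side} 1 in 5000)")
```

Small changes in the per-group failure rate move the result by orders of magnitude, which is why survivors need to be both few in groups and very fragile for extinction to become likely.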

Fortunately, the background rate of such catastrophic events is, judging from the historical record, very low, given that humanity has not come close to extinction at any point in the modern age. I think civilization would also recover relatively quickly following most kinds of wars, so the period of vulnerability, if it existed, would be relatively short. The risk from this pathway, even conditional on a war, seems low: on the order of 1 in 10,000.
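The role of a short vulnerability window can be made concrete: if severe follow-up catastrophes arrive at a constant annual rate r, the chance of at least one during a T-year recovery period is 1 - (1 - r)^T. A minimal sketch; both r and T are hypothetical values chosen for illustration, not estimates from this post.

```python
def prob_at_least_one(annual_rate: float, years: int) -> float:
    """Chance of one or more events in `years` independent years."""
    return 1.0 - (1.0 - annual_rate) ** years

ANNUAL_RATE = 1e-4  # hypothetical background rate of a severe follow-up catastrophe
for window in (10, 50, 200):  # hypothetical recovery periods, in years
    print(f"{window:>3}-year window: {prob_at_least_one(ANNUAL_RATE, window):.2%}")
```

That result still has to be multiplied by the chance that such an event actually finishes off a weakened civilization, which is part of why a short window keeps this pathway small.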

VI. Summary of model

When I put these pathways together I get a model like this:

The Guesstimate model strongly suggests that the future technology pathway is the most concerning. Almost all (>99%) of the total risk flows through that pathway, with each of the other pathways estimated to be at most 10% as likely. Often they are bottlenecked by one very unlikely step (such as a lock-in) or by multiple somewhat unlikely steps (such as a nuclear war generating enough smoke to cause a nuclear winter that threatens civilization).
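To see why either kind of bottleneck keeps a pathway small, note that in a chained model like this one, a pathway’s probability is roughly the probability of war multiplied by each later step’s probability conditional on the steps before it. The sketch below uses made-up step probabilities for illustration only; they are not the inputs of the Guesstimate model. Only the ~35% chance of a serious Great Power conflict comes from this series, and the function name is hypothetical.

```python
from math import prod


def pathway_probability(p_war: float, conditional_steps: list[float]) -> float:
    """P(war) times the chance that each later step happens, given the steps before it."""
    return p_war * prod(conditional_steps)


P_WAR = 0.35  # ~35% chance of a serious Great Power conflict by 2100, from this series

# Hypothetical step probabilities, chosen only to illustrate the two kinds of bottleneck.
single_unlikely_step = pathway_probability(P_WAR, [0.01])  # one very unlikely step, e.g. lock-in
several_moderate_steps = pathway_probability(
    P_WAR, [0.3, 0.2, 0.1]  # e.g. nuclear use -> enough smoke -> winter that threatens civilization
)

print(f"single unlikely step:   {single_unlikely_step:.4f}")
print(f"several moderate steps: {several_moderate_steps:.4f}")
```

With these illustrative inputs, both pathways end up well below 1% even though no single step in the second chain is below 10%, which is the sense in which several "somewhat unlikely" steps can bottleneck a pathway as effectively as one very unlikely step.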

I find this model useful for thinking about how Great Power conflict could lead to an existential catastrophe. But several of the academics I talked to during this research kind of hated it. They pointed out—correctly—that it doesn’t capture all the dynamics of this complex question. There are probably missing feedback loops, like how the development of new technologies can feed back and increase or decrease tensions between countries. I also think the model fails to shed much light on the many ways that a world with high international tension is different than a world with low international tension. In future research, it will be really important to dig deeper into the dynamics behind the international cooperation pathway to better calibrate that risk estimate.

The other criticism levelled by experts is that many important actors in the IR sphere don’t appear in the diagram. Rogue states, for example, may drive x-risk by inventing or producing WMDs of their own. Great Power wars can also result from rapid escalation of regional tensions, such as in the Balkans before WWI. I scoped these actors out of this project, primarily for practical reasons, but also because I think the bulk of the risk flows from the direct interactions between Great Powers themselves.

VII. Key points

- Great Power war is an existential risk factor. Changes in the probability of a war affect multiple other risks.

- I think five kinds of risk are affected when the probability of a war changes:

  - Risks that affect how Great Power countries cooperate to solve other issues

  - Risks from the purposeful or unintentional deployment of advanced military technologies

  - Nuclear war

  - Risk of a hegemon gaining global domination and locking in its values after a war

  - Risk that a war leaves global civilization more vulnerable to other disasters that occur soon after it

- I think the total probability of all these risks between now and 2100 adds up to between 1% and 2%.

- Under my current assumptions, this is almost completely dominated by the technology risks.

- But the state of our knowledge in this area is poor. These assumptions are probably wrong in important ways. The purpose of this series of posts is to highlight critical assumptions and encourage discussion.

Next up...

One of the key inputs into the model is the likelihood of a Great Power war. In the next post, I estimate the chance of a major war before 2100. I will also discuss the probability that this war is larger than WWII, and even large enough to threaten the whole of humanity.

- ^

This lines up nicely with Toby Ord’s estimate in The Precipice, where he guesstimated that one-tenth of his total existential risk estimate of about 1 in 6 (roughly 17%) before 2120 is attributable to Great Power war.
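Spelled out, one-tenth of Ord’s 1-in-6 figure is

$$
\frac{1}{6} \times \frac{1}{10} = \frac{1}{60} \approx 1.7\%,
$$

which falls inside the 1% to 2% range estimated in this post.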

- ^

“An argument quite frequently made by “realists” is that large, powerful states (regardless of the nature of their political or economic systems) tend to be perpetrators of war rather than small states … A sizable amount of empirical evidence tends to support this thesis” (Cashman, Greg. What causes war?: an introduction to theories of international conflict. Rowman & Littlefield Publishers, 2013, 192)

- ^

These data are from a report that compares military spending at current prices and market exchange rates: Diego Lopes Da Silva, Nan Tian, and Alexandra Marksteiner, “Trends in World Military Expenditure, 2020,” SIPRI Fact Sheet (Stockholm International Peace Research Institute, April 2021), 2.

- ^

“According to David Vine, professor of political anthropology at the American University in Washington, DC, the US had around 750 bases in at least 80 countries as of July 2021. The actual number may be even higher as not all data is published by the Pentagon.” Haddad, Mohammed. “Infographic: History of US Interventions in the Past 70 Years.” Accessed November 1, 2021. https://www.aljazeera.com/news/2021/9/10/infographic-us-military-presence-around-the-world-interactive.

- ^

“Notwithstanding these efforts, however, the Chinese arms industry still appears to possess only limited indigenous capabilities for cutting-edge defense R&D ... Most importantly, no real internal competition exists and the industry lacks sufficiently capable R&D and capacity to develop and produce highly sophisticated conventional arms” Michael Raska, “Strategic Competition for Emerging Military Technologies: Comparative Paths and Patterns,” Prism 8, no. 3 (January 2020): 70.

- ^

World Bank data from https://data.worldbank.org/indicator/NY.GDP.MKTP.KD.ZG?locations=IN

- ^

“In October 2012, Russia established the Advanced Research Foundation (ARF)—a counterpart to the U.S. DARPA (Defense Advanced Research Projects Agency). The ARF focuses on R&D of high-risk, high-pay-off technologies in areas that include hypersonic vehicles, artificial intelligence, additive technologies, unmanned underwater vehicles, cognitive technologies, directed energy weapons, and others.” Raska, “Strategic Competition,” 73.

- ^

Da Silva, Tian, and Marksteiner, “Trends in World Military Expenditure, 2020,” 2.

- ^

“In Russian strategic thought, maintaining a variety of sophisticated nuclear weapons can invalidate any conventional advantages of the United States, NATO, and China. Ensuring that Russia remains a nuclear superpower is the basis of all Russian security policies.” Raska, 73.

- ^

Max Roser and Mohamed Nagdy, “Military Spending,” Our World in Data, August 3, 2013, https://ourworldindata.org/military-spending.

- ^

https://nation.time.com/2013/07/16/correcting-the-pentagons-distorted-budget-history/

- ^

Using SIPRI data from https://www.sipri.org/databases/milex

- ^

This definition is from Barry O’Neill, Honor, Symbols, and War (Ann Arbor, MI: University of Michigan Press, 1999), 63, https://doi.org/10.3998/mpub.14453.

- ^

There are various ways we could try to measure the degree of international tension. For example, the number of UN Security Council vetoes per year is plausibly weakly correlated with international tension. The ratio of global military expenditure to GDP has also been proposed as a measure of tension. For this model, I instead use my subjective probability estimate of the chance that war breaks out as my measure of international tension. I justify this probability estimate in post 2 of this series.

- ^

“Moreover, fear of military opponents intensifies willingness to take risks: If they might be doing X, we must do X to keep them from getting there first, or at least so that we understand and can defend against what they might do” Richard Danzig, “Technology Roulette: Managing Loss of Control as Many Militaries Pursue Technological Superiority” (Center for a New American Security, June 2018), 8.

- ^

“A type-1 strategic setting is characterized by a traditional security dilemma—that is, a situation in which security relations between potential rivals are unstable and defined by mutual suspicions of each other’s intentions but where both sides are status quo, defensive-oriented states. Despite having aligned interests, they nevertheless are engaged in a destabilizing action-reaction cycle whereby moves to enhance one’s own security for defensive reasons are seen by the other side as evincing potentially offensive intentions. A vicious cycle ensues, as the other side judges it has no choice but to employ countermeasures.” Adam P. Liff and G. John Ikenberry, “Racing toward Tragedy?: China’s Rise, Military Competition in the Asia Pacific, and the Security Dilemma,” International Security 39, no. 2 (October 2014): 63, https://doi.org/10.1162/ISEC_a_00176.

- ^

WWII was the most severe war in history, where severity means number of deaths. Other cataclysms, such as the Mongol invasions, may have been more deadly, but took place over a longer period of time and are difficult to classify as a single war. Other wars, such as the An Lushan Rebellion, may have killed a larger proportion of the world’s population. Since the death tolls for such events are very controversial, I’ve referred to WWII here.

- ^

Clauset, Aaron. "Trends and fluctuations in the severity of interstate wars." Science advances 4, no. 2 (2018), https://www.science.org/doi/10.1126/sciadv.aao3580.

- ^

Fitting a power law distribution that extends beyond the range of the available data can be controversial. I’ll say more about that in part 2 of this series.

- ^

Ian Morris, The Measure of Civilization: How Social Development Decides the Fate of Nations (Princeton: Princeton University Press, 2013), 175.

- ^

Morris, 180.

- ^

To break this number down: the US spends about $60B on Defense R&D annually and China spends about 127 billion yuan ($27 billion). I haven’t found data for Russia, but their military budget is 8% the size of America’s. If the R&D budgets also differ by the same proportion, then Russia’s would be roughly $5B.
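Adding these figures, with Russia’s R&D scaled by its overall budget share as assumed above:

$$
\$60\text{B} + \$27\text{B} + 0.08 \times \$60\text{B} \approx \$60\text{B} + \$27\text{B} + \$4.8\text{B} \approx \$92\text{B per year}
$$

for the three countries combined.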

- ^

“One future scenario is based on emerging technical innovations, which have the potential to fundamentally alter the character of war, such as robots, directed-energy weapons, genetically engineered clones, and nanotechnology” James K. Wither, “Warfare, Trends In,” in Encyclopedia of Violence, Peace, & Conflict (Elsevier, 2008), 2431, https://doi.org/10.1016/B978-012373985-8.00198-7.

- ^

Kelley M Sayler, “Emerging Military Technologies: Background and Issues for Congress,” CRS Report (Congressional Research Service, November 10, 2020).

- ^

John P. Caves, Jr. and W. Seth Carus, “The Future of Weapons of Mass Destruction: Their Nature and Role in 2030,” Occasional Paper (Washington, DC: Center for the Study of Weapons of Mass Destruction, June 2014), 29, https://doi.org/10.21236/ADA617232.

- ^

“Technologically, by 2030, there will be lower obstacles to the covert development of nuclear weapons and to the development of more sophisticated nuclear weapons. Chemical and biological weapons (CBW) are likely to be [...] more accessible to both state and nonstate actors due to lower barriers to the acquisition of current and currently emerging CBW technologies” Caves, Jr. and Carus, 4.

- ^

“Our overall conclusion is that it is impossible to predict the specific biological and chemical weapons capabilities that may be available by 2030, but clearly what will be possible will be much greater than today, including in terms of discrimination and the ability to defeat existing defensive countermeasures” Caves, Jr. and Carus, 26.

- ^

Though he’s highly uncertain, Bogosian thinks the benefits of such weapons likely outweigh the harms because they may reduce the human cost of conflict. The enormous caveat is that he’s agnostic about the sign of the effect on the safety of AI development. While I’ve spent less time thinking about this than he has, it seems to me that the effect on AI development, and the potential for misuse by AI systems, make the development of lethal autonomous weapons more likely to be negative.

- ^

“[E]ven crude estimates of defense spending show that military AI investments are nowhere near large enough to constitute an arms race. An independent estimate by Bloomberg Government of U.S. defense spending on AI identified $5 billion in AI-related research and development in fiscal year 2020, or roughly 0.7 percent of the Department of Defense’s over $700 billion budget” Paul Scharre, “Debunking the AI Arms Race Theory,” The Strategist 4, no. 3 (Summer 2021): 121–132, http://dx.doi.org/10.26153/tsw/13985.

- ^

See table 3 in: Mark Harrison, “Resource mobilization for World War II: the U.S.A., U.K., U.S.S.R., and Germany, 1938-1945,” Economic History Review 41, no. 2 (1988): 171-192, https://warwick.ac.uk/fac/soc/economics/staff/mharrison/public/ehr88postprint.pdf.

- ^

“In evaluating new technologies, militaries may be relatively accepting of the risk of accidents, which may lead them to tolerate the deployment of systems that have reliability concerns” Scharre.

- ^

“On balance, totalitarianism could have been a lot more stable than it was, but also bumped into some fundamental difficulties. However, it is quite conceivable that technological and political changes will defuse these difficulties, greatly extending the lifespan of totalitarian regimes. Technologically, the great danger is anything that helps solve the problem of succession. Politically, the great danger is movement in the direction of world government.” Bryan Caplan, “The Totalitarian Threat,” in Global Catastrophic Risks (Oxford University Press, 2008), 510, https://doi.org/10.1093/oso/9780198570509.003.0029.

- ^

See Case 3 of her analysis: Luisa Rodriguez, “What Is the Likelihood That Civilizational Collapse Would Directly Lead to Human Extinction (within Decades)?,” December 24, 2020, https://forum.effectivealtruism.org/posts/GsjmufaebreiaivF7/what-is-the-likelihood-that-civilizational-collapse-would.

Thanks, I thought this was very good. I liked the methodology - the categorisation, the use of Guesstimate, and the extensive input from experts. It's also very clearly written. I look forward to reading the rest of the series.

One thing that might be worth thinking about is how the list of Great Powers interacts with the sources of existential risk. E.g. if one thinks that most risk stems from future technologies, then current nuclear arsenals may not be as relevant as they might seem (cf. the comments on Russia). That would in turn entail that efforts to reduce existential risk from Great Power war should be directed towards countries that are most likely to develop such future technologies.

Relatedly, it seems conceivable to me that those countries could include some smaller ones - that aren't naturally seen as "Great Powers" - such as the UK, France, and Israel. And it may be that use of those technologies will be so devastating that small countries that have them could defeat countries with a much larger GDP. So potentially the analysis shouldn't be focused on Great Power conflict per se but more generally on conflict and war between actors with powerful weapons.

Or, even more so, that we should be aiming to build a broad framework that addresses weapons and military technology as a class of things needing regulation, of which nuclear weapons are seen as just the beginning.

Right, and the alternative here (US leaders) don't do that?

It seems to me that up to and including WW2, many wars were fought for economic/material reasons, e.g., gaining arable land and mineral deposits, but now, due to various changes, invading and occupying another country is almost certainly economically unfavorable (causing a net loss of resources) except in rare circumstances. Wars can still be fought for ideological ("spread democracy") and strategic ("control sea lanes, maintain buffer states") reasons (and probably others I'm not thinking of right now), but at least one big reason for war has mostly gone away at least for the foreseeable future?

Curious if you agree with this, and what you see as the major potential causes of war in the future.

Leaders of countries and elites (military leaders, political leaders, ...) decide whether to go to war or not. The claimed reasons may or may not have anything to do with the actual reasons: that is just a tool to get to some desired outcome.

Most wars suck for both parties, but groups of people can easily all end up believing the same thing because of excessive deference, resulting in poor decision-making and, e.g., a war.

Imagine two groups of teenagers quarrelling on the high school playground: if they do decide to fight, was it for strategic / ideological reasons? Usually it's the result of escalating rhetoric or one of the parties being unusually aggressive. This is I think a good model for war as well.

US and Chinese elites today look like a bunch of teenagers quarrelling. It has little to do with reality (ideology/strategy), and much more to do with the social dynamics of the situation, I think.

This misrepresents Luisa's claims in a way that I think is important, and also takes them out of context in a way I think is important.

(Incidentally, I was worried precisely this sort of misrepresentation and taking out of context would occur when I first read her post.)

(Also, as expressed in my other comment, overall I really appreciate this post! This comment is just a criticism of one specific part of the post, and I don't think it should radically change your high level conclusions. Though I do personally think it's worth editing to fix this, since I think that passage could leave people with faulty views on an important question.)

Do you mean non-extinction existential risks? I can think of non-x-risk scenarios that involve humans going extinct, but those are extremely noncentral.

Whoops! Yeah, that was just a typo. Now fixed.

Thanks, this is a great comment! I'm going to edit the main post to reflect some of this.

Does (1) a second catastrophe and (2) failure for civilization to recover exhaust the possibilities for "indirect paths"? I've thought about this less than the other points in my main post, but I think I disagree that these are as worrying as the direct path. I think it's possible they're of the same order of magnitude as the direct pathways from war to existential risk via extinction, but less likely in expectation.

First, catastrophes in general are just very unlikely, and I think the 'period of vulnerability' following a war would probably be surprisingly short (on the order of 100 years rather than thousands). Post-WWII recovery in Europe took place over the course of a few years. The US funded some of this recovery via the Marshall Plan, but the investment wasn't that big (probably <5% of national income).[1] There's also a paper that found that, by 2002 (27 years after the Vietnam War ended), there was no difference in economic development between areas that were heavily bombed by the US and areas that weren't.[2]

A war 10-30 times more severe than WWII would obviously take longer to recover from, but I still think we're talking about decades or centuries rather than millennia for civilization to stabilize somewhere (albeit at a much diminished population).

Second, I find it hard to think of specific reasons why we would expect long-term civilizational stagnation. I think a catastrophic war could wipe out most of the world population, but still leave several million people alive. New Zealand alone has 5M people, for example. Humanity has previously survived much smaller population bottlenecks. Conditional on there being survivors, it also seems likely to me that they survive in at least several different places (various islands and isolated parts of the world, for example). That gives us multiple chances for some population to get it together and restart economic growth, population growth, and scientific advancement.

I'd be interested to hear more about why you think the "less direct paths should be seen as more worrying than the fairly direct paths".

"The Marshall Plan's accounting reflects that aid accounted for about 3% of the combined national income of the recipient countries between 1948 and 1951" (from Wikipedia; I haven't chased down the original source, so caveat emptor)

"U.S. bombing does not have a robust negative impact on poverty rates, consumption levels, infrastructure, literacy or population density through 2002. This finding suggests that local recovery from war damage can be rapid under certain conditions, although further work is needed to establish the generality of the finding in other settings." (Miguel & Roland, abstract, https://eml.berkeley.edu/~groland/pubs/vietnam-bombs_19oct05.pdf)

[written quickly, sorry]

One indication of my views is this comment I made on Luisa's post (emphasis added):

I think "[the period before recovery might be only] on the order of 100 years" offers little protection if we think we're living at an especially "hingey" time; a lot could happen in this specific coming 100 years, and the state society is in when those key events happen could be a really big deal.

Also, I agree that society simply remaining small or technologically stagnant or whatever indefinitely seems very unlikely. But I'm more worried about either:

Background thought: I think the potential value of the future is probably ridiculously huge, and there are probably many plausible futures where humanity survives for millions of years and advances technologically past the current frontiers and nothing seems obviously horrific, but we still fall massively short of how much good we could've achieved. E.g., we choose to stay on earth or in the solar system forever, we spread to other solar systems but still through far less of the universe than we could've, we never switch to more efficient digital minds, we never switch to something close to the best kind of digital minds having the best kind of lives/experience/societies, we cause unrecognised/not-cared-about large-scale suffering of nonhuman animals or some types of digital beings, ...

So I think we might need to chart a careful course through the future, not just avoiding the super obvious pitfalls. And for various fuzzy reasons, I tentatively think we're notably less likely to chart the right course following a huge but not-immediately-existential catastrophe than if we avoid such catastrophes, though I'm not very confident about that.

Thanks, this is really helpful. I think a hidden assumption in my head was that the hingey time is put on hold while civilization recovers, but now I see that that's pretty questionable.

I also share your feeling that, for fuzzy reasons, a world with 'lesser catastrophes' is significantly worse in the longterm than a world without them. I'm still trying to bring those reasons into focus, though, and think this could be a really interesting direction for future research.

Regarding the "long term stagnation": to me this suggests you are thinking of the current epoch of history as showcasing the inevitable. Yet stagnation in this sense was the norm for 200,000+ years of modern Homo sapiens existing on Earth. Hence, there is a real question whether this period represents a continued given, a blip, the last hurrah before the end, or perhaps the start of a much more complex trajectory of history, perhaps involving multiple periods of rapid technological flourishing followed by periods of stagnation or even decline, in various patterns and ways, not to mention geographically.

One thing to note about history or culture is that there are no inherent drivers to "greater complexity" - indeed, from an anthropological point of view one can question just what that means. It is, in this regard, much like biological evolution outside the human realm. In both biology and anthropology, there is and should be a strong skepticism toward any claim of a teleology or a linear narrative.

That said, I would still maintain that there is a distinction between long-term stagnation and extinction, even if the former is definitely not something one should rule out: in the latter case, there is absolutely no recovery. While it's possible another intelligent toolmaking species could evolve, looking at the future of geological history, which is potentially much more regular, the gradual heating of the Sun suggests that we could be Earth's only shot. It's like the difference between life imprisonment and the death penalty. The former is not fun at all, but there's a reason there's so much resistance to the latter, and it's that key point of irreversibility.

Thank you Stephen – really interesting to read. Keep up the good work.

Some quick thoughts.

1.

There was less discussion than I expected of mutual assured destruction type dynamics.

My uninformed intuition suggests that the largest source of risk of an actually existential catastrophe comes from scenarios where some actor has an incentive to design weapons that would be globally destructive and also to persuade the other side that they would use those weapons if attacked, in order to have a mutually assured destruction deterrent. (The easiest way to persuade your enemy that you would destroy the world if attacked is to set up systems to ensure that you would actually destroy the world if attacked.)

I think in most scenarios actors' incentives are to avoid designing or using weapons that would destroy the world, but in this scenario actors' incentives are towards designing and using such weapons, which feels significant.

2.

Changing vulnerabilities. I also think it is possible that global vulnerability could significantly go up or down between now and when a conflict happens. Examples might be:

Thanks for this post! Seems like valuable work.

A couple clarity questions/comments:

These things might be clarified later (haven't finished reading yet) but they seem worth clarifying in the summary anyway.

(I ask in part because I intend to add your estimates to my database of existential risk estimates (or similar).)

It definitely is possible. And perhaps more than 1 percent, but I don't think I'd put a credence at more than 2-3 percent.

Also, I think a lot of people confuse the Chinese elites not supporting democracy with them not wanting to create good lives for the average person in their country, and I think this is a dangerous error.

In both the US and China the average member of the equivalent of congress has a soulless power seizing machine in their brain, but they also have normal human drives and hopes.

I suppose I just don't think that, with infinite power, Xi would create a world that is less pleasant to live in than the one Nancy Pelosi would create, and he'd probably make a much pleasanter one than Paul Ryan would.

My real point in saying this is that while I'd modestly prefer a well-aligned American democratic AI singleton to a well-aligned communist Chinese one, both are pretty good outcomes in my view relative to an unaligned singleton, and we basically should do nothing that increases the odds of an aligned American singleton relative to a Chinese one at the cost of increasing the odds of an unaligned singleton relative to an aligned one.

I'm just reading the relevant section in Will's book, and noticed the footnote 'there is some evidence suggesting that future power transitions may pose a lower risk of war, not an elevated one, and some researchers believe that it is equality of capabilities, not the transition process that leads to equality, that raises the risk of war.'

If this is true, and if we believe China is overtaking the US, this implies that accelerating the transition, e.g. by encouraging policies specifically to boost China's growth rate, would reduce the risk of conflict (since the period of equality would be shorter).

I would strongly recommend taking a look at Peter Zeihan's work: he forecast, among other things, the invasion of Ukraine back in 2015 based on an array of geographic and demographic factors, and expects many similar calamities in the coming years. (TL;DR: Russia's top-heavy, ageing demography and insecure geostrategic perimeter are prompting it to expand to reach geographical choke points like the Bessarabian Gap before it runs out of young men to draft.)

There's an interview on the topic here that you might take a look at. Of particular interest and concern are his predictions of widespread famine due to disruptions of fertiliser inputs needed for agriculture in many parts of the world (due to natural gas and energy prices spiking, disruption of potash exports from Russia and Belarus, and phosphate exports from China recently being banned.) That's effectively an existential risk to civilisation as we would recognise it with or without nuclear weapons being involved.

Even if the person/ group who controls the drone security forces can never be forcibly pushed out of power from below, that doesn't mean that there won't be value drift over long periods of time.

I don't know if a system that stops all relevant value drift amongst its elites forever is actually more likely than 1 percent.

Also, my possibly irrelevant flame thrower comment is that China today really looks pretty good in terms of values and ways of life on a scale that includes extinction and S-risks. I don't think the current Chinese system (as of the end of 2021) being locked in everywhere, forever, would qualify in any sense as an existential risk, or as destroying most value (though that would be worse from the pov of my values than the outcomes I actually want).

To me this also suggests the need to develop a more robust international order that can effectively regulate and limit the development of potentially destructive technologies for military application. For example, consider how much pressure has been put on Iran and North Korea to prevent them from gaining nuclear weapons. Should we treat countries pursuing AI for clearly military aims in the same way?

This isn't really a reply to the article, but where are you making the little causal diagrams with the arrows? I suddenly am having a desire to use little tools like that to think about my own problems.

Those are just screenshots of diagrams made in Google Docs using the "Insert Drawing" feature!

Not what Stephen used, but I recently tried https://excalidraw.com/ for causal diagrams after seeing it in a post by Nuño and was happy with it.