This post will be direct because I think directness on important topics is valuable. I sincerely hope my directness is not read as mockery of or disdain towards any group, such as people who care about AI risk or religious people, as that is not at all my intent. Rather, my goal is to create space for discussion about the overlap between religion and EA.

–

A man walks up to you and says, “God is coming to earth. I don’t know when exactly, maybe in 100 or 200 years, maybe more, but maybe in 20. We need to be ready, because if we are not ready, then when God comes we will all die, or worse, we could have hell on earth. However, if we have prepared adequately, then we will experience heaven on earth. Our descendants might even spread out over the galaxy, and our civilization could last until the end of time.”

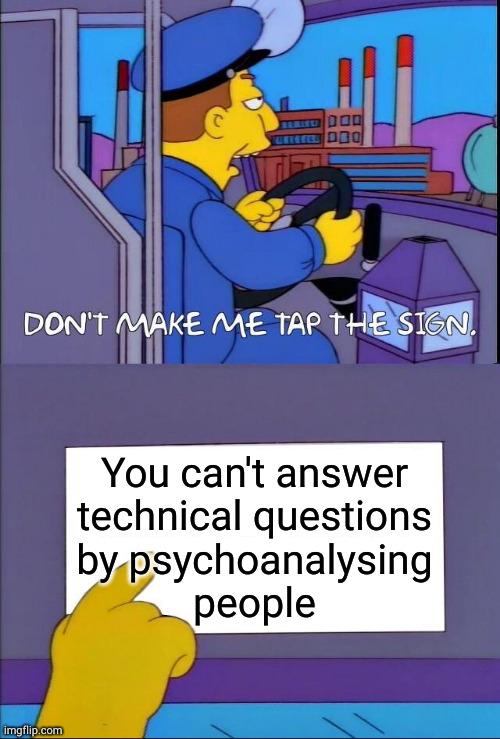

My claim is that the form of this argument is the same as the form of most arguments for large investments in AI alignment research. I would appreciate hearing if I am wrong about this. I realize that, presented as above, it might seem glib, but I do think it accurately captures the form of the main claims.

Personally, I put very close to zero weight on arguments of this form. This is mostly due to simple base-rate reasoning: humanity has seen many claims of this form, and so far all of them have been wrong. I definitely would not update much based on surveys of experts or elites, whether within the community making the claim or within adjacent communities. To me that seems pretty circular, and in the case of past claims of this form, I think deferring to such people would have led you astray. That said, I understand that other people either pick different reference classes or have inside-view arguments they find compelling. My goal here is not to argue about the content of these arguments; it is to highlight these similarities in form, which I believe have not been much discussed here.

I’ve always found it interesting how EA recapitulates religious tendencies. Many of us literally pledge our devotion, we tithe, many of us eat special diets, we attend mass gatherings of believers to discuss our community’s ethical concerns, we have clear elites who produce key texts that we discuss in small groups, and so on. Seen this way, maybe it is not so surprising that a segment of us wants to prepare for a messiah. It is fairly common for religious communities to produce ideas of this form.

–

I would like to thank Nathan Young for feedback on this. He is responsible for the parts of the post that you liked and not responsible for the parts that you did not like.

"Humanity has seen many claims of this form." What exactly is your reference class here? Are you referring just to religious claims of impending apocalypse (plus EA claims about AI technology)? Or are you referring more broadly to any claim of transformative near-term change?

I agree with you that claims of supernatural apocalypse have a bad track record, but such a narrow reference class doesn't (IMO) include the fairly technically grounded concerns about AI. Meanwhile, I think a wider reference class that includes other seemingly unbelievable claims of impending transformation would include a couple of important hits. Consider:

It's 1942. A physicist tells you, "Listen, this is a really technical subject that most people don't know about, but atomic weapons are really coming. I don't know when -- could be 10 years or 100 -- but if we don't prepare now, humanity might go extinct."

It's January 2020 (or the beginning of any pandemic in history). A random doctor tells you "Hey, I don't know if this new disease will have 1% mortality or 10% or 0.1%. But if we don't lock down this entire province today, it could spread to the entire world and cause millions of deaths."

It's 1519. One of your empire's scouts tells you that a group of white-skinned people have arrived on the eastern coast in giant boats, and a few priests think maybe it's the return of Quetzalcoatl or something. You decide that this is obviously crazy -- religion-based forecasting has a terrible track record; I mean, these priests have LITERALLY been telling you for years that maybe the sun won't come up tomorrow, and they've been wrong every single time. But sure enough, the European invaders soon slaughter their way to your capital and destroy your civilization.

Although the Aztec case is particularly dramatic, many non-European cultures had the experience of suddenly being invaded by a technologically superior foe powered by an exponentially self-improving economic engine. That sounds at least as similar to AI worries as your claim that Christianity and AI worry belong to the same class. There might even be more stories of sudden European invasion than predictions of religious apocalypse, which would tilt your base-rate prediction decisively towards believing that transformational changes do sometimes happen.

I am commenting here and upvoting this specifically because you wrote "I appreciate the pushback." I really like seeing people disagree while being friendly/civil, and I want to encourage us to do even more of that. I like how you are exploring and elaborating ideas while being polite and respectful.