Best books I've read in 2024

(I want to share, but this doesn't seem relevant enough to EA to justify making a standard forum post. So I'll do it as a quick take instead.)

People who know me know that I read a lot, and this is the time of year for retrospectives.[1] Of all the books I read in 2024, I’m sharing the ones that I think an EA-type person would be most interested in, would benefit the most from, etc.

Animal-Focused

I read several animal-focused books in 2024. This is the direct result of being part of an online Animal Advocacy Book Club. I created the book club about a year ago, and it has been helpful in nudging me to read books that I otherwise probably wouldn't have gotten around to.[2]

- Reading Compassion, by the Pound: The Economics of Farm Animal Welfare was a bit of a slog, but I loved that there were actual data and frameworks and measurements, rather than handwavy references to suffering. The authors provided formulas, they provided estimates and back-of-the-envelope calculations, and they did an excellent job looking at farm animal welfare like economists and considering tradeoffs, with far less bias than anything else I've ever read.

Really enjoyed reading this, thanks for sharing! Any tips for finding a good book club? I've not used that website before, but I'd expect it would be a good commitment mechanism for me as well.

I try not to do too much self-promotion, but I genuinely think that the book clubs I run are good options, and I'd be happy to have you join us. Libraries sometimes have in-person book clubs, so if you want something away from the internet you could ask your local librarian about book clubs. And sometimes some simple Googling is helpful too: various cities have some variation of 'book club in a bar,' 'sci-fi book club,' 'professional development book club,' etc. But I think it is fairly uncommon to have a book club that is online and relatively accessible, so I think that mine are a bit special (although I am certainly not unbiased).

I've browsed through bookclubs.com a bit, but my memory is that the majority of the book clubs on there seem to be some combination of fiction, local to a specific place, focused on topics that aren't interesting to me, or defunct.

Another option that I haven't really tried: instead of having a regular club, you could just occasionally make a post "I want to read [BOOK] and talk about it with people, so I'll make a Google Calendar event for [DATE]. If you want to read it and talk about it, please join." At least one person is doing that in the EA Anywhere Slack workspace in the #book-club channel. The trick is to find a book that is popular enough, and then to accept the fact that 50% to 80% of the people who RSVP simply won't show up. But it could be a more iterative/incremental approach to get started.

I'm considering doing a sort of pub quiz (quiz night, trivia night, bar trivia) for EA in the future. If you have some random trivia knowledge that you think would be good for such an occasion, please send it to me.

I'm not so much looking for things along the lines of "prove how brilliantly intelligent you are," but more for fun/goofy/silly trivia with a bit of EA vibes mixed in. Hard/obscure questions are okay,[1] easy questions are okay, serious questions are okay,[2] goofy questions are okay,[3] and tricky questions without any clear answer ...

I'm going to repeat something that I did about a year ago:

A very small, informal announcement: if you want someone to review your resume and give you some feedback or advice, send me your resume and I'll help. If you would like to do a mock interview, send me a message and we can schedule a video call to practice. If we have never met before, that is okay. I'm happy to help you, even if we are total strangers.

To be clear: this is not a paid service, I'm not trying to drum up business for some kind of a side-hustle, and I'm not going to ask you to subscribe to a newsletter. I am just a person who is offering some free informal help. I enjoy helping people bounce ideas around, and people whom I've previously helped in this way seemed to have benefited from it and appreciated it.

A few related thoughts:

- There are a lot of people who are looking for a job as part of a path to greater impact, but many people feel somewhat awkward or ashamed to ask for help. If I am struggling in a job hunt, I don't want to ask friends or professional contacts for help due to shame; I worry that they will think less of me for not being competent. So asking a stranger who you've never met and who isn't c

I've found explanation freeze to be a useful concept, but I haven't found a definition or explanation of it on the EA Forum. So I thought I'd share a little description of explanation freeze here so that anyone searching the forum can find it, and so that I can easily link to it.

The short version is:

Explanation freeze is the tendency to stick with the first explanation we come up with.

The slightly longer explanation is:

Situations that are impossible to conclusively explain can afflict us with explanation freeze, a condition in which we come up with just one possible explanation for an event that may have resulted from any of several different causes. Since we're only considering one explanation at a time, this condition leads us to make the available evidence fit that explanation, and then overestimate how likely that explanation is. We can consider it as a type of cognitive bias or flaw, because it hinders us in our attempts to form an accurate view of reality.

A very tiny, very informal announcement: if you want someone to review your resume and give you some feedback or advice, send me your resume and I'll help. If we have never met before, that is okay. I'm happy to help you, even if we are total strangers.

For the past few months I've been active with a community of Human Resources professionals and I've found it quite nice to help people improve their resumes. I think there are a lot of people in EA who are looking for a job as part of a path to greater impact, but many people feel somewhat awkward or ashamed to ask for help. There is also a lot of 'low-hanging fruit' for making a resume look better, from simple formatting changes that make a resume easier to understand to wordsmithing the phrasings.

To be clear: this is not a paid service, I'm not trying to drum up business for some kind of a side-hustle, and I'm not going to ask you to subscribe to a newsletter. I am just a person who is offering some free low-key help.

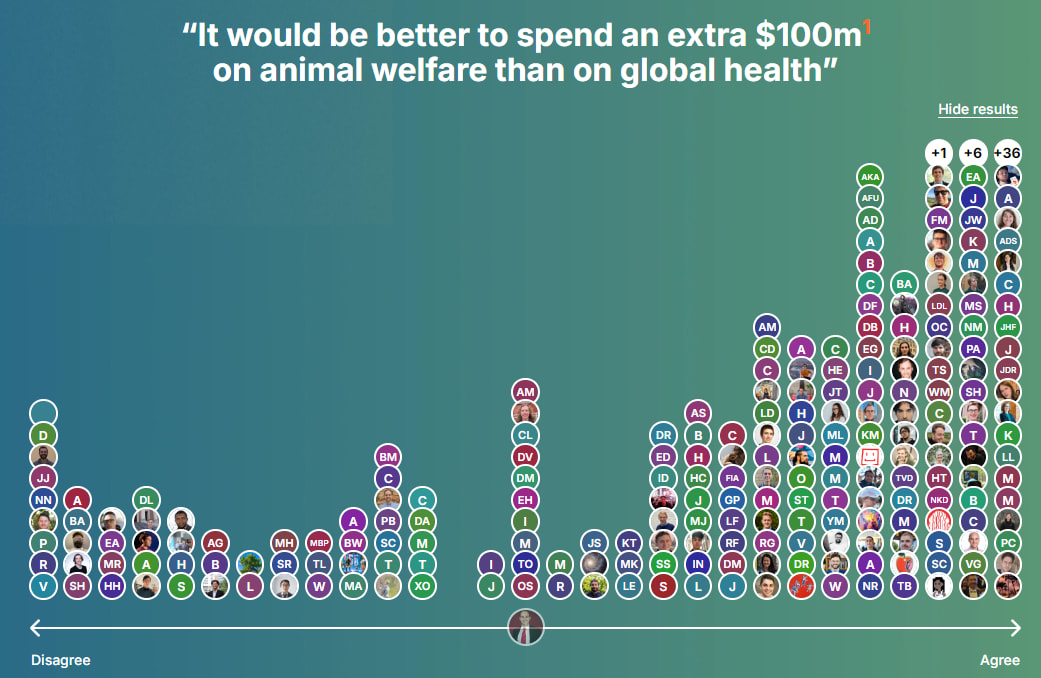

The 80,000 Hours team just published that "We now rank factory farming among the top problems in the world." I wonder if this is a coincidence or if this was planned to coincide with the EA Forum's debate week? Combined with the current debate week's votes on where an extra $100 should be spent, these seem like nice data points to show to anyone who claims EA doesn't care about animals.

Note: I'm sharing this an undisclosed period of time after the conference has occurred, because I don't want to inadvertently reveal who this individual is, and I don't want to embarrass this person.

I'm preparing to attend a conference, and I've been looking at the Swapcard profile of someone who lists many areas of expertise that I think I'd be interested in speaking with them about: consulting, people management, operations, policymaking, project management/program management, global health & development... wow, this person knows about a lot of different areas. Wow, this person even lists Global coordination & peace-building as an area of expertise! And AI strategy & policy! Then I look at this person's LinkedIn. They finished their bachelor's degree one month ago. So many things arise in my mind.

- One is about how this typifies a particular subtype of person who talks big about what they can do (which I think has some overlap with "grifter" or "slick salesman," and has a lot of overlap with people who promote themselves on social media).

- Another is that I notice that this person attended Yale, and it makes me want to think about

I list "social media manager" for Effective Altruism on LinkedIn - but I highlight that it's a voluntary role, not a job. I have done this for over 10 years, maintaining the "effective altruism" page amongst others, as well as other volunteering for EA.

+1 to the EAG expertise stuff, though I think that it’s generally just an honest mistake/conflicting expectations, as opposed to people exaggerating or being misleading. There aren’t concrete criteria for what to list as expertise so I often feel confused about what to put down.

@Eli_Nathan maybe you could add some concrete criteria on swapcard?

e.g. expertise = I could enter roles in this specialty now and could answer questions of curious newcomers (or currently work in this area)

interest = I am either actively learning about this area, or have invested at least 20 hours learning/working in this area.

One of the best experiences I've had at a conference was when I went out to dinner with three people that I had never met before. I simply walked up to a small group of people at the conference and asked "mind if I join you?" Seeing the popularity of matching systems like Donut in Slack workspaces, I wonder if something analogous could be useful for conferences. I'm imagining a system in which you sign up for a timeslot (breakfast, lunch, or dinner), and are put into a group with between two and four other people. You are assigned a location/restaurant that is within walking distance of the conference venue, so the administrative work of figuring out where to go is more-or-less handled for you. I'm no sociologist, but I think that having a small group is better for conversation than a large group, and generally also better than a two-person pairing. An MVP version of this could perhaps just be a Google Sheet with some RANDBETWEEN formulas.

The topics of conversation were pretty much what you would expect for people attending an EA conference: we sought advice about interpersonal relationships, spoke about careers, discussed moral philosophy, meandered through miscellaneous interests...
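The meal-matching idea above suggests a Google Sheet with RANDBETWEEN formulas as an MVP. As an alternative illustration, here is a minimal Python sketch of the same grouping step. It assumes "two to four other people" means groups of 3 to 5 in total, and the attendee names and restaurant list are hypothetical placeholders, not part of any existing system.

```python
import math
import random

# Assumption: "two to four other people" means groups of 3 to 5 people in total.
MIN_GROUP_SIZE = 3
MAX_GROUP_SIZE = 5


def make_meal_groups(attendees, seed=None):
    """Randomly split one timeslot's sign-ups into groups of 3 to 5 people."""
    if len(attendees) < MIN_GROUP_SIZE:
        raise ValueError("not enough sign-ups to form a single group")
    people = list(attendees)
    random.Random(seed).shuffle(people)
    # Use as few groups as possible, then spread people evenly across them,
    # so group sizes differ by at most one (and never drop below 3).
    num_groups = math.ceil(len(people) / MAX_GROUP_SIZE)
    base, extra = divmod(len(people), num_groups)
    groups, start = [], 0
    for i in range(num_groups):
        size = base + (1 if i < extra else 0)
        groups.append(people[start:start + size])
        start += size
    return groups


def assign_restaurants(groups, restaurants):
    """Pair each group with a nearby restaurant, cycling through the list."""
    return [(restaurants[i % len(restaurants)], group) for i, group in enumerate(groups)]


if __name__ == "__main__":
    # Hypothetical sign-ups for a single dinner timeslot.
    signups = ["Ana", "Ben", "Chen", "Dara", "Eli", "Fatima",
               "Gus", "Hana", "Ivo", "Jun", "Kai"]
    places = ["Noodle bar", "Falafel place", "Pizza spot"]  # hypothetical venues
    for place, group in assign_restaurants(make_meal_groups(signups, seed=1), places):
        print(place, group)
```

Splitting evenly across the minimum number of groups (rather than filling groups of 5 and leaving a remainder) avoids ending up with a lonely group of one or two. If there are more groups than restaurants, the sketch simply sends several groups to the same place.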

What are the norms on the EA Forum about ChatGPT-generated content?

If I see a forum post that looks like it was generated by an LLM generative AI tool, is it rude to write a comment asking "Was this post written by generative AI?" I'm not sure what the community's expectations are, and I want to be cognizant of not assuming my own norms/preferences are the appropriate ones.

Best books I've read in 2023

(I want to share, but this doesn't seem relevant enough to EA to justify making a standard forum post. So I'll do it as a quick take instead.)

People who know me know that I read a lot.[1] Although I don’t tend to have a huge range, I do think there is a decent variety in the interests I pursue: business/productivity, global development, pop science, sociology/culture, history. Of all the books I read in 2023, here is my best guess as to the ones that would be of most interest to an effective altruist.

For people who haven’t explored much yet

- Scrum: The Art of Doing Twice the Work in Half the Time. If you haven't worked in 'startupy' or lean organizations, this book may introduce you to some new ideas. I first worked for a startup in my late 20s, and I wish that I had read this book at that point.

- Developing Cultural Adaptability: How to Work Across Differences. This 32-page PDF is a good introduction to ideas of working with people from other cultures. This will be particularly useful if you are going to work in a different country (although there are cultural variations within a single country). This is a fairly light introduction, so don't stop h

Is talk about vegan diets being healthier mostly just confirmation bias and tribal thinking? A vegan diet can be very healthy or very unhealthy, and a non-vegan diet can also be very healthy or very unhealthy. The simplistic comparisons that I tend to see contrast vegans who put a lot of care and attention toward their food choices and the health consequences with people who aren't really paying attention to what they eat (something like the standard American diet or some similar diet without much intentionality). I suppose in a statistics class we would talk about non-representativeness.

Does the actual causal factor for health tend to be something more like cares about diet, or pays attention to what they eat, or socio-economic status? If we controlled for factors like these, would a vegan diet still be healthier than a non-vegan diet?

Is talk about vegan diets being healthier mostly just confirmation bias and tribal thinking?

I also think it often is. I find discussions for and against veganism surprisingly divisive and emotionally charged (see e.g. r/AntiVegan and r/exvegans).

That said, my understanding is that many studies do control for things like socio-economic status, and they mostly find positive results for many diets (including, but not exclusively, plant-based ones). You can see some mentioned in a previous discussion here.

In general, I think it's very reasonable when deciding whether something is "more healthy" to compare it to a "standard". As an extreme example, I would expect a typical chocolate-based diet to be less healthy than the standard American diet. So, while it would be healthier than a cyanide-based diet, it would still be true and useful to say that a chocolate-based diet is unhealthy.

I'm currently reading a lot of content to prepare for HR certification exams (from HRCI and SHRM), and in a section about staffing I came across this:

some disadvantages are associated with relying solely on promotion from within to fill positions of increasing responsibility:

- There is the danger that employees with little experience outside the organization will have a myopic view of the industry

Just the other day I had a conversation about the tendency of EA organizations to over-weight how "EA" a job candidate is,[1] so it particularly struck me to come across this today. We had joked about how a recent grad with no work experience would try figuring out how to do accounting from first principles (the unspoken alternative was to hire an accountant). So perhaps I would interpret the above quotation in the context of EA as "employees with little experience outside of EA are more likely to have a myopic view of the non-EA world." In a very simplistic sense, if we imagine EA as one large organization with many independent divisions/departments, a lot of the hiring (although certainly not all) is internal hiring.[2]

And I'm wondering how much expertise, skill, or experience i...

I think that the worries about hiring non-EAs are slightly more subtle than this.

Sure, they may be perfectly good at fulfilling the job description, but how does hiring someone with different values affect your organisational culture? It seems like in some cases it may be net-beneficial having someone around with a different perspective, but it can also have subtle costs in terms of weakening the team spirit.

Then you get into the issue where, if you have some roles you are fine hiring non-EAs for and some you want people to be value-aligned for, you may have an employee who you would not want to receive certain promotions or be elevated into certain positions, which isn't the best position to be in.

Not to mention, often a lot of time ends up being invested in skilling up an employee and if they are value-aligned then you don't necessarily lose all of this value when they leave.

I just looked at [ANONYMOUS PERSON]'s donations. The amount that this person has donated in their life is more than double the amount that I have ever earned in my life. This person appears to be roughly the same age as I am (we graduated from college ± one year of each other). Oof. It makes me wish that I had taken steps to become a software developer back when I was 15 or 18 or 22.

Oh, well. As they say, comparison is the thief of joy. I'll try to focus on doing the best I can with the hand I'm dealt.

My best estimate is that taking steps toward other paths would be better than trying to rewind life back to college age and start over. Like the famous Sylvia Plath quote about life branching like a fig tree, unchosen paths tend to wither away. I think that becoming a software developer wouldn't be the best path for me at this point: cost of tuition, competitiveness of the job market for entry-level developers, age discrimination, etc.

Being a 22-year-old fresh grad with a bachelor's degree in computer science in 2010 is quite a different scenario than being a 40-year-old who is newly self-taught through Free Code Camp in 202X. I predict that the former would tend to have a lot of good options (with wide variance, of course), while the latter would have fewer good options. If there were some sort of 'guarantee' regarding a good job offer, or if a wealthy benefactor offered to cover tuition and cost of living while I learn, then I would give training/education very serious consideration, but my understanding is that the 2010s were an abnormally good decade to work in tech, and there is now a glut of entry-level software developers.

Whether or not I personally act in the morally best way is irrelevant to the truth of the moral principles we’ve been discussing. Even if I’m hypocritical as you claim, that wouldn’t make it okay for you to keep buying meat.

This quote made me think of the various bad behaviors that we've seen within EA over the past few years. Although this quote is from a book about vegetarianism, the words "keep buying meat" could easily be substituted for some other behavior.

While publicity and marketing and optics all probably oppose this to a certain extent, I take some solace in the fact that some people behaving poorly doesn't actually diminish the validity of the core principles. I suppose the pithy version would be something like "[PERSON] did [BAD THING]? Well, I'm going to keep buying bednets."

I want to try and nudge some EAs engaged in hiring to be a bit more fair and a bit less exclusionary: I occasionally see job postings for remote jobs with EA organizations that set time zone location requirements.[1] Location seems like the wrong criterion; the right criterion is something more like "will work a generally similar schedule to our other staff." Is my guess here correct, or am I missing something?

What you actually want are people who are willing to work "normal working hours" for your core staff. You want to be able to schedule meetings an...

I've previously written a little bit about recognition in relation to maintenance/prevention, and this passage from Everybody Matters: The Extraordinary Power of Caring for Your People Like Family stood out to me as a nice reminder:

We tell the story in our class about the time our CIO Craig Hergenroether's daughter was working in another organization, and she said, "We're taking our IT team to happy hour tonight because we got this big e-mail virus, but they did a great job cleaning it up."

Our CIO thought, “We never got the virus. We put all the disciplines

A very minor thought.

TLDR: Try to be more friendly and supportive, and to display/demonstrate that in a way the other person can see.

Slightly longer musings: if you attend an EA conference (or some other event that involves you listening to a speaker), I suggest that you:

- look at the speaker while they are speaking

- have some sort of smile, nodding, or otherwise encouraging/supportive body language or facial expression.

This is likely less relevant for people who are very experienced public speakers, but for people who are less comfortable and at ease speaking in front of a crowd[1] it can be pretty disheartening to look out at an audience and see the majority of people looking at their phones and laptops.

I was at EAGxNYC recently, and I found it a little disheartening how many people in the audience were paying attention to their phones and laptops instead of paying attention to the speaker.[2] I am guilty of doing this in at least one talk that I didn't find interesting, and I am moderately ashamed of my behavior. I know that I wouldn't want someone to do that to me if I was speaking in front of a crowd. One speaker mentioned to me later that they appreciated my n...

I wish that people wouldn't use "rat" as shorthand for "rationalist."

For people who aren't already aware of the lingo/jargon it makes things a bit harder to read and understand. Unlike terms like "moral patienthood" or "mesa-optimizers" or "expected value," a person can't just search Google to easily find out what is meant by a "rat org" or a "rat house."[1] This is a rough idea, but I'll put it out there: the minimum a community needs to do in order to be welcoming to newcomers is to allow newcomers to figure out what you are saying.

Of course, I don't expect that reality will change to meet my desires, and even writing my thoughts here makes me feel a little silly, like a linguistic prescriptivist telling people to avoid dangling participles.

[1] Try searching Google for "what is rat in effective altruism" and see how far down you have to go before you find something explaining that rat means rationalist. If you didn't know it already and a writer didn't make it clear from context that "rat" means "rationalist", it would be really hard to figure out what "rat" means.

A brief thought on 'operations' and how it is used in EA (a topic I find myself occasionally returning to).

It struck me that operations work and non-operations work (within the context of EA) map very well onto the concept of staff and line functions. Line functions are those that directly advance an organization's core work, while staff functions are those that do not. Staff functions play advisory and support roles; they help the line functions. Staff functions are generally things like accounting, finance, public relations/communication, legal, and HR. Line functions are generally things like sales, marketing, production, and distribution. The details will vary depending on the nature of the organization, but I find this to be a somewhat useful framework for bridging concepts between EA and the broader world.

It also helps illustrate how little information is conveyed if I tell someone I work in operations. Imagine 'translating' that into non-EA verbiage as I work in a staff function. Unless the person I am talking to already has a very good understanding of how my organization works, they won't know what I actually do.

I run some online book clubs, some of which are explicitly EA and some of which are EA-adjacent: one on China as it relates to EA, one on professional development for EAs, and one on animal rights/welfare/advocacy. I don't like self-promoting, but I figure I should post this at least once on the EA Forum so that people can find it if they search for "book club" or "reading group." Details, including links for joining each of the book clubs, are in this Google Doc.

I want to emphasize that this isn't funded through an organization, I'm not trying to get email...

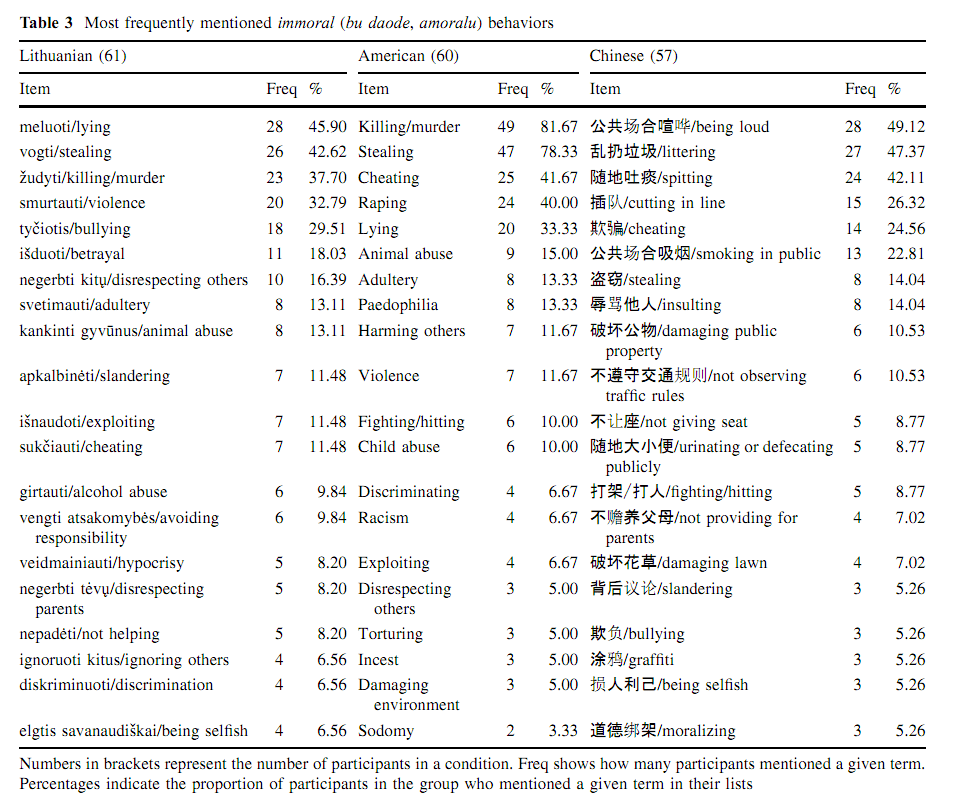

I'm skimming through an academic paper[1] that I'd roughly describe as cross-cultural psychology about morality, and the stark difference between what kinds of behaviors Americans and Chinese people view as immoral[2] was surprising to me.

The American list has so much of what I would consider as causing harm to others, or malicious. The Chinese list has a lot of what I would consider as rude, crass, or ill-mannered. The differences here remind me of how I have occasionally pushed against the simplifying idea of words having easy equivalents between English and Chinese.[3]

There are, of course, issues with taking this too seriously: issues like spitting, cutting in line, or urinating publicly are much more salient issues in Chinese society than in American society. I'm also guessing that news stories about murders and thefts are more commonly seen in American media than in China's domestic media. But overall I found it interesting, and a nice nudge/reminder against the simplifying idea that "we are all the same."

I want to provide an alternative to Ben West's post about the benefits of being rejected. This isn't related to CEA's online team specifically, but is just my general thoughts from my own experience doing hiring over the years.

While I agree that "the people grading applications will probably not remember people whose applications they reject," two scenarios[1] come to mind for job applicants that I remember[2]:

- The application is much worse than I expected. This would happen if somebody had a nice resume, a well-put-together cover letter, and then showed up to an interview looking slovenly. Or if they said they were good at something, and then were unable to demonstrate it when prompted.[3]

- Something about the application is noticeably abnormal (usually bad). This could be the MBA with 20 years of work experience who applied for an entry level part-time role in a different city & country than where he lived[4]. This could be the French guy I interviewed years ago who claimed to speak unaccented American English, but clearly didn't.[5] It could be the intern who came in for an interview and requested a daily stipend that was higher than the salary of anyone on my team. I

I'm very pleased to see that my writing on the EA Forum is now referenced in a job posting from Charity Entrepreneurship to explain to candidates what operations management is, described as "a great overview of Operations Management as a field." This gives me some warm fuzzy feelings.

Imperfect Parfit (written by Daniel Kodsi and John Maier) is a fairly long review (by 2024 internet standards) of Parfit: A Philosopher and His Mission to Save Morality. It draws attention to some of his oddities and eccentricities (such as brushing his teeth for hours, or eating the same dinner every day (not unheard of among famous philosophers)). Considering Parfit's influence on the ideas that many of us involved in EA have, it seemed worth sharing here.

Anyone can call themselves a part of the EA movement.

I sort of don't agree with this idea, and I'm trying to figure out why. It is so different from a formal membership (like being a part of a professional association like PMI), in which you have a list of members and maybe a card or payment.

Here is my current perspective, which I'm not sure that I fully endorse: on the 'ladder' of being an EA (or of any other informal identity) you don't have to be on the very top rung to be considered part of the group. You probably don't even have to be on the top handful of rungs. Is halfway up the ladder enough? I'm not sure. But I do think that you need to be higher than the bottom rung or two. You can't just read Doing Good Better and claim to be an EA without any additional action. Maybe you aren't able to change your career due to family and life circumstances. Maybe you don't earn very much money, and thus aren't donating. I think I could still consider you an EA if you read a lot of the content and are somehow engaged/active. But there has to be something. You can't just take one step up the ladder, then claim the identity and wander off.

My brain tends to jump to analogies, so I'll use t...

I'm concerned whenever I see things like this:

"I want to place [my pet cause], a neglected and underinvested cause, at the center of the Effective Altruism movement."[1]

In my mind, this seems anti-scouty. Rather than finding what works and what is impactful, it is saying "I want my team to win." Or perhaps the more charitable interpretation is that this person is talking about a rough hypothesis and I am interpreting it as a confident claim. Of course, there are many problems with drawing conclusions from small snippets of text on the internet, and if I meet this person and have a conversation I might feel very differently. But at this point it seems like a small red flag, demonstrating that there is a bit less cause-neutrality here (and a bit more being wedded to a particular issue) than I would like. But it is hard to argue with personal fit; maybe this person simply doesn't feel motivated about lab grown meat or bednets or bio-risk reduction, and this is their maximum impact possibility.

[1] I changed the exact words so that I won't publicly embarrass or draw attention to the person who wrote this. But to be clear, this is not a thought experiment of mine; someone actually wrote thi

In my experience, many of those arguments are bad and not cause-neutral, though to me your take seems too negative -- cause prioritization is ultimately a social enterprise and the community can easily vet and detect bad cases, and having proposals for new causes to vet seems quite important (i.e. the Popperian insight, individuals do not need to be unbiased, unbiasedness/intersubjectivity comes from open debate).

This feels misplaced to me. Making an argument for some cause to be prioritised highly is in some sense one of the core activities of effective altruism. Of course, many people who'd like to centre their pet cause make poor arguments for its prioritisation, but in that case I think the quality of argument is the entire problem, not anything about the fact they're trying to promote a cause. "I want effective altruists to highly prioritise something that they currently don't" is in some sense how all our existing priorities got to where they are. I don't think we should treat this kind of thing as suspicious by nature (perhaps even the opposite).

The third one seems at least generally fine to me -- clearly the poster believes in their theory of change and isn't unbiased, but that's generally true of posts by organizations seeking funding. I don't know if the poster has made a (metaphorically) better bednet or not, but thought the Forum was enhanced by having the post here.

The other two are posts from new users who appear to have no clear demonstrated connection to EA at all. The occasional donation pitch or advice request from a charity that doesn't line up with EA very well at all is a small price to pay for an open Forum. The karma system dealt with preventing diversion of the Forum from its purposes. A few kind people offered some advice. I don't see any reason for concern there.

I think a lot of the EA community shares your attitude regarding exuberant people looking to advance different cause areas or interventions, which actually concerns me. I am somewhat encouraged by the disagreement with your comment that makes this disposition more explicit. Currently, I think that EA, in terms of extension of resources, has much more solicitude for thoughts within or adjacent to recognized areas. Furthermore, an ability to fluently convey one's ideas in EA terms or with an EA attitude is important.

Expanding on jackva re the Popperian insight, having individuals passionately explore new areas to exploit is critical to the EA project, and I am a bit concerned that EA is often disinterested in exploring in directions where a proponent lacks some of EA's usual trappings and/or lacks status signals. I would be inclined to be supportive of passion and exuberance in the presentation of ideas where this is natural to the proponent.

the worry that someone with high degrees of partiality for a particular cause manages to hijack EA resources is much weaker than the concern that potentially promising cases may be ignored because they have an unfortunate messenger

I think you've phrased that very well. As much as I may want to find the people who are "hijacking" EA resources, the benefit of that is probably outweighed by how it disincentivizes people from trying new things. Thanks for commenting back and forth with me on this. I'll try to jump the gun a bit less from now on when it comes to gut-feeling evaluations of new causes.

In a recent post on the EA Forum (Why I Spoke to TIME Magazine, and My Experience as a Female AI Researcher in Silicon Valley), I couldn't help but notice that comments from famous and/or well-known people got many more upvotes than comments from less well-known people, even though the content of the comments was largely similar.

I'm wondering to what extent this serves as one small data point in support of the "too much hero worship/celebrity idolization in EA" hypothesis, and (if so) to what extent we should do something about it. I feel kind of conflicted, because in a very real sense reputation can be a result of hard work over time,[1] and it seems unreasonable to say that people shouldn't benefit from that. But it also seems antithetical to the pursuit of truth, philosophy, and doing good to weigh the messenger so heavily over the message.

I'm mulling this over, but it is a complex and interconnected enough issue that I doubt I will create any novel ideas with some casual thought.

Perhaps just changing the upvote buttons to something more like "this content creates/nurtures a discussion space that lines up with the principles of EA"? I'm not confident that would change muc...

I'm not convinced by this example; in addition to expressing the view, Toby's message is a speech act that serves to ostracize behaviour in a way that messages from random people do not. Since his comment achieves something the others do not, it makes sense for people to treat it differently. This is similar to the way people get more excited when a judge agrees with them that they were wronged than when a random person does; it is not just because of the prestige of the judge, but because of the consequences of that agreement.

If we interpret an up-vote as "I want to see more of this kind of thing", is it so surprising that people want to see more such supportive statements from high-status people?

I would feel more worried if we had examples of e.g. the same argument being made by different people and the higher-status person getting rewarded more. Even then, perhaps we do really want to see more of high-status people reasoning well in public.

Generally, insofar as karma is a lever for rewarding behaviour, we probably care more about the behaviour of high-status people and so we should expect to see them getting more karma when they behave well, and also losing more when they behave badly (which I think we do!). Of course, if we want karma to be something other than an expression of what people want to see more of then it's more problematic.

Every now and then I see (or hear) people involved in EA refer to Moloch[1] as if this is a specific force that should be actively resisted and acted against. Genuine question: are people just using the term "Moloch" to refer to incentives[2] that nudge us to do bad things? Is there any reason why we should say "Moloch" instead of "incentives," or is this merely a sort of in-group shibboleth? Am I being naïve or otherwise missing something here?

[1] Presumably, Scott Alexander's 2014 essay Meditations on Moloch has been very widely read among EAs.

[2] As well as the other influences on our motives from things external to ourselves, such as the culture and society that we grew up in, or how we earn respect and admiration from peers.

Some people involved in effective altruism have really great names for their blogs: Ollie Base has Base Rates, Dion Tan has Diontology, and Ben West has Benthamite. It is really cool how people are able to take their names and, with some slight adjustments, turn them into clever references. If I were the blogging type and my surname weren't something so uncommon/unique, I would take a page from their book.

It is sort of curious/funny that posts stating "here is some racism" get lots of attention, and posts stating "let's take the time to learn about inclusivity, diversity, and discrimination"[1] don't get much attention. I suppose it is just a sort of unconscious bias: some topics are incendiary and controversial and are more appealing/exciting to engage with, while some topics are more hufflepuffy and do the work and aren't so exciting. Is it vaguely analogous to a polar bear stranded on an ice floe getting lots of clicks, but the randomized control...

This is in relation to the Keep EA high-trust idea, but it seemed tangential enough and butterfly idea-ish that it didn't make sense to share this as a comment on that post.

Rough thoughts: focus a bit less on people and a bit more on systems. Some failures are 'bad actors,' but my rough impression is that far more often bad things happen because either:

- the system/structures/incentives nudge people toward bad behavior, or

- the system/structures/incentives allow bad behavior

It very much reminds me of "Good engineering eliminates users being able to do the wrong thing as much as possible. . . . You don't design a feature that invites misuse and then use instructions to try to prevent that misuse." I've also just learned about the hierarchy of hazard controls, which seems like a nice framework for thinking about 'bad things.'

I think it is great to be able to trust people, but I also want institutions designed in such a way that it is okay if someone is in the 70th percentile of trustworthiness rather than the 95th percentile of trustworthiness.

Low-confidence guess: small failures often occur not because people are malicious or selfish, but because they aren't aware of better ways to do t...

I just had a call with a young EA from Oyo State in Nigeria (we were connected through the excellent EA Anywhere), and it was a great reminder of how little I know regarding malaria (and public health in developing countries more generally). In a very simplistic sense: are bednets actually the most cost effective way to fight against malaria?

I've read a variety of books in the development economics canon, I'm a big fan of the use of randomized control trials in social science, I remember worm wars and microfinance not being as amazing as people thought and...

I suspect that the biggest altruistic counterfactual impact I've had in my life was merely because I was in the right place at the right time: a moderately heavy cabinet/shelf thing was tipping over and about to fall on a little kid (I don't think it would have killed him. He probably would have had some broken bones, lots of bruising, and a concussion). I simply happened to be standing close enough to react.

It wasn't as a result of any special skillset I had developed, nor of any well thought-out theory of change; it was just happenstance. Realistically, ...

Some musings about experience and coaching. I saw another announcement relating to mentorship/coaching/career advising recently. It looked like the mentors/coaches/advisors were all relatively junior/young/inexperienced. This isn't the first time I've seen this. Most of this type of thing I've seen in and around EA involves the mentors/advisors/coaches being only a few years into their career. This isn't necessarily bad. A person can be very well-read without having gone to school, or can be very strong without going to a gym, or can speak excellent Japane...

Decoding the Gurus is a podcast in which an anthropologist and a psychologist critique popular guru-like figures (Jordan Peterson, Nassim N. Taleb, Brené Brown, Ibram X. Kendi, Sam Harris, etc.). I've listened to two or three previous episodes, and my general impression is that the hosts are too rambly/joking/jovial, and that the interpretations are harsh but fair. I find the description of their episode on Nassim N. Taleb to be fairly representative:

Taleb is a smart guy and quite fun to read and listen to. But he's also an infinite singularity of arrogance and hyperbole. Matt and Chris can't help but notice how convenient this pose is, when confronted with difficult-to-handle rebuttals.

Taleb is a fun mixed bag of solid and dubious claims. But it's worth thinking about the degree to which those solid ideas were already well... solid. Many seem to have been known for decades even by all the 'morons, frauds and assholes' that Taleb hates.

To what degree does Taleb's reputation rest on hyperbole and intuitive-sounding hot-takes?

A few weeks ago they released an episode about Eliezer Yudkowsky titled Eliezer Yudkowsky: AI is going to kill us all. I'm only partway through listening to it...

If anybody wants to read and discuss books on inclusion, diversity, and similar topics, please let me know. This is a topic that I am interested in, and a topic that I want to learn more about. My main interest is in the angle/aspect of diversity in organizations (such as corporations, non-profits, etc.), rather than broadly society-wide issues (although I suspect they cannot be fully disentangled).

I have a list of books I intend to read on DEI topics (I've also listed them at the bottom of this quick take in case anybody can't access my shared Notio...

I'm reading Brotopia: Breaking Up the Boys' Club of Silicon Valley, and this paragraph stuck in my head. I'm wondering about EA and "mission alignment" and similar things.

Which brings me to a point the PayPal Mafia member Keith Rabois raised early in this book: he told me that it’s important to hire people who agree with your “first principles”—for example, whether to focus on growth or profitability and, more broadly, the company’s mission and how to pursue it. I’d agree. If your mission is to encourage people to share more online, you shouldn’t hire some...

In discussions (both online and in-person) about applicant experience in hiring rounds, I've heard repeatedly that applicants want feedback. Giving in-depth feedback is costly (and risky), but here is an example I have received that strikes me as low-cost and low-risk. I've tweaked it a little to make it more of a template.

"Based on your [resume/application form/work sample], our team thinks you're a potential fit and would like to invite you to the next step of the application process: a [STEP]. You are being asked to complete [STEP] because you are curre...

Ben West recently mentioned that he would be excited about a common application. It got me thinking a little about it. I don't have the technical/design skills to create such a system, but I want to let my mind wander a little bit on the topic. This is just musings and 'thinking out loud,' so don't take any of this too seriously.

What would the benefits be for some type of common application? For the applicant: send an application to a wider variety of organizations with less effort. For the organization: get a wider variety of applicants.

Why not just have t...

I didn't learn about Stanislav Petrov until I saw announcements about Petrov Day a few years ago on the EA Forum. My initial thought was "what is so special about Stanislav Petrov? Why not celebrate Vasily Arkhipov?"

I had known about Vasily Arkhipov for years, but the reality is that I don't think one of them is more worthy of respect or idolization than the other. My point here is more about something like founder effects, path dependency, and cultural norms. You see, at some point someone in EA (I'm guessing) arbitrarily decided that Stanislav Petrov was ...

Random musing from reading a reddit comment:

Some jobs are proactive: you have to be the one doing the calls and you have to make the work yourself and no matter how much you do you're always expected to carry on making more, you're never finished. Some jobs are reactive: The work comes in, you do it, then you wait for more work and repeat.

Proactive roles are things like business development/sales, writing a book, marketing and advertising, and research. You can almost always do more, and there isn't really an end point unless you want to impose an arbitrar...

Some questions cause me to become totally perplexed. I've been asked these (or variations of these) by a handful of people in the EA community. These are not difficulties or confusions that require PhD-level research to explain; instead, I think they represent a sort of communication gap/challenge/disconnect and differing assumptions.

Note that these are fuzzy musings on communication gaps and on differing assumptions of what is normal. In a very broad sense you could think of this as an extension of the maturing/broadening of perspectives that we all do when w...

I've been reading about performance management, and a section of the textbook I'm reading focuses on The Nature of the Performance Distribution. It reminded me a little of Max Daniel's and Ben Todd's How much does performance differ between people?, so I thought I'd share it here for anyone who is interested.

The focus is less on true outputs and more on evaluated performance within an organization. It is a fairly short and light introduction, but I've put the content here if you are interested.

A theme that jumps out at me is situational specificity, as it ...

(caution: grammatical pedantry, and ridiculously low-stakes musings. possibly the most mundane and unexciting critique of an EA org ever)

The name of Founders Pledge should actually be Founders' Pledge, right? It is possessive, and the pledge belongs to multiple founders. If I remember my childhood lessons, apostrophes come after the s for plural things:

- the cow's friend (this one cow has a friend)

- the birds' savior (all of these birds have a savior)

A new thought: maybe I've been understanding it wrong. I've always thought of the "pledge" in Founders Pledge as a...

I've been thinking about small and informal ways to build empathy[1]. I don't have big or complex thoughts on this (and thus I'm sharing rough ideas as a quick take rather than as a full post). This is a tentative and haphazard musing/exploration, rather than a rigorous argument.

- Read about people who experience various hardships or suffering. I think that this is one of the benefits of reading fiction: it helps you more realistically understand (on an emotional level) the lives of other people. Not all fiction is created equal, and you probably won't develo...

For anyone who is interested in tech policy, I thought I'd share this list of books from the University of Washington's Gallagher Law Library: https://lib.law.uw.edu/c.php?g=1239460&p=9071046

The collection ranges from court-focused books to privacy-focused books, ethics, and criminal justice. There is an excellent breadth of material.

This is about donation amounts, investing, and patient philanthropy. I want to share a simple Excel graph showing the annual donation amounts from two scenarios: 10% of salary, and 10% of investment returns.[1] A while back a friend was astounded at the difference in dollar amounts, so I thought I should share this a bit more widely. The specific outcomes will change based on the assumptions that we input, of course.[2] A person could certainly combine both approaches, and there really isn't anything stopping you from donating more than 10%, so int...
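To make the two scenarios concrete, here is a minimal Python sketch of the comparison. The original spreadsheet's assumptions aren't given here, so every input below is a hypothetical placeholder: a constant salary of 60,000, investing 12,000 per year, a 5% annual return, a 40-year horizon, contributions at the start of each year, and reinvesting the 90% of each year's return that isn't donated.

```python
def salary_donations(salary: float, years: int, rate: float = 0.10) -> list[float]:
    """Scenario 1: donate a fixed share of a (constant, hypothetical) salary each year."""
    return [salary * rate for _ in range(years)]


def return_donations(annual_saving: float, annual_return: float, years: int,
                     rate: float = 0.10) -> list[float]:
    """Scenario 2: donate a share of each year's investment returns.

    Assumptions (hypothetical): savings are added at the start of each year,
    returns accrue at year end, and the undonated part of the return is reinvested.
    """
    balance = 0.0
    donations = []
    for _ in range(years):
        balance += annual_saving          # contribute at the start of the year
        gain = balance * annual_return    # growth over the year
        donation = gain * rate            # donate 10% of this year's gain
        balance += gain - donation        # reinvest the remainder
        donations.append(donation)
    return donations


if __name__ == "__main__":
    # Hypothetical inputs, chosen only for illustration.
    SALARY = 60_000
    SAVING = 12_000   # 20% of salary invested each year
    RETURN = 0.05     # 5% annual return
    YEARS = 40

    by_salary = salary_donations(SALARY, YEARS)
    by_returns = return_donations(SAVING, RETURN, YEARS)
    for year in (1, 10, 20, 30, 40):
        print(f"year {year:>2}: 10% of salary {by_salary[year - 1]:>9,.0f}   "
              f"10% of returns {by_returns[year - 1]:>9,.0f}")
```

Under assumptions like these, the returns-based donation starts tiny and grows with the portfolio; whether and when it overtakes the salary-based amount depends entirely on the inputs you choose.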

I've been mulling over the idea of proportional reciprocity for a while. I've had some musings sitting in a Google Doc for several months, and I think that either I share a rough/sloppy version of this, or it will never get shared. So here is my idea. Note that this is in relation to job applications within EA, and I felt nudged to share this after seeing Thank You For Your Time: Understanding the Experiences of Job Seekers in Effective Altruism.

- - - -

Proportional reciprocity

I made this concept up.[1] The general idea is that relationships ...

I just finished reading Science Fictions: How Fraud, Bias, Negligence, and Hype Undermine the Search for Truth. I think the book is worth reading for anyone interested in truth and figuring out what is real, but I especially liked the aspirational Mertonian norms, a concept I had never encountered before, and which served as a theme throughout the book.

I'll quote directly from the book to explain, but I'll alter the formatting a bit to make it easier to read:

In 1942, Merton set out four scientific values, now known as the ‘Mertonian Norms’. None of them...

I remember being very confused by the idea of an unconference. I didn't understand what it was and why it had a special name distinct from a conference. Once I learned that it was a conference in which the talks/discussions were planned by participants, I was a little bit less confused, but I still didn't understand why it had a special name. To me, that was simply a conference. The conferences and conventions I had been to had involved participants putting on workshops. It was only when I realized that many conferences lack participative elements that I r...

This is a sloppy rough draft that I have had sitting in a Google doc for months, and I figured that if I don't share it now, it will sit there forever. So please read this as a rough grouping of some brainstormy ideas, rather than as some sort of highly confident and well-polished thesis.

- - - - - -

What feedback do rejected applicants want?

From speaking with rejected job applicants within the EA ecosystem during the past year, I roughly conclude that they want feedback in two different ways:

- The first way is just emotional care, which is really j...

I was recently reminded about BookMooch, and read a short interview with the creator, John Buckman.

I think that the interface looks a bit dated, but it works well: you send people books you have that you don't want, and other people send you books that you want but don't have. I used to use BookMooch a lot from around 2006 to 2010, but when I moved outside of the USA in 2010 I stopped using it. One thing I like is that it feels very organic and non-corporate: it doesn't require a monthly membership, there are no fees for sending and receiving books,[1]...

(not well thought-out musings. I've only spent a few minutes thinking about this.)

In thinking about the focus on AI within the EA community, the Fermi paradox popped into my head. For anyone unfamiliar with it and who doesn't want to click through to Wikipedia, my quick summary of the Fermi paradox is basically: if there is such a high probability of extraterrestrial life, why haven't we seen any indications of it?

On a very naïve level, AI doomerism suggests a simple solution to the Fermi paradox: we don't see signs of extraterrestrial life because c...

I guess shortform is now quick takes. I feel a small amount of negative reaction, but my best guess is that this reaction is nothing more than a general human "change is bad" feeling.

Is quick takes a better name for this function than shortform? I'm not sure. I'm leaning toward yes.

I wonder if this will nudge people away from writing longer posts using the quick takes function.

This is just for my own purposes. I want to save this info somewhere so I don't lose it. This has practically nothing to do with effective altruism, and should be viewed as my own personal blog post/ramblings.

I read the blog post What Trait Affects Income the Most?, written by Blair Fix, a few years ago, and I really enjoyed seeing some data on it. At some point later I wanted to find it and couldn't, and today I stumbled upon it again. The very short and simplistic summary is that hierarchy (a fuzzy concept that I understand to be roughly "cla...

I vaguely remember reading something about buying property with a longtermist perspective, but I can't remember the justification against doing it. This is basically using people's inclination to choose immediate rewards over rewards that come later in the future. The scenario was (very roughly) something like this:

You want to buy a house, and I offer to help you buy it. I will pay for 75% of the house, you will pay for 25% of the house. You get to own/use the house for 50 years, and starting in year 51 ownership transfers to me. You get a huge discount to...
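The scenario above leans on time discounting: a house you receive in 50 years is worth far less to you today than a house now. A minimal Python sketch of that arithmetic, comparing what the 75% partner pays up front with the present value of the house when ownership transfers after 50 years. The discount and appreciation rates are hypothetical placeholders, and the sketch deliberately ignores maintenance, taxes, rent, and default risk; it only illustrates the discounting, not the (truncated) argument for or against the deal.

```python
def present_value(amount: float, years: int, discount_rate: float) -> float:
    """Value today of receiving `amount` after `years` years at a constant discount rate."""
    return amount / (1 + discount_rate) ** years


def deal_summary(price: float, investor_share: float, years_until_transfer: int,
                 discount_rate: float, appreciation: float = 0.0) -> dict:
    """Compare the investor's up-front payment with the discounted value of the
    house they receive at transfer (constant appreciation is a hypothetical assumption)."""
    future_value = price * (1 + appreciation) ** years_until_transfer
    return {
        "investor_pays_now": price * investor_share,
        "pv_of_future_house": present_value(future_value, years_until_transfer, discount_rate),
    }


if __name__ == "__main__":
    # Hypothetical inputs: 300,000 house, 75% paid by the investor, transfer after 50 years.
    for rate in (0.02, 0.03, 0.05):
        summary = deal_summary(300_000, 0.75, 50, discount_rate=rate, appreciation=0.02)
        print(f"discount rate {rate:.0%}: pays {summary['investor_pays_now']:,.0f} now, "
              f"future house worth {summary['pv_of_future_house']:,.0f} today")
```

Whoever values the future more (a lower personal discount rate) sees the deferred house as worth more today, which is exactly the gap the scenario exploits.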

These are random musings on cultural norms, mainstream culture, and how/where we choose to spend our time and attention.

Barring the period when I was roughly 16-20 and interested in classic rock, I've never really been invested in music culture. By 'music culture' I mean things like knowing the names of the most popular bands of the time, knowing the difference between [subgenre A] and [subgenre B] off the top of my head, caring about the lives of famous musicians, etc.[1] Celebrity culture in general is something I've never gotten into, but avoiding ...

Would anyone find it interesting/useful for me to share a forum post about hiring, recruiting, and general personnel selection? I have some experience running hiring for small companies, and I have recently been reading a lot of academic papers from the Journal of Personnel Psychology on research into the most effective hiring practices. I'm thinking of creating a sequence about hiring, or maybe about HR and managing people more broadly.

I'm grappling with an idea of how to schedule tasks/projects, how to prioritize, and how to set deadlines. I'm looking for advice, recommended readings, thoughts, etc.

The core question here is "how should we schedule and prioritize tasks whose result becomes gradually less valuable over time?" The rest of this post is just exploring that idea, explaining context, and sharing examples.

Here is a simple model of the world: many tasks that we do at work (or maybe also in other parts of life?) fall into either sharp decrease to zero or sharp reduction in value...
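One standard way to formalize the gradual-decay case (not necessarily the model intended here) is to assume each task loses value at a constant rate per day until it is finished, and that tasks are done one at a time. Under those assumptions, minimizing total lost value is the classic single-machine weighted-completion-time problem, and Smith's rule (also called weighted shortest processing time: do tasks in descending order of decay rate divided by duration) is optimal. A minimal Python sketch with hypothetical tasks, plus a brute-force check over every ordering:

```python
from dataclasses import dataclass
from itertools import permutations
from typing import Iterable


@dataclass
class Task:
    name: str
    duration: float       # days of work needed (hypothetical)
    decay_per_day: float  # value lost per day the result is delayed (hypothetical)


def total_value_lost(order: Iterable[Task]) -> float:
    """Sum of decay_per_day * completion_time when tasks are done back to back."""
    elapsed = 0.0
    lost = 0.0
    for task in order:
        elapsed += task.duration
        lost += task.decay_per_day * elapsed
    return lost


def smith_order(tasks: list[Task]) -> list[Task]:
    """Smith's rule: highest decay-per-day relative to duration goes first."""
    return sorted(tasks, key=lambda t: t.decay_per_day / t.duration, reverse=True)


if __name__ == "__main__":
    # Hypothetical tasks, for illustration only.
    tasks = [
        Task("grant report", duration=3, decay_per_day=2),
        Task("blog post", duration=1, decay_per_day=1),
        Task("hiring round", duration=5, decay_per_day=6),
    ]
    best = smith_order(tasks)
    print([t.name for t in best], total_value_lost(best))
    # Brute force agrees with Smith's rule on small inputs.
    print(min(total_value_lost(p) for p in permutations(tasks)))
```

The "sharp decrease to zero" case behaves more like a hard deadline and isn't captured by this linear model.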

I've been reading a few academic papers on my "to-read" list, and The Crisis of Confidence in Research Findings in Psychology: Is Lack of Replication the Real Problem? Or Is It Something Else? has a section that made me think about epistemics, knowledge, and how we try to make the world a better place. I'll include the exact quote below, but my rough summary of it would be that multiple studies found no relationship between the presence or absence of highway shoulders and accidents/deaths, and thus the shoulders weren't built. Unfortunately, none of the studies had...

Evidence-Based Management

What? Isn't it all evidence-based? Who would take actions without evidence? Well, often people make decisions based on an idea they got from a pop-business book (I am guilty of this), off of gut feelings (I am guilty of this), or off of what worked in a different context (I am definitely guilty of this).

Rank-and-yank (I've also heard it called forced distribution and forced ranking, and Wikipedia describes it as a vitality curve) is an easy example to pick on, but we could easily look at some other management practice in hiring, mark...
