Posts tagged community

Recent discussion

This is the first in a sequence of four posts taken from my recent report: Why Did Environmentalism Become Partisan?

Introduction

In the United States, environmentalism is extremely partisan.

It might feel like this was inevitable. Caring about the environment, and supporting...

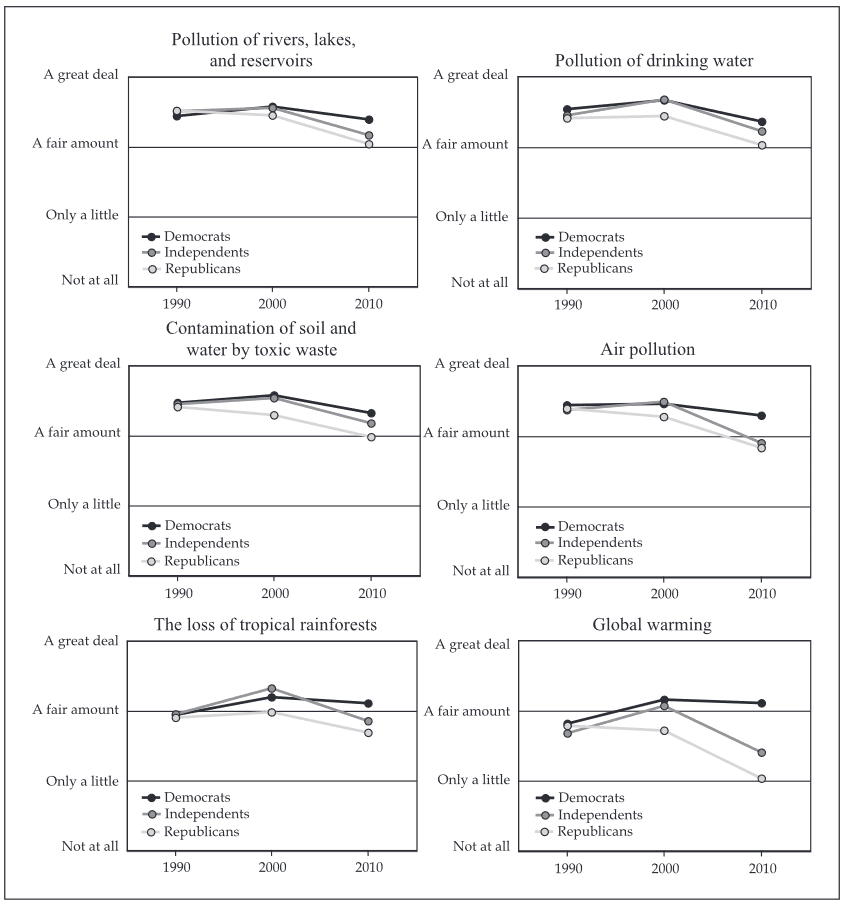

Climate change is more partisan than other environmental issues, but other environmental issues have also become partisan since 1990.[1] The shift in focus from local environmental issues to climate change is part of what made it easier for environmentalism to become partisan, but it is not the only factor.

Environmental concern by partisan identification, averaged over a four-point scale. “I’m going to read you a list of environmental problems. As I read each one, please tell me if you personally worry about this problem a great deal, a fair amount, o...

This is the second in a sequence of four posts taken from my recent report: Why Did Environmentalism Become Partisan?

Many of the specific claims made here are investigated in the full report. If you want to know more about how fossil fuel companies’ campaign contributions, the partisan lean of academia, or newspapers’ reporting on climate change have changed since 1980, the information is there.

Introduction

Environmentalism in the United States today is unusually partisan, compared to other issues, countries, or even the United States in the 1980s. This contingency suggests that the explanation centers on the choices of individual decision makers, not on broad structural or ideological factors that would be consistent across many countries and times.

This post describes the history of how particular partisan alliances were made involving the environmental movement between 1980 and 2008. Since...

This could be a long slog but I think it could be valuable to identify the top ~100 OS libraries and identify their level of resourcing to avoid future attacks like the XZ attack. In general, I think work on hardening systems is an underrated aspect of defending against...

I'd be interested in exploring funding this and the broader question of ensuring funding stability and security robustness for critical OS infrastructure. @Peter Wildeford is this something you guys are considering looking at?

Around the end of Feb 2024 I attended the Summit on Existential Risk and EAG: Bay Area (GCRs), during which I did 25+ one-on-ones about the needs and gaps in the EA-adjacent catastrophic risk landscape, and how they’ve changed.

The meetings were mostly with senior managers...

Intellectual diversity seems very important to figuring out the best grants in the long term.

If the community currently has, say, $20bn to allocate, you only need a 10% improvement to future decisions to be worth +$2bn.

Funder diversity also seems very important for community health, and therefore our ability to attract & retain talent. It's not attractive to have your org & career depend on such a small group of decision-makers.

I might quantify the value of the talent pool around another $10bn, so again, you only need a ~10% increase here to be worth a b...

Here’s the funding gap that gets me the most emotionally worked up:

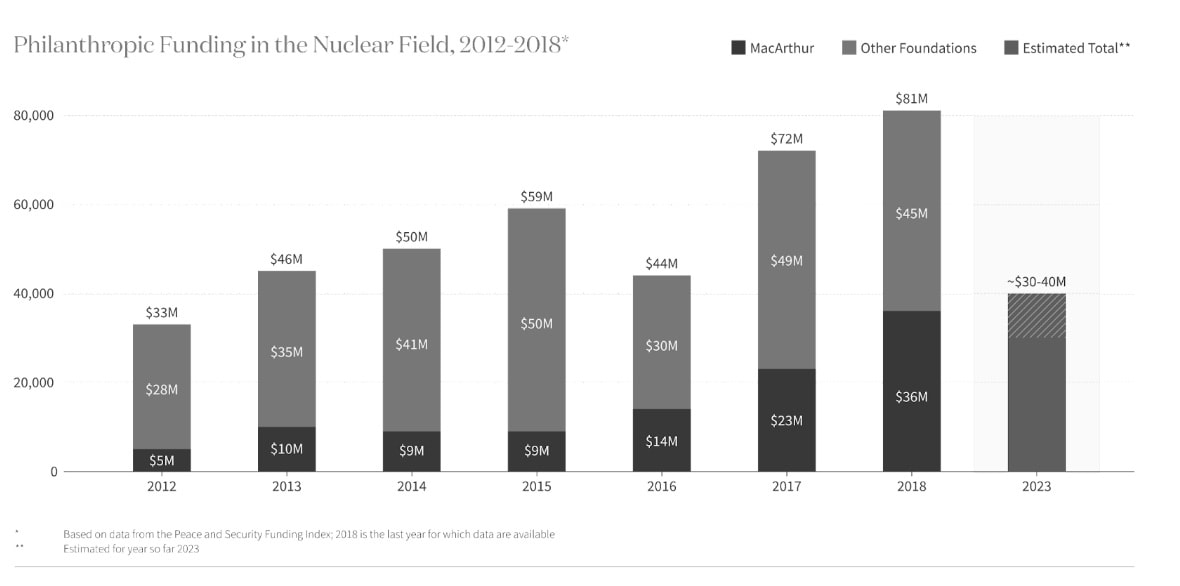

In 2020, the largest philanthropic funder of nuclear security, the MacArthur Foundation, withdrew from the field, reducing total annual funding from $50m to $30m.

That means people who’ve spent decades building experience in the field will no longer be able to find jobs.

And $30m a year of philanthropic funding for nuclear security is tiny by conventional standards. (In fact, the budget of Oppenheimer was $100m, so a single movie cost more than 3x the annual funding for non-profit policy efforts to reduce the risk of nuclear war.)

And even other neglected EA causes, like factory farming, catastrophic biorisks and AI safety, these days receive hundreds of millions of dollars of philanthropic funding, so at least on this dimension, nuclear security is even more neglected.

I agree that a full accounting of neglectedness should consider...

Just as the 2022 crypto crash had many downstream effects for effective altruism, so could a future crash in AI stocks have several negative (though hopefully less severe) effects on AI safety.

Why might AI stocks crash?

The most obvious reason AI stocks might crash is that...

One quick point is that divesting, while it would help a bit, wouldn't obviously solve the problems I raise – AI safety advocates could still look like alarmists if there's a crash, and other investments (especially including crypto) will likely fall at the same time, so the effect on the funding landscape could be similar.

With divestment more broadly, it seems like a difficult question.

I share the concerns about it being biasing and bad for PR, and feel pretty worried about this.

On the other side, if something like TAI starts to happen, then the index will go ...

Animal Ethics has recently launched Senti, an Ethical AI assistant designed to answer questions related to animal ethics, wild animal suffering, and longtermism. We at Animal Ethics believe that while AI technologies could potentially pose significant risks to animals, ...

Caspar Oesterheld came up with two of the most important concepts in my field of work: Evidential Cooperation in Large Worlds and Safe Pareto Improvements. He also came up with a potential implementation of evidential decision theory in boundedly rational agents called decision auctions, wrote a comprehensive review of anthropics and how it interacts with decision theory, which most of my anthropics discussions built on, and independently decided to work on AI some time in late 2009 or early 2010.

Needless to say, I have a lot of respect for Caspar’s work. I’ve often felt very confused about what to do in my attempts at conceptual research, so I decided to ask Caspar how he did his research. Below is my writeup from the resulting conversation.

How Caspar came up with surrogate goals

The process

- Caspar had spent six months FTE thinking about a specific bargaining problem

Date: Tuesday May 14th

Time: 7pm - 9pm

Location: Mad Radish at 859 Bank St

Theme: beginner friendly & social

We’ve been on a good streak of seeing new faces at our events and I hope that trend will continue for this one. We’ll start with introductions and a brief ...

Unfortunately I'm sick and bowing out, but the meetup is still on!

To help you find the group, at least one person should be wearing a shirt with the heart-in-lightbulb logo of effective altruism, and there should be a decent turnout (~8-10 people?) based on RSVPs from the various platforms where we advertise the event. The group may be in the upstairs portion of the venue.