It's a common story. Someone who's passionate about EA principles, but has little in the way of resources, tries and fails to do EA things. They write blog posts, and nothing happens. They apply to jobs, and nothing happens. They do research, and don't get that grant. Reading articles no longer feels exciting; it feels like a chore, or worse, a reminder of their own inadequacy. I heartily sympathize with anybody who comes to this place, and I encourage them to disentangle themselves from this painful situation any way they can.

Why does this happen? Well, EA has two targets.

1. Subscribers to EA principles whom the movement wants to become big donors or effective workers.

2. Big donors and effective workers whom the movement wants to subscribe to EA principles.

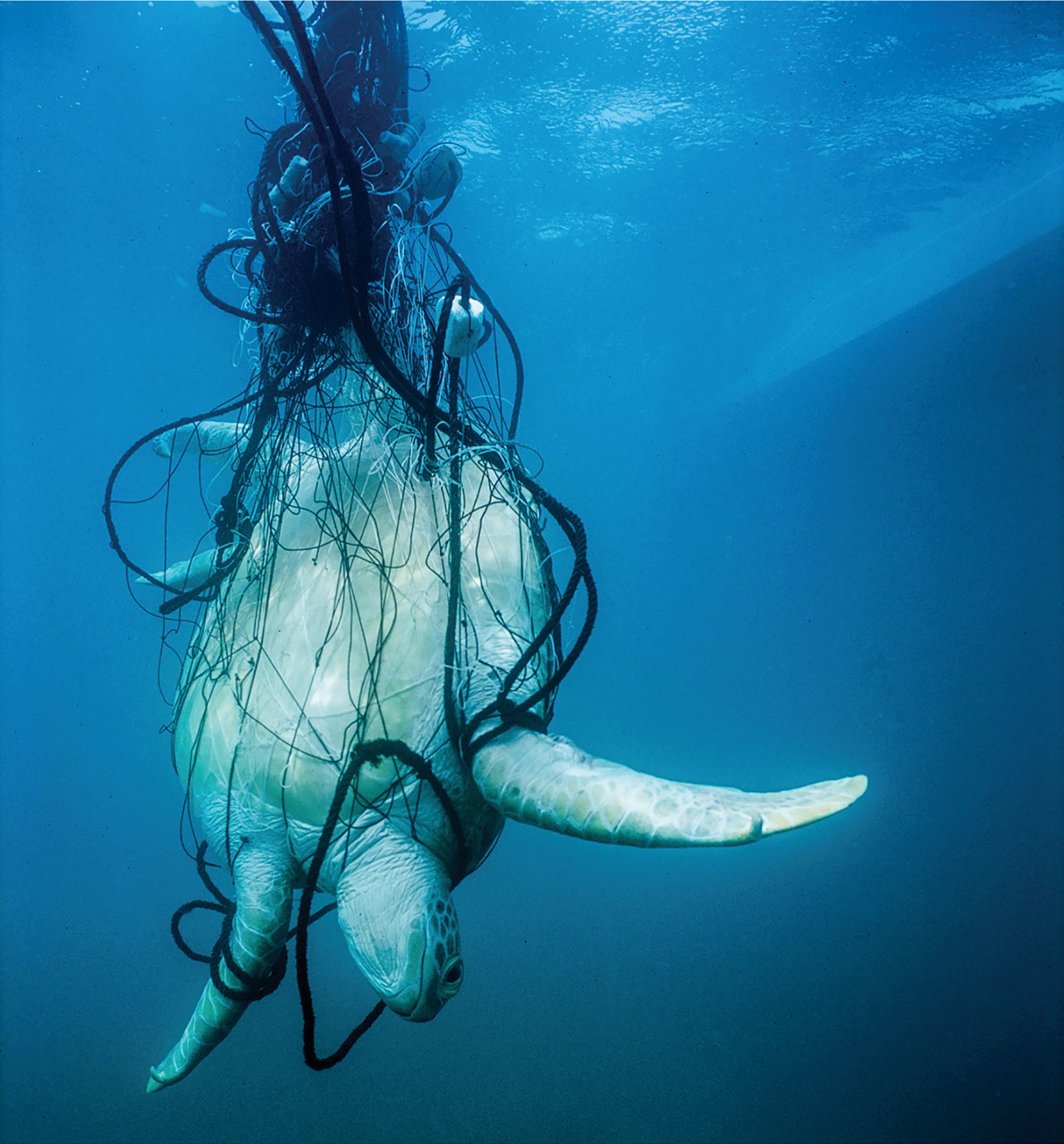

I won't claim to know what weight this community and its institutions give to (1) vs. (2). But when we set out to catch big fish, we risk turning the little fish into bycatch. The technical term for this is churn.

Part of the issue is the planning fallacy. When we're setting out, we underestimate how long and costly it will be to achieve an impact, and overestimate what we'll accomplish. The higher above average you aim, the more likely you are to fall short.

And another part is expectation-setting. If the expectation right from the get-go is that EA is about quickly achieving big impact, almost everyone will fail, and think they're just not cut out for it. I wish we had a holiday that was the opposite of Petrov Day, where we honored somebody who went a little bit out of their comfort zone to try and be helpful in a small and simple way. Or whose altruistic endeavor was passionate, costly, yet ineffective, and who tried it anyway, changed their mind, and valued it as a learning experience.

EA organizations and writers are doing us a favor by presenting a set of ideas that speak to us. They can't be responsible for addressing all our needs. That's something we need to figure out for ourselves. EA is often criticized for its "think global" approach. But EA is our local, our global local. How do we help each other to help others?

From one little fish in the sEA to another, this is my advice:

- Don't aim for instant success. Aim for 20 years of solid growth. Alice wants to maximize her chance of a 1,000% increase in her altruistic output this year. Zahara's trying to maximize her chance of a 10% increase in her altruistic output. They're likely to do very different things to achieve these goals. Don't be like Alice. Be like Zahara.

- Start small, temporary, and obvious. Prefer the known, concrete, solvable problem to the quest for perfection. Yes, running an EA book club or, gosh darn it, picking up trash in the park is a fine EA project to cut our teeth on. If you donate 0% of your income, donating 1% of your income is moving in the right direction. Offer an altruistic service to one person. Interview one person to find out what their needs are.

- Ask, don't tell. When entrepreneurs do market research, it's a good idea to avoid telling the customer about the idea. Instead, they should ask the customer about their needs and problems. How do they solve their problems right now? Then they can go back to the Batcave and consider whether their proposed solution would be an improvement.

- Let yourself become something, just do it a little more gradually. It's good to keep your options open, but EA can be about slowing and reducing the process of commitment, increasing the ability to turn and bend. It doesn't have to be about hard stops and hairpin turns. It's OK to take a long time to make decisions and figure things out.

- Build each other up. Do Zoom calls. Ask each other questions. Send a message to a stranger whose blog posts you like. Form relationships, and care about those relationships for their own sake. That is literally what EA community development is about; a community of like-minded friends is far stronger than an organization of ideologues.

- You don't have to brand everything as EA. If you want to encourage your friends to donate to MIRI or GiveWell, you can just talk about those specific organizations. An argument that's true often doesn't need to be argued. "They work to keep AI technology safe for humanity" and "they give bed nets to prevent malaria" are causes that kind of sell themselves. If people want to know why you recommend them, you'll be able to answer very well.

- Be a founder and an instigator, even if the organization is temporary, the activity incomplete. Do a little bit of everything. Have the guts to write for this forum, if you have time. Organize an event with a friend. Buy a domain name and throw together a website.

- Stay true to the principles, even if you're not sure how to put them into practice.

- Don't be bycatch. It's OK to come in and out of EA, and back in again if you want to. The best thing you can possibly do for the community is make EA work for you, rather than just making yourself work for EA.

Nemo day, perhaps