Posts tagged community

Recent discussion

I am writing this post in response to a question that was raised by Nick a few days ago,

1) as to whether the white sorghum and cassava that our project aims to process will be used in making alcohol, 2) whether the increase in production of white sorghum and cassava...

I worked at OpenAI for three years, from 2021 to 2024, on the Alignment team, which eventually became the Superalignment team. I worked on scalable oversight, as part of the team developing critiques as a technique for using language models to spot mistakes in other language models. I then worked to refine an idea from Nick Cammarata into a method for using language models to generate explanations for features in language models. I was then promoted to managing a team of 4 people that worked on trying to understand language model features in context, leading to the release of an open source "transformer debugger" tool.

I resigned from OpenAI on February 15, 2024.

GPT-5 training is probably starting around now. It seems very unlikely that GPT-5 will cause the end of the world. But it’s hard to be sure. I would guess that GPT-5 is more likely to kill me than an asteroid, a supervolcano, a plane crash or a brain tumor. We can predict...

Yup.

> the small movement that PauseAI builds now will be the foundation which bootstraps this larger movement in the future

is one of the main points of my post. If you support PauseAI today you may unleash a force which you cannot control tomorrow.

Episodes 5 and 6 of Netflix's 3 Body Problem seem to have longtermist and utilitarian themes (content warning: spoiler alert)

- In episode 5 ("Judgment Day"), Thomas Wade leads a secret mission to retrieve a hard drive on a ship in order to learn more about the San-Ti, who are going to arrive on Earth in 400 years. The plan involves using an array of nanofibers to tear the ship to shreds as it passes through the Panama Canal, killing everyone on board. Dr. Auggie Salazar (who invented the nanofibers) is uncomfortable with this plan, but Wade justifies it on the grounds that saving the whole world from the San-Ti outweighs the deaths of the few hundred on board.

- In episode 6 ("The Stars Our Destination"), Jin Cheng asks Auggie for help building a space sail out of her nanofibers, but Auggie refuses, saying that we should focus on the problems affecting humanity now rather than the San-Ti who are coming in 400 years. This mirrors the argument that concerns about existential risks from advanced AI systems yet to be developed distract from present-day concerns such as climate change and issues with existing AI systems.

Summary

- U.S. poverty is deadlier than many might realize and worse than most wealthy countries.

- The U.S. spends $600B/year on poverty interventions, and many are less effective than they could be because of poor design.

- The work of GiveDirectly U.S. and others

Cool article! I enjoyed reading it. Seems like a great way to apply effective altruism style thinking to a different cause area.

My main takeaway from this is that domestic aid is currently not neglected but highly ineffective. Making something that already has lots of resources going into it (and a lot more people willing to put resources into it) much more effective can be extremely impactful.

I'm curious how likely it is that US domestic aid could become a lot better, given that a lot of people care about making it go well but there is also political resistance.

Thanks again for this article!

^I'm going to be lazy and tag a few people: @Joey @KarolinaSarek @Ryan Kidd @Leilani Bellamy @Habryka @IrenaK Not expecting a response, but if you are interested, feel free to comment or DM.

Have you done some research on the expected demand (e.g. surveying the organisers of the mentioned programs, community builders, maybe Wytham Abbey event organisers)? I can imagine the location and how long it takes to get there (unless you are already based in London, though even then it's quite the trip) could be a deterrent, especially for events <3 days. (Another factor may be "fanciness" - I've worked with orgs and attended/organised events where fancy venues were eschewed, and others where they were deemed indispensable. If that building is anything like the EA Hotel - or the average Blackpool building - my expectation is it would rank low on this. Kinda depends on your target audience/user.)

Not done any research (but asking here, now :)). I guess 1 week is more of a sweet spot, but we have hosted weekend events before at CEEALAR. In the last year, CEEALAR has hosted retreats for a few orgs (ALLFED, Orthogonal, [another AI Safety org], PauseAI (upcoming)) and a couple of bootcamps (ML4G), all of which we have charged for. So we know there is at least some demand. Because of hosting grantees long term, CEEALAR isn't able to run long events (e.g. 10-12 week courses or start-up accelerators), so demand there is untested. But I think given the cost competitiveness, there would be some demand there.

Re fanciness, this is especially aimed at the budget (cost effectiveness) conscious. Costs would be 3-10x less than what is typical for UK venues. And there would be another bonus of having an EA community next door.

I made a quick (and relatively uncontroversial) poll on how people are feeling about EA. I'll share if we get 10+ respondents.

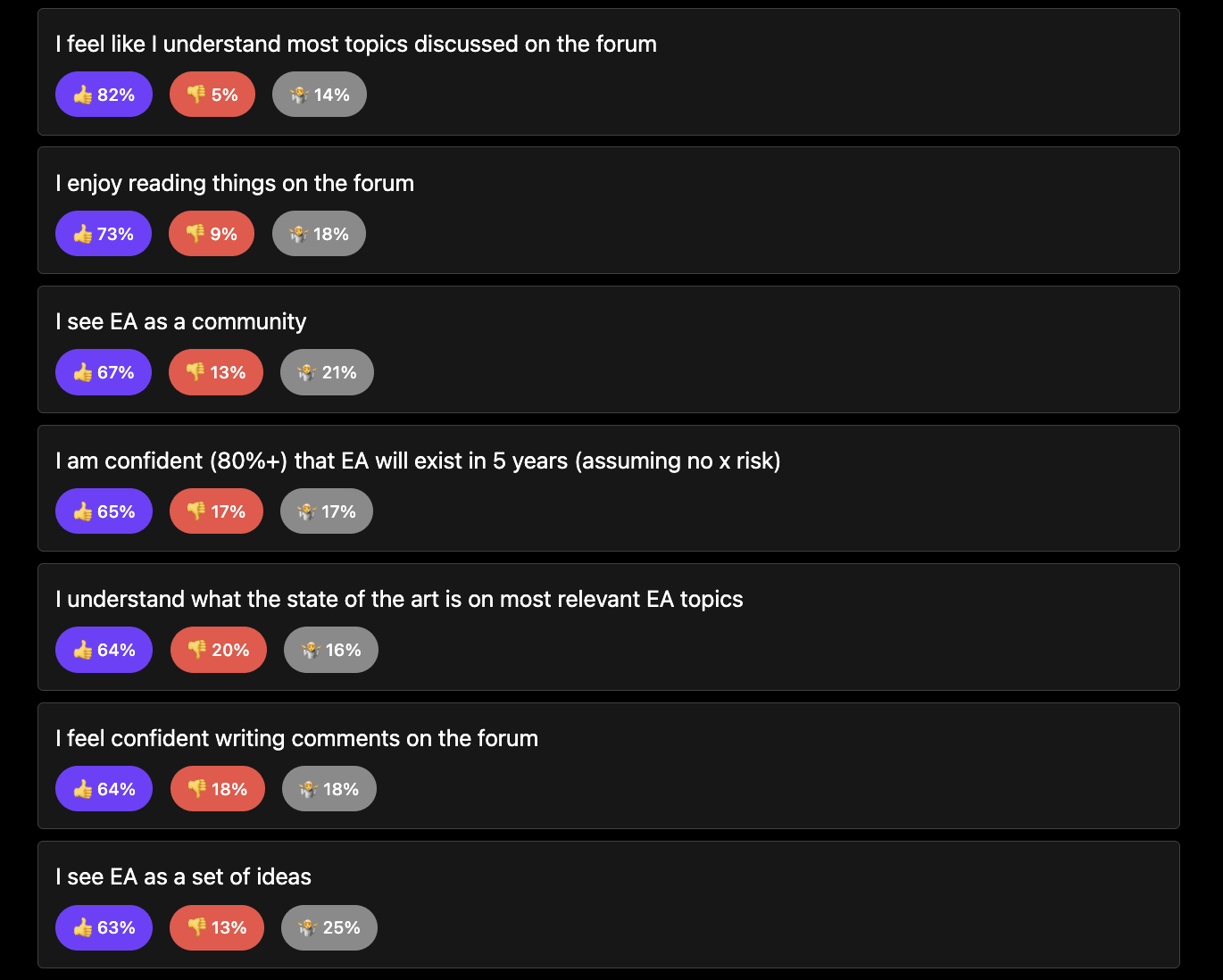

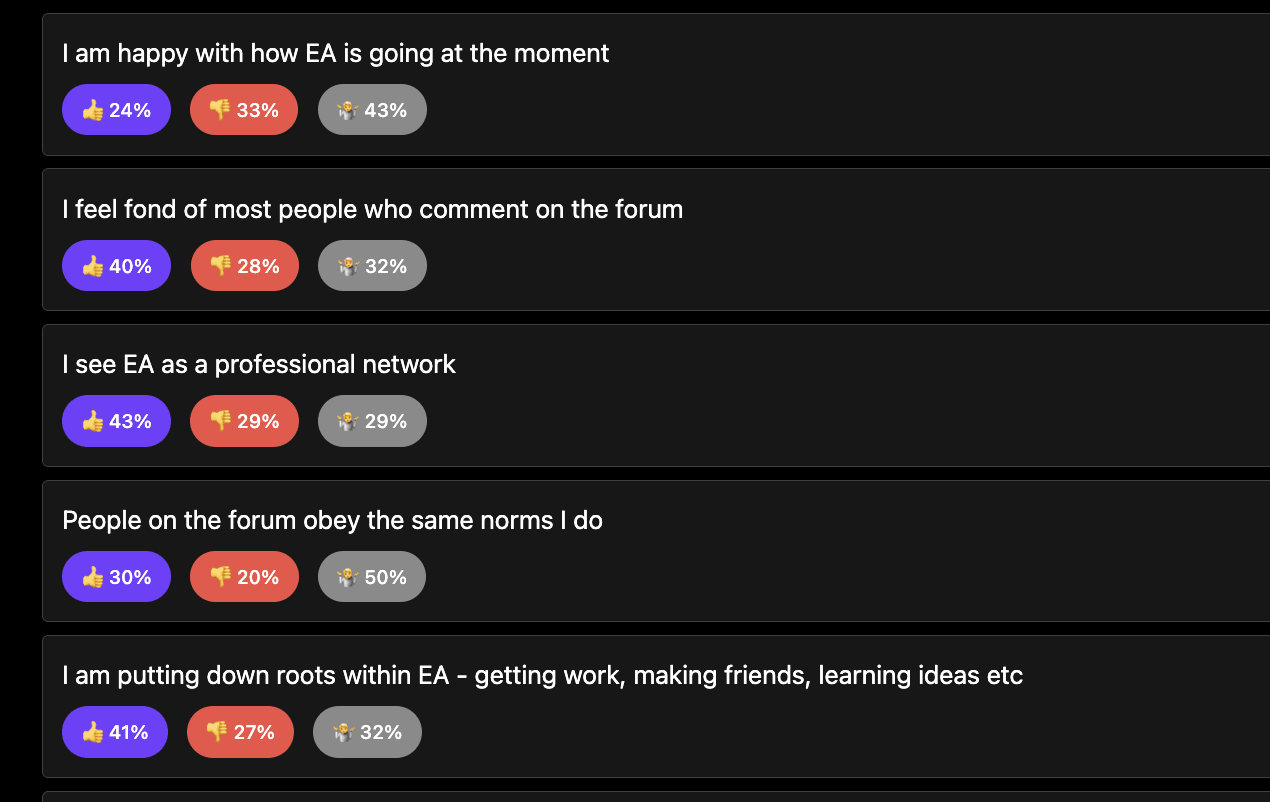

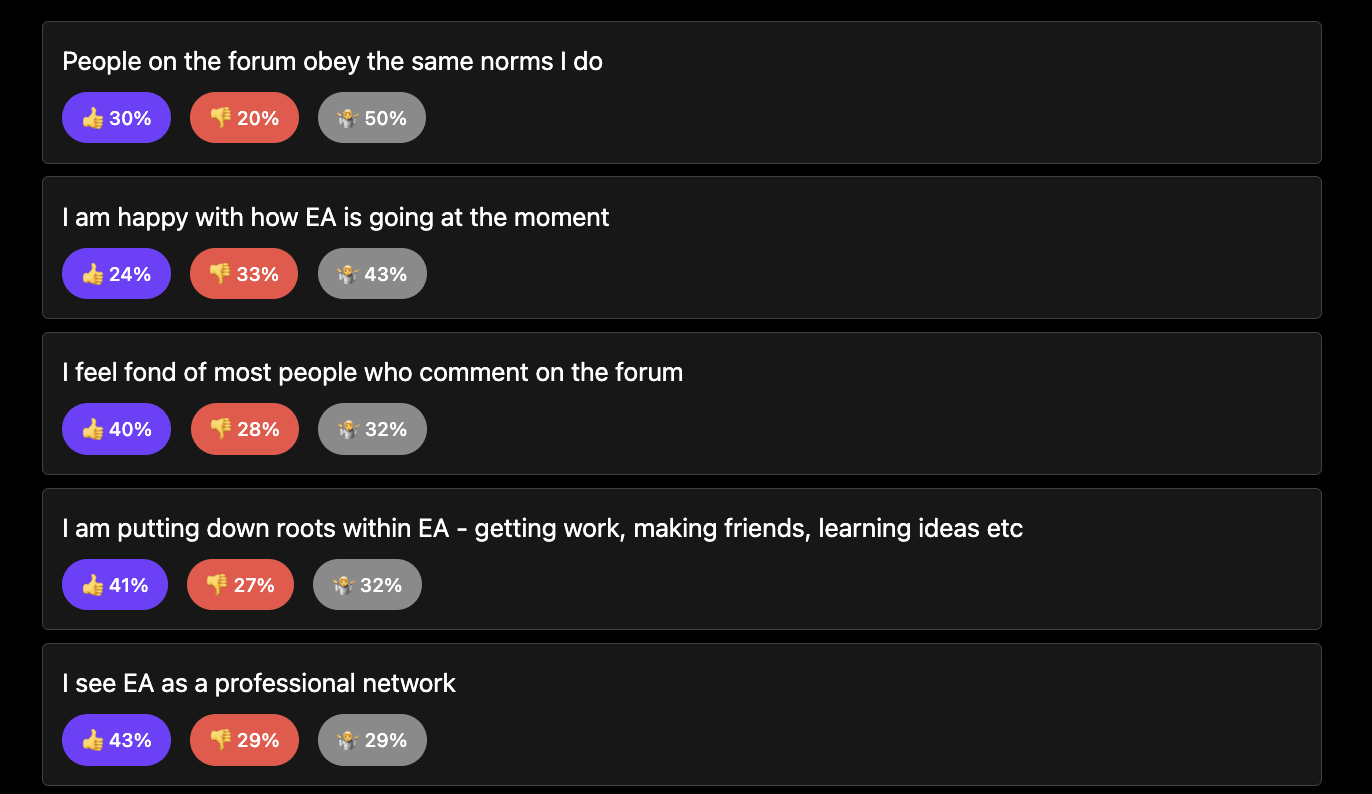

Currently 27-ish[1] people have responded:

Full results: https://viewpoints.xyz/polls/ea-sense-check/results

Statements people agree with:

Statements where there is significant conflict:

Statements where people aren't sure or dislike the statement:

[1] The applet makes it harder to track numbers than the full site.

Thanks Anthony, this is a very interesting post (and I appreciate your answer to my question on your previous post). I have a couple more questions if you have time to answer them: