There was a recent discussion on Twitter about whether global development had been deprioritised within EA. This struck a chord with some (*edit*: despite the claim in the Twitter thread being false). So:

What is the priority of global poverty within EA, compared to where it ought to be?

I am going to post some data and some theories. I'd like it if people in the comments tried to falsify them, and then we'd know the answer.

- Some people seem to think that global development is lower priority than it should be within EA. Is this view actually widespread?

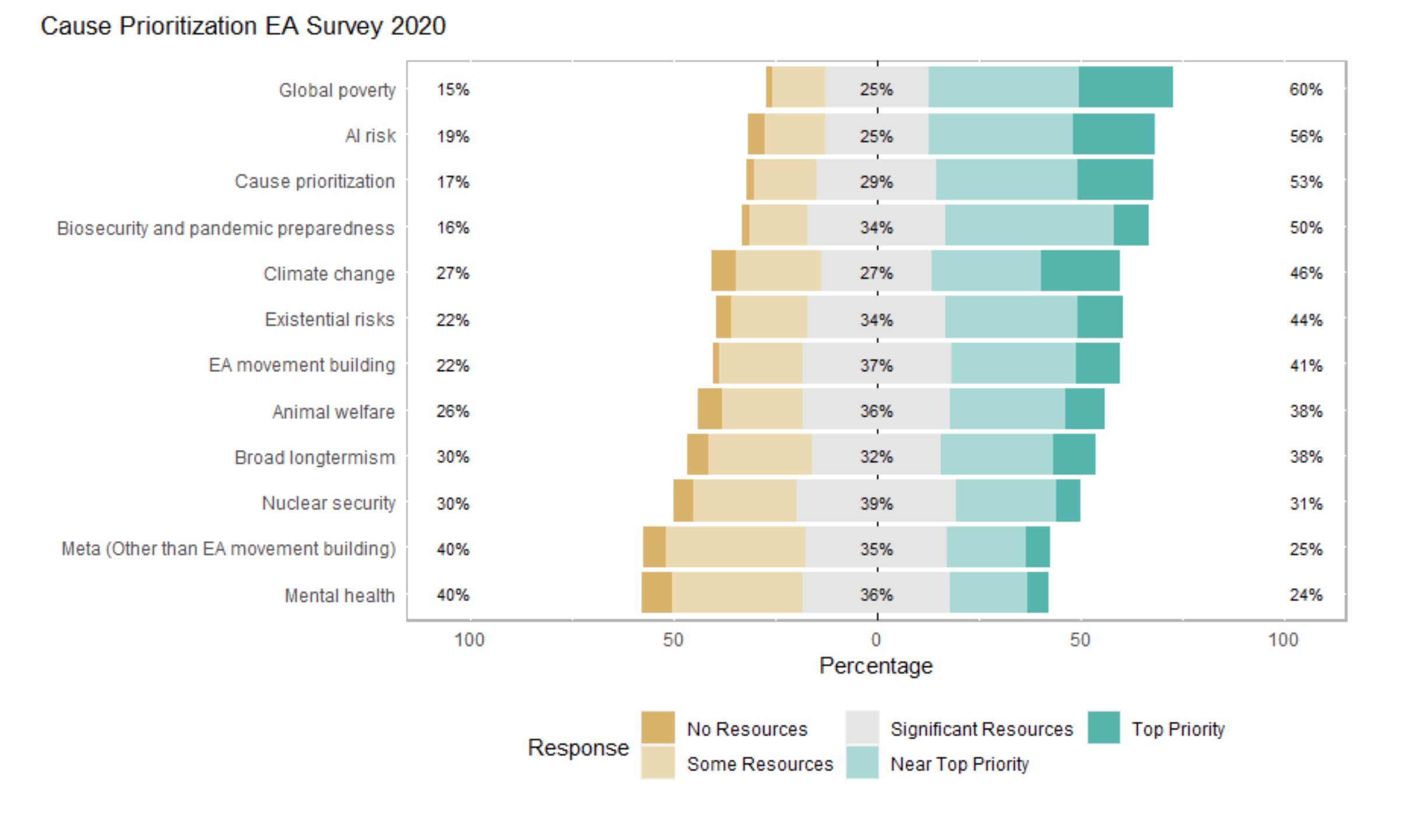

- Global poverty was held in very high esteem in 2020. Without further evidence we should assume it still is. In the 2020 survey, no cause area had a higher average rating (I'm eyeballing this graph) or a higher % of near top + top priority ratings. In 2020, global development was considered the highest priority by EAs in general.

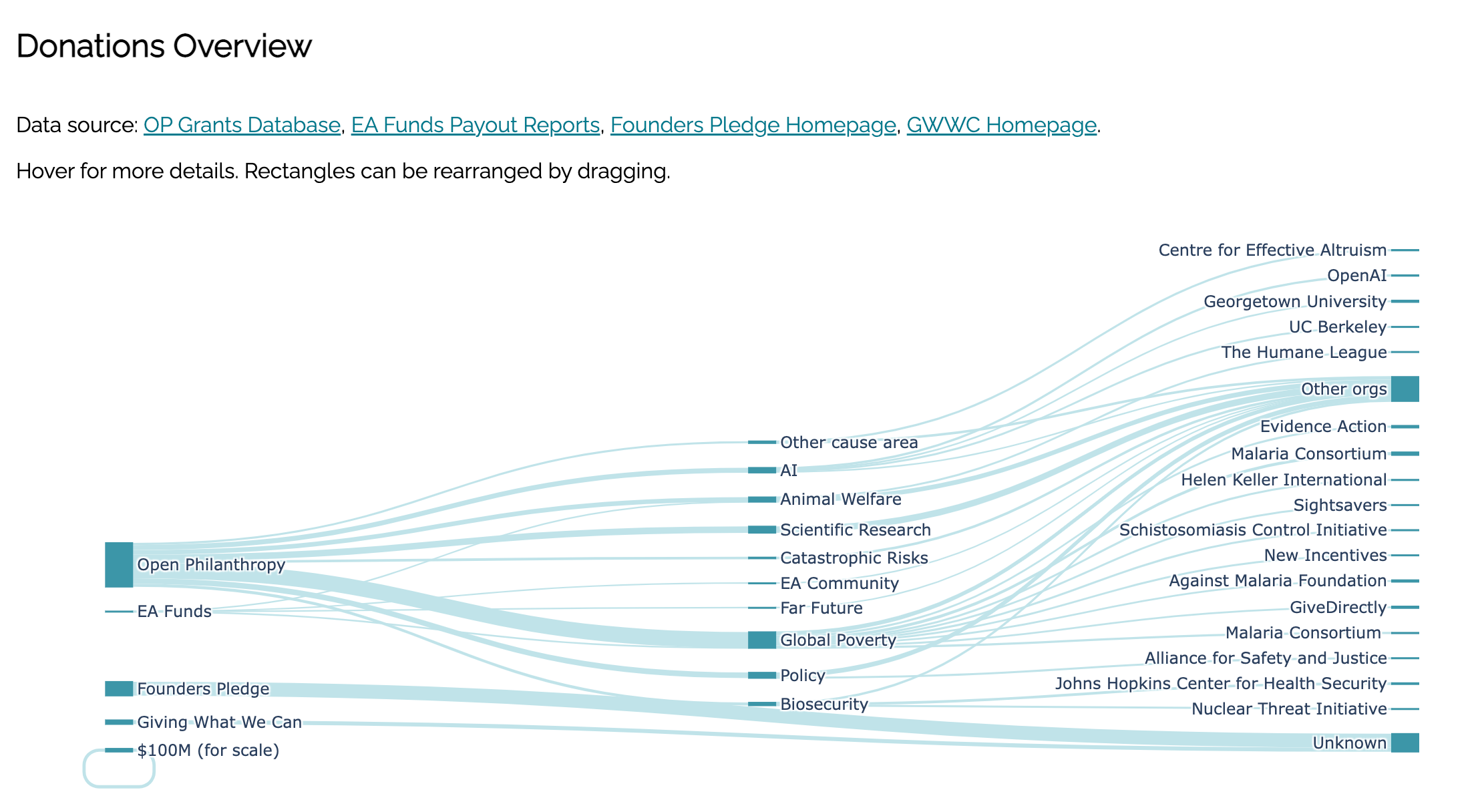

- Global poverty gets the most money by cause area from Open Phil & GWWC according to https://www.effectivealtruismdata.com/

- The FTX future fund lists economic growth as one of its areas of interest (https://ftxfuturefund.org/area-of-interest/)

- Theory: Elite EA conversation discusses global poverty less than AI or animal welfare. What is the share of cause areas among forum posts, 80k episodes or EA tweets? I'm sure some of this information is trivial for one of you to find. Is this theory wrong?

- Theory: Global poverty work has ossified around GiveWell and their top charities. Jeff Mason and Yudkowsky both made variations of this point. Yudkowsky's reasoning was that risk-takers hadn't been in global poverty research anyway - it attracted a more conservative kind of person. I don't know how to operationalise thoughts against this, but maybe one of you can.

- Personally, I think that many people find global poverty uniquely compelling. It's unarguably good. You can test it. It has quick feedback loops (compared to many other cause areas). I think it's good to be in coalition with the most effective area of an altruistic space that vibes with so many people. I like global poverty as a key concern (even though it's not my key concern) because I like good coalitional partners. And Longtermist and global development EAs seem to me to be natural allies.

- I can also believe that if we care about the lives of people currently alive in the developing world and have AI timelines of less than 20 years, we shouldn't focus on global development. I'm not an expert here and this view makes me uncomfortable, but conditional on short AI timelines, I can't find fault with it. In terms of QALYs there may be more risk to the global poor from AI than from malnourishment. If this is the case, EA would move away from being divided by cause areas towards a primary divide of "AI soon" vs "AI later" (though deontologists might argue it's still better to improve people's lives now rather than save them from something that kills all of us). Feel free to suggest flaws in this argument.

- I'm going to seed a few replies in the comments. I know some of you hate it when I do this, but please bear with me.

What do you think? What are the facts about this?

endnote: I predict 50% that this discussion won't work, resolved by me in two weeks. I think that people don't want to work together to build a sort of vague discussion on the forum. We'll see.

[redacted because tone sounds innocent and making excuses, lacks humility]

[comment edited loads to reduce confusion]

What are people objecting to here? Is it the style or the ideas? Or were certain phrases provoking bad reactions like

[examples deleted. i think they were fuelling it and doing more harm than good.]

The thing is, none of these understandable reactive guesses are remotely true of me. I was simply trying to satisfy OP's desire to avoid the anticipated unwillingness of respondents to have the sort of 'vague discussion' he wanted, which I took to mean something unfiltered, direct from the subconscious and super authentic. If I don't at least do that in draft, the ideas disappear.

And rephrasing is a kind of punishment for unfashionable thought, and often takes hours of self-torture, and results in something watered down anyway. I really think it's more fun and intellectually rewarding to go Deleuzian over everybody's asses like a Narcissist from It's Always Sunny in Philadelphia. Also, my OCD and anxiety and reality issues make it very hard for me to edit at the moment. I thought it better to post unpolished, relevant stuff than not post.

I'm sorry I seem to have caused offence, confusion, reduction of social trust and general harm to EA, which is pretty much the only movement I ever cared about, and which I abstained from participating in from ages 15 to 28 or 29 due to fear of doing harm to a very young movement with my craziness. Or perhaps it's less serious and people just picked up on a few phrases and thought I was just a stupid troll... which superficially [edit: sorry for that word] I guess it does look like.

I think I'll add some quotes to show what I was responding to and try to make things a bit clearer. [edit: I chose to delete 2 of 3 of my top level comments here instead] I am guessing that the problem is primarily me and my communication, and not other people for not jiving with the contents of my mind, which were basically curated to transgress as an overcoming-bias exercise.