There was a recent discussion on Twitter about whether global development had been deprioritised within EA. This struck a chord with some (*edit*: despite the claim in the Twitter thread being false). So:

What is the priority of global poverty within EA, compared to where it ought to be?

I am going to post some data and some theories. I'd like people in the comments to try to falsify them, so that we get closer to knowing the answer.

- Some people seem to think that global development is lower priority than it should be within EA. Is this view actually widespread?

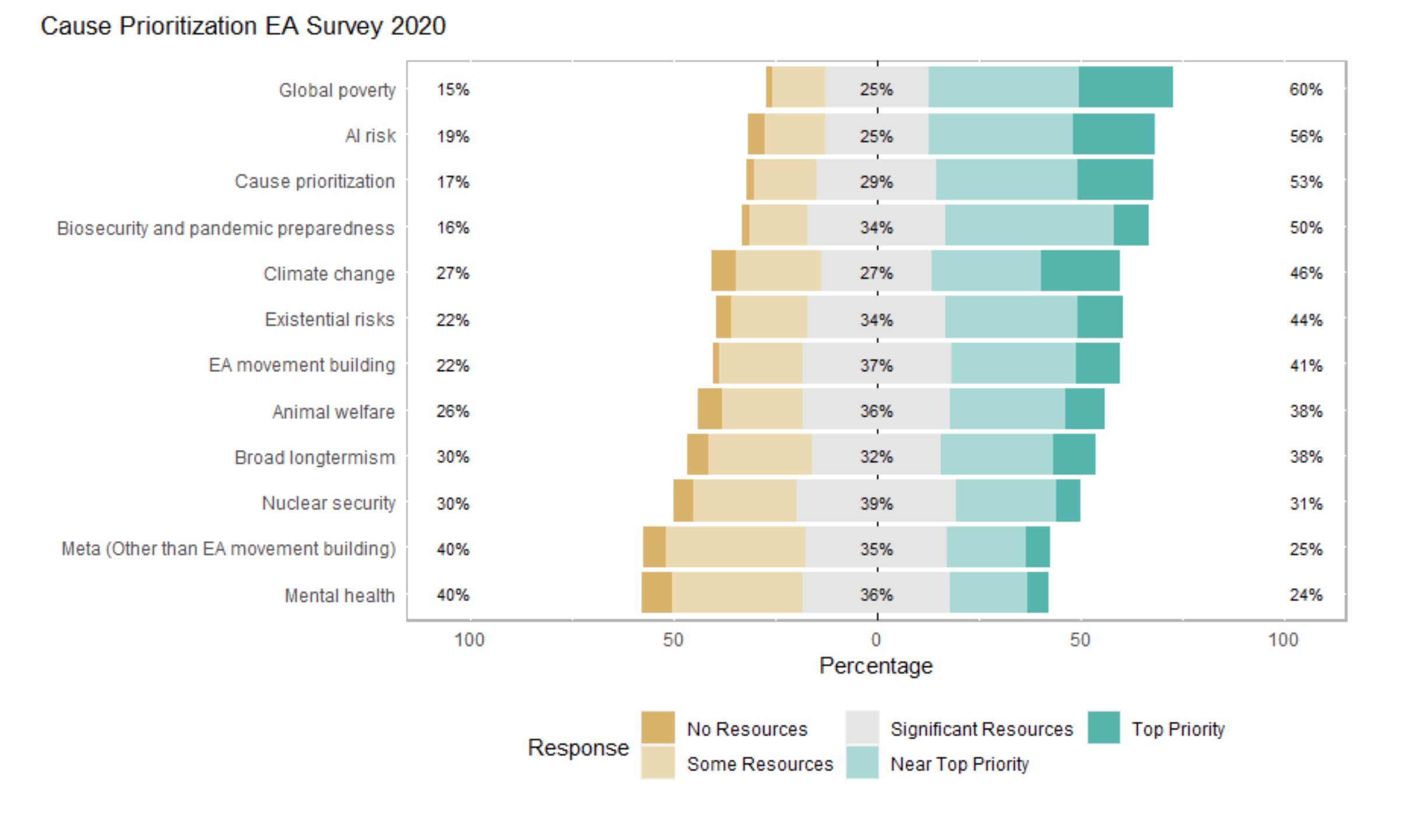

- Global poverty was held in very high esteem in 2020. Without further evidence we should assume it still is. In the 2020 survey, no cause area had a higher average rating (I'm eyeballing this graph) or a higher % of near top + top priority ratings. In 2020, global development was considered the highest priority by EAs in general.

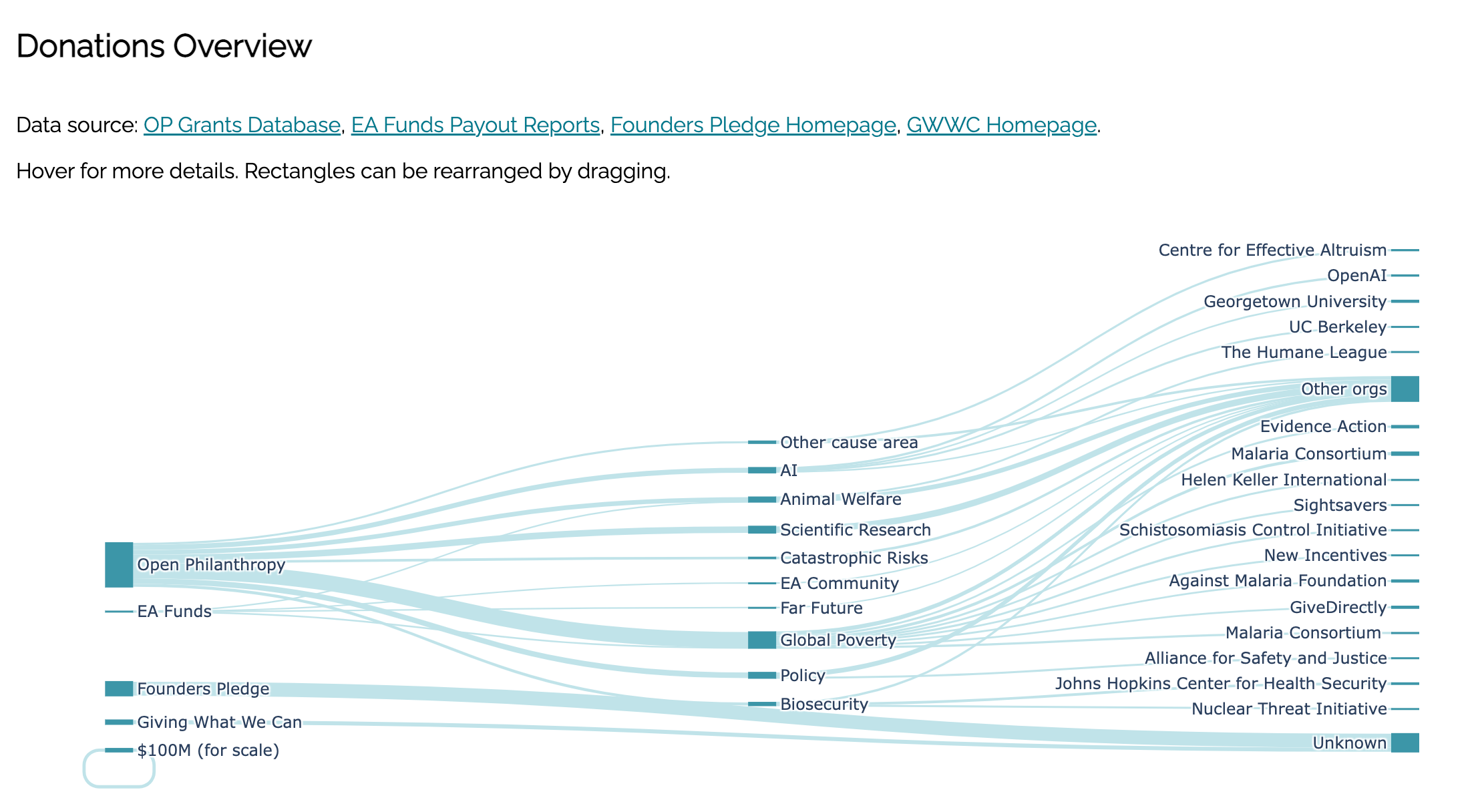

- Global poverty gets the most money by cause area from Open Phil & GWWC according to https://www.effectivealtruismdata.com/

- The FTX Future Fund lists economic growth as one of its areas of interest (https://ftxfuturefund.org/area-of-interest/)

- Theory: Elite EA conversation discusses global poverty less than AI or animal welfare. What is the share of cause areas among forum posts, 80k episodes or EA tweets? I'm sure some of this information is trivial for one of you to find. Is this theory wrong?

- Theory: Global poverty work has ossified around GiveWell and their top charities. Jeff Mason and Yudkowsky both made variations of this point. Yudkowsky's reasoning was that risk-takers hadn't been in global poverty research anyway; it attracted a more conservative kind of person. I don't know how to operationalise a test of this, but maybe one of you can.

- Personally, I think that many people find global poverty uniquely compelling. It's unarguably good. You can test it. It has quick feedback loops (compared to many other cause areas). I think it's good to be in coalition with the most effective area of an altruistic space that vibes with so many people. I like global poverty as a key concern (even though it's not my key concern) because I like good coalitional partners. And longtermist and global development EAs seem to me to be natural allies.

- I can also believe that if we care about the lives of people currently alive in the developing world and have AI timelines of less than 20 years, we shouldn't focus on global development. I'm not an expert here and this view makes me uncomfortable, but conditional on short AI timelines, I can't find fault with it. In terms of QALYs, there may be more risk to the global poor from AI than from malnourishment. If this is the case, EA would move away from being divided by cause areas towards a primary divide of "AI soon" vs "AI later" (though deontologists might argue it's still better to improve people's lives now rather than save them from something that kills all of us). Feel free to suggest flaws in this argument.

- I'm going to seed a few replies in the comments. I know some of you hate it when I do this, but please bear with me.

What do you think? What are the facts about this?

Endnote: I predict 50% that this discussion won't work, resolved by me in two weeks. I think that people don't want to work together to build a sort of vague discussion on the forum. We'll see.

For whatever reason, the EA Forum's culture is to have a very friendly, kind of academic-ish / Wikipedia-ish writing style, even in comments. Personally I think this goes too far. But when you are just spinning off random jokes and personal associations and offensive stuff, it becomes legitimately harder to understand what you mean:

- "Goyim" -- I'm pretty sure this means non-Jews, but I don't know the exact emotional connotations? I guess this is a reference to high Ashkenazi IQ and the idea that there are a lot of Jews in EA? (Is it really the case that EA is overwhelmingly Jewish? I feel like EA is less Jewish, and more British, than LessWrong rationalism. Although that is just a gut impression and I don't think people would understand me if I started making jokes referring to OpenPhil as "the crown" or "parliament" or whatever.) Anyways, what do you mean by this joke -- are you just saying "there are a lot of smart people in EA" and using Jewishness as a synonym for "smart people"? Or are you saying that EA has Jewish values or is in some sense a fundamentally Jewish project? (As an ethnically British/German guy, I disagree and don't see what's so Jewish about EA??)

- Insulting everyone with sub-130 IQ -- are you just trying to tell people how smart you are? Or are you trying to express a worldview where IQ is the dominant factor in whether someone can correctly recognize that longtermism is the best cause area? (As a longtermist myself, I am sad to report that I have a lot of very smart friends and coworkers who have yet to spontaneously convert to longtermism, or even to effective altruism broadly.) Or are you saying that high-IQ people are generally more interested in complex, multi-step plans, so longtermism appeals to their personalities more?

- Calling things cults or religions -- famously, there are lots of different ways that things can be compared to cults or religions. I get the sense that you are insulting rationality, but what exactly are you trying to communicate? That rationality is too centralized around a few charismatic leaders? That its ideas are non-falsifiable?

- For someone interested in recruiting "less bright but still very nice and helpful people", you seem pretty off-putting to that goal? For someone afraid of "persecution and state repression", you seem pretty happy to fire off #nofilter hot takes? These layers of irony / dissonance make it unclear what your message is and where you are coming from.

Besides the above points of confusion, your writing style also pattern-matches onto "rantings of an internet rando who is probably ramming everything into one hedgehog-y worldview". EAs are (as you say) smart people whose time is valuable; they don't have time to engage with lots of people who seem belligerent and ideological on the off chance that a shitpost actually contains valuable insight. Even though that sometimes happens, the prior probability of encountering valuable insight is low.