All Comments

Not quite a draft amnesty thing, but I have been playing with the idea of writing short stories or perhaps even novels that use time travellers as a vehicle for Longtermism. The idea is that time travellers from the far distant future are our descendants, the very people that Longtermism cares about, so their perspective could be something worth exploring in fiction.

Granted, I'm more of a soft Longtermist, and creative writing is notoriously hard to make any kind of living out of, so I'm not sure to what extent this is worth doing/trying/exploring, even as just a side project.

I've been on this forum since 2014 and I -still- feel this way sometimes. Although, I will say Less Wrong is notably worse for this.

It does get better after you make a few comments/posts and notice people aren't jumping all over you. I used to be much more terrified, but now, I'm only kinda apprehensive whenever I post.

Thanks for sharing Vasco! On the point about public support - sadly I don't think that Welfare Footprint's data will be enough to convince people about these kinds of reforms. I think welfare reforms have to almost immediately make intuitive sense for them to receive mainstream public support. E.g. organizations have been doing a great job trying to popularize the term 'Frankenchickens' as a way to make the welfare issues of broiler chicken breeding more salient, but that's still been a really heavy lift and comparing two different kinds of cages seems even harder.

Is there a risk of boiling the ocean here?

The 'community notes everywhere' proposal seems easy enough to build (I've been hacking away at a Chrome extension version of it). I'm not sure it makes sense to wait for personal computing to change fundamentally before trying to attempt this.

I agree that distribution is an issue, which I'm not sure how to solve. One approach might be to have a core group of users onboarded who annotate a specific subset of pages - like the top 20 posts on Hacker News - so that there's some chance of your notes being seen if you'r...

PS: responding to my first comment about how we don't yet have a proper definition for AGI -

We do in fact have workable definitions for what AGI could be (such as AI fulfilling 90% of human tasks to a level equal or superior to a human), even though in practice many people don't bother defining their terms before using them.

Below are some useful descriptive terms that I learned about. In practice, they are worth defining more precisely so that others know exactly what you mean when you use them.

TAI (transformative AI): The ...

Thanks for the reply Vasco.

However, in reality, I expect furnished cages to be built or renewed gradually, not all at once across the EU in a few years.

My understanding is that the battery-enriched transition did happen all at once in the EU. That's also confirmed here on page 11 (and also here p. 28-33). Battery cage companies waited until 2009-2011 (just before the ban on battery cages entered into force). The only exceptions are the cages that broke/burned down and new cages (in the period 1999-2010) but they are limited. But maybe I underestimate the po...

Hi again,

Coming back to this post as I have changed my mind significantly on this topic since my first comment and wanted to share my reasons.

The point is not whether AGI is possible in principle or whether it will eventually be created if science and technology continue making progress — it seems hard to argue otherwise — but that this is not the moment. It's not even close to the moment.

I used to agree with this statement on the basis that:

- I saw AI as basically being a stochastic parrot, and presentations like those of François Chollet (October 2024) con

I would define "extremely well" relative to the extremes of the income distribution rather than the median. However, according to https://statisticalatlas.com/metro-area/California/San-Francisco/Household-Income the "mean of top 5%" income is $563k so $600k would count as "extremely high" by my definition too.

Perhaps that also answers my other question. The reason so many orgs are based in high-cost cities is that there are lots of workers who are willing to eat that cost themselves, taking a big hit to everything I would include in a "quality of lif...

Good interview. On why there is such vast disagreement about AI's potential economic impacts - I do think Ajeya's hypothesis about different base rates has merit, and I also agree that many economists are simply not entertaining the premise where AI actually can beat the top humans at all cognitive tasks.

But I think one grossly underrated reason why economists and AI futurists talk past each other on this point is that most futurists don't understand what "economic growth" and "GDP" actually measure. Presumably, when futurists talk about "economic gr...

Thanks for looking into this, Joren.

You seem to be assuming that furnished cages are overwhelmingly built every 20 years, and were last built just before 2012. If this were the case, the vast majority of furnished cages would be renewed just before 2032 without a ban to end furnished cages before then. So I would agree that a ban would as a result happen in 2032 or 2052. However, in reality, I expect furnished cages to be built or renewed gradually, not all at once across the EU in a few years. So I believe a ban on furnished cages does not have to start in ve...

Thanks, Nick. The background may be funny. I was leaving home to meet my brother and grandpa for dinner. I was going to throw away a cardboard box into the bin, but it was too big to fit in. I was a bit in a rush. So I decided to leave it next to the bin instead of smashing it. This made me wonder about what would be the impact on soil animals of not having put the box into the bin, neglecting effects on my time (which was the real driver of my decision). I realised the people taking care of the garbage would probably do it, and therefore spend a tiny bit ...

Hi Dom! I run the Career Services team at 80,000 hours. We're also in the early stages of trying to build a product in this space! I feel very excited about the vision of scalably helping users find their comparative advantage in the impactful job landscape.

That said, when we've been working on these early prototypes, we've realised that there are a bunch of different visions for how to use AI to achieve this and we don't have capacity to really try every option. So I'd love to see competition in the space to increase the chance that one of us finds ...

Indeed, I updated some model parameters for the simulations in the paper, which resulted in a lower DLES burden calculation. I believe the main difference was the estimated fraction of severe attacks relative to milder attacks.

Given the large error bars, I also included a sensitivity analysis in the paper, i.e., I calculated the DLES burden assuming lower and upper bounds for various key variables. This resulted in lower and upper bounds of 700k and 11m DLES.

I think it's incredibly hard to determine, but probably negative EV, as has been the history (IMO) of many (perhaps even most?) EA AI safety interventions. Well intended but for-the-worse-in-the-end. As a side note, I think the history of EA intervention in AI is a great example of just how hard it is to intentionally positively influence even the mid-term future.

It would only be positive if it actually contributed to some kind of slowdown or policy change, which seems pretty unlikely to me. I don't think an eval result will ever sound an alarm bell, that w...

Thanks Isabel! I still think you could perhaps have some soft recommendations? We are thinking of ditching our red and green plastic containers (from which bits of plastic often flake off) and replacing them with aluminium ones. I figure if the water is not hot that's surely safer? I think consumers can do something here to lower risk. We can't figure out what is contaminated but we can know which is more likely at least?

Yeah, pregnant women eating soil is a thing and some people do, but I honestly don't know how much is ingested here so can't help ...

"I was actually wondering about how many nematode-years were affected by 1 kcal just a few days ago, although not in the context of love". This is part of what makes @Vasco Grilo🔸 unique. Can anyone else on the forum claim to have been thinking about this a few days before this post?

I don't think atproto is really a well designed protocol

- No private records yet, so can't really build anything you'd wanna live in on it.

- Would an agenty person respond to this situation by taking atproto and inventing their own private record extension for it and then waiting for atproto to catch up with them? Maybe. But also:

- The use of dns instead of content-addressing for record names is really ugly, since they're already using content-addressing elsewhere, so using dns is just making it hard to make schema resolution resilient, and it prohibits people w

I personally do think the probability of eventual disempowerment is high. However, you are implying that it is 100%. If it is 99%, or indeed even 99.9999999%, and one thinks the value of the future is significantly higher with humanity (not necessarily biological humans) in control vs AI, then there are still astronomical stakes of humanity remaining in control.
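A minimal sketch of the expected-value reasoning above, under loudly stated assumptions: the probability is the 99.9999999% figure from the comment, while `value_gap` (how much better the future is with humanity in control) is a purely hypothetical placeholder in arbitrary units, not an estimate from anyone in this thread.

```python
from fractions import Fraction

def expected_stake(p_disempowerment, value_gap):
    """Expected value at stake across the worlds where control is not yet lost."""
    return (1 - p_disempowerment) * value_gap

# 99.9999999 %, the figure from the comment, kept exact to avoid float rounding.
p = Fraction(999_999_999, 1_000_000_000)

# HYPOTHETICAL placeholder for the value gap, in arbitrary units.
value_gap = 10**30

stake = expected_stake(p, value_gap)
print(stake)  # 1000000000000000000000
```

Under these made-up numbers the remaining stake is still 10^21 units, which is the shape of the argument: a tiny residual probability multiplied by a very large value gap does not go to zero unless the probability is exactly 100%.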

A relevant question I'm not sure about: for people who talk to politicians about AI risk, how useful are benchmarks? I'm not involved in those conversations so I can't really say. My guess is that politicians are more interested in obvious capabilities (e.g. Claude can write good code now) than they are in benchmark performance.

Know when to sound alarm bells

What is the situation where people coordinate to sound alarm bells over an AI benchmark? I basically don't think people pay attention to benchmarks in the way that matters: a benchmark comes out demonstrating some new potential danger, AI safety people raise concerns about it, and the people with the actual power continue to ignore them.

Thinking of some historical examples:

- Anthropic's findings on alignment faking should have alerted people that AI is too dangerous to keep building, but they didn't.

- Anthropic/OpenAI recently

As the founder of the premier fellowship for content creators in AI Safety (The Frame), I'm happy to share my thoughts, experience and research on this topic. I've spent significant time researching what's missing, both through this research and from the conversations that I've had with other creators and cohort members.

This fund genuinely excites me. I think this remains one of the most underrated and important areas of AI Safety.

I feel this way because media/films/content is usually the first interaction people have with AI Safety (and everything e...

Great post. I started reading and expected to find the explanation totally divorced from why I (a lacto-veg EA) am uninvolved in animal activism. But the high level explanation 'it is unpleasant & often unrewarding moment-to-moment' really resonated with me.

Making a community is really hard. Godspeed & good luck :)

Definitely relate to this. It helps to recognise that the people on this forum are all here by their desire to help, and everyone I've interacted with has been a genuinely awesome person. The worst case scenario is some constructive feedback which helps us grow and develop our ideas; people aren't usually coming from a place of 'gotcha' or condescension. If that's the worst case scenario, sharing your thoughts sounds pretty good!

Very interesting discussion - thank you for this!

"However, even if furnished cages are fully banned in the EU from 2032 on, which I guess is optimistic, there would still have been 20 years (2012 to 2032) with battery cages fully banned, but furnished cages not banned in the EU."

I think this scenario is accurate but only if the period between the (first) ban on battery cages and the (second) ban on enriched cages is exactly 20 years.

In this two-step approach, any ban on enriched cages will require a transition period that is approx. 20 years st...

Thanks for the comment!

On the No True Scotsman concern

Fair point. But I think there's a genuine structural asymmetry here. When liberal democracies commit atrocities, they do so by violating their own safeguards—secrecy, executive overreach, circumventing checks and balances. The CIA's Cold War operations required hiding what they were doing from Congress and the public, precisely because the actions were incompatible with the system's principles. And liberal democracies contain built-in self-correcting mechanisms: free press, independent courts, elections...

I am strongly in favour of Manchester for a conference. Oxford and Cambridge are quite annoying to get to from almost anywhere except London. People who haven't been before might not realise this.

I also worry that this form will mostly reach people in the south of England, since they will be more likely to be signed up to the EA UK newsletter as it has historically mainly catered to London.

I will try to circulate this form with groups and individuals in the North of England, Wales, and Scotland before the deadline.

Also, Oxford and Cambridge are likely going to be more expensive than Manchester, where we could maybe even get a free venue.

Thanks, I think this narrows the disagreement productively! :)

On the reframed Frankfurt School argument: I strongly agree with the claim that modern societies can retain technical rationality while losing wisdom and ethical reflection (cf. the section "differential intellectual regress").

Where I still disagree is with locating this tension inside Enlightenment reason. The decoupling of technological competence from moral reasoning isn't something Enlightenment values produce. It's what happens when Enlightenment values are abandoned while the technology re...

Communism probably also provides intellectual resources that would enable you to condemn most of the many very bad things communists have done, but that doesn't mean that those outcomes aren't relevant to assessing how good an idea communism is in practice.

But much less clearly so than classical liberalism. Communism lacks any clear rejection of violence, provides no robust mechanism for resolving disagreements or conflicts, and doesn't advocate for universal individual rights. Lenin—one of its most influential theorists—explicitly advocated for a "d...

that’s nearly 5 million person-days of extreme suffering (≥9/10 pain) annually

I notice you recently reported a slightly lower figure of 3 million DLES here, and in the accompanying research paper:

The Days Lived with Extreme Suffering (DLES) would then be 8,569 years x 365 days/year = 3,127,855

The difference doesn't matter much in itself, I guess: It's the same order of magnitude, and error bars are probably larger than 2 million DLES anyway. But I'm curious what caused this change in your estimate.
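As a quick check of the quoted arithmetic (a sketch using only the two numbers in the quote and a 365-day year): multiplying the rounded 8,569 years gives a total slightly below 3,127,855. One possible explanation, offered here as an assumption rather than something the paper is known to state, is that the years figure was rounded before being quoted.

```python
# Numbers taken from the quote above; nothing else is assumed.
YEARS_QUOTED = 8_569        # years, as printed in the quote
DLES_QUOTED = 3_127_855     # total days, as printed in the quote
DAYS_PER_YEAR = 365

# Total implied by the rounded years figure.
dles_from_rounded_years = YEARS_QUOTED * DAYS_PER_YEAR

# Years implied by the quoted total.
implied_years = DLES_QUOTED / DAYS_PER_YEAR

print(dles_from_rounded_years)    # 3127685
print(round(implied_years, 2))    # 8569.47
```

Either way, the gap of 170 days is negligible next to the roughly 2 million DLES difference between the two estimates.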

Hi Nick,

Thanks for sharing this. Really appreciate the solution-focused lens on a system that clearly needs rethinking. I recognize and mainly agree with the points you raise.

For context, I’ve spent about six years working in an international NGO, primarily living and working in Sub-Saharan Africa. I’m currently with a small, EA-aligned organization that already seems to implement many of the practices you highlight.

One possible addition to your list (though I realize it may be less immediately “doable”) is a deeper engagement with decolonization in ...

Executive summary: The author argues that it is worryingly likely that reward-seeking AIs will respond to “distant” incentives such as retroactive rewards or anthropic capture, which would undermine developer control and create new takeover risks, and that existing mitigation strategies appear unreliable.

Key points:

- The author defines “remotely-influenceable reward-seekers” as AIs that respond not only to local reward signals during training and deployment but also to distant incentives like retroactive rewards or being simulated in high-fidelity “anthropic

Not really. Rule consequentialism as an ethical theory has various (implausible) implications about which acts are objectively right or wrong, that can be assessed independently of the question of whether it would be a good thing for (some, many, or all) people to use a rule-based decision procedure.

See also the collective epistemics discussion, if you haven't already, which I suspect might also be of interest to you!

But after the first 50 customers, I run out of jackfruit, and the rest of the customers don't get to try the tacos. How do you think those customers would feel about my restaurant?

Quite possibly they infer this must be the most exciting new product, feel FOMO, and arrive even earlier the next day? Restaurant behaviour is weird - see for example how long lines are seen as a sign of success rather than mispricing.

Could you explain the community reuse thing again? I don't understand the tuples, but is the idea that query responses (which yield something like document sets?) can be cached with some identifiers? This helps future users by...? (Thinking: it can serve as a tag to a reproducible/amendable/updateable query, it can save someone running the exact same query again, ...)

That looks ambitious and awesome! I haven't looked deeply, but a few quick qs

- what do the costs look like to get embeddings for all those docs? How are you making choices about which embedding models to use and things like that?

- do you have qualitative (or quantitative?) sense of how well the semantic joins work for queries like the examples on the homepage?

- what's your sense of how this compares to tools like elicit?

Thanks for posting! Your axiom feels very familiar to me, but I am not sure if I have seen anyone seriously present it as the only required moral axiom. It would be surprising, though, if no one has done it before. Anyway, there are a few problems that immediately catch my attention.

Firstly, you don't seem to give any arguments to explain why we should accept this core principle. I take it that you base it on intuition, which is something I dislike, but that is beside the point. After all, I don't think that the consequences of such a worldview are very...

I found this article quite interesting and well written, thank you for the contribution.

Here are a couple of considerations I have not seen brought up:

1. You write: "Supplements can be expensive and difficult to obtain, especially EPA/DHA and certain carninutrients."

I agree that algae omega 3 supplements are quite expensive, in the range of 15-20 eur/month or more. Reading this I was hoping for a cheaper way to supplement that would still be ethical. However, sardines and anchovies (at least where I live) are still several times more expensive than just supplem...

I’ve also been thinking about incentives at the senior end as well, how do these orgs decide to pay a small number of senior staff extremely well, like for example I’ve seen figures that Eliezer Yudkowsky is compensated $600k at MIRI alone (*just used as an example, don’t have anything against him personally),

$600k is not "extremely well" for the Bay Area, given the high taxes and ridiculous cost of living there. But the obvious next question is: why are so many EA organisations located in extremely-high-cost cities?

I don't think it's an EA-specific proble...

Hi, happy to speak to the methodological points here.

Thanks for sharing the link and suggestion. We agree that understanding how much we can trust the data is crucial for interpreting our results, so thank you for engaging with this critically.

We didn’t measure individual reaction times for questions so using RT modelling isn’t an option. Modelling carelessness in other ways (e.g., modelling it as a latent tendency) would be fascinating, but I don’t endorse the assumptions we’d need to model carelessness as a latent variable. (I like how Rohrer ...

I think there is something to this, but the US didn't just "prop up" Suharto in the sense of having normal relations of trade and mutual favours even though he did bad things. (That indeed may well be the right attitude to many bad governments, and one that many leftists might demand the US take towards bad left-wing governments, yes.) They helped install him, a process which was incredibly bloody and violent, even apart from the long-term effects of his rule: https://en.wikipedia.org/wiki/Indonesian_mass_killings_of_1965%E2%80%9366

Remember also that the same ...

Communism is a "reason-based" ideology, at least originally, in that it sees itself as secular and scientific and dispassionate and based on hard economics, rather than tradition or God. I mean, yes, Marxists tend to be more keen on evoking sociological explanations for people's beliefs than liberals are, but even Marxists usually believe social science is possible and even liberals admit people's beliefs are distorted by bias all the time, so the difference is one of emphasis rather than fundamental commitment I think.

This isn't a defence of communism particularly. The mere fact that people claim that something is the output of reason and science doesn't mean it actually is. That goes for liberalism too.

"Classical liberalism provides the intellectual resources to condemn the Jakarta killings. "

Communism probably also provides intellectual resources that would enable you to condemn most of the many very bad things communists have done, but that doesn't mean that those outcomes aren't relevant to assessing how good an idea communism is in practice.

Not that you said otherwise, and I am a liberal, not a communist. But I do think sometimes liberals can be a bit too quick to conclude that all crimes of liberal regimes having nothing distinctive...

I'm typically a non-interventionist when it comes to foreign policy (probably fairly extreme by EA standards; I support US withdrawal from NATO). But it seems to me that the evaluation of a given foreign policy depends largely on what baseline you use for comparison purposes. If North Korea is used as the baseline for what communism can do to a country, modern Indonesia seems preferable by comparison.

Critics of US foreign policy typically use a high implicit baseline which allows them to blame the US no matter what the US does.

Consider a country with a b...

Seems to me that a lot of EA experience could actually be a negative, if it worsens organizational groupthink.

Number one is, we hire for capability and learning ability before we hire for expertise. We actually would rather hire smart, curious people than people who are deep, deep experts in one area or another... somebody who's been doing the same thing forever will typically just replicate what they've seen before. You need a mix, but we skew heavily towards people who are kind of open to new ideas and creative.

"We need more people working on these neglected issues" doesn't necessarily mean that orgs have the management capacity to absorb more people.

Imagine I'm running a vegan restaurant. I've started serving my customers jackfruit tacos. They really like the tacos. So I run a giant advertising campaign all over the city telling people about my tacos. Come the weekend, my restaurant is flooded with customers. But after the first 50 customers, I run out of jackfruit, and the rest of the customers don't get to try the tacos. How do you think those custome...

Thanks for the relevant points, James.

Infrastructure lock-in

Changing from conventional to furnished cages, and from these to cage-free aviaries is more costly than directly changing from conventional cages to cage-free aviaries. However, the direct change requires a greater initial investment, and has a greater potential to decrease revenue due to increasing the cost of eggs 4.02 (= 1/0.249) times as much as the change from conventional to furnished cages. So I think having furnished cages as an intermediate step may at least in some cases derisk the overa...
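The 4.02 figure above can be reproduced directly. In this sketch, 0.249 is taken from the comment and is read (as an assumption about its meaning) as the egg-cost increase of the conventional-to-furnished change relative to that of the conventional-to-cage-free change.

```python
# 0.249 comes from the comment; its interpretation here is an assumption.
furnished_vs_cage_free_cost_increase = 0.249

# How many times larger the cost increase of the direct change is.
direct_change_multiplier = 1 / furnished_vs_cage_free_cost_increase

print(round(direct_change_multiplier, 2))  # 4.02
```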

As I currently live in an urban area in the Netherlands, I made the comment with the consumer walking or biking in mind. While ordering my groceries would be more convenient for me, I specifically do not because of the higher environmental costs. My using the app, and so being limited to online ordering, would be a worse environmental outcome than my continuing to walk and review products myself manually. But I am, to your point, likely not in the majority.

There are two main reasons I ask: first, because I don't know that the environmental costs of the pro...

Frankly, posting on this forum intimidates me the way reading any critical essay by Chomsky does. I do want to be intellectually responsible, but I don't much like the idea of being on either end, frankly, of an intellectual firing squad.

In some ways, this comment is in the spirit of Draft Amnesty Week.

Thanks so much for reading, Nick.

It really is nearly impossible as an individual consumer to figure out which of the many products we interact with everyday are safe, and which might be contaminated, unfortunately... so hoping we can collectively make progress on these things at a systems level soon!

On a related topic, I'm curious whether you see any geophagia (soil consumption) among pregnant women, or other people, in Northern Uganda? It's fairly common in Kenya and Malawi and we've unfortunately seen that the soils (which are often compacted, so they look like small stones) frequently contain lead levels well above what you'd want to see in something that's being directly consumed.

-- Isabel Arjmand (cofounder)

I completely agree! The fact that people don't understand this is probably one of the main reasons so many reject utilitarianism as too demanding. They don't get that maximizing utility is an ideal, not a minimum requirement for being a good person. I have usually referred to ideals simply as directions, but I really like how you compare them to the North Star. The idea is the same, but your wording is a bit more poetic.

TARA Round 1, 2026 — Last call: 9 Spots Remaining

We've accepted ~75 participants across 6 APAC cities for TARA's first round this year. Applications were meant to close in January, but we have room for 9 more people in select cities.

Open cities: Sydney, Melbourne, Brisbane, Manila, Tokyo & Singapore

If you're interested: → Apply by March 1 (AOE) → Attend the March 7 icebreaker → Week 1 begins March 14

TARA is a 14-week, part-time technical AI safety program delivering the ARENA curriculum through weekl...

I don't think this is a good idea:

- Infrastructure lock-in. Furnished cage systems last 10-20 years. Once companies adopt them, they will likely not want to go cage-free until the end of this lifespan, which isn't great. This creates lock-in against further reform, not momentum toward it.

- The public won't be excited/that supportive of this: Corporate campaigns work best when there is public consensus and pressure. "Better cages" likely will not be something many people want to get behind. So you might lose your primary campaign tool and also the potential to br

Thank you for the post, which was an interesting read.

But these atrocities were largely driven by fanatical anti-communism (especially on the US side) that divided the world into an existential struggle between good and evil... They represent failures of liberal democracies to live up to their own principles, not evidence that those principles caused the violence.

If liberalism simply has temporary failings - is this not something of a no true Scotsman? Marxists might (and often do) say similarly that the examples you cite were the result of the leade...

One more side note: I am actually glad that you, as one of the authors of this post, are aware of the existing body of scholarship and the broader historical reality of the world's politics. My concern was whether you were trying to reinvent the wheel when a substantial body of work has already been produced on this topic. I apologize if I have been overly provocative.

The second generation of the Frankfurt School, particularly Jürgen Habermas, also addresses the totalizing tendencies in the first generation’s argument, though that is a separate discussion.

Hi there :)

I think your response raises important criticisms, but some of the disagreement comes from talking past what the theoretical argument is actually trying to do. I may also have oversimplified what I intended to convey for the sake of clarity, and lost its nuances in the process. It may help to narrow the disagreement rather than treat it as a choice between Enlightenment reason vs. its critics. (PS: I don't think we're disagreeing much here.)

On Nazism and Dialectics of Enlightenment

You are right that the movement was openly anti-liberal and h...

Thanks for clarifying. Still, this suggests that the Chinese participants were on average much less conscientious about answering truthfully/carefully than the US/UK ones, which implies that even the filtered samples may still be relatively more noisy.

Perplexity w/ GPT-5.2 Thinking when I asked "Are there standard methods for dealing with this in surveying/statistics?", among other ideas (sorry I don't know how good the answer actually is):

...Model carelessness (don’t only drop)

If you’re worried that “filtered China” still contains more residual noise, a more

addressing large, persistent gaps in care or prevention that lead to avoidable suffering or poor life outcomes

I'm so grateful you're looking into this! I think there's a lot of low-hanging fruit in this space (I'll soon share other ideas I have for folks to look into). I'll be following with excitement. :)

This is more of a note for myself that I felt might resonate/help some other folks here...

For Better Thinking, Consider Doing Less

I am, like I believe many EAs are, a kind of obsessive, A-type, "high-achieving" person with 27 projects and 18 lines of thought on the go. My default position is usually "work very very hard to solve the problem."

And yet, some of my best, clearest thinking consistently comes when I back off and allow my brain far more space and downtime than feels comfortable, and I am yet again being reminded of that over the past couple of (d...

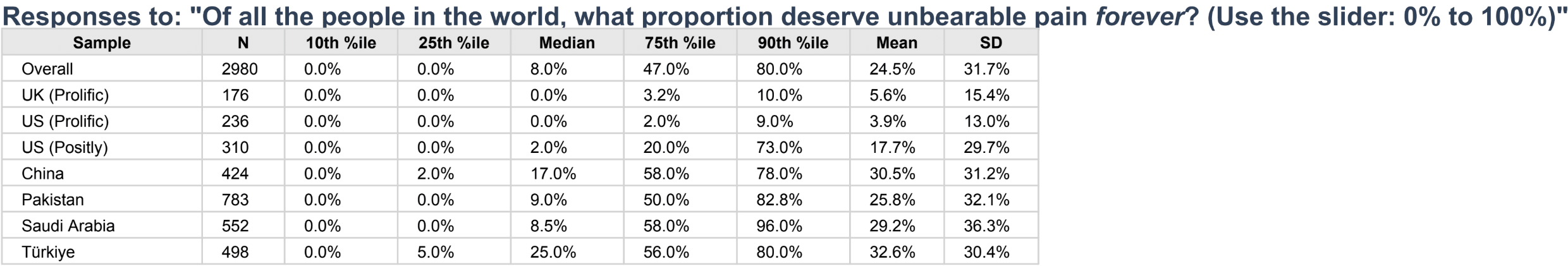

Thanks for flagging this, but you're looking at an unfiltered sample (N=2,980) which includes almost all participants regardless of data quality. All statistics in the main text use a filtered sample (N=1,084), which excludes participants who failed two attention checks, reported not answering honestly, gave invalid birth years, or strongly violated additivity (see the relevant section in the main post, including footnotes 94 and 95 for more details). The unfiltered numbers should be ignored as they clearly contain a lot of inattentive participants. (We wi...

And I'll add that RL training (and to a lesser degree inference scaling) is limited to a subset of capabilities (those with verifiable rewards and that the AI industry cares enough about to run lots of training on). So progress on benchmarks has been less representative of how good models are at things that aren't being benchmarked than it was in the non-reasoning-model era. So I think the problems of the new era are somewhat bigger than the effects that show up in benchmarks.

That's a great question. I'd expect a bit of slowdown this year, though not necessarily much. e.g. I think there is a 10x or so possible for RL before RL-training-compute reaches the size of pre-training compute, and then we know they have enough to 10x again beyond that (since GPT-4.5 was already 10x more), so there are some gains still in the pipe there. And I wouldn't be surprised if METR timelines keep going up in part due to increased inference spend (i.e. my points about inference scaling not being that good are to do with costs exploding, so if a co...

I think the post makes a useful snapshot point: for some public “reasoning model vs base” comparisons, a large fraction of measured uplift shows up as increased inference-time deliberation.

But I don’t think that supports the stronger, extrapolative claim that “RL is extremely inefficient for frontier models” or that “recent gains are mostly inference” in a way that should generalize.

Two missing gears:

- Inference-time compute is not just a permanent tax: it can become training signal via an inference → data → filtering/verification → distillation loop. So “mo

I think that because so many of us here are so immersed/involved in AI safety discussions, it's easy to forget that most people really aren't (at least not to anything close to the same depth).

And I definitely agree with your overall emphasis towards political action.

For me though, I might put the title as "we're not getting through (quickly enough)" because I do think there are some notable examples of politicians getting far more switched on to this stuff.

I appreciate your thoughtful comment, but I think several of the claims here don't hold up under scrutiny.

Nazism was not a product of Enlightenment rationality

The Frankfurt School's thesis in Dialectic of Enlightenment, that the Holocaust was somehow a product of Enlightenment reason, is one of the most influential yet poorly supported claims in 20th-century social theory. The problem is simply that Nazism was explicitly anti-Enlightenment and anti-rational.

The Nazis rejected virtually every core Enlightenment principle. They replaced reason with Blut und ...

Thanks, Joseph. The post is a bit in the spirit of Cunningham's Law ("The best way to get the right answer on the Internet is not to ask a question; it's to post the wrong answer"), although I do ask a question in the title.

Hi Dom

I really like your idea. I'm also an outsider looking in and, until now, have not felt equipped to comment. Your post is different!

I'm 30 years into my career. I've worked in several roles and successfully scaled up a couple of small businesses before selling them. I've made plenty of mistakes along the way but learned a lot.

I have taken different career steps during the past 5 years and have looked at several roles in various EA orgs because I would love to make the next 10 years of my career really count for something much more than anything I've don...

I don’t think the inference is “Indonesia happened, therefore one must adopt Marxism,” or even that Marxist literature is uniquely authoritative. My point is narrower.

If the concern is ideological fanaticism, then understanding the internal logic of influential ideologies seems like a reasonable starting point. Reading historians who describe outcomes is valuable, but it is different from engaging with the conceptual frameworks that shaped how people understood the world in the first place. Marx matters here not because he must be agreed with, but because ...

From where I stand, when violence and ideological destruction happen outside the developed world, it often becomes framed as a regrettable but acceptable cost of protecting the “right” ideology. This begins to resemble a form of hypocritical moral hierarchy.

I agree that this is a thing that happens and it must be frustrating when you are from such parts of the world. But note that I didn't do this in my comment.

Also, my impression is that many Westerners these days are not particularly attached to defending the actions of their countries in the past. ...

If I'm interpreting this correctly, 25% of people in China think that at least 58% of all people in the world deserve eternal unbearable pain (with similar results in 3 other countries). This is so crazy that I think there must be another explanation, e.g., results got mixed up, or a lot of people weren't paying attention and just answered randomly.

Did you know that after the communist purge, Western powers helped install one of the most corrupt regimes on earth under Suharto in Indonesia, a regime that lasted for 32 years? That period (until 1998) fundamentally shaped why Indonesia is still struggling to build functioning democratic institutions today. Institutional weakness, oligarchic power, and entrenched corruption remain structural problems. Now Suharto’s former son-in-law, Prabowo Subianto, is president. The consequences of the purge are still lived by 280 million people today (Indonesia

Thanks for this post, I had no idea posts or comments couldn't be deleted after a certain time. This seems like a pretty major privacy flaw, and as someone who cares strongly about digital privacy it certainly makes me more hesitant to post or comment in the future.

As far as I know though, it's still possible to change the username and email attached to your activity? This could be a way of anonymizing yourself, although of course this isn't perfect and doesn't let you take action on specific posts or comments.

It also seems a little ironic that a community...

"But I think maybe the cruxiest bits are (a) I think export controls seem great in Plan B/C worlds, which seem much likelier than Plan A worlds, and (b) I think unilaterally easing export controls is unlikely to substantially affect the likelihood of Plan A happening (all else equal). It seems like you disagree with both, or at least with (b)?"

Yep, this is pretty close to my views. I do disagree with (b), since I am afraid that controls might poison the well for future Plan A negotiations. As for (a), I don’t get how controls help with Plan C, and I don’t ...

You might be interested in looking into the work ControlAI is doing in the UK. They'd probably disagree with your point that the message is "not getting through", given they claim that 100+ Parliamentarians support their campaign. There have also been various debates in Parliament on AI safety, in particular in the Lords, where lots of people spoke in support (e.g. this one).

Definitely agree this is important, but I also think we need to reframe the narrative of 'soulless alt protein companies vs. hard-working farmers' to 'scrappy underdogs vs. huge animal ag corporations that are more like Amazon or Ford than the kinds of cute farms people imagine'. I also wonder how major job displacement from AI will play into this - maybe there will be less public concern about animal farmers losing their jobs if this is part of a general pattern that affects almost all industries? Not at all confident in that though.

A bit bold to unqualifiedly recommend a list of thinkers of which ~half were Marxists, on the topic of ideological fanaticism causing great harms.

Obviously that doesn't mean it's all bad. I admit I don't know much about most of these thinkers, and I found your comment interesting and informative. I think you make an important point that reason/liberty-branded ideologies can go off the rails too.

Anti-communist purges have this element of "The Great Evil" that you are fighting, like witch hunts but secular, and that can cause people to become fanatical in th...

exopriors.com/scry is something I've been building very much in this direction.

I've done the fairly novel, tricky thing of hardening a SQL+vector database to give the public and their research agents arbitrary read-only access to lots of important content. I've built robust ingestion pipelines where I can point my coding agents at any source and, increasingly, they can fully metabolize it dress-right-dress into the database without my oversight. All of arXiv, EA Forum, thousands of substacks and comments, HackerNews, soon all of reddit pre-2025.5, soon all of ...

Aaron, this is a great idea. I strongly agree that bringing research closer to commercial farms is essential if we want findings that actually reflect what happens in practice. Much of what is produced in research settings (even those that try to mimic commercial practice) suffers from what we call the 'healthy farm effect'. Commercial data capture the full messiness of real production, which is why they are so valuable.

External validity is not the only thing missing in welfare science. Most of what we know about animal welfare at commercial scale com...

A much better order of operations would be to 1) try to negotiate with China to establish an international regulatory framework (plan A), with export controls and other measures being imposed as something that is explicitly linked to China not agreeing to that framework, in the same way sanctions on Russia are imposed explicitly because of its aggression against Ukraine, and 2) only if they refuse, try to crush them (plan B).

Maybe if you are President of the United States you can first try the one thing, and then the other. But from the perspective of an individu...

Great post, Aaron. I completely agree with your framing of why the lab-to-farm leap feels overdue. Most published welfare research is tied up in universities and controlled settings, which are not only expensive and slow but also often miss how animals actually experience their environments on commercial operations.

I love your emphasis on starting with engaged farmers — that is a low-friction entry point, especially because so much welfare-relevant data is already being collected in everyday farm management but never shared or analyzed. If even a h...

Great post. Two things come to mind:

- One way to just be able to do more stuff is to take stimulants. I think there are cases where being on them can dent your intelligence in some subtle ways, but broadly they can drastically increase your ability to do more, work through when you're fatigued, etc. Maybe it's still a sufficiently edgy position that you didn't mention it here, but the absence was interesting. People at college are all taking modafinil for a reason.

- I worry that some incredibly ambitious people in the EA world have gone on to pursue paths