This post was updated on February 9 to reflect new dates, and the post date was changed accordingly.

CEA will be running and supporting conferences for the EA community all over the world in 2022.

We are currently organizing the following events. All dates are provisional and may change in response to local COVID-19 restrictions.

EA Global conferences

- EA Global: London (15 - 17 April)[1]

- EA Global: San Francisco (29 - 31 July)

- EA Global: Washington, D.C. (23 - 25 September)

EAGx conferences

- EAGx Oxford (26 - 27 March)

- EAGx Boston (1 - 3 April)

- EAGx Prague (13 - 15 May)

- EAGx Australia (8 - 10 July)

- EAGx Singapore (2 - 4 September)

- EAGx Berlin (September/October)

Applications for the EA Global: London, EAGx Oxford, and EAGx Boston conferences are open! You can find the application here.

If you'd like to add EA events like these directly to your Google Calendar, use this link.

Some notes on these conferences:

- EA Global conferences are for people who are knowledgeable about the core ideas of effective altruism and are taking significant actions (e.g. work or study) based on these ideas.

- To attend an EAGx conference, you should at least be familiar with the core ideas of effective altruism.

- Please apply to every conference you wish to attend once applications open. We would rather receive too many applications for some conferences and recommend that some applicants attend a different one than miss out on potential applicants.

- Applicants can request financial aid to cover the costs of travel, accommodation and tickets.

- Find more info on our website.

As always, please feel free to email hello@eaglobal.org with any questions, or comment below.

[1] Options for the timing of this conference were extremely constrained, and we realize that the date is not ideal. We chose the date after polling a sample of potential attendees and seeing which of the available dates might work for the most people, but we understand that the weekend we chose will not be possible for everyone. If you would have liked to attend EA Global: London 2022 but can’t because of the dates (e.g. for religious reasons), please feel free to let us know.

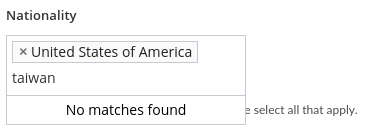

It would be useful if the application website told you which conferences you've already applied to, so you can avoid submitting the same application twice or worrying about whether you've forgotten to submit it.

You should get an email with your submitted content for each application to a conference.

Personally, I find this “receipt” with my content super useful when applying to successive conferences, at least until they figure out I’m an imposter.

(Searching your inbox for it can be slightly confusing; you might need to search for hello@eaglobal.org.)