A number of recent proposals have detailed EA reforms. I have generally been unimpressed with these: they feel highly reactive and too tied to attractive-sounding concepts (democratic, transparent, accountable) without well-thought-through mechanisms. I will try to expand on my thoughts about these at a later time.

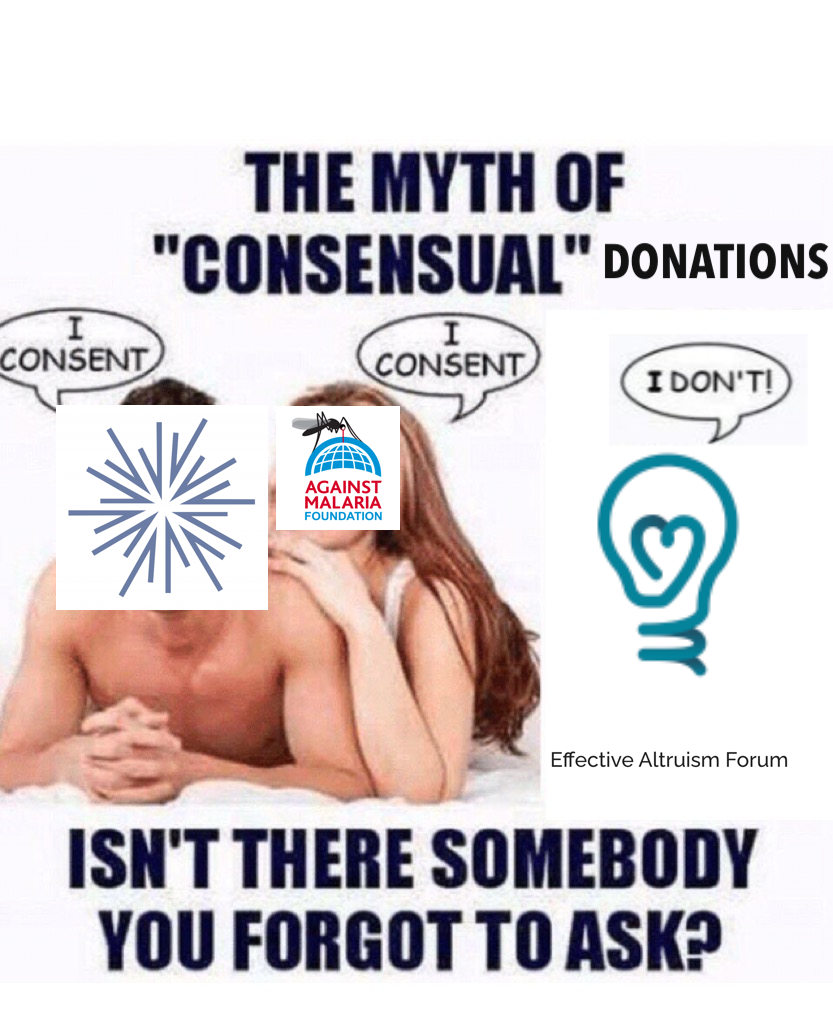

Today I focus on one element that seems at best confused and at worst highly destructive: large-scale, democratic control over EA funds.

This has been mentioned in a few proposals. It originated (to my knowledge) in Carla Zoe Cremer's Structural Reforms proposal:

- Within 5 years: EA funding decisions are made collectively

  - First set up experiments for a safe cause area with small funding pots that are distributed according to different collective decision-making mechanisms

(Note this is classified as a 'List A' proposal - per Cremer: "ideas I’m pretty sure about and thus believe we should now hire someone full time to work out different implementation options and implement one of them")

It was also reiterated in the recent mega-proposal, Doing EA Better:

Within 5 years, EA funding decisions should be made collectively

Furthermore (from the same post):

Donors should commit a large proportion of their wealth to EA bodies or trusts controlled by EA bodies to provide EA with financial stability and as a costly signal of their support for EA ideas

And:

The big funding bodies (OpenPhil, EA Funds, etc.) should be disaggregated into smaller independent funding bodies within 3 years

(See also the Deciding better together section from the same post)

How would this happen?

One could try to personally convince Dustin Moskovitz that he should turn OpenPhil funds over to an EA Community panel, arguing that it would help OpenPhil distribute its funds better.

I suspect this would fail, and proponents would feel very frustrated.

But, as with other discourse, these proposals assume that because a foundation called Open Philanthropy is interested in the "EA Community", the "EA Community" has/deserves/should be entitled to a say in how the foundation spends its money. Yet the fact that someone is interested in listening to the advice of some members of a group on some issues does not mean they have to completely surrender to the broader group on all questions. They may be interested in community input on their funding (via regranting, for example), or in investing in the community, but that does not imply they would want the bulk of their donations governed by the EA community.

(Also, I'm using scare quotes here because I am very confused about who these proposals mean when they say EA community. Is it a matter of having read certain books, attending EAGs, hanging around for a certain amount of time, working at an org, donating a set amount of money, or being in the right Slacks? These details seem incredibly important when this is the set of people given major control of funding in lieu of current expert funders.)

So at a basic level, the assumption that EA has some innate claim to the money of its donors is incorrect. (I understand that the claim is also normative.) But for now, the money possessed by Moskovitz and Tuna, OP, and Good Ventures is not the property of the EA community. So what, then, to do?

Can you demand ten billion dollars?

Say you can't convince Moskovitz and OpenPhil leadership to turn over their funds to community deliberation.

You could try to create a cartel of EA organizations to refuse OpenPhil donations. This seems likely to fail: it would involve asking tens, perhaps hundreds, of people to risk their livelihoods. It would also be an incredibly poor way of managing the relationship between the community and its most generous funder, and it would very likely decrease total donations to EA organizations and causes.

Most obviously, it could make OpenPhil less interested in funding the EA community. But this sort of behavior would also make EA incredibly unattractive to new donors: who would want to try to help a community of people trying to do good and then be threatened by them? Even for potential EAs pursuing Earn to Give, this seems highly demotivating.

Let's say a new donor did begin funding EA organizations but didn't want to abide by these rules. Perhaps they were just interested in donating to effective bio orgs. Would the community ask that all EA-associated organizations turn down their money? Obviously they shouldn't: insofar as the donor is not otherwise unethical, organizations should accept the money and use it to do as much good as possible. Rejecting a potential donor simply because they do not want to participate in a highly unusual funding system seems clearly wrongheaded.

One last note: the Doing EA Better post emphasizes:

Donors should commit a large proportion of their wealth to EA bodies or trusts . . . [as in part a] costly signal of their support for EA ideas

Why would we want to raise the cost of supporting EA ideas? I understand some people have interpreted SBF and other donors as using EA for 'cover', but this has always been pretty specious precisely because EA work is controversial. You can always donate to children's hospitals for good cover. Ideally, supporting EA ideas and causes should be as cheap as possible (barring obvious ethical breaches) so that more money is funneled to highly important and underserved causes.

The Important Point

I believe Holden Karnofsky and Alexander Berger, as well as the staff of OpenPhil, are much better at making funding decisions than almost any other EA, let alone a "lottery selected" group of EAs deliberating. This seems obvious: both are immersed more deeply in the important questions of EA than almost anyone. Indeed, many EAs are primarily informed by Cold Takes and OpenPhil investigations.

Why democratic decision making would be better has gone largely unargued. To the extent it has been argued, "conflicts of interest" and "insularity" seem like marginal problems compared to the value of having a deep understanding of the most important questions for the future and for global health and wellbeing.

But even if we weren't lucky enough to have Holden and Alex, it would still seem to be bad strategic practice to demand community control over donor resources. It would:

- Endanger current donor relationships

- Make EA unattractive to potential new donors

- Likely lower the average quality of grantmaking

- Likely yield nebulous benefits

- And potentially drive some of the same problems it purports to solve, like community group-think

(Worth pointing out an irony here: part of the motivation for these proposals is for grant making to escape the insularity of EA, but Alex and Holden have existed fairly separately from the "EA Community" throughout their careers, given that they started GiveWell independently of Oxford EA.)

If you are going to make these proposals, please consider:

- Who you are actually asking to change their behavior?

- What actions you would be willing to take if they did not change their behavior?

A More Reasonable Proposal

Doing EA Better specifically mentions democratizing the EA Funds program within Effective Ventures. This seems like a reasonable compromise. As an Effective Ventures program, that fund is much more of an EA community project than a third-party foundation is. More transparency and more community input in this process seem perfectly reasonable. To demand that donors actually turn over a large share of their funds to EA is a much more aggressive proposal.

If folks don't mind, a brief word from our sponsors...

I saw Cremer's post and seriously considered this proposal. Unfortunately I came to the conclusion that the parenthetical point about who comprises the "EA community" is, as far as I can tell, a complete non-starter.

My co-founder from Asana, Justin Rosenstein, left a few years ago to start oneproject.org, and that group came to believe sortition (lottery-based democracy) was the best form of governance. So I came to him with the question of how you might define the electorate in the case of a group like EA. He suggests it's effectively not possible to do well other than in the case of geographic fencing (i.e. where people have invested in living) or by alternatively using the entire world population.

I have not myself come up with a non-geographic strategy that doesn't seem highly vulnerable to corrupt intent or vote brigading. Given that the stakes are the ability to control large sums of money, having people stake some of their own money (i.e., become "dues-paying" members of some kind) does not seem like a strong enough mitigation. For example, a hostile takeover almost happened to the Sierra Club in SF in 2015 (albeit fo...

It is 2AM in my timezone, and come morning I may regret writing this. By way of introduction, let me say that I dispositionally skew towards the negative, and yet I do think that OP is among the best, if not the best, foundation in its weight class. So this comment generally doesn't compare OP against the rest but against the ideal.

One way which you could allow for somewhat democratic participation is through futarchy, i.e., using prediction markets for decision-making. This isn't vulnerable to brigading because it requires putting proportionally more money in the more influence you want to have, but at the same time this makes it less democratic.

More realistically, some proposals in that broad direction that I think could actually be implemented are:

- allowing people to bet against particular OpenPhilanthropy grants producing successful outcomes.

- allowing people to bet against OP's strategic decisions (e.g., against worldview diversification)

- I'd love to see bets between OP and other organizations about whose funding is more effective, e.g., I'd love to see a bet between you and Jaan Tallinn on whose approach is better, where the winner gets some large amount (e.g., $20...

Strongly disagree about betting and prediction markets being useful for this; strongly agree about there being a spectrum here, where at different points the question "how do we decide who's an EA" is less critical and can be experimented with.

One point on the spectrum could be, for example, that the organisation is mostly democratically run but the board still has veto power (over all decisions, or ones above some sum of money, or something).

Hi Dustin :)

FWIW I also don't particularly understand the normative appeal of democratizing funding within the EA community. It seems to me like the common normative basis for democracy would tend to argue for democratizing control of resources in a much broader way, rather than within the self-selected EA community. I think epistemic/efficiency arguments for empowering more decision-makers within EA are generally more persuasive, but wouldn't necessarily look like "democracy" per se and might look more like more regranting, forecasting tournaments, etc.

Also, the (normative, rather than instrumental) arguments for democratisation in political theory are very often based on the idea that states coerce or subjugate their members, and so the only way to justify (or eliminate) this coercion is through something like consent or agreement. Here we find ourselves in quite a radically different situation.

It seems like the critics would claim that EA is, if not coercing or subjugating, at least substantially influencing something like the world population in a way that meets the criteria for democratisation. This seems to be the claim in arguments about billionaire philanthropy, for example. I'm not defending or vouching for that claim, but I think whether we are in a sufficiently different situation may be contentious.

This argument seems fair to apply to CEA's funding decisions, as they influence the community, but I do not think I, as a self-described EA, have more justification to decide on bed net distribution than the people of Kenya who are directly affected.

That argument would be seen as too weak in the political theory context. Then powerful states would have to enfranchise everyone in the world and form a global democracy. It also is too strong in this context, since it implies global democratic control of EA funds, not community control.

This is a great point, Alexander. I suspect some people, like ConcernedEAs, believe the specific ideas are superior in some way to what we do now, and it's just convenient to give them a broad label like "democratizing". (At Asana, we're similarly "democratizing" project management!)

Others seem to believe democracy is intrinsically superior to other forms of governance; I'm quite skeptical of that, though agree with tylermjohn that it is often the best way to avoid specific kinds of abuse and coercion. Perhaps in our context there might be more specific solutions along those lines, like an appeals board for COI or retaliation claims. The formal power might still lie with OP, but we would have strong soft reasons for wanting to defer.

In the meantime, I think the forum serves that role, and from my POV we seem reasonably responsive to it? Esp. the folks with high karma.

I probably should have been clearer in my first comment that my interest in democratizing the decisions more was quite selfish: I don't like having the responsibility, even when I'm largely deferring it to you (which itself is a decision).

GiveWell did some of this research in 2019 (summary, details):

A couple replies imply that my research on the topic was far too shallow and, sure, I agree.

But I do think that shallow research hits different from my POV, where the one person I have worked most closely with across nearly two decades happens to be personally well researched on the topic. What a fortuitous coincidence! So the fact that he said "yea, that's a real problem" rather than "it's probably something you can figure out with some work" was a meaningful update for me, given how many other times we've faced problems together.

I can absolutely believe that a different person, or further investigation generally, would yield a better answer, but I consider this a fairly strong prior rather than an arbitrary one. I also can't point at any clear reference examples of non-geographic democracies that appear to function well and have strong positive impact. A priori, it seems like a great idea, so why is that?

The variations I've seen so far in the comments (like weighing forum karma) increase trust and integrity in exchange for decreasing the democratic nature of the governance, and if you walk all the way along that path you get to institutions.

I think we’re already along the path, rather than at one end, and thus am inclined to evaluate the merits of specific ideas for change rather than try to weigh the philosophical stance.

https://forum.effectivealtruism.org/posts/zuqpqqFoue5LyutTv/the-ea-community-does-not-own-its-donors-money?commentId=PP7dbfkQQRsXddCGb

Thanks Dustin!

I am agnostic wrt your general argument, but as the fund manager of the FP Climate Fund I wanted to quickly weigh in, so here are some brief comments (I am on a retreat, so my comments are more quickly written than otherwise and I won’t be able to get back immediately; please excuse the lack of sourcing and polishing):

- At this point, the dominant view on climate interventions seems to be one of uncertainty about how they compare to GW-style charity, certainly on neartermist grounds. E.g. your own Regranting Challenge allocated 10m to a climate org on renewables in Southeast Asia. This illustrates that OP seems to believe that climate interventions can clear the near-termist bar absent democratic pressures that dilute. While I am not particularly convinced by that grant (though I am arguably not neutral here!), I do think this correctly captures that it seems plausible that the best climate interventions can compare with GW-style options, esp. when taking into account the very strong “co-benefits” of climate interventions around air pollution and reducing energy poverty.

- The key meta theory of impact underlying the Climate Fund is to turn neglectedness on its head and...

Sure, I think you can make an argument like that for almost any cause area (find neglected tactics within the cause to create radical leverage). However, I've become more skeptical of it over time, both bc I've angled for those opps myself, and because there are increasingly a lot of very smart, strategic climate funders deploying capital. On some level, we should expect the best opps to find funders.

>> E.g. your own Regranting Challenge allocated 10m to a climate org on renewables in Southeast Asia. This illustrates that OP seems to believe that climate interventions can clear the near-termist bar absent democratic pressures that dilute.

The award page has this line: "We are particularly interested in funding work to decarbonize the power sector because of the large and neglected impacts of harmful ambient air pollution, to which coal is a meaningful contributor." I.e., it's part of our new air quality focus area. Without actually having read the write-up, I'm sure they considered climate impact too, but I doubt it would have gotten the award without that benefit.

That said, the Kigali grant from 2017 is more like your framing. (There was much less climate funding then.)

Thank you for your reply and apologies for the delay!

To be clear, the reason I think this is a more convincing argument in climate than in many other causes is (a) the vastness of societal and philanthropic climate attention and (b) its very predictable brokenness, with climate philanthropy and climate action more broadly generally “captured” by one particular vision of solving the problem (mainstream environmentalism, see qualification below).

Yes, one can make this argument for more causes, but the implication of this does not seem clear. One implication could be that there actually are many more interventions that in fact do meet the bar, and that discounting leverage arguments unduly constrains our portfolio.

That seems fair and my own uncertainty primarily stems from this – I d...

Nit: "a top-rated fund"

(GWWC brands many funds as "top-rated", reflecting the views of their trusted evaluators.)

I think this idea is worth an orders-of-magnitude deeper investigation than what you've described. Such investigations seem worth funding.

It's also worth noting that OP's quotation is somewhat selective; here I include the sub-bullets:

Absolutely, I did not mean my comment to be the final word, and in fact was hoping for interesting suggestions to arise.

Good point on the more detailed plan, though I think this starts to look a lot more like what we do today if you squint the right way. e.g. OP program officers are subject matter experts (who also consult with external subject matter experts), and the forum regularly tears apart their decisions via posts and discussion, which then gets fed back into the process.

Thanks so much for sharing your perspective, as the main party involved.[1] A minor nitpick:

I think this might be misleading: there are 12 top-rated funds. The Climate Change one is one of the three "Top-rated funds working across multiple cause areas".

(and thank you for all the good you're doing)

Ah I didn’t know that, thank you!

Good comment.

But I don't really buy the first point. We could come up with some kind of electorate that's frustrating but better than the whole world. Forum users weighted by forum karma is a semi-democratic system that's better than any you suggest and pretty robust to takeover (though the forum would get a lot of spam).

My issue with that is I don't believe the forum makes better decisions than OpenPhil. Heck, we could test it: get the forum to vote on its allocation of funds each year, then compare in 5 years to what OpenPhil did and see which we'd prefer.

I bet that we'd pick OpenPhil's slate from 5 years ago over the forum average from then.

So yeah, mainly I buy your second point that democratic approaches would lead to less effective resource allocation.

(As an aside, there is democratic power here. When we all turned on SBF, that was democratic power: it turns out that his donations did not buy him cover after the fact, and I think that was good.)

In short, I think the current system works. It annoys me a bit, but I can't come up with a better one.

The proposal of forum users weighted by karma can be taken over if you have a large group of new users all voting for each other. You could require a minimum number of comments, lag the karma score by a year or more, require new comments within the past few months, and so on to make a takeover harder, but if a large enough group is invested in a takeover and willing to put in the time and effort, I think they could do it. I suppose if the karma lags are long enough and the engagement requirements great enough, they might lose interest and be unable to coordinate the takeover.

You could stop counting karma starting from ~now (or some specific date), but that would mean severely underweighting legitimate newcomers. EDIT: But maybe you could just do this again in the future without letting everyone know ahead of time when or what your rules will be, so newcomers can eventually have a say, but it'll be harder to game.

You could also try to cluster users by voting patterns to identify and stop takeovers, but this would be worrying, since it could be used to target legitimate EA subgroups.

I was trying to highlight a bootstrapping problem, but by no means meant it to be the only problem.

It's not crazy to me to create some sort of formal system to weigh the opinions of high-karma forum posters, though as you say that is only semi-democratic, and so it reintroduces some of the issues Cremer et al. were trying to solve in the first place.

I am open-minded about whether it would be better than OpenPhil, assuming they get the time to invest in making decisions well after being chosen (sortition S.O.P.).

I agree that some sort of periodic rules reveal could significantly mitigate corruption issues. Maybe each generation of the chosen council could pick new rules that determine the subsequent one.

Dustin, I'm pleased that you seriously considered the proposal. I do think that it could be worth funding deeper research into this (assuming you haven't done this already) - both 'should we expect better outcomes if funding decisions were made more democratically?' and 'if we were coming up with a system to do this, how could we get around the problems you describe?' One way to do this would be a sort of 'adversarial collaboration' between someone who is sceptical of the proposal and someone who's broadly in favour.

Hi Dustin,

We’re very happy to hear that you have seriously considered these issues.

If the who-gets-to-vote problem was solved, would your opinion change?

We concur that corrupt intent/vote-brigading is a potential drawback, but not an unsolvable one.

We discuss some of these issues in our response to Halstead on Doing EA Better:

There are several possible factors to be used to draw a hypothetical boundary, e.g.

These and others could be combined to define some sort of boundary, though of course it would need to be kept under constant monitoring & evaluation.

Given a somewhat costly signal of alignment it seems very unlikely that someone would dedicate a significant portion of their lives going “deep cover” in EA in order to have a very small chance of being randomly selected to become one among multiple people in a sortition assembly deliberating on broad strategic questions about the allocation of a certain proportion of one EA-related fund or another.

In any case, it seems like ... (read more)

Given that your proposal is to start small, why do you need my blessing? If this is a good idea, then you should be able to fund it and pursue it with other EA donors and effectively end up with a competitor to the MIF. And if the grants look good, it would become a target for OP funds. I don't think OP feels their own grants are the best possible, but rather the best possible within their local specialization. Hence the regranting program.

Speaking for myself, I think your list of criteria makes sense but is pretty far from a democracy. And the smaller you make the community of eligible deciders, the higher the chance they will be called for duty, which they may not actually want. How is this the same as or different from donor lotteries, and what can be learned from that? (To round this out a little, I think your list is effectively skin in the game in the form of invested time rather than dollars.)

In general, doing small-scale experiments seems like a good idea. However, in this case, there are potentially large costs even to small-scale experiments, if the small-scale experiment already attempts to tackle the boundary-drawing question.

If we decide on rules and boundaries for who has voting rights (or participates in sortition) and who does not, it has the potential to create lots of drama and politics (e.g., discussions about whether we should exclude right-wing people, whether SBF should have voting rights if he is in prison, whether we should exclude AI capability people, which organizations count as EA orgs, etc.). This is especially true if there is "constant monitoring & evaluation". And it would lead to more centralization and bureaucracy.

And I think it's likely that such rules would be understood as EA membership, where you are either EA and have voting rights, or you are not EA and do not have voting rights. At least for "EAG acceptance", people generally understand that this does not constitute EA membership.

I think it would probably be bad if we had anything like an official EA membership.

My decision criterion would be whether the chosen grants look likely to be better than OP's own grants in expectation. (n.b. I don't think comparing to the grants people like least ex post is a good way to do this.)

So ultimately, I wouldn't be willing to pre-commit large dollars to such an experiment. I'm open-minded that it could be better, but I don't expect it to be, so that would violate the key principle of our giving.

Re: large costs to small-scale experiments, it seems notable that those are all costs incurred by the community rather than $ costs. So if the community believes in the ROI, perhaps they are worth the risk?

I agree with some of the points of this post, but I do think there is a dynamic here that is missing, that I think is genuinely important.

Many people in EA have pursued resource-sharing strategies where they pick up some piece of the problems they want to solve, and trust the rest of the community to handle the other parts of the problem. One very common division of labor here is

I think a lot of this type of trade has happened historically in EA. I have definitely forsaken a career with much greater earning potential than I have right now in order to contribute to EA infrastructure and to work on object-level problems.

I think it is quite important to recognize that inasmuch as a trade like this has happened, this gives the people who have done object-level work a substantial amount of ownership over the funds that other people have earned, as well as the funds that other people have fundraised (I also think this applies to Open Phil, though I think the case here is a bunch messier and I won't go into my models of the g...

I find this framing a bit confusing. It doesn't seem to me that there is any more obligation between EAs who are pursuing direct work and EA funders than there is between EA funders and any other potentially effective program that needs money.

Consider:

Do we want to say that we should try and fund Alice more because we made some kind of implicit deal with her even though that money won't produce effective good in the world? And if the money is conditional on the person doing good work... how is that different from the funders just funding good work without consideration for who's doing it?

If I were going to switch to direct work the deal I would expect is "There is a large group of value-aligned funders, so insofar as I do work that seems high-impact, I can expect to get funded". I would not expect that the community was going to somehow ensure I got well above median outcomes for the career area I was going into.

I'm not sure why...

I think it could make sense in various instances to form a trade agreement between people earning and people doing direct work, where the latter group has additional control over how resources are spent.

It could also make sense to act like that trade agreement which was not in fact made was in fact made, if that incentivises people to do useful direct work.

But if this trade has never in fact transpired, explicitly or tacitly, I see no sense in which these resources "are meaningfully owned by the people who have forsaken direct control over that money in order to pursue our object-level priorities."

Great comment. I think "people who sacrifice significantly higher salaries to do EA work" is a plausible minimum definition of who those calling for democratic reforms feel deserve a greater say in funding allocation. It doesn't capture all of those people, nor solve the harder question of "what is EA work/an EA organization?" But it's a start.

Your 70/30 example made me wonder whether redesigning EA employee compensation packages to include large matching contributions might help as a democratizing force. Many employers outside EA/in the private sector offer a matching contributions program, wherein they'll match something like 1-5% of your salary (or up to a certain dollar value) in contributions to a certified nonprofit of your choosing. Maybe EA organizations (whichever voluntarily opt into this) could do that except much bigger - say, 20-50% of your overall compensation is paid not to you but to a charity of your choosing. This could also be tied to tenure so that the offered match increases at a faster rate than take home pay, reflecting the intuition that committed longtime members of the EA community have engaged with the ideas more, and potentially sacrificed more, and cons...

I think this is a proposal worth exploring. Open Phil could earmark additional funding to orgs for employee donation matching.

Another option would be to just directly give EA org employees regranting funds, with no need for them to donate their own money to regrant them. However, requiring some donation, maybe matching at a high rate, e.g. 5:1, gets them to take on at least some personal cost to direct funding.

Also, the EA org doesn't need to touch the money. The org can just confirm employment, and the employee can regrant through a system Open Phil (or GWWC) sets up or report a donation for matching to Open Phil (or GWWC, with matching funds provided by Open Phil).

Thank you for this. These are very interesting points. I have two (lightly held) qualms with this that I'm not sure obtain.

Again, just the thoughts that came to mind, both tentative.

As an example: I specifically chose to start working on AI alignment rather than trying to build startups to fund EA because of SBF. I would probably be making a lot more money had I taken a different route, and would likely not have to deal with being in such a shaky, intense field, for which I’ve had to put parts of my life on hold.

I think the cases of OP and SBF are very different. Alameda was set up with a lot of help from EA and was expected to donate a large share, if not most, of its earnings to EA causes, whereas Dustin made his wealth without help from EA.

I also think this applies somewhat to Open Phil, though it's messy and I honestly feel a bit confused about it.

I do think that overall I would say that either Open Phil should pay at least something close to market rate for the labor in various priority areas (which for a chunk of people would be on the order of millions per year), or that they should give up some control over the money and take a stance towards the broader community that allows people with a strong track record of impact to direct funds relatively unilaterally.

Insofar as neither of those happens, I would take that as substantial evidence that I would want more EAs to go into earning to give who would be happy to take that trade, and I would encourage people doing direct work to work less unless someone pays them a higher salary. Again, though, the details of the game theory here get messy really quickly, and I don't have super strong answers.

Great post. I strongly agree with the core point.

Regarding the last section: it'd be an interesting experiment to add a "democratic" community-controlled fund to supplement the existing options. But I wouldn't want to lose the existing EA funds, with their vetted expert grantmakers. I personally trust (and agree with) the "core EAs" more than the "concerned EAs", and would be less inclined to donate to a fund where the latter group had more influence. But by all means, let a thousand flowers bloom -- folks could then direct their donations to the fund that's managed as they think best.

[ETA: Just saw that Jason has already made a similar point.]

Yeah, I strongly agree with this and wouldn't continue to donate to the EA fund I currently donate to if it became "more democratic" rather than being directed by its vetted expert grantmakers. I'd be more than happy if a community-controlled fund was created, though.

To lend further support to the point that this post and your comment makes, making grantmaking "more democratic" through involving a group of concerned EAs seems analogous to making community housing decisions "more democratic" through community hall meetings. Those who attend community hall meetings aren't a representative sample of the community but merely those who have time (and also tend to be those who have more to lose from community housing projects).

So it's likely that concerned EAs would not only lack expertise in a particular domain but would also be unrepresentative of the community as a whole.

The idea seems intuitively appealing as an option for those who want to try it and see how it works. But I wonder whether the people who would fund this would actually prefer to participate in a donor lottery?

Curious to hear from anyone who would be excited to put their money into a community-controlled fund.

I would probably donate a small amount, not because I think the EV would be higher, but because I assign some utility to the information value of seeing grant decisions by a truly independent set of decisionmakers. If there were consistent and significant differences from the grant portfolio of the usual expert decisionmakers, I would entertain the possibility that the usual decisionmakers had a blind spot (even though I would predict the probability as perhaps 25 percent, low confidence). If the democratic fund were small enough in comparison to the amount of expert-directed funding, and could be operated cheaply enough, losing a little EV would feel acceptable as a way to gain this information.

I think this year's donor lottery is likely to go to the benefactor, especially the higher dollar amounts, so will produce zero information value.

Thanks for writing this. On the one hand, I think these calls for democracy are caused by a lack of trust in EA orgs to spend the money as they see fit. On the other hand, that money comes from donors. If you don't agree with a certain org or some actions of an org in the past, just don't donate to them. (This sounds so obvious to me that I'm probably missing something.) Whether somebody else (who might happen to have a lot of money) agrees with you is their decision, as is where they allocate their money to.

In addition, EA is about "doing the most good", not "doing what the majority believes to be the most good", probably because the majority isn't always right. I think it's good that EA Funds are distributed in a technocratic way, rather than a democratic way, although I agree that more transparency would help people at least understand the decision processes behind granting decisions and allow for them to be criticized and improved.

“If you don't agree with a certain org or some actions of an org in the past, just don't donate to them. (This sounds so obvious to me that I'm probably missing something.) Whether somebody else (who might happen to have a lot of money) agrees with you is their decision, as is where they allocate their money to.“

I think what you’re missing is that a significant aspect of EA has always (rightly) been trying to influence other people’s decisions on how they spend their money, and trying to make sure that their money is spent in a way that is more effective at improving the world.

When EA looks at the vast majority of Westerners only prioritising causes within their own countries, EA generally doesn’t say “that is your money so it’s your decision and we will not try to influence your decision, and we will just give our own money to a different cause”, it says “that is your money and it’s your decision, but we’re going to try to convince you to make a different decision based on our view of what is more effective at improving the world”.

I believe the “democratise EA funding decisions” critics are doing the same thing.

There's a big difference between your two examples. EA has historically tried to influence people to use their money differently through persuasion: actually making an argument that they should use their money in a particular way and persuading them of it.

"Democratising funding decisions" instead gives direct power over decisions, regardless of argumentation. You don't have to persuade people if you have a voting bloc that agrees with you.

And the old route is still there! If you think people should donate differently, then make this case. This might be a lot of work, but so be it. I think a good example of this is Michael Plant and HLI: they've put in serious work in making their case and they get taken seriously.

I don't see why people are entitled to more power than that.

If the money for EA Funds comes from donors who have the impression the fund is allocated in a technocratic way, do you still think it is a reasonable compromise for EA Funds to become more democratic? It seems low integrity for an entity to raise funding after communicating a fairly specific model for how the funding will be used and then change its mind and spend it on a different program (unless we have made it pretty clear upfront that we might do other programs).

If the suggestion is to start a new fund that does not use existing donations that seems more reasonable to me, but then I don't think that EA Funds has a substantial advantage in doing this over other organisations with similarly competent staff.

Of course, it is not impossible that the reformers could find significant donors who agree with their democratic grantmaking philosophy.

As far as EA Funds, I support empowering EAs by giving them options. You could give to the current funds (with some moderate reforms), or you could give to a fund in each cause area with strongly democratic processes. "I want to defer to expert fund managers rather than to a democratic process" is a valid donor preference, as is the converse.

Yes! If you want a democratic EA fund, why not start one? I'm pretty sure this would get funding from the EAIF. Generally, I think it's a much healthier ecosystem when people compete by doing different things rather than by lobbying some "authoritative" party to get them to make changes.

I am also skeptical of democratizing EA funding decisions because I share your concerns about effective implementation, but want to note that EA norms around this are somewhat out of step with norms around priority setting in other fields, particularly global health. In recent years, global health funders have taken steps to democratize funding decisions. For instance, the World Health Organization increasingly emphasizes the importance of fair decision-making processes; here's a representative title: "Priority Setting for Universal Health Coverage: We Need Evidence-Informed Deliberative Processes, Not Just More Evidence on Cost-Effectiveness."

Plausibly the increased emphasis on procedural approaches to priority setting is misguided, but there has been a decent amount of philosophical and empirical work on this. At a minimum, it seems like funders should engage with this literature before determining whether any of these approaches are worth considering.

So I disagree with this post on the object level, but I more strongly object to...something about the tone, or the way in which you're making this critique?

First, the title: 'The EA community does not own its donors' money'. idk - I bite the bullet and say that while the EA community of course does not legally own its donors' money, the world at large does morally have claim to the wealth that, through accidents of history, happens to be pooled in the hands of a few. I legally own the money in my bank account, but I don't feel like I have some deep moral right to it or something. Some of it comes from my family. Some of it does come from hard work... but there are lots of people who work really hard but are still poor because they happen to have been born in a poorer country, or lacked other opportunities that I had. That's why I give away a lot of my money - I don't think I have "claim" to all my money, just because it happens to be in my bank account.

Second, the headings: 'How would this happen?' and 'Can you demand ten billion dollars?' If someone has proposed a new way of doing things, it's reasonable to ask for details. But the fact that details don't already...

Much as I am sympathetic to many of the points in this post, I don't understand the purpose of the section, "Can you demand ten billion dollars?". As I understand the proposal to democratise EA it's just that: a proposal about what, morally, EA ought to do. It certainly doesn't follow that any particular person or group should try to enforce that norm. So pointing out that it would be a bad idea to try to use force to establish this is not a meaningful criticism of the proposal.

Yes, I think it’s uncharitable to assume that Carla means other people taking control of funds without funder buy-in. I think the general hope with a lot of these posts is to convince funders too.

Strongly upvoted.

I endorse basically everything here.

In general, I'm very unconvinced that increasing EA bureaucracy and making funding/impact decisions more democratically driven would be net positive.

I've wanted to write something similar myself, and you've done a great job with it! Thanks!

I feel that there's an illusion of control that a lot of people have when it comes to imagining ways that EA could be different, and the parts of this post that I quote below do a good job of explaining how that control is so illusory.

EA is a genie out of its bottle, so to speak. There's no way to guarantee that people won't call themselves EAs or fund EA-like causes in the ways that they want rather than the way you might want them to. This is in part a huge strength, because it allows a lot of funding and interest, but it's also a big weakness.

I feel this especially acutely when people lament how entangled EA was with SBF. It's possible to imagine a version of EA that was less officially exuberant about him, but very hard imo to imagine a version that refuses to grant any influence whatsoever to a person who credibly promises billions of dollars of funding. Avoiding a situation like the FTX/SBF one is hard not just because the central EA orgs can be swayed by funding but also, as the quotes below point out, because there will almost always be EAs who (rightly) will care more about the...

Thanks for writing this Nick, I'm sympathetic and strongly upvoted (to declare a small COI, I work at Open Philanthropy). I will add two points which I don't see as conflicting with your post but which hopefully complement it.

Firstly, if you're reading this post you probably have "EA resources".

You can donate your own money to organisations that you want to. While you can choose to donate to e.g. a CEA managed EA Fund (e.g. EA Infrastructure Fund, EA Animal Welfare Fund) or to a GiveWell managed fund, you can equally choose to donate ~wherever you want, including to individual charitable organisations. Extremely few of us have available financial resources within two orders of magnitude of Open Philanthropy's core donors, but many of us are globally and historically rich.

It's more difficult to do well, but many of you can donate your time, or partially donate your time through, e.g., taking a salary lower than your market value in another domain in order to do direct work on pressing and important problems.

Secondly, and more personally, I just don't think of most of my available resources as being deservedly "mine". However badly my life goes, save civilizational catastrophe, I wi...

I nodded vigorously the entire time I read this post. Thank you.

This post and headline conflate several issues:

It seems many of the comments express agreement with 1) and 2), while ignoring 3).

I would hope that a majority of the EA community would agree that there aren't good reasons for someone to claim ownership of billions of dollars. Perhaps there are those who disagree. If many disagree, in my mind that would mark a significant change in the EA community. See Derek Parfit's comments on this to GWWC: http://www.youtube.com/watch?v=xTUrwO9-B_I&t=6m25s. The headline "The EA community does not own its donors' money" may be true in a strictly legal sense, but the EA community, in that some of it prioritizes helping the worst off, has a much stronger moral claim to donors' money than do the donors.

It's entirely fair and reasonable to point out the practical difficulties and questionable efficiencies involved with implementing a democratic voting mechanism to allocate wealth. But I think it would be a mistake to further make some claim that major donors ought to be entitled to some significant control over how their money is spent. It's worth keeping those ideas separate.

I would certainly disagree vehemently with this claim, and would hope the majority of EAs also disagree. I might clarify that this isn't about arbitrarily claiming ownership of billions of dollars - it's a question of whether you can earn billions of dollars through mutual exchange consistent with legal rules.

We might believe, as EAs, that it is either a duty or a supererogatory action to spend one's money (especially as a billionaire) to do good, but this need not imply that one does not "own their money."

("EA community, in that some of it prioritizes helping the worst off, has a much stronger moral claim to donors money than do the donors" may also prove a bit too much in light of some recent events)

I think a significant part of the whole project of effective altruism has always been telling people how to spend money that we don’t own, so that the money is more effective at improving the world.

Seems reasonable to me for EAs to suggest ways of spending EA donor money that they think would be more effective at improving the world, including if they think that would be via giving more power to random EAs. Now whether that intervention would be more effective is a fair thing to debate.

As you touch on in the post, there are many weaker versions of some suggestions that could be experimented with at a small scale, using EA Funds or some funding from Open Phil, trying out a few different definitions of the ‘EA community’ (e.g., EAG acceptance, Forum karma, etc.) and different voting models (e.g., quadratic voting, one person one vote, uneven votes, etc.), possibly with veto power for Open Phil.

Agree. Mood affiliation is not a reliable path to impact.

“But, as with other discourse, these proposals assume that because a foundation called Open Philanthropy is interested in the "EA Community" that the "EA Community" has/deserves/should be entitled to a say in how the foundation spends their money.”

I think the claim of entitlement here is both an uncharitable interpretation and irrelevant to the object level claim of “more democratic decision making would be more effective at improving the world”.

I think these proposals can be interpreted as “here is how EA could improve the long-term effectiveness of its spending”, in a similar way to how EA has spent years telling philanthropists “here is how you could improve the effectiveness of your spending”.

I don’t think it’s a good idea to pay too much attention to the difference in framing between “EA should do X” and “EA would be better at improving the world if it did X”.

Definitely agree with this post.

That said, I suspect that the underlying concern here is one of imbalance of power. As I've seen from the funder's side, there are a number of downsides when a grantee is overly dependent on one particular funder: 1) the funder might change direction in a way that is devastating to the grantee and its employees; 2) the grantee is incentivized to cater solely to that one funder while remaining silent about possible criticisms, all of which can silently undermine the effectiveness of the work.

I wonder to what...

I've been part of one unfinished whitepaper and one unsubmitted grant application on mechanisms and platforms in this space. In particular, they were interventions aimed at creating distributed epistemics, which is, I think, a very slightly more technical way of describing the value prop of "democracy" without being as much of an applause light.

The unfinished whitepaper was a slightly convoluted grantmaking system driven by asset prices on a project market, based on the hypothesis that alleviating (epistemic) pressure from elite grantmakers would be go...

I want to push back a bit on my own intuition, which is that trying to build out collective (or market-based) decision-making for EA funding is potentially impractical and/or ineffective. Most EAs respect the power of the "wisdom of crowds", and many advocate for prediction markets. Why exactly does this affinity for markets stop at funding? It sounds like most think collective decision-making for funding is not feasible enough to consider, and that's 100% fair, but were it easy to implement, would it be ineffective?

Again, my intuition is to trust th...

Thanks for writing this, I've wanted to write something like this for a while, but could never frame it right.

I often think that, rather than making decisions, the community could have more scope to vet decisions in parallel and suggest changes. E.g., if there were an automatic hidden forum post for every grant, then you could comment there about that grant. Systems like this could allow information to build up before someone took it to the grantmaker in question.

"Also - I'm using scare quotes here because I am very confused who these proposals mean when they say EA community. Is it a matter of having read certain books, or attending EAGs, hanging around for a certain amount of time, working at an org, donating a set amount of money, or being in the right Slacks?"

It is of course a relevant question who this community is supposed to consist of, but at the same time, this question could be asked whenever someone refers to the community as a collective agent doing something, having a certain opinion, benefitting from ...

I notice that you quoted:

This feels like a totally separate proposal, right? Evaluated separately, a world like 'Someone who I trust about as much as I trust Holden or Alex (for independent reasons) is running an independent org which allocates funding' seems pretty good. Specifically, it seems more robust to 'conflict-of-interest' style concerns whilst keeping grant decisions in the hands of skilled grantmakers. (Maybe a smaller ver...

I have written this post on Effective Altruism governance and democratization.

https://forum.effectivealtruism.org/posts/KjahfX4vCbWWvgnf7/effective-altruism-governance-still-a-non-issue

I hope it helps to frame the issue.