This is a special post for quick takes by Ozzie Gooen. Only they can create top-level comments. Comments here also appear on the Quick Takes page and All Posts page.

A bit sad to find out that Open Philanthropy’s (now Coefficient Giving) GCR Cause Prioritization team is no more.

I heard it was removed/restructured mid-2025. Seems like most of the people were distributed to other parts of the org. I don't think there were public announcements of this, though it is quite possible I missed something.

I imagine there must have been a bunch of other major changes around Coefficient that aren't yet well understood externally. This caught me a bit off guard.

There don't seem to be many active online artifacts about this team, but I found this hiring post from early 2024, and this previous AMA.

Thanks for flagging this, Ozzie. I led the GCR Cause Prio team for the last year before it was wound down, so I can add some context.

The honest summary is that the team never really achieved product-market fit. Despite the name, we weren't really doing “cause prioritization” as most people would conceive of it. GCR program teams have wide remits within their areas and more domain expertise and networks than we had, so the separate cause prio team model didn't work as well as it does for GHW, where it’s more fruitful to dig into new literatures and build quantitative models. In practice, our work ended up being a mix of supporting a variety of projects for different program teams and trying to improve grant evaluation methods. GCR leadership felt that this set-up wasn’t on track to answer their most important strategy and research questions and that it wasn’t worth the opportunity cost of the people on the team. GCR leadership are considering alternative paths forward, though haven’t decided on anything yet.

I don't think there are any other comparably major structural changes at Coefficient to flag, other than that we’re trying to scale Good Ventures' giving and work with other part...

Thanks so much for this response! That's really useful to know. I really appreciate the transparency and clarity here.

Hope that its team members are all doing well now.

anormative

Do you think this is evidence that OpenPhil's GCR staff/team is doing less cause prioritization now than they were before? The specific things you say don't seem to be much evidence either way about this (and also not much evidence about whether or not they actually need to be doing more cause prioritization on the margin). Maybe you have further reason to believe this is bad?

What makes you expect this and why (assuming you do) do you expect these changes to be negative?

Ozzie Gooen

I don't mean to sound too negative on this - I did just say "a bit sad" on that one specific point.

Do I think that Coefficient is doing worse or better overall? It seems like Coefficient has been making a bunch of changes, and I don't feel like I have a good handle on the details. They've also been expanding a fair bit. I'd naively assume that a huge amount of work is going on behind the scenes to hire and grow, and that this is putting Coefficient in a better place on average.

I would expect this (the GCR prio team change) to be some evidence that specific ambitious approaches to GCR prioritization are more limited now. I think there are a bunch of large projects that could be done in this area that would probably take a team to do well, and right now it's not clear who else could do such projects.

Bigger-picture, I personally think GCR prioritization/strategy is under-investigated, but I respect that others have different priorities.

I've been experimenting recently with a longtermist wiki, written fully with LLMs.

Some key decisions/properties:

1. Fully LLM-generated, heavily relying on Claude Code.

2. Somewhat opinionated. Tries to represent something of a median longtermist/EA longview, with a focus on the implications of AI. All pages are rated for "importance".

3. Claude will estimate a lot of percentages and letter grades for things. If you see a percentage or grade, and there's no citation, it might well be a guess by Claude.

4. An emphasis on numeric estimates, models, and diagrams...

I think it would be really useful for there to be more public clarification on the relationship between effective altruism and Open Philanthropy.

My impression is that:

1. OP is the large majority funder of most EA activity.

2. Many EAs assume that OP is a highly EA organization, including the top.

3. OP really tries to explicitly not take responsibility for EA and does not claim to themselves be highly EA.

4. EAs somewhat assume that OP leaders are partially accountable to the EA community, but OP leaders would mostly disagree.

5. From the point of view of many EAs, EA represents something like a community of people with similar goals and motivations. There are some expectations that people will look out for each other.

6. From the point of view of OP, EA is useful insofar as it provides valuable resources (talent, sometimes ideas and money).

My impression is that OP basically treats the OP-EA relationship as a set of transactions, each with positive expected value. Like, they would provide a $20k grant to a certain community, if they expect said community to translate into over $20k of value via certain members who would soon take on jobs at certain companies. Perh...

Ozzie, my apologies for not addressing all of your many points here, but I do want to link you to two places where I've expressed a lot of my takes on the broad topic:

On Medium, I talk about how I see the community at some length. tl;dr: aligned around the question of how to do the most good vs the answer, heterogeneous, and often specifically alien to me and my values.

On the forum, I talk about the theoretical and practical difficulties of OP being "accountable to the community" (and here I also address an endowment idea specifically in a way that people found compelling). Similarly from my POV it's pretty dang hard to have the community be accountable to OP, in spite of everything people say they believe about that. Yes we can withhold funding, after the fact, and at great community reputational cost. But I can't e.g. get SBF to not do podcasts nor stop the EA (or two?) that seem to have joined DOGE and started laying waste to USAID. (On Bsky, they blame EAs for the whole endeavor.)

Thanks for the response here! I was not expecting that.

This is a topic that can become frustratingly combative if not handled gracefully, especially in public forums. To clarify, my main point isn't disagreement with OP's position, but rather I was trying to help build clarity on the OP-EA relationship.

Some points:

1. The relationship between the "EA Community" and OP is both important (given the resources involved) and complex[1].

2. In such relationships, there are often unspoken expectations between parties. Clarity might be awkward initially but leads to better understanding and coordination long-term.

3. I understand you're uncomfortable with OP being considered responsible for much of EA or accountable to EA. This aligns with the hypotheses in my original comment. I'm not sure we're disagreeing on anything here.

4. I appreciate your comments, though I think many people might reasonably still find the situation confusing. This issue is critical to many people's long-term plans. The links you shared are helpful but leave some uncertainty - I'll review them more carefully.

5. At this point, we might be more bottlenecked by EAs analyzing the situation than by additional writing from OP (though both are useful). EAs likely need to better recognize the limitations of the OP-EA relationship and consider what that means for the community.

6. When I asked for clarification, I imagined that EA community members working at the OP-EA intersection would be well positioned to provide insight. One challenge is that many people feel uncomfortable discussing this relationship openly due to the power imbalance.[2] As well as the funding issue (OP funds EA), there's also the fact that OP has better ways of privately communicating.[3] (This is also one reason why I'm unusually careful and long with my words in these discussions; sorry if it comes across as harder to read.) That said, comment interactions and assurances from OP do help build trust.

1. ^

the

I'm interested in hearing from those who provided downvotes. I could imagine a bunch of reasons why one might have done so (there were a lot of points included here).

(Upon reflection, I don't think my previous comment was very good. I tried to balance being concise, defensive, and comprehensive, but ended up with something confusing. I'd be happy to clarify my stance on this more at any time if asked, though it might well be too late now for that to be useful. Apologies!)

Ozzie Gooen

Minor points, from your comment:

I believe most EAs would agree these examples should never have been in OP's proverbial sphere of responsibility.

There are other examples we could discuss regarding OP's role (as makes sense, no organization is perfect), but that might distract from the main topic: clarity on the OP-EA relationship and the mutual expectations between parties.

It seems obvious that such Bsky threads contain significant inaccuracies. The question is how much weight to give such criticisms.

My impression is that many EAs wouldn't consider these threads important enough to drive major decisions like funding allocations. However, the fact you mention it suggests it's significant to you, which I respect.

About the OP-EA relationship - if factors like "avoiding criticism from certain groups" are important for OP's decisions, saying so clearly is the kind of thing that seems useful. I don't want to get into arguments about whether it should be[1]; the first thing is just to understand that that's where a line is.

1. ^

More specifically, I think these discussions could be useful - but I'm afraid they will get in the way of the discussions of how OP will act, which I think is more important.

[anonymous]

Ozzie, I’m not planning to discuss it any further and don’t plan to participate on the forum anymore.

This is probably off-topic, but I was very surprised to read this, given how much he supported the Harris campaign, how much he gives to reduce global poverty, and how similar your views are on e.g. platforming controversial people.

Just flagging that the EA Forum upvoting system is awkward here. This comment says:

1. "I can't say that we agree on very much"

2. "you are often a voice of reason"

3. "your voice will be missed"

As such, I'm not sure what the Agree / Disagree reacts are referring to, and I imagine similar for others reading this.

This isn't a point against David, just a challenge with us trying to use this specific system.

Rebecca

This seems like quite a stretch.

Robi Rahman🔸

I'm out of the loop, who's this allegedly EA person who works at DOGE?

AnonymousTurtle

Many people claim that Elon Musk is an EA person; @Cole Killian has an EA Forum account and mentioned effective altruism on his (now deleted) website; and Luke Farritor won the Vesuvius Challenge mentioned in this post (he also allegedly wrote or reposted a tweet mentioning effective altruism, but I can't find any proof and people are skeptical).

This reminds me of another related tension I've noticed. I think that OP really tries to not take much responsibility for EA organizations, and I believe that this has led to something of a vacuum of leadership.

I think that OP functionally has great power over EA.

In many professional situations, power comes with corresponding duties and responsibilities.

CEOs have a lot of authority, but they are also expected to be agentic, to keep on the lookout for threats, to be in charge of strategy, to provide guidance, and to make sure many other things are carried out.

The President clearly has a lot of powers, and that goes hand-in-hand with great expectations and duties.

There's a version of EA funding where the top funders take on both leadership and corresponding responsibilities. These people ultimately have the most power, so arguably they're best positioned to take on leadership duties and responsibilities.

But I think nonprofit funders often try not to take much in terms of responsibilities, and I don't think OP is an exception. I'd also flag that I think EA Funds and SFF are in a similar boat, though these are smaller.

My impression is that OP explicitly tries not ...

Thanks for writing this Ozzie! :) I think lots of things about the EA community are confusing for people, especially relationships between organizations. As we are currently redesigning EA.org it might be helpful for us to add some explanation on that site. (I would be interested to hear if anyone has specific suggestions!)

From my own limited perspective (I work at CEA but don’t personally interact much with OP directly), your impression sounds about right. I guess my own view of OP is that it’s better to think of them as a funder rather than a collaborator (though as I said I don’t personally interact with them much so haven’t given this much thought, and I wouldn’t be surprised if others at CEA disagree). They have their own goals as an organization, and it’s not necessarily bad if those goals are not exactly aligned with the overall EA community. My understanding is that it’s very standard for projects to adapt their pitches for funders that do not have the same goals/values as them. For example, I’m not running the Forum in a way that would maximize career changes[1] (TBH I don’t think OP would want me to do this anyway), but it’s helpful to include data we have about...

This seems directionally correct, but I would add more nuance.

While OP, as a grantmaker, has a goal it wants to achieve with its grants (and they wouldn't be EA aligned if they didn't), this doesn't necessarily mean they are very short term. The Open Phil EA/LT Survey seems to me to show best what they care about in outcomes (talent working in impactful areas) but also how hard it is to pinpoint the actions and inputs needed. This leads me to believe that OP instrumentally cares about the community/ecosystem/network as it needs multiple touchpoints and interactions to get most people from being interested in EA ideas to working on impactful things.

On the other side, we use the term community in confusing ways. I was on a Community Builder Grant by CEA for two years when working at EA Germany, which many call national community building. What we were actually doing was working on the talent development pipeline, trying to find promising target groups, developing them and trying to estimate the talent outcomes.

Working on EA as a social movement/community while being paid is challenging. On one hand, I assume OP would find it instrumentally useful (see above) but still desire to...

Thanks for the details here!

> would add more nuance

I think this is a complex issue. I imagine it would be incredibly hard to give it a really robust write-up, and definitely don't mean for my post to be definitive.

JWS 🔸

I think this is downstream of a lot of confusion about what 'Effective Altruism' really means, and I realise I don't have a good definition any more. In fact, because all of the below can be criticised, it sort of explains why EA gets seemingly infinite criticism from all directions.

* Is it explicit self-identification?

* Is it explicit membership in a community?

* Is it implicit membership in a community?

* Is it if you get funded by Open Philanthropy?

* Is it if you are interested or working in some particular field that is deemed "effective"?

* Is it if you believe in totalising utilitarianism with no limits?

* To always justify your actions with quantitative cost-effectiveness analyses where your chosen course of action is the top-ranked one?

* Is it if you behave a certain way?

Because in many ways I don't count as EA based off the above. I certainly feel less like one than I have in a long time.

For example:

I don't know if this refers to some gestalt 'belief' that OP might have, or Dustin's beliefs, or some kind of 'intentional stance' regarding OP's actions. While many EAs share some beliefs (I guess), there's also a whole range of variance within EA itself, and the fundamental issue is that I don't know if there's something which can bind it all together.

I guess I think the question should be less "public clarification on the relationship between effective altruism and Open Philanthropy" and more "what does 'Effective Altruism' mean in 2025?"

Ozzie Gooen

I had Claude rewrite this, in case the terminology is confusing. I think its edit is decent.

---

The EA-Open Philanthropy Relationship: Clarifying Expectations

The relationship between Effective Altruism (EA) and Open Philanthropy (OP) might suffer from misaligned expectations. My observations:

1. OP funds the majority of EA activity

2. Many EAs view OP as fundamentally aligned with EA principles

3. OP deliberately maintains distance from EA and doesn't claim to be an "EA organization"

4. EAs often assume OP leadership is somewhat accountable to the EA community, while OP leadership likely disagrees

5. Many EAs see their community as a unified movement with shared goals and mutual support

6. OP appears to view EA more transactionally—as a valuable resource pool for talent, ideas, and occasionally money

This creates a fundamental tension. OP approaches the relationship through a cost-benefit lens, funding EA initiatives when they directly advance specific OP goals (like AI safety research). Meanwhile, many EAs view EA as a transformative cultural movement with intrinsic value beyond any specific cause area.

These different perspectives manifest in competing priorities:

EA community-oriented view prioritizes:

* Long-term community health and growth

* Individual wellbeing of community members

* Building EA's reputation for honesty and trustworthiness

Transactional view prioritizes:

* Short-term talent pipeline and funding opportunities

* Risk management (not wanting EA activities to wind up reflecting poorly on OP)

* Minimizing EA criticism of OP and OP activities. (This is both annoying to deal with, and could hurt their specific activities)

This disconnect explains why some people might feel betrayed by EA. Recruiters often promote EA as a supportive community/movement (which resonates better), but if the funding reality treats EA more as a talent network, there's a fundamental misalignment.

Another thought I've had: "EA Global has major communit

Anthropic has been getting flak from some EAs for distancing itself from EA. I think some of the critique is fair, but overall, I think that the distancing is a pretty safe move.

Compare this to FTX. SBF wouldn't shut up about EA. He made it a key part of his self-promotion. I think he broadly did this for reasons of self-interest for FTX, as it arguably helped the brand at that time.

I know that at that point several EAs were privately upset about this. They saw him as using EA for PR, and thus creating a key liability that could come back and bite EA.

And come back and bite EA it did, about as poorly as one could have imagined.

So back to Anthropic. They're taking the opposite approach, maintaining about as much distance from EA as they semi-honestly can. I expect that this is good for Anthropic, especially given EA's reputation post-FTX.

And I think it's probably also safe for EA.

I'd be a lot more nervous if Anthropic were trying to tie its reputation to EA. I could easily see Anthropic having a scandal in the future, and it's also pretty awkward to tie EA's reputation to an AI developer.

To be clear, I'm not saying that people from Anthropic should actively lie or deceive. So I have mixed feelings about their recent quotes for Wired. But big-picture, I feel decent about their general stance to keep distance. To me, this seems likely in the interest of both parties.

I hope my post was clear enough that distance itself is totally fine (and you give compelling reasons for that here). It's ~implicitly denying present knowledge or past involvement in order to get distance that seems bad for all concerned. The speaker looks shifty and EA looks like something toxic you want to dodge.

Responding to a direct question by saying "We've had some overlap and it's a nice philosophy for the most part, but it's not a guiding light of what we're doing here" seems like it strictly dominates.

I agree.

I didn’t mean to suggest your post suggested otherwise - I was just focusing on another part of this topic.

Ben_West🔸

Do you think that distancing is ever not in the interest of both parties? If so, what is special about Anthropic/EA?

(I think it's plausible that the answer is that distancing is always good; the negative risks of tying your reputation to someone always exceed the positive. But I'm not sure.)

Ozzie Gooen

Arguably, around FTX, it was better. EA and FTX both had strong brands for a while. And there were worlds in which the risk of failure was low.

I think it's generally quite tough to get this aspect right though. I believe that traditionally, charities are reluctant to get their brands associated with large companies, due to the risks/downsides. We don't often see partnerships between companies and charities (or say, highly-ideological groups) - I think that one reason why is that it's rarely in the interests of both parties.

Typically companies want to tie their brands to very top charities, if anyone. But now EA has a reputational challenge, so I'd expect that few companies/orgs want to touch "EA" as a thing.

Arguably influencers are often a safer option - note that EA groups like GiveWell and 80k are already doing partnerships with influencers. As in, there's a decent variety of smart YouTube channels and podcasts that hold advertisements for 80k/GiveWell. I feel pretty good about much of this.

Arguably influencers are crafted in large part to be safe bets. As in, they're very incentivized to not go crazy, and they have limited risks to worry about (given they represent very small operations).

Jason

This feels different to me. In most cases, there is a cultural understanding of the advertiser-ad seller relationship that limits the reputational risk. (I have not seen the "partnerships" in question, but assume there is money flowing in one direction and promotional consideration in the other.) To be sure, activists will demand that companies pull their ads from a certain TV show when it does something offensive, stop sponsoring a certain sports team, and so on. However, I don't think consumers generally hold prior ad spend against a brand when it promptly cuts the relationship upon learning of the counterparty's new and problematic conduct.

In contrast, people will perceive something like FTX/EA or Anthropic/EA as a deeper relationship rather than a mostly transactional relationship involving the exchange of money for eyeballs. Deeper relationships can have a sense of authenticity that increases the value of the partnership -- the partners aren't just in it for business reasons -- but that depth probably increases the counterparty risks to each partner.

I’ve heard multiple reports of people being denied jobs around AI policy because of their history in EA. I’ve also seen a lot of animosity against EA from top organizations I think are important - like A16Z, Founders Fund (Thiel), OpenAI, etc. I’d expect that it would be uncomfortable for EAs to apply to or work in these latter places at this point.

This is very frustrating to me.

First, it makes it much more difficult for EAs to collaborate with many organizations where these perspectives could be the most useful. I want to see more collaborations and cooperation - not having EAs be allowed in many orgs makes this very difficult.

Second, it creates a massive incentive for people not to work in EA or on EA topics. If you know it will hurt your career, then you’re much less likely to do work here.

And a lighter third - it’s just really not fun to have a significant stigma associated with you. This means that many of the people I respect the most, and think are doing some of the most valuable work out there, will just have a much tougher time in life.

Who’s at fault here? I think the first big issue is that resistances get created against all interesting and powerful groups. There are sim...

On the positive front, I know it's early days, but GWWC have really impressed me with their well-produced, friendly yet honest public-facing stuff this year - maybe we can pick up on that momentum?

Also EA for Christians is holding a British conference this year where Rory Stewart and the Archbishop of Canterbury (biggest shot in the Anglican church) are headlining which is a great collaboration with high profile and well respected mainstream Christian / Christian-adjacent figures.

I think in general their public facing presentation and marketing seems a cut above any other EA org - happy to be proven wrong by other orgs which are doing a great job too. What I love is how they present their messages with such positivity, while still packing a real punch and not watering down their message. Check out their web-page and blog to see their work.

A few concrete examples

- This great video "How rich are you really?"

- Nice rebranding of "Giving what we can pledge" to the snappier and clearer "10% pledge"

- The diamond symbol as a simple yet strong sign of people taking the pledge, both on the forum here and on LinkedIn

- An amazing LinkedIn push with lots of people putting up the diamond and explaining why they took the pledge. Many posts have been received really positively on my wall.

That's just what I've noticed.

(Jumping in for our busy comms/exec team) Understanding the status of the EA brand and working to improve it is a top priority for CEA :) We hope to share more work on this in future.

Thanks, good to hear! Looking forward to seeing progress here.

[anonymous]

I wrote a downvoted post recently about how we should be warning AI Safety talent about going into labs for personal branding reasons (I think there are other reasons not to join labs, but this is worth considering).

I think people are still underweighting how much the public are going to hate labs in 1-3 years.

I was telling organizers with PauseAI like Holly Elmore they should be emphasizing this more several months ago.

[anonymous]

I think from an advocacy standpoint it is worth testing that message, but based on how it is being received on the EAF, it might just bounce off people.

My instinct as to why people don't find it a compelling argument:

1. They don't have short timelines like me, and therefore chuck it out completely

2. Are struggling to imagine a hostile public response to 15% unemployment rates

3. Copium

Evan_Gaensbauer

At least at the time, Holly Elmore seemed to consider it at least somewhat compelling. I mentioned this was an argument I provided framed in the context of movements like PauseAI--a more politicized, and less politically averse coalition movement, that includes at least one arm of AI safety as one of its constituent communities/movements, distinct from EA.

>They don't have short timelines like me, and therefore chuck it out completely

Among the most involved participants in PauseAI, presumably there may be estimates of short timelines at a rate comparable to that among effective altruists.

>Are struggling to imagine a hostile public response to 15% unemployment rates

Those in PauseAI and similar movements don't.

>Copium

While I sympathize with and appreciate why there would be high rates of huffing copium among effective altruists (and adjacent communities, such as rationalists), others who have been picking up slack effective altruists have dropped in the last couple years, are reacting differently. At least in terms of safeguarding humanity from both the near-term and long-term vicissitudes of advancing AI, humanity has deserved better than EA has been able to deliver. Many have given up hope that EA will ever rebound to the point it'll be able to muster living up to the promise of at least trying to safeguard humanity. That includes both many former effective altruists, and those who still are effective altruists. I consider there to still be that kind of 'hope' on a technical level, though on a gut level I don't have faith in EA. I definitely don't blame those who have any faith left in EA, let alone those who see hope in it.

Much of the difference here is the mindset towards 'people', and how they're modeled, between those still firmly planted in EA but somehow with a fatalistic mindset, and those who still care about AI safety but have decided to move on in EA. (I might be somewhere in between, though my perspective as a single individual a

Very sorry to hear these reports, and was nodding along as I read the post.

If I can ask, how do they know EA affiliation was the deciding factor? Is this an informal 'everyone knows' thing through policy networks in the US? Or direct feedback from the prospective employer that EA is a PR risk?

Of course, please don't share any personal information, but I think it's important for those in the community to be as aware as possible of where and why this happens if it is happening because of EA affiliation/history of people here.

I think that certain EA actions in AI policy are getting a lot of flak.

On Twitter, a lot of VCs and techies have ranted heavily about how much they dislike EAs.

See this segment from Marc Andreessen, where he talks about the dangers of Eliezer and EA. Marc seems incredibly paranoid about the EA crowd now.

(Go to 1 hour, 11min in, for the key part. I tried linking to the timestamp, but couldn't get it to work in this editor after a few minutes of attempts)

Organized, yes. And so this starts with a mailing list. In the nineties is a transhumanist mailing list called the extropions. And these extropions, they might have got them wrong, extropia or something like that, but they believe in the singularity. So the singularity is a moment of time where AI is progressing so fast, or technology in general progressing so fast that you can't predict what happens. It's self evolving and it just. All bets are off. We're entering

To be fair to the CEO of Replit here, much of that transcript is essentially true, if mildly embellished. Many of those events or outcomes associated with EA, or adjacent communities during their histories, that should be the most concerning to anyone other than any FTX-related events and for reasons beyond just PR concerns, can and have been well-substantiated.

My guess is this is obvious, but the "debugging" stuff seems as far as I can tell completely made up.

I don't know of any story in which "debugging" was used in any kind of collective way. There was some Leverage-research adjacent stuff that kind of had some attributes like this, "CT-charting", which maybe is what it refers to, but that sure would be the wrong word, and I also don't think I've ever heard of any psychoses or anything related to that.

The only in-person thing I've ever associated with "debugging" is when at CFAR workshops people were encouraged to create a "bugs-list", which was just a random list of problems in your life, and then throughout the workshop people paired with other people where they could choose any problem of their choosing, and work with their pairing partner on fixing it. No "auditing" or anything like that.

I haven't read the whole transcript in detail, but this section makes me skeptical of describing much of that transcript as "essentially true".

I have personally heard several CFAR employees and contractors use the word "debugging" to describe all psychological practices, including psychological practices done in large groups of community members. These group sessions were fairly common.

In that section of the transcript, the only part that looks false to me is the implication that there was widespread pressure to engage in these group psychology practices, rather than it just being an option that was around. I have heard from people in CFAR who were put under strong personal and professional pressure to engage in *one-on-one* psychological practices which they did not want to do, but these cases were all within the inner ring and AFAIK not widespread. I never heard any stories of people put under pressure to engage in *group* psychological practices they did not want to do.

I think it might describe how some people experienced internal double cruxing. I wouldn't be that surprised if some people also found the "debugging" frame in general to give too much agency to others relative to themselves; I feel like I've heard that discussed.

Based on the things titotal said, seems like it very likely refers to some Leverage stuff, which I feel a bit bad about seeing equivocated with the rest of the ecosystem, but also seems kind of fair. And the Zoe Curzi post sure uses the term "debugging" for those sessions (while also clarifying that the rest of the rationality community doesn't use the term that way, but they sure seemed to)

For what it’s worth, I was reminded of Jessica Taylor’s account of collective debugging and psychoses as I read that part of the transcript. (Rather than trying to quote pieces of Jessica’s account, I think it’s probably best that I just link to the whole thing as well as Scott Alexander’s response.)

I presume this account is their source for the debugging stuff, wherein an ex-member of the rationalist Leverage institute described their experiences. They described the institute as having "debugging culture", described as follows:

In the larger rationalist and adjacent community, I think it’s just a catch-all term for mental or cognitive practices aimed at deliberate self-improvement.

At Leverage, it was both more specific and more broad. In a debugging session, you’d be led through a series of questions or attentional instructions with goals like working through introspective blocks, processing traumatic memories, discovering the roots of internal conflict, “back-chaining” through your impulses to the deeper motivations at play, figuring out the roots of particular powerlessness-inducing beliefs, mapping out the structure of your beliefs, or explicating irrationalities.

and:

1. 2–6hr long group debugging sessions in which we as a sub-faction (Alignment Group) would attempt to articulate a “demon” which had infiltrated our psyches from one of the rival groups, its nature and effects, and get it out of our systems using debugging tools.

The podcast statements seem to be an embel... (read more)

Leverage was an EA-aligned organization, that was also part of the rationality community (or at least 'rationalist-adjacent'), about a decade ago or more. For Leverage to be affiliated with the mantles of either EA or the rationality community was always contentious. From the side of EA, the CEA, and the side of the rationality community, largely CFAR, Leverage faced efforts to be shoved out of both within a short order of a couple of years. Both EA and CFAR thus couldn't have then, and couldn't now, say or do more to disown and disavow Leverage's practices from the time Leverage existed under the umbrella of either network/ecosystem/whatever. They have. To be clear, so has Leverage in its own way.

At the time of the events as presented by Zoe Curzi in those posts, Leverage was basically shoved out the door of both the rationality and EA communities with--to put it bluntly--the door hitting Leverage on the ass on the way out, and the door back in firmly locked behind them from the inside. In time, Leverage came to take that in stride, as the break-up between Leverage, and the rest of the institutional polycule that is EA/rationality, was extremely mutual.

In short, the course of events, and the practices at Leverage that led to them, as presented by Zoe Curzi and others a few years ago, circa 2018 to 2022, can scarcely be attributed to either the rationality or EA communities. That's something EA, Leverage, and the rationality community all agree on--one of the few things left that they still agree on at all.

From the side of EA, the CEA, and the side of the rationality community, largely CFAR, Leverage faced efforts to be shoved out of both within a short order of a couple of years. Both EA and CFAR thus couldn't have then, and couldn't now, say or do more to disown and disavow Leverage's practices from the time Leverage existed under the umbrella of either network/ecosystem/whatever…

At the time of the events as presented by Zoe Curzi in those posts, Leverage was basically shoved out the door of both the rationality and EA communities with--to put it bluntly--the door hitting Leverage on the ass on the way out, and the door back in firmly locked behind them from the inside.

While I’m not claiming that “practices at Leverage” should be “attributed to either the rationality or EA communities”, or to CEA, the take above is demonstrably false. CEA definitely could have done more to “disown and disavow Leverage’s practices” and also reneged on commitments that would have helped other EAs learn about problems with Leverage.

Quick point - I think the relationship between CEA and Leverage was pretty complicated during a lot of this period.

There was typically a large segment of EAs who were suspicious of Leverage, ever since their founding. But Leverage did collaborate with EAs on some specific things early on (like the first EA Summit). It felt like an uncomfortable alliance type situation. If you go back on the forum / Lesswrong, you can read artifacts.

I think the period of 2018 or so was unusual. This was a period where a few powerful people at CEA (Kerry, Larissa) were unusually pro-Leverage and got to power fairly quickly (Tara left, somewhat suddenly). I think there was a lot of tension around this decision, and when they left (I think this period lasted around 1 year), I think CEA became much less collaborative with Leverage.

One way to square this a bit is that CEA was just not very powerful for a long time (arguably, its periods of "having real ability/agency to do new things" have been very limited). There were periods where Leverage had more employees (I'm pretty sure). The fact that CEA went through so many different leaders, each with different stances and strategies, makes it more confusing to look back on.

I would really love for a decent journalist to do a long story on this history, I think it's pretty interesting.

2

Habryka [Deactivated]

Huh, yeah, that sure refers to those as "debugging". I've never really heard Leverage people use those words, but Leverage 1.0 was a quite insular and weird place towards the end of its existence, so I must have missed it.

I think it's kind of reasonable to use Leverage as evidence that people in the EA and Rationality community are kind of crazy, and I have indeed updated on the quotes being more grounded (though I also feel frustration with people equivocating between EA, Rationality and Leverage).

(Relatedly, I don't particularly love you calling Leverage "rationalist" especially in a context where I kind of get the sense you are trying to contrast it with "EA". Leverage has historically been much more connected to the EA community, and indeed had almost successfully taken over CEA leadership in ~2019, though IDK, I also don't want to be too policing with language here)

2

Evan_Gaensbauer

I wouldn't and didn't describe that section of the transcript, as a whole, as essentially true. I said much of it is. As the CEO might've learned from Tucker Carlson, who in turn learned from FOX News, we should seek to be 'fair and balanced.'

As to the debugging part, that's an exaggeration that must have come out the other side of a game of broken telephone on the internet. It seems that on the other side of that telephone line would've been some criticisms or callouts I've read years ago of some activities happening in or around CFAR. I don't recollect them in super-duper precise detail right now, nor do I have the time today to spend an hour or more digging them up on the internet

For the perhaps wrongheaded practices that were introduced into CFAR workshops for a period of time other than the ones from Leverage Research, I believe the others were some introduced by Valentine (e.g., 'againstness,' etc.). As far as I'm aware, at least as it was applied at one time, some past iterations of Connection Theory bore at least a superficial resemblance to some aspects of 'auditing' as practiced by Scientologists.

As to perhaps even riskier practices, I mean they happened not "in" but "around" CFAR in the sense of not officially happening under the auspices of CFAR, or being formally condoned by them, though they occurred within the CFAR alumni community and the Bay Area rationality community. It's murky, though there was conduct in the lives of private individuals that CFAR informally enabled or emboldened, and could've/should've done more to prevent. For the record, I'm aware CFAR has effectively admitted those past mistakes, so I don't want to belabor any point of moral culpability beyond what has been drawn out to death on LessWrong years ago.

Anyway, activities that occurred among rationalists in the social network in CFAR's orbit, and that arguably rose to the level of triggering behaviour comparable in extremity to psychosis, include 'dark arts' rationalit

3

Gil

I think it's worth noting that the two examples you point to are right-wing, which the vast majority of Silicon Valley is not. Right-wing tech ppl likely have higher influence in DC, so that's not to say they're irrelevant, but I don't think they are representative of silicon valley as a whole

2

Ozzie Gooen

I think Garry Tan is more left-wing, but I'm not sure. A lot of the e/acc community fights with EA, and my impression is that many of them are leftists.

I think that the right-wing techies are often the loudest, but there are also lefties in this camp too.

(Honestly though, the right-wing techies and left-wing techies often share many of the same policy ideas. But they seem to disagree on Trump and a few other narrow things. Many of the recent Trump-aligned techies used to be more left-coded.)

2

Ozzie Gooen

Random Tweet from today: https://x.com/garrytan/status/1820997176136495167

Garry Tan is the head of YCombinator, which is basically the most important/influential tech incubator out there. Around 8 years back, relations were much better, and 80k and CEA actually went through YCombinator.

I'd flag that Garry specifically is kind of wacky on Twitter, compared to previous heads of YC. So I definitely am not saying it's "EA's fault" - I'm just flagging that there is a stigma here.

I personally would be much more hesitant to apply to YC knowing this, and I'd expect YC would be less inclined to bring in AI safety folk and likely EAs.

5

Rebecca

I find it very difficult psychologically to take someone seriously if they use the word ‘decels’.

3

JWS 🔸

Want to say that I called this ~9 months ago.[1]

I will re-iterate that clashes of ideas/worldviews[2] are not settled by sitting them out and doing nothing, since they can be waged unilaterally.

1. ^

Especially if you look at the various other QTs about this video across that side of Twitter

2. ^

Or 'memetic wars', YMMV

4

Ozzie Gooen

My impression is that the current EA AI policy arm isn't having much active dialogue with the VC community and the like. I see Twitter spats that look pretty ugly, and I suspect that this relationship could be improved with more work.

At a higher level, I suspect that there could be a fair bit of policy work that both EAs and many of these VCs and others would be more okay with than what is currently being pushed. My impression is that we should be focused on narrow subsets of risks that matter a lot to EAs, but don't matter much to others, so we can essentially trade and come out better than we are now.

3

Chris Leong

That seems like the wrong play to me. We need to be focused on achieving good outcomes and not being popular.

6

Ozzie Gooen

My personal take is that there are a bunch of better trade-offs between the two that we could be making. I think that the narrow subset of risks is where most of the value is, so from that standpoint, that could be a good trade-off.

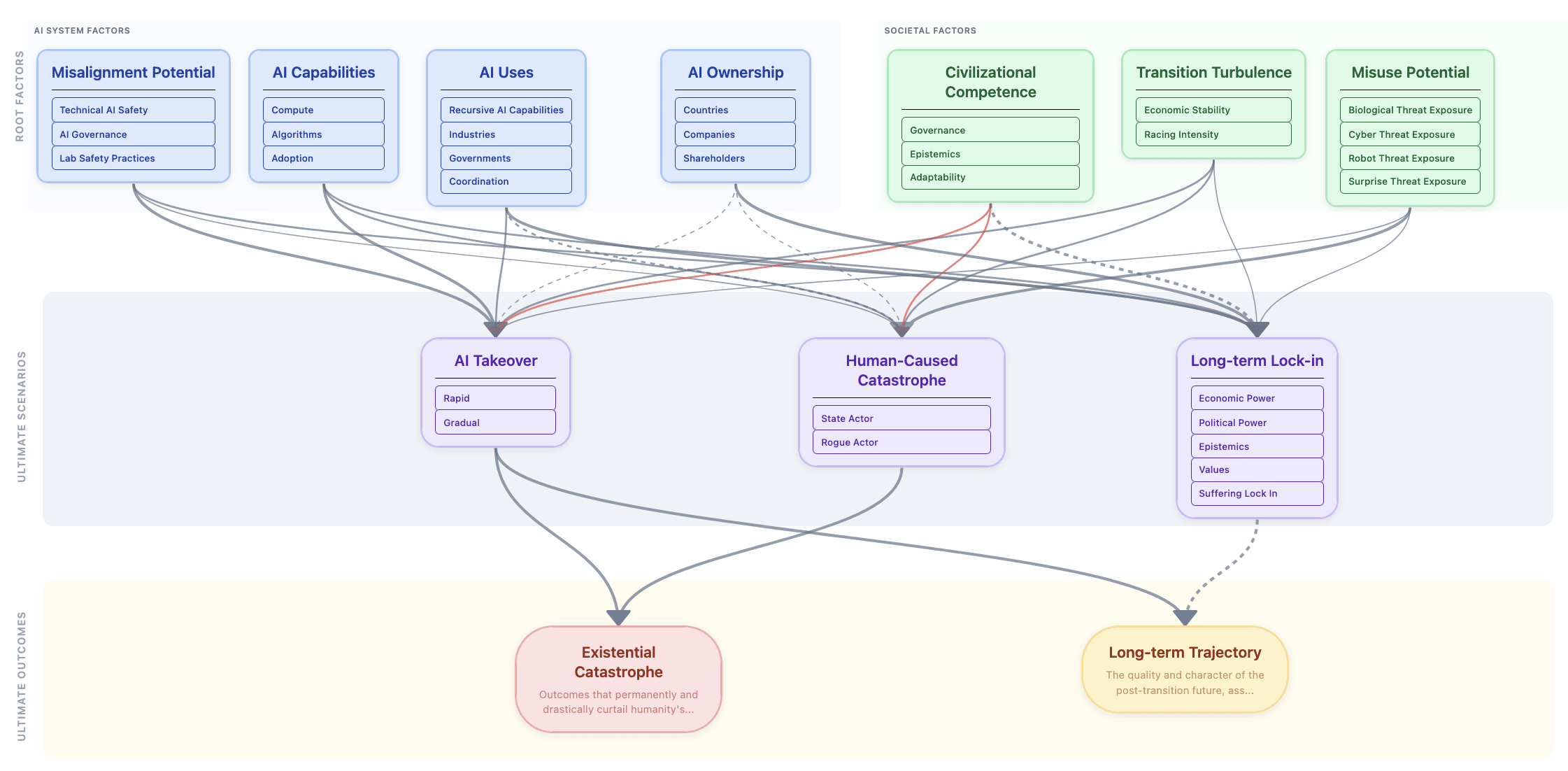

I made this simple high-level diagram of critical longtermist "root factors", "ultimate scenarios", and "ultimate outcomes", focusing on the impact of AI during the TAI transition.

This involved some adjustments to standard longtermist language.

* "Accident Risk" -> "AI Takeover"

* "Misuse Risk" -> "Human-Caused Catastrophe"

* "Systemic Risk" -> This is split up into a few modules, focusing on "Long-term Lock-in", which I assume is the main threat.

You can read and interact with it here, where there are (AI-generated) descriptions and pages for things.

Curious to get any feedback!

I'd love it if there could eventually be one or a few well-accepted and high-quality assortments like this. Right now some of the common longtermist concepts seem fairly unorganized and messy to me.

---

Reservations:

This is an early draft. There's definitely parts I find inelegant. I've played with the final nodes instead being things like, "Pre-transition Catastrophe Risk" and "Post-Transition Expected Value", for instance. I didn't include a node for "Pre-transition value"; I think this can be added on, but would involve some complexity that didn't seem worth it at this stage. T... (read more)

Just finding out about this & crux website. So cool. Would love to see something like this for charity ranking (if it isn't already somewhere on the site).

Don't you need a philosophy axioms layer between outputs and outcomes? Existential catastrophe definitions seem to be assuming a lot of things.

Would also need to think harder about why/in what context I'm using this, but "governance" being a subcomponent when it's arguably more important/ can control literally everything else at the top level seems wrong.

4

Ozzie Gooen

Good points!

>Would love to see something like this for charity ranking (if it isn't already somewhere on the site).

I could definitely see this being done in the future.

>Don't you need a philosophy axioms layer between outputs and outcomes?

I'm nervous that this can get overwhelming quickly. I like the idea of starting with things that are clearly decision-relevant to the certain audience the website has, then expanding from there. Am open to ideas on better / more scalable approaches!

>"governance" being a subcomponent when it's arguably more important/ can control literally everything else at the top level seems wrong.

Thanks! I'll keep in mind. I'd flag that this is an extremely high-level diagram, meant more to be broad and elegant than to flag which nodes are most important. Many critical things are "just subcomponents". I'd like to make further diagrams on many of the different smaller nodes.

Reflections on "Status Handcuffs" over one's career

(This was edited using Claude)

Having too much professional success early on can ironically restrict you later on. People typically are hesitant to go down in status when choosing their next job. This can easily mean that "staying in career limbo" can be higher-status than actually working. At least when you're in career limbo, you have a potential excuse.

This makes it difficult to change careers. It's very awkward to go from "manager of a small team" to "intern," but that can be necessary if you want to learn a new domain, for instance.

The EA Community Context

In the EA community, some aspects of this are tricky. The funders very much want to attract new and exciting talent. But this means that the older talent is in an awkward position.

The most successful get to take advantage of the influx of talent, with more senior leadership positions. But there aren't too many of these positions to go around. It can feel weird to work on the same level or under someone more junior than yourself.

Pragmatically, I think many of the old folks around EA are either doing very well, or are kind of lost/exploring other avenues. Other areas allow... (read more)

I've just run into this, so excuse a bit of grave digging. As someone who has entered the EA community with prior career experience, I disagree with your premise:

"It's very awkward to go from "manager of a small team" to "intern," but that can be necessary if you want to learn a new domain, for instance."

To me this kind of situation just shouldn't happen. It's not a question of status, it's a question of inefficiency. If I have managerial experience and the organization I'd be joining can only offer me the exact same job they'd be offering to a fresh grad, then they are simply wasting my potential. I'd be better off at a place which can appreciate what I bring and the organization would be better off with someone who has a fresher mind and less tempting alternatives.

IMO the problem is not with the fact that people are unwilling to take a step down. The problem is with EA orgs unwilling or unable to leverage the transferrable skills of experienced professionals, forcing them into entry-level positions instead.

4

calebp

Pragmatically, I think many of the old folks around EA are either doing very well, or are kind of lost/exploring other avenues. Other areas allow people to have more reputable positions, but these are typically not very EA/effective areas. Often E2G isn't very high-status in these clusters, so I think a lot of these people just stop doing much effective work.

I haven't really noticed this happening very much empirically, but I do think the effect you are talking about is quite intuitive. Have you seen many cases of this that you're confident are correct (e.g. they aren't lost for other reasons like working on non-public projects or being burnt out)? No need to mention specific names.

In theory, EAs are people who try to maximize their expected impact. In practice, EA is a light ideology that typically has a limited impact on people. I think that the EA scene has demonstrated success at getting people to adjust careers (in circumstances where it's fairly cheap and/or favorable to do so)

This seems incorrect to me, in absolute terms. By the standards of ~any social movement, EAs are very sacrificial and focused on increasing their impact. I suspect you somewhat underrate how rare it is outside of EA to be highly committed to ~any non-self-serving principles seriously enough to sacrifice significant income and change careers, particularly in new institutions/movements.

2

Ozzie Gooen

I'm sure that very few of these are explained by "non-public projects".

I'm unsure about burnout. I'm not sure where the line is between "can't identify high-status work to do" and burnout. I expect that the two are highly correlated. My guess is that they don't literally think of it as "I'm low status now", instead I'd expect them to feel emotions like resentment / anger / depression. But I'd also expect that if we could change the status lever, other negative feelings would go away. (I think that status is a big deal for people! Like, status means you have a good career, get to be around people you like, etc)

> I suspect you somewhat underrate how rare it is outside of EA to be highly committed to ~any non-self-serving principles seriously enough to sacrifice significant income and change careers.

I suspect we might have different ideologies in mind to compare to, and correspondingly, that we're not disagreeing much.

I think that a lot of recently-popular movements like BLM or even MAGA didn't change the average lifestyle of the median participant much at all, though much of this is because they are far larger.

But religious groups are far more intense, for example. Or maybe take dedicated professional specialties like ballet or elite music, which can require intense sacrifices.

4

Benevolent_Rain

A related issue I have actually encountered is something like "but you seem overqualified for this role we are hiring for". Even if previously successful people wanted to take a "less prestigious" role, they might encounter real problems in doing so. I hope the EA eco system might have some immunity to this though - as hopefully the mission alignment will be strong enough evidence of why such a person might show interest in a "lower" role.

6

Joseph

As a single data point: seconded. I've explicitly been asked by interviewers (in a job interview) why I left a "higher title job" for a "lower title job," with the implication that it needed some special justification. I suspect there have also been multiple times in which someone looking at my resume saw that transition, made an assumption about it, and chose to reject me. (Although this probably happens with non-EA jobs more often than EA jobs, as the "lower title role" was with a well-known EA organization.)

2

Ozzie Gooen

Good point. And sorry you had to go through that, it sounds quite frustrating.

3

ASuchy

Thanks for writing this, this is also something I have been thinking about and you've expressed it more eloquently.

One thing I have thought might be useful is at times showing restraint with job titling. I've observed cases where people have had a title, for example Director, in a small or growing org, when in a larger org the same role might be a coordinator, lead, or admin.

I've thought at times this doesn't necessarily set people up for long term career success as the logical career step in terms of skills and growth, or a career shift, often is associated with a lower sounding title. Which I think decreases motivation to take on these roles.

At the same time I have seen people, including myself, take a decrease in salary and title, in order to shift careers and move forward.

1

SiobhanBall

I agree with you. I think in EA this is especially the case because much of the community-building work is focused on universities/students, and because of the titling issue someone else mentioned. I don't think someone fresh out of uni should be head of anything, wah. But the EA movement is young and was started by young people, so it'll take a while for career-long progression funnels to develop organically.

I'm nervous that the EA Forum might be having a small role for x-risk and some high-level prioritization work.

* Very little biorisk content here, perhaps because of info-hazards.

* Little technical AI safety work here, in part because that's more for LessWrong / Alignment Forum.

* Little AI governance work here, for whatever reason.

* Not too much innovative, big-picture longtermist prioritization projects happening at the moment, from what I understand.

* The cause of "EA community building" seems to be fairly stable, not much bold/controversial experimentation, from what I can tell.

* Fairly few updates / discussion from grantmakers. OP is really the dominant one, and doesn't publish too much, particularly about their grantmaking strategies and findings.

It's been feeling pretty quiet here recently, for my interests. I think some important threads are now happening in private slack / in-person conversations or just not happening.

I don't comment or post much on the EA forum because the quality of discourse on the EA Forum typically seems mediocre at best. This is especially true for x-risk.

The whole manifund debacle has left me quite demotivated. It really sucks that people are more interested in debating contentious community drama than seemingly anything else this forum has to offer.

I think there are signal vs noise tradeoffs, so I'm naively tempted to retreat toward more exclusivity.

This poses costs of its own, so maybe I'd be in favor of differentiation (some more and some less exclusive version).

Low confidence in this being good overall.

4

Vasco Grilo🔸

Hi Ryan,

Could you share a few examples of what you consider good quality EA Forum posts? Do you think the content linked on the EA Forum Digest also "typically seems mediocre at best"?

Very little biorisk content here, perhaps because of info-hazards.

When I write biorisk-related things publicly I'm usually pretty unsure of whether the Forum is a good place for them. Not because of info-hazards, since that would gate things at an earlier stage, but because they feel like they're of interest to too small a fraction of people. For example, I could plausibly have posted Quick Thoughts on Our First Sampling Run or some of my other posts from https://data.securebio.org/jefftk-notebook/ here, but that felt a bit noisy?

It also doesn't help that detailed technical content gets much less attention than meta or community content. For example, three days ago I wrote a comment on @Conrad K.'s thoughtful Three Reasons Early Detection Interventions Are Not Obviously Cost-Effective, and while I feel like it's a solid contribution only four people have voted on it. On the other hand, if you look over my recent post history at my comments on Manifest, far less objectively important comments have ~10x the karma. Similarly the top level post was sitting at +41 until Mike bumped it last week, which wasn't even high enough that (before I changed my personal settings to boo... (read more)

I'd be excited to have discussions of those posts here!

A lot of my more technical posts also get very little attention - I also find that pretty unmotivating. It can be quite frustrating when clearly lower-quality content on controversial stuff gets a lot more attention.

But this seems like a doom loop to me. I care much more about strong technical content, even if I don't always read it, than I do most of the community drama. I'm sure most leaders and funders feel similarly.

Extended far enough, the EA Forum will be a place only for controversial community drama. This seems nightmarish to me. I imagine most forum members would agree.

I imagine that there are things the Forum or community can do to bring more attention or highlighting to the more technical posts.

Here you go: Detecting Genetically Engineered Viruses With Metagenomic Sequencing

But this was already something I was going to put on the Forum ;)

9

Vaidehi Agarwalla 🔸

I wonder if the forum is even a good place for a lot of these discussions? Feels like they need some combination of safety / shared context, expertise, gatekeeping etc?

7

Ozzie Gooen

If it's not, there is a question of what the EA Forum's comparative advantage will be in the future, and what is a good place for these discussions.

Personally, I think this forum could be good for at least some of this, but I'm not sure.

9

Seth Ariel Green 🔸

Three use cases come to mind for the forum:

* establishing a reputation in writing as a person who can follow good argumentative norms (perhaps as a kind of extended courtship of EA jobs/orgs)

* disseminating findings that are mainly meant for other forums, e.g. research reports

* keeping track of what the community at large is thinking about/working on, which is mostly facilitated by organizations like RP & GiveWell using the forum to share their work.

I don’t think I would use the forum for hashing out anything I was really thinking hard about; I’d probably have in-person conversations or email particular persons.

7

JP Addison🔸

I don't know about you, but I just learned about one of the biggest updates to OP's grantmaking in a year on the Forum.

That said, the data does show some agreement with your and commenters' vibe of lowering quantity.

I agree that the Forum could be a good place for a lot of these discussions. Some of them aren't happening at all to my knowledge.[1] Some of those should be, and should be discussed on the Forum. Others are happening in private and that's rational, although you may be able to guess that my biased view is that a lot more should be public, and if they were, should be posted on the Forum.

Broadly: I'm quite bullish on the EA community as a vehicle for working on the world's most pressing problems, and of open online discussion as a piece of our collective progress. And I don't know of a better open place on the internet for EAs to gather.

1. ^

Part of that might be because as EA gets older the temperature (in the annealing sense) rationally lowers.

6

Ozzie Gooen

Yep - I liked the discussion in that post a lot, but the actual post seemed fairly minimal, and written primarily outside of the EA Forum (it was a link post, and the actual post was 320 words total.)

For those working on the forum, I'd suggest work on bringing in more of these threads to the forum. Maybe reach out to some of the leaders in each group and see how to change things.

I think that AI policy in particular is most ripe for better infrastructure (there's a lot of work happening, but no common public forums, from what I know), though it probably makes sense to be separate from the EA Forum (maybe like the Alignment Forum), because a lot of them don't want to be associated too much with EA, for policy reasons.

I know less about Bio governance, but would strongly assume that a whole lot of it isn't infohazardous. That's definitely a field that's active and growing.

For foundational EA work / grant discussions / community strategy, I think we might just need more content in the first place, or something.

I assume that AI alignment is well-handled by LessWrong / Alignment Forum, difficult and less important to push to happen here.

4

Nathan Young

So I did use to do more sort of back of the envelope stuff, but it didn't get much traction and people seemed to think it was unfinished (it was) so I guess I had less enthusiasm.

4

NickLaing

Yeah even on the global health front the last 3 months or so have felt especially quiet

2

Vaidehi Agarwalla 🔸

Curious if you think there was good discussion before that and could point me to any particularly good posts or conversations?

5

NickLaing

There are still a bunch of good discussions (see mostly posts with 10+ comments) in the last 6 months or so, it's just that we can sometimes even go a week or two without more than one or two ongoing serious GHD chats. Maybe I'm wrong and there hasn't actually been much (or any) meaningful change in activity this year, looking at this.

https://forum.effectivealtruism.org/?tab=global-health-and-development

3

T_W

As a random datapoint, I'm only just getting into the AI Governance space, but I've found little engagement with (some) (of[1]) (the) (resources) I've shared and have just sort of updated to think this is either not the space for it or I'm just not yet knowledgeable enough about what would be valuable to others.

1. ^

I was especially disappointed with this one, because this was a project I worked on with a team for some time, and I still think it's quite promising, but it didn't receive the proportional engagement I would have hoped for. Given I optimized some of the project for putting out this bit of research specifically, I wouldn't do the same now and would have instead focused on other parts of the project.

2

Ozzie Gooen

It seems from the comments that there's a chance that much of this is just timing - i.e. right now is unusually quiet. It is roughly mid-year, maybe people are on vacation or something, it's hard to tell.

I think that this is partially true. I'm not interested in bringing up this point to upset people, but rather to flag that maybe there could be good ways of improving this (which I think is possible!)

I want to see more discussion on how EA can better diversify and have strategically-chosen distance from OP/GV.

One reason is that it seems like multiple people at OP/GV have basically said that they want this (or at least, many of the key aspects of this).

A big challenge is that it seems very awkward for someone to talk and work on this issue, if one is employed under the OP/GV umbrella. This is a pretty clear conflict of interest. CEA is currently the main organization for "EA", but I believe CEA is majority funded by OP, with several other clear strong links. (Board members, and employees often go between these orgs).

In addition, it clearly seems like OP/GV wants a certain amount of separation for their own sake. The close link means that problems with EA often spill over to the reputation of OP/GV.

I'd love to see some other EA donors and community members step up here. I think it's kind of damning how little EA money comes from community members or sources other than OP right now. Long-term this seems pretty unhealthy.

One proposal is to have some "mini-CEA" that's non-large-donor funded. This group's main job would be to understand and act on EA interests that organi... (read more)

Yeah I agree that funding diversification is a big challenge for EA, and I agree that OP/GV also want more funders in this space. In the last MCF, which is run by CEA, the two main themes were brand and funding, which are two of CEA’s internal priorities. (Though note that in the past year we were more focused on hiring to set strong foundations for ops/systems within CEA.) Not to say that CEA has this covered though — I'd be happy to see more work in this space overall!

Personally, I worry that funding diversification is a bit downstream of improving the EA brand — it may be hard for people to be excited to support EA community building projects if they feel like others dislike it, and it may be hard to convince new people/orgs to fund EA community things if they read stuff about how EA is bad. So I’m personally more optimistic about prioritizing brand-related work (one example being highlighting EA Forum content on other platforms like Instagram, Twitter, and Substack).

> I'd love to see some other EA donors and community members step up here. I think it's kind of damning how little EA money comes from community members or sources other than OP right now. Long-term this seems pretty unhealthy.

There was some prior relevant discussion in November 2023 in this CEA fundraising thread, such as my comment here about funder diversity at CEA. Basically, I didn't think that there was much meaningful difference between a CEA that was (e.g.) 90% OP/GV funded vs. 70% OP/GV funded. So I think the only practical way for that percentage to move enough to make a real difference would be both an increase in community contributions/control and CEA going on a fairly severe diet.

As for EAIF, expected total grantmaking was ~$2.5MM for 2025. Even if a sizable fraction of that went to CEA, it would only be perhaps 1-2% of CEA's 2023 budget of $31.4MM.

I recall participating in some discussions here about identifying core infrastructure that should be prioritized for broad-based funding for democratic and/or epistemic reasons. Identifying items in the low millions for more independent funding seems more realistic than meaningful changes in CEA's funding base. Th... (read more)

Personally, I'm optimistic that this could be done in specific ways that could be better than one might initially presume. One wouldn't fund "CEA" - they could instead fund specific programs in CEA, for instance. I imagine that people at CEA might have some good ideas of specific things they could fund that OP isn't a good fit for.

One complication is that arguably we'd want to do this in a way that's "fair" to OP. Like, it doesn't seem "fair" for OP to pay for all the stuff that both OP+EA agrees on, and EA only to fund the stuff that EA likes. But this really depends on what OP is comfortable with.

Lastly, I'd flag that CEA being 90% OP/GV funded really can be quite different than 70% in some important ways, still. For example, if OP/GV were to leave - then CEA might be able to go to 30% of its size - a big loss, but much better than 10% of its size.

> Personally, I'm optimistic that this could be done in specific ways that could be better than one might initially presume. One wouldn't fund "CEA" - they could instead fund specific programs in CEA, for instance. I imagine that people at CEA might have some good ideas of specific things they could fund that OP isn't a good fit for.

That may be viable, although I think it would be better for both sides if these programs were not in CEA but instead in an independent organization. For the small-donor side, it limits the risk that their monies will just funge against OP/GV's, or that OP/GV will influence how the community-funded program is run (e.g., through its influence on CEA management officials). On the OP/GV side, organizational separation is probably necessary to provide some of the reputational distance it may be looking for. That being said, given that small/medium donors have never to my knowledge been given this kind of opportunity, and the significant coordination obstacles involved, I would not characterize them not having taken it as indicative of much in particular.

~

More broadly, I think this is a challenging conversation without nailing down the objective better --... (read more)

I think you bring up a bunch of good points. I'd hope that any concrete steps on this would take these sorts of considerations into account.

> The concerns implied by that statement aren't really fixable by the community funding discrete programs, or even by shelving discrete programs altogether. Not being the flagship EA organization's predominant donor may not be sufficient for getting reputational distance from that sort of thing, but it's probably a necessary condition.

I wasn't claiming that this funding change would fix all of OP/GV's concerns. I assume that would take a great deal of work, among many different projects/initiatives.

One thing I care about is that someone is paid to start thinking about this critically and extensively, and I imagine they'd be more effective if not under the OP umbrella. So one of the early steps to take is just trying to find a system that could help figure out future steps.

> I speculate that other concerns may be about the way certain core programs are run -- e.g., I would not be too surprised to hear that OP/GV would rather not have particular controversial content allowed on the Forum, or have advocates for certain political positions admitted to EAGs, or whatever.

I think this raises an important and somewhat awkward point that levels of separation between EA and OP/GV would make it harder for OP/GV to have as much control over these areas, and there are times where they wouldn't be as happy with the results.

Of course:

1. If this is the case, it implies that the EA community does want some concretely different things, so from the standpoint of the EA community, this would make funding more appealing.

2. I think in the big picture, it seems like OP/GV doesn't want to be held responsible for the EA community. Ultimately there's a conflict here - on one hand, they don't want to be seen as responsible for the EA community - on the other hand, they might prefer situations where they can have a very large amount of control.

> I want to see more discussion on how EA can better diversify and have strategically-chosen distance from OP/GV.

> One reason is that it seems like multiple people at OP/GV have basically said that they want this (or at least, many of the key aspects of this).

I agree with the approach's direction, but this premise doesn't seem very helpful in shaping the debate. It doesn't seem that there is a right level of funding for meta EA or that this is what we currently have.

My perception is that OP has specific goals, one of which is to reduce GCRs. As there are not so many high-absorbency funding opportunities and a lot of uncertainty in the field, they focus more on capacity building, where EA has proven to be a solid investment for building a talent pipeline.

If this is true, then the level of funding we are currently seeing is downstream from OP's overall yearly spending and their goals. Other funders will come to very different conclusions as to why they would want to fund EA meta and to what extent.

If you're a meta funder who agrees with the concern about GCRs, you might see opportunities that either don't want OP's money, that OP doesn't want to fund, or that want to keep OP's funding... (read more)

I think you raise some good points on why diversification as I discuss it is difficult and why it hasn't been done more.

Quickly:

> I agree with the approach's direction, but this premise doesn't seem very helpful in shaping the debate.

Sorry, I don't understand this. What is "the debate" that you are referring to?

> At the last MCF, funding diversification and the EA brand were the two main topics

This is good to know. While mentioning MCF, I would bring up that it seems bad to me that MCF seems to be very much within the OP umbrella, as I understand it. I believe that it was funded by OP or CEA, and the people who set it up were employed by CEA, which was primarily funded by OP. Most of the attendees seem like people at OP or CEA, or else heavily funded by OP.

I have a lot of respect for many of these people and am not claiming anything nefarious. But I do think that this acts as a good example of the sort of thing that seems important for the EA community, and also that OP has an incredibly large amount of control over. It seems like an obvious potential conflict of interest.

4

Patrick Gruban 🔸

I just meant the discussion you wanted to see; I probably used the wrong synonym.

I generally believe that EA is effective at being pragmatic, and in that regard, I think it's important for the key organizations that are both giving and receiving funding in this area to coordinate, especially with topics like funding diversification. I agree that this is not the ideal world, but this goes back to the main topic.

4

Ozzie Gooen

For reference, I agree it's important for these people to be meeting with each other. I wasn't disagreeing with that.

However, I would hope that over time, there would be more people brought in who aren't in the immediate OP umbrella, to key discussions of the future of EA. At least have like 10% of the audience be strongly/mostly independent or something.

6

zchuang

I mean, Dustin Moskovitz used to come on the forum and beg people to earn to give, yet I don't think the number of donors has grown that much. More people should go to Jane Street and do Y Combinator, but it feels as though that's taboo to say for some reason.

6

sapphire

I think it's better to start something new. Reform is hard, but no one is going to stop you from making a new charity. The EA brand isn't in the best shape. Imo the "new thing" can take money from individual EAs but shouldn't accept anything connected to OpenPhil/CEA/Dustin/etc.

If you start new you can start with a better culture.

6

huw

AIM seems to be doing this quite well in the GHW/AW spaces, but lacks the literal openness of the EA community-as-idea (for better or worse)

5

Matrice Jacobine🔸🏳️⚧️

I have said this in other spaces since the FTX collapse: The original idea of EA, as I see it, was that it was supposed to make the kind of research work done at philanthropic foundations open and usable for well-to-do-but-not-Bill-Gates-rich Westerners. While it's inadvisable to outright condemn billionaires using EA work to orient their donations for... obvious reasons, I do think there is a moral hazard in billionaires funding meta EA. Now, the most extreme policy would be to have meta EA be solely funded by membership dues (as plenty of organizations are!). I'm not sure if that would really be workable for the amounts of money involved, but some kind of donation cap could plausibly be envisaged.

6

Ozzie Gooen

This part doesn't resonate with me. I worked at 80k early on (~2014) and have been in the community for a long time. Then, I think the main thing was excitement over "doing good the most effectively". The assumption was that most philanthropic foundations weren't doing a good job - not that we wanted regular people to participate, specifically. I think then, most community members would be pretty excited about the idea of the key EA ideas growing as quickly as possible, and billionaires would help with that.

GiveWell specifically was started with a focus on smaller donors, but there was always a separation between them and EA.

(I am of course more sympathetic to a general skepticism around any billionaire or other overwhelming donor. Though I'm personally also skeptical of most other donation options to other degrees - I want some pragmatic options that can understand the various strengths and weaknesses of different donors and respond accordingly)

3

Matrice Jacobine🔸🏳️⚧️

... I'm confused by what you would mean by early EA then? As the history of the movement is generally told, it started with the merger of three strands: GiveWell (which attempted to make charity effectiveness research available for well-to-do-but-not-Bill-Gates-rich Westerners), GWWC (which attempted to convince well-to-do-but-not-Bill-Gates-rich Westerners to give to charity too), and the rationalists and proto-longtermists (not relevant here).