The EA Forum team is experimenting with design options for reacting to posts or comments, and we’d like your feedback over the next week. This post presents some reasoning and a few design directions we are considering.

Motivation and goals

We’ve been working on a feature to allow users to react to content in more granular ways than upvoting without needing to write a full comment. This project is similar to what our friends at LessWrong are working on, with some differences in motivation and design constraints that we’ll lay out below.

Motivation

We’ve heard from a number of users that after putting many hours of work into Forum posts, sometimes the only (positive) feedback they get is in the form of karma. Karma can sometimes feel opaque and sterile rather than motivating. While some users do write short positive comments, we’ve heard that it can feel like the bar for commenting is high, requiring a nuanced take or useful feedback. We think reactions can provide a middle ground between an upvote and a comment.

Why restrict reactions to positive feedback?

In our first iteration of this feature, we tested several reactions with neutral or negative emotional connotations, like 🤔 (confused). We found that authors felt motivated to follow up with users who reacted this way to clarify the confusion or understand the feedback, but negative reaction emojis didn’t carry enough information to be actionable in the way that a full comment is. Therefore, we’ve decided to limit ourselves to positive reactions. One counterargument we’ve heard is that focusing only on positive reactions could make it harder to give constructive and critical feedback. We suspect this wouldn’t deter most readers who have good points to make, but it is something we want to hear from you about and track over time.

Keep it simple

As we approach this feature, we want to keep the user experience simple and intuitive.

If we add another form of voting / reacting, it must be very easy to understand how it relates to karma and agree/disagree voting. Our first iteration of the feature added reactions at the comment level, and most users we interviewed found it confusing to deal with karma, agree/disagree, and reactions on the same piece of content. Based on that feedback, we’re quite confident we need to prioritize simplicity. For instance, we’re unlikely to add a third dimension of voting like LessWrong are considering without some way of maintaining simplicity, and we’re unlikely to add a very large set of possible reactions.

Design questions we have

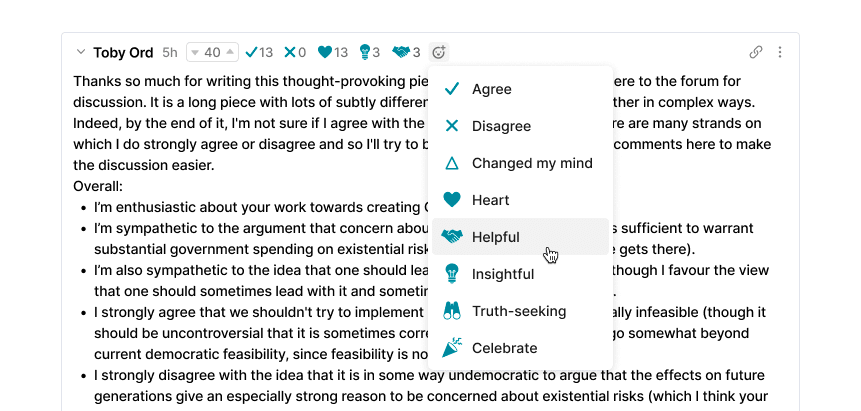

1. Reactions on posts, comments, or both?

Importantly, if we add reactions to comments, we would likely change agree/disagree voting significantly (removing strong votes and possibly anonymity) to maintain simplicity. We’re curious how users feel about this tradeoff.

We hypothesize that the biggest gap in terms of content-interaction and feedback is at the post level, since comments are generally shorter and more specific, and already have agree/disagree voting. On the other hand, we recognize that a lot of interesting back-and-forth discussion happens in comments, where reactions can also be useful.

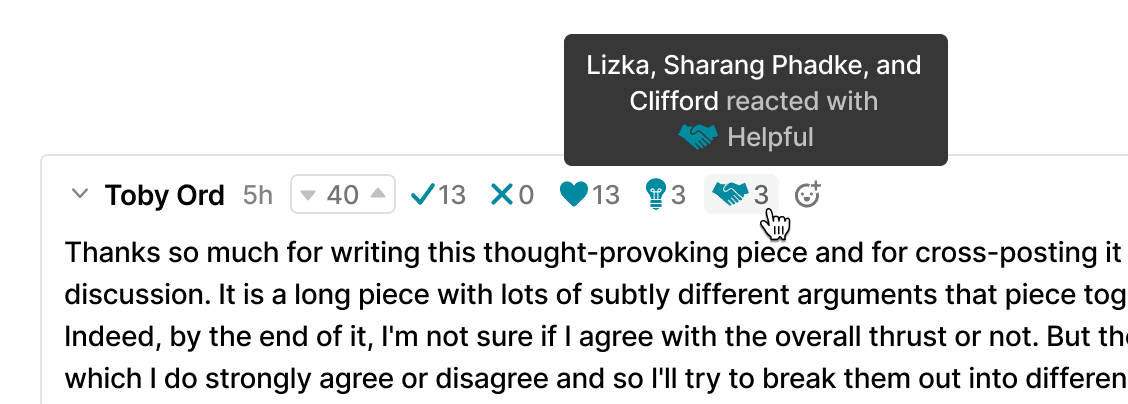

2. Anonymous or non-anonymous?

We’ve heard that seeing positive feedback from identified individuals can feel more meaningful. On the other hand, we’ve heard that non-anonymous reactions may deter some users from using the feature, particularly if we combine agree/disagree voting into reactions (which we would only do for comments, since posts don’t have agree/disagree voting).

3. What set of reactions?

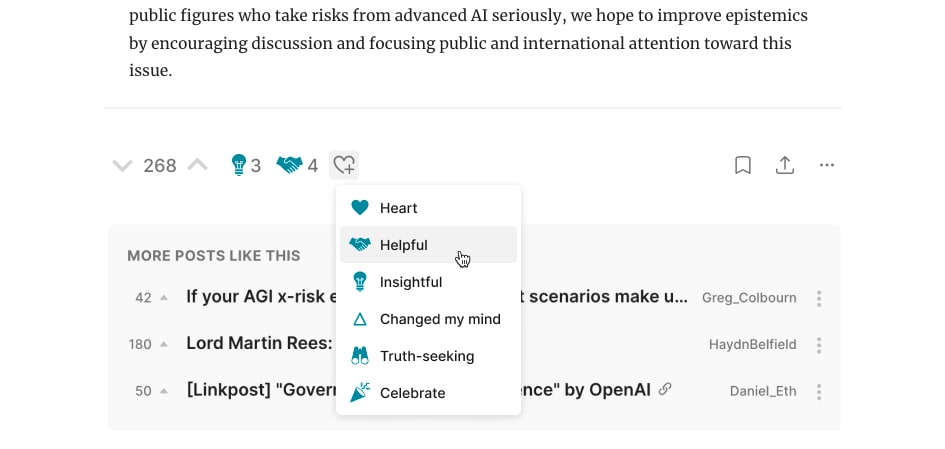

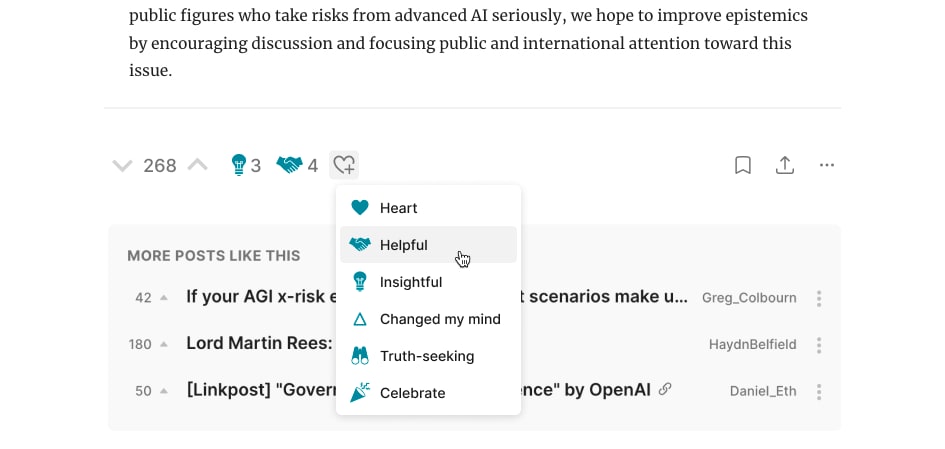

As mentioned above, we plan to introduce a fairly restricted set of reactions, but we’re interested in what reactions are most compelling for users and communicate the most important concepts. Do you think we’re missing any key reactions? Are any of these confusing?

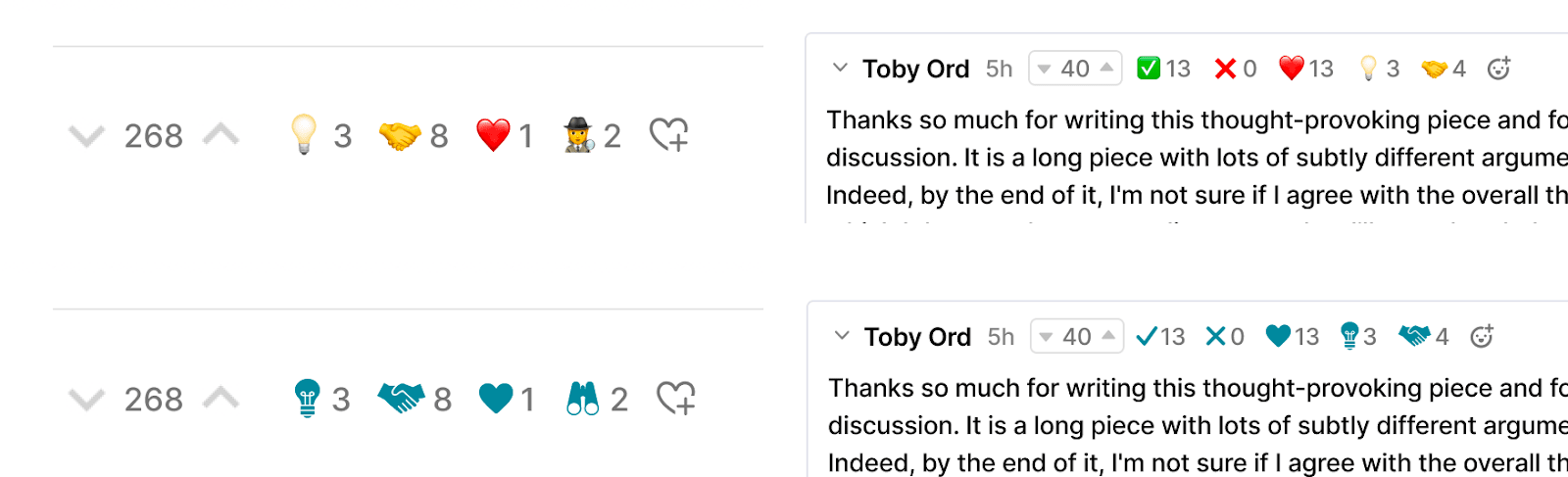

4. (minor) Forum-styled or common emojis?

Forum-styled icons would fit better with the aesthetic of the site, but common emojis are more recognizable and add a bit more energy and color to reactions.

We’re really looking forward to feedback and arguments from the community, so please share your thoughts!

I'd consider adding a single negative reaction to posts: a generic disagree. I'd rather people select that than downvote karma to express disagreement, which I think is what too often happens.

I think retaining the ability to anonymously express agreement or disagreement in some fashion is critical -- and would prefer that mechanism not be karma-upvote/downvote. My recollection is that some people have expressed concerns about what they say on the Forum in good faith having an adverse professional impact on them. Whether or not those concerns are well-founded in fact, disallowing the option for anonymity may render reported levels of support for a post or comment less accurate.

Thanks, I think this is a good point. We'll try out what a clear negative react at the post level could be to see if we can maintain this option for readers. FWIW, one reason we don't have agree/disagree at the post level is that a post often contains a bunch of different points, which makes it hard to agree/disagree on the whole.

Even if it's true that it can be hard to agree or disagree with a post as a whole, I do get the impression that people sometimes feel like they disagree with posts as a whole, and so simply downvote the post.

Also, I suspect it is possible to disagree with a post as a whole. Many posts are structured like "argument 1, argument 2, argument 3, therefore conclusion". If you disagree with the conclusion, I think it's reasonable to say that that's disagreeing with the post as a whole. If you agree with the arguments and the conclusion, then you agree with the post as a whole. Otherwise, I agree it's more ambiguous, but people then have the option of not agree/disagree-voting.

What's the downside of allowing this post-level agree/disagree voting that the team is worried about? (I'm not saying there isn't one, just that I can't immediately see it.)

I'd personally much rather have agree/disagree on posts than these reactions, if we had to pick. I'm not sure if these reactions add any useful information. But I'm happy to see you're trying to make it simpler than at LessWrong. Curious to hear what the other reasons are for no agree/disagree on posts. I frequently upvote posts I disagree with and would like to express that disagreement without writing a comment. Maybe agree/disagrees can be optionally set by the authors of the post?

I've only recently started posting on EA Forum, so my 2 cents may be useful as feedback from a newbie, but also with the caveat that it's from a newbie!

1. Oftentimes I just want to say "thank you for making the effort, for taking the time, for writing this post and/or for doing the research behind it". This can be separate from judgment about quality or agreement, it's just saying "I see you put a lot of effort into this, and I appreciate that." Even just to say "I read this". My fear when writing a new post is not that people will disagree with it (which sparks useful discussion), but rather that nobody will read it at all, and my time will have been wasted. So I think any kind of feedback that says "thanks for writing this" or "I read this" or just tracks the number of reads, can be important, especially for people who are new to the forum and don't have a ready audience.

2. Related to the above, I'd appreciate clarity on how people feel about short, positive feedback, like "great post" or "fully agree". It's interesting that you say people would like more of this, because my natural reaction has always been to do that on every forum I've ever been on when I read a good post, usually with a few words explaining what I particularly liked. But here I don't feel comfortable doing that, literally. It feels out of place, as if people don't like that. Almost all the comments I see are about disagreements. (This is a very subjective, non-data-based impression!)

3. Feedback is a wonderful gift, and EA Forum readers are a great resource. If someone spends X minutes reading my post or comment, I would LOVE to know what they think of it. Especially the disagreements or constructive feedback, so I can get better. I've got a few negative karmas where there was no comment to indicate what exactly the disagreement or feedback was. I didn't really know what to do with them. Someone took some of their valuable time to read my post, and thought about it - and I appreciate that. Then they gave some feedback on my post, which is great. Except that the feedback doesn't really tell me what they think I should change! Feedback in the form of words will help me write better next time. I totally know my posts need work, just point me in the right direction :). If people feel bad about writing constructive (negative?) comments publicly, is there an option for "visible only to author" comments or even "anonymous and visible only to author" comments?

It's great that you're looking into this, it can make the Forum even better!

Hey Denis,

thank you for sharing your perspective. I see that you appreciate positive feedback in a medium like this forum, and that you are following your preferences – being the change you want to see in the world, and spreading positive feedback.

I really value this behaviour and am glad that you are part of the community.

As for your question, wouldn't a personal message meet the need for "visible only to the author"?

Thanks Felix,

Yes, I suppose a personal message is a good solution for "visible only to the author" :D

This is a great forum, I'm really enjoying and appreciating all the posts and the debates and the tone of polite but firm disagreements!

Cheers

Denis

my two cents:

Thanks for your thoughts. Our hypothesis based on interviews is that authors will appreciate the more nuanced positive feedback from reactions beyond "changed my mind". That said, I think the easiest way to get to an answer is trying a set of reactions, seeing how readers and authors find them after a few weeks, and being open to revising.

At present we have like/dislike and agree/disagree, but nothing for "this contributes to the type of discourse we want to see on the EA Forum". I don't think this is a major issue, but I'd love to see something like "this contributes to making the forum a better place" or "this detracts from making the forum a better place". I occasionally see a comment that isn't making a claim (and thus can't be disagreed with), but which I dislike because it is tangential to the topic being discussed or otherwise detracts from the level of discourse.

It might be that karma was originally intended to serve this purpose.

this is how I generally try to up/downvote, but I agree that it isn't at all clear. I now see that the hint is about how much you "like" this:

This seems like it could be improved.

Very much agree. I use upvotes/downvotes to indicate what I would like to see more/less of on the forum. Making that clearer in the pop-up would be great!

I do think karma is a bit of a catch-all, and mostly I think that's OK. We need a way to integrate a diversity of positive / negative sentiment into a metric, even if people have different reasons for their votes.

In terms of the tool tip, the other sites lack any explanation for their "upvote" or "like" buttons, and one of the reasons we have a whole explanation is to differentiate karma-votes from agree-votes, which other sites don't have.

That said, it's possible that reactions will help call out some of the behaviors we want to encourage on the Forum, like truth-seekingness and helpfulness. We're also open to other suggestions for core reactions, but as mentioned in the post we do value simplicity.

The LessWrong version includes many negatively valenced reacts, most of which seem to represent meaningful attitudes people might want to express. Are there any particular features of the EA Forum that differ from LessWrong in a way that means we want a much more positively-biased collection here?

The main reason for this is that in our interviews with an initial set of reactions that included negative attitudes, we found that authors felt fairly strongly compelled to respond to these reactions in some way - clarifying their writing, understanding the nature of the reaction, etc. With this experience in mind, we felt that it would be preferable for negative reactions to be articulated as comments with some explanation.

Reading the comments here so far, I think I’m more open to some very clear negative reactions to bring more balance, particularly at the post level (e.g. thumbs down or disagree).

But broadly, I’m personally more worried about the downsides of negative reactions to discussion coherence than the risks of reactions being positive-biased.

A lot of these suggestions make sense, but it's very hard to anticipate in advance what will work!

So I would just adopt a 'rapid prototyping'/'agile marketing' mindset in trying these things out, and be willing to update and improve them over a few iterated cycles, without expecting that we'll get everything right the first time.

For example, I think it could be helpful to have a few different emoji-type reactions available, but I have no idea whether the optimal number of these is closer to 3, or closer to 8. I guess if you try some, it would be important to gather ongoing data about which ones are used most often, which ones provoke the most puzzlement or frustration, etc.

We could also nudge some of our EA Forum norms a little bit, reminding people it's OK to leave quite short comments (whether supportive or critical), maybe especially on posts that we like but that are getting a bit neglected by others, in ways that might be disappointing to the authors.

Fair points, we'll definitely be iterating on this as we learn what works, and I should have been clear that this isn't the one and only decision point!

Forum style most definitely

Maybe make it configurable? I'd prefer the regular, colorful, style (because it's easier to understand at a glance)

Questions and comments in no particular order: